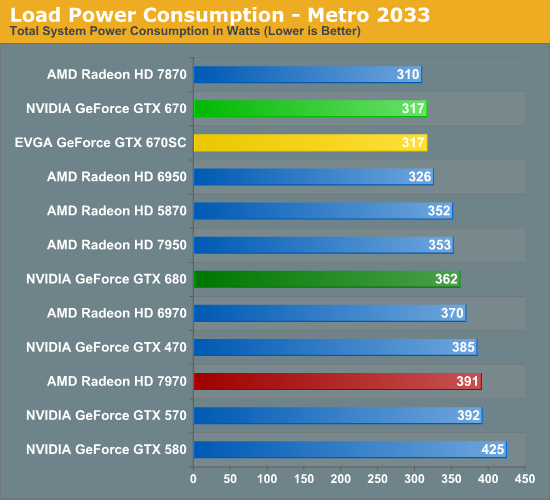

You all completely skipped over the point I was trying to make. If X gpu is more efficient when isolated, that's fine and dandy and neat to point out, but if X gpu causes the rest of the system to work harder and draw more power than the less efficient Y gpu then in my opinion it's just as important to have that information and to measure the total power draw of the system. Total system power is what shows up on the electric bill, so in my opinion that trumps anything when it comes to measuring power and efficiency.

tviceman, I did not ignore that total system power consumption should be considered. What I said is that you cannot use total system power consumption to derive GPU power consumption accurately without accounting for the PSU inefficiency. This is because the PSU inefficiency applies to all components. If you aggregate this number mathematically, you assign some of the PSU inefficiency to the GPU power consumption. This is mathematically incorrect.

Example:

1A) 200W GPU + 150W system @ load @ 80% PSU efficiency = 350W actual or 438W at the wall

2A) 200W GPU + 150W system @ load @ 92% PSU efficiency = 350W actual or 380W at the wall

vs.

1B) 150W GPU + 150W system @ load @ 80% PSU efficiency = 300W actual or 375W at the wall.

The GPU power consumption difference is 50W but this shows up as 63W due to PSU inefficiency.

2B) 150W GPU + 150W system @ load @ 92% PSU efficiency = 300W actual or 326W at the wall

The GPU power consumption difference is 50W but this shows up as 54W.

You cannot measure GPU power consumption unless you take PSU efficiency into account.
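To make the arithmetic above easy to re-check, here is a minimal Python sketch. It assumes PSU efficiency is one fixed number regardless of load, which is a simplification since real PSU efficiency varies with load. The function names `wall_power` and `wall_delta` are made up for illustration.

```python
def wall_power(dc_load_w: float, psu_efficiency: float) -> float:
    """Power drawn at the wall for a given component (DC) load.

    Assumes a single fixed efficiency figure (e.g. 0.80 or 0.92).
    """
    if not 0 < psu_efficiency <= 1:
        raise ValueError("psu_efficiency must be in (0, 1]")
    return dc_load_w / psu_efficiency


def wall_delta(gpu_a_w: float, gpu_b_w: float, rest_w: float,
               psu_efficiency: float) -> float:
    """Difference at the wall between two GPUs in the same system."""
    return (wall_power(gpu_a_w + rest_w, psu_efficiency)
            - wall_power(gpu_b_w + rest_w, psu_efficiency))


if __name__ == "__main__":
    # Same numbers as the example: 200W vs 150W GPU, 150W rest of system.
    for eff in (0.80, 0.92):
        a = wall_power(200 + 150, eff)
        b = wall_power(150 + 150, eff)
        print(f"{eff:.0%} PSU: {a:.1f}W vs {b:.1f}W at the wall, "
              f"delta {wall_delta(200, 150, 150, eff):.1f}W")
```

The real 50W GPU difference reads as 62.5W at the wall with the 80% PSU and as roughly 54.3W with the 92% PSU, so the same pair of cards appears to differ by different amounts depending on the power supply.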

Also, under real-world conditions a typical modern system draws 300W of power in gaming without a display.

Add to this your LCD/LED/Plasma, for many of us an overclocked CPU, maybe a lamp in your room, and the extra 30-50W of power is mostly irrelevant in most of North America. In the context of a modern desktop PC rig in your room/office, the total power consumption of a desktop PC + monitor is likely to be 350-400W already.

In North America the cost savings argument is difficult to justify since even if you game 10 hours a day straight, 50W of extra power @ 10hr x 365 days @ $0.20 per kWh = about $36 per annum.
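As a quick sanity check on that figure, here is a small Python sketch of the yearly cost calculation. The function name `annual_cost` is made up for illustration, and the $0.20 per kWh rate is simply the one used above, not a measured tariff.

```python
def annual_cost(extra_watts: float, hours_per_day: float,
                price_per_kwh: float, days: int = 365) -> float:
    """Yearly cost of drawing `extra_watts` for `hours_per_day` hours a day."""
    kwh_per_year = extra_watts / 1000 * hours_per_day * days
    return kwh_per_year * price_per_kwh


if __name__ == "__main__":
    # 50W extra, 10 hours of gaming a day, $0.20 per kWh.
    print(f"10 h/day: ${annual_cost(50, 10, 0.20):.2f} per year")
    # Upper bound: the same 50W drawn around the clock.
    print(f"24 h/day: ${annual_cost(50, 24, 0.20):.2f} per year")
```

At 10 hours a day this works out to $36.50 a year, which is where the roughly $36 figure comes from. Even the unrealistic 24 hours a day case comes to $87.60.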

How many gamers here game 10 hours a day for 365 days?

Further, the cost savings argument against the AMD HD7900 series is undermined completely by the fact that HD7900 cards can make $ bitcoin mining. If costs were in fact your primary consideration in the overall system build, it would be difficult if not impossible to ignore the bitcoin mining capability of AMD cards. Thus, it is not a reasonable position to take: if saving $ were the most important factor, then buying AMD HD7950/7970 cards would be the only logical outcome. If bitcoin mining is not a consideration, then cost savings cannot be the most important factor by default imo.

It is also difficult to prove that if a modern system is drawing 350W of power with the monitor already that another 50W of power will exponentially heat up your room/office.

If we take the position that the extra power consumption is extremely important, one has to question why such a person does not just buy a PlayStation 3, as the entire device in its Slim SKU now draws just 108W of power at full load. If 50W of extra power consumption is so vital for your gaming PC, then why would you not purchase a PS3 and save 250W of power?

Furthermore, the cost argument is further invalidated by the fact that a 1025-1050MHz HD7950 draws 167-170W of power and yet delivers near GTX680 level of performance. The immediate cost savings in such a purchase is $170, as the 7950 retails for $320-330. Even against an after-market 670, this is a savings of $70-80. You cannot mathematically save $ on a GTX680's power consumption vs. an overclocked 7950, since you can simply stop overclocking the minute you reach the GTX680's level of performance, which occurs very near the GTX680's power consumption level. The end result is still a savings of $170.

The extra power consumption of an overclocked 7950 vs. an overclocked 660Ti also buys superior performance. Thus, the extra power is not wasted, as it results in a measurable performance advantage. Since I presume you have chosen to game on a PC for its superior performance and image quality despite the PS3 consuming 1/3 of the power of a gaming PC, why would you not assign any value to an overclocked 7950's 20% performance advantage over an overclocked 660Ti? Of course, it's an individual's choice if performance/watt is more important than absolute performance. However, this creates a circular argument because the GTX660Ti itself is not the leader in performance/watt, which means if performance/watt is the most important metric, then the 660Ti does not fit this metric either.

If we then assume that the extra power consumption of the 7950 and its 20% performance increase in overclocked state against an overclocked 660Ti is not worth it because performance/watt is a key metric, we still arrive at the conclusion that the HD7870 is superior to the GTX660Ti. Since the 660Ti offers much worse performance/$ and inferior performance/watt, we arrive at the conclusion that the 660Ti needs a price drop against the 7870 SKU.

So what has been stated earlier has been proven true:

1) If performance/watt is the most important metric, 660Ti needs a price drop against the 7870;

2) If performance/$ is the most important metric, 660Ti needs a price drop against the 7870;

3) If #1 and #2 are the most important metrics, 660Ti needs a price drop against the 7870;

4) If top OCed performance is the most important metric, 660Ti needs a price drop against the 7950;

5) If MSAA performance is the most important metric, 660Ti needs a price drop against the 670, since historical market trends show that a mid-range SKU and a top-tier SKU should not have linear price/performance scaling.