I was under the impression that even for SFP, the 680 does not beat the 580 because of its smaller cache. My application is not gaming; I am writing custom CUDA apps. Less power is definitely nice, though.

I am using single precision, not double precision. I don't care about GTX580 double precision performance.

For single precision performance, the calculation is extremely simple:

Number of shaders x shader clock speed x operations per clock cycle

GTX580 = 512 SPs x (772 MHz GPU clock x 2 for the shader clock) x 2 ops/cycle = 1.581 TFLOPS

GTX680 = 1536 SPs x 1058 MHz shader clock x 2 ops/cycle = 3.25 TFLOPS

HD7970 = 2048 SPs x 925 MHz shader/GPU clock x 2 ops/cycle = 3.79 TFLOPS
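If you want to plug in other cards, the arithmetic above fits in a few lines of Python. This is only a rough sketch of the theoretical peak: the function name `peak_sp_tflops` and the `cards` table are my own, clocks are assumed to be in MHz, and the 2 ops per cycle assumes one fused multiply-add per shader per clock.

```python
def peak_sp_tflops(shaders, shader_clock_mhz, ops_per_cycle=2):
    """Theoretical single precision peak in TFLOPS.

    shaders * MHz * ops/cycle gives MFLOPS, so divide by 1e6 for TFLOPS.
    ops_per_cycle=2 assumes one multiply-add per shader per clock.
    """
    return shaders * shader_clock_mhz * ops_per_cycle / 1e6


# (shader count, shader clock in MHz), taken from the figures above.
# The GTX580 shader clock runs at 2x its 772 MHz GPU clock.
cards = {
    "GTX580": (512, 772 * 2),
    "GTX680": (1536, 1058),
    "HD7970": (2048, 925),
}

for name, (shaders, clock_mhz) in cards.items():
    print(f"{name}: {peak_sp_tflops(shaders, clock_mhz):.3f} TFLOPS")
```

Keep in mind this is a paper number only. It says nothing about cache, memory bandwidth, or how well a given kernel keeps the shaders busy.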

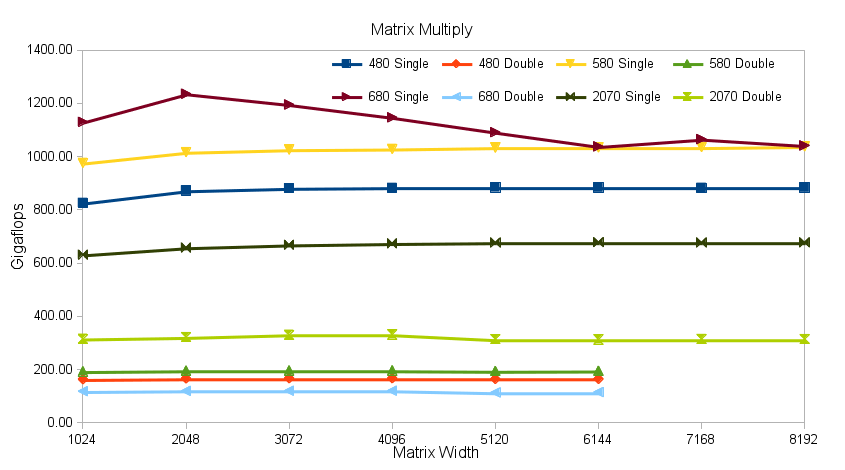

In matrix multiply, a single GTX680 is almost 2x faster than a C2070.

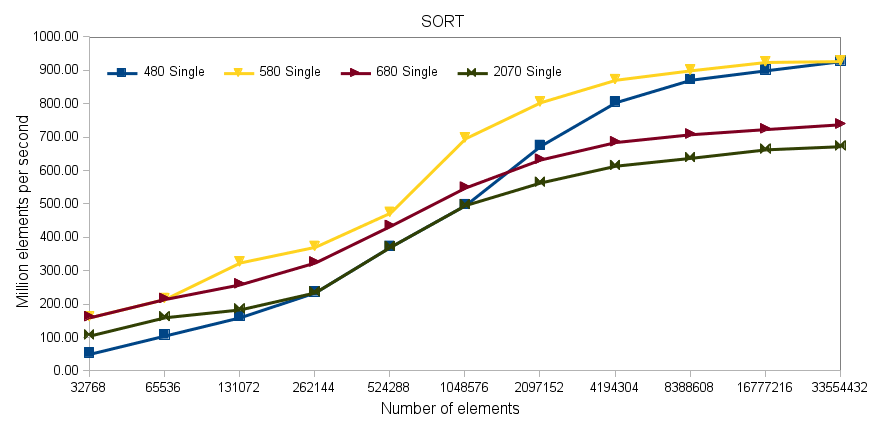

However, that doesn't mean all compute programs will scale perfectly linearly with the theoretical numbers.

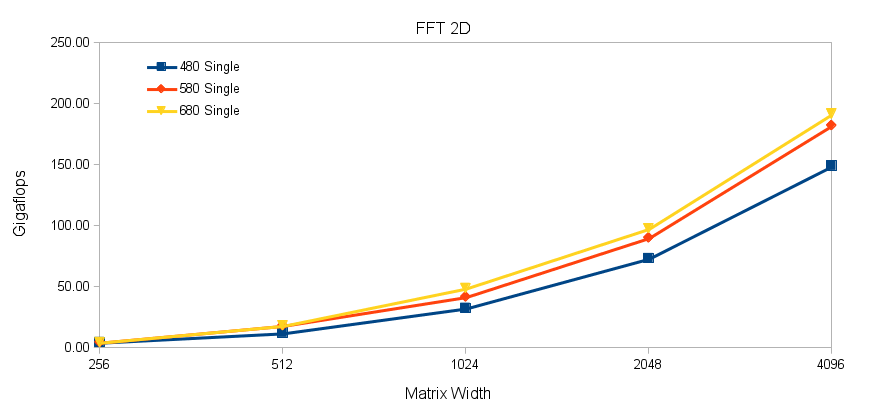

In some cases, the 580 can still come out on top of the 680:

Source

*** Looks like JS17 beat me to it. Didn't see his post prior to posting mine. ***