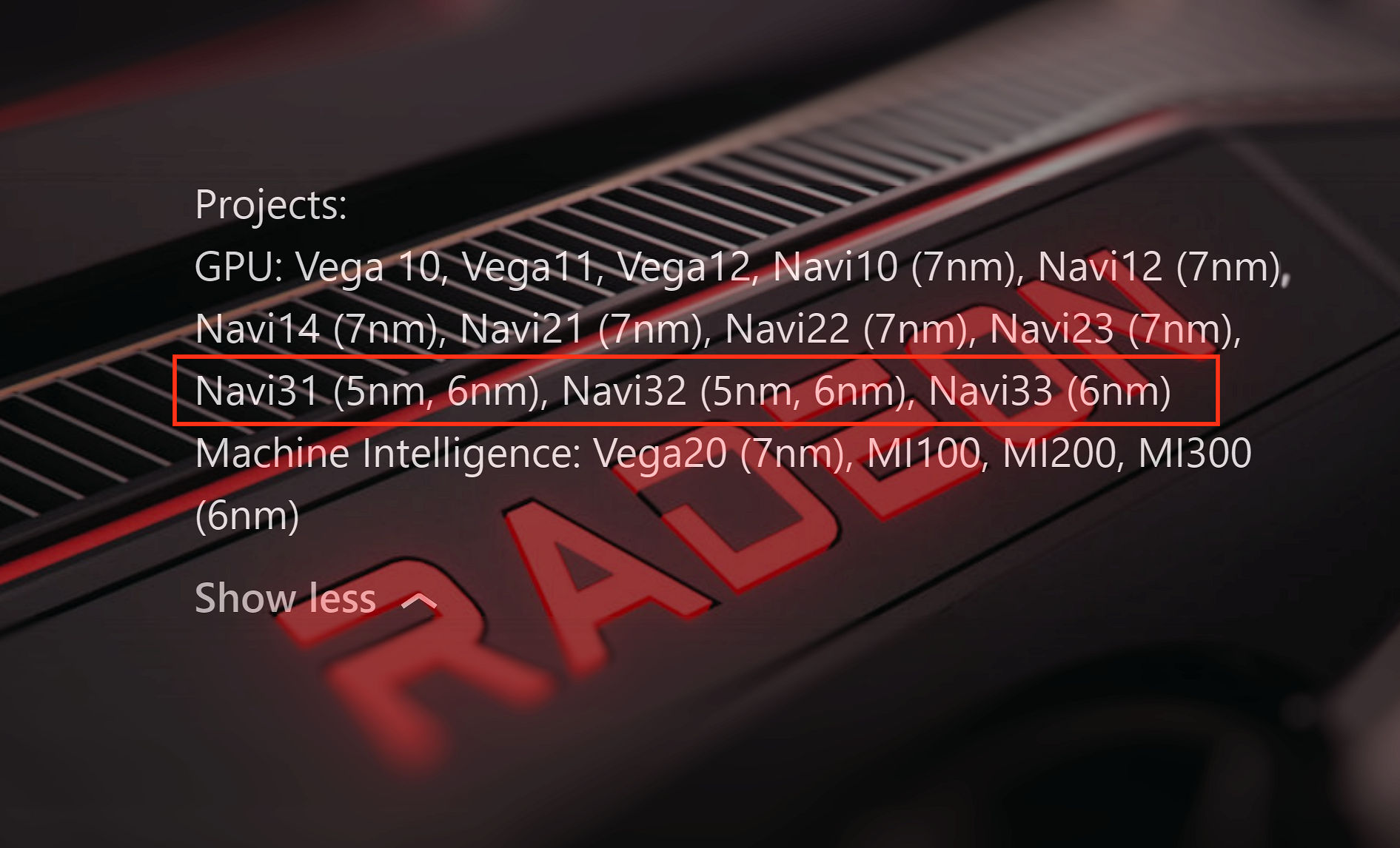

AMD Allegedly Preparing Refreshed 6 nm RDNA 2 Radeon RX 6000S GPU

AMD is allegedly preparing to announce the Radeon RX 6000S mobile graphics card based on a refreshed RDNA 2 architecture. The new card will be manufactured on TSMC's N6 process, which offers an 18% logic density improvement over the N7 process currently used for RDNA 2 products, resulting in...

- 6nm would bring 18% higher logic density (so more dies per wafer), plus some performance or efficiency gains depending on how AMD chooses to tune the dies (performance for desktop, efficiency for laptops, I suppose).
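To put a rough number on the "more dies per wafer" point, here is a back-of-the-envelope sketch. It assumes a 300 mm wafer, a made-up 200 mm² die (not any real AMD part), the common gross-dies-per-wafer approximation, and the best case where the entire die shrinks with the 18% logic density gain. Real dies contain SRAM, analog, and I/O that likely scale less, and yield is ignored, so treat the result as an upper bound rather than a prediction.

```python
import math

WAFER_DIAMETER_MM = 300.0  # assumed standard wafer size, not from the article
DENSITY_GAIN = 0.18        # the 18% logic density improvement quoted for N6 vs N7


def dies_per_wafer(die_area_mm2, wafer_diameter_mm=WAFER_DIAMETER_MM):
    """Gross dies per wafer: wafer area / die area, minus an edge-loss term."""
    wafer_area = math.pi * (wafer_diameter_mm / 2) ** 2
    edge_loss = math.pi * wafer_diameter_mm / math.sqrt(2 * die_area_mm2)
    return math.floor(wafer_area / die_area_mm2 - edge_loss)


def shrunk_area(die_area_mm2, density_gain=DENSITY_GAIN):
    """Best-case die area if the whole die scaled with logic density."""
    return die_area_mm2 / (1 + density_gain)


base_area = 200.0                    # hypothetical 7nm die area in mm^2
new_area = shrunk_area(base_area)    # same design ported to 6nm, best case

base_dies = dies_per_wafer(base_area)
new_dies = dies_per_wafer(new_area)

print(f"7nm: {base_area:.1f} mm^2 -> {base_dies} gross dies per wafer")
print(f"6nm: {new_area:.1f} mm^2 -> {new_dies} gross dies per wafer")
print(f"gain: {100 * (new_dies / base_dies - 1):.1f}%")
```

Note that an 18% density gain means the area drops to 1/1.18 of the original, roughly a 15% reduction, not 18%. The dies-per-wafer gain can come out slightly above 18% because smaller dies also waste less of the wafer edge.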

My understanding is that 6nm is a clean shrink of 7nm without the complexities and overhead of a full node shrink.

I wonder if AMD will end up running a couple of lines simultaneously: 7nm for their mainstream and bulk 6000 series cards, 6nm for their mid-range to top-end 6000S cards, and eventually 5nm for their top-end RDNA 3 parts. Then shift everything down a tier when 4nm/3nm or whatever shows up.