- May 16, 2002

- 27,397

- 16,239

- 136

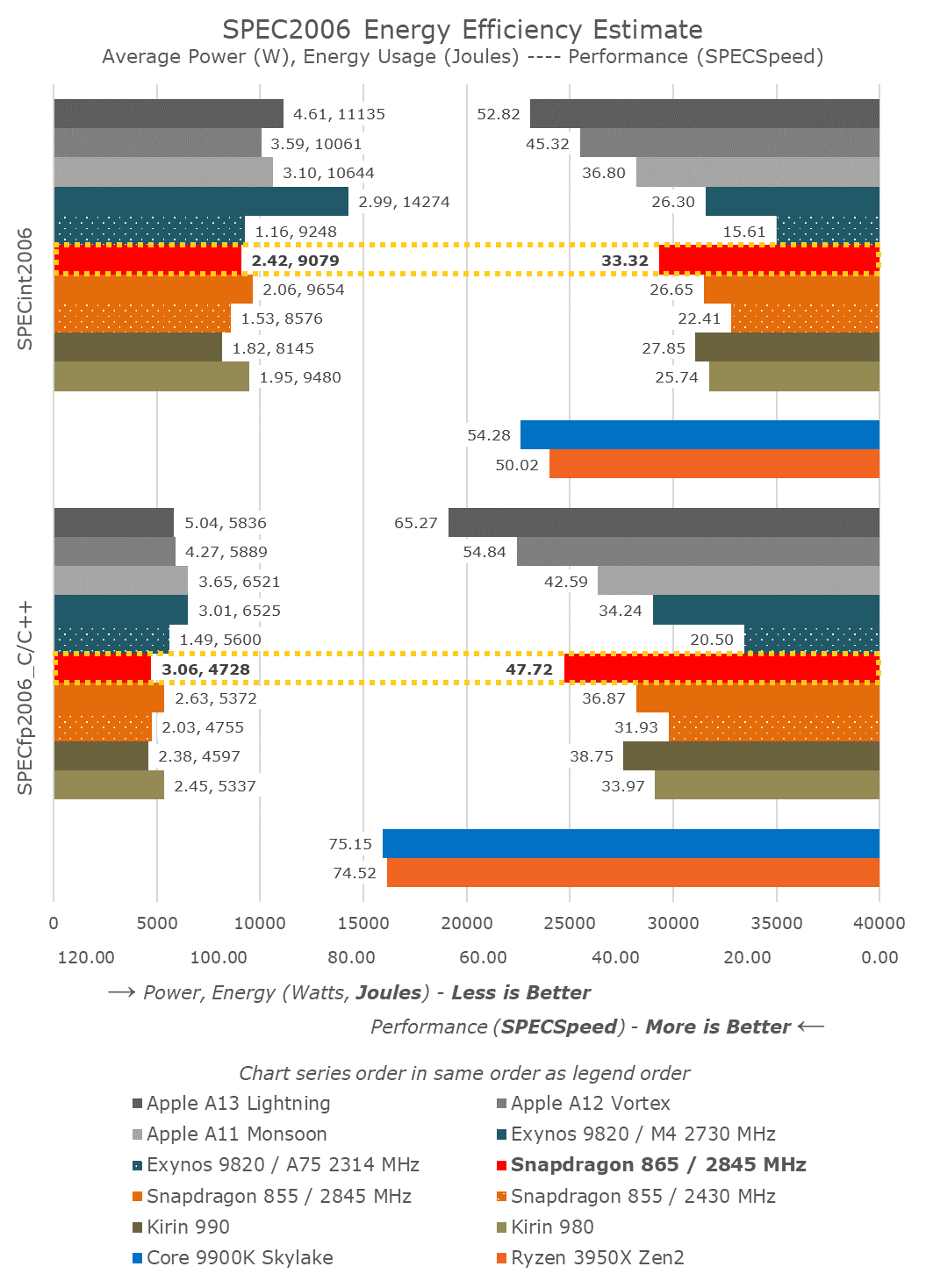

OK, now this is a very specific test, but as disparate as they are, I don't think other scenarios would be that different.

So I got an 8 GB Raspberry Pi (8 GB RAM, 32 GB HD, Cortex-A72 ARMv8, 4 cores at 1.5 GHz). It does the OpenPandemics COVID-19 WCG units, 4 at a time (one per core), in about 7.5 hours.

My EPYC 7742 does 128 of these same units at once, at 2 GHz, in 2.5 hours.

So it would take 32 Pis to do the same work, but 3 times slower. And 32 Pis at 5 watts each is 160 watts, vs. the 7742 at 250 watts (motherboard, memory and all). So the EPYC draws more power, but the Pis take 3 times longer, and for the same work that comes to about 2 times the energy usage (160 W × 7.5 h = 1200 watt-hours total vs. 250 W × 2.5 h = 625 watt-hours).
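For anyone who wants to check the arithmetic, here is a minimal Python sketch of the energy comparison. It only uses the figures quoted above (5 W per Pi, 250 W for the whole EPYC system, 7.5 and 2.5 hours per batch) and assumes the power draw is constant for the whole run, which is a simplification. The function and variable names are made up for illustration.

```python
def batch_energy_wh(power_watts: float, hours: float) -> float:
    """Energy in watt-hours for one batch, assuming constant power draw."""
    return power_watts * hours


UNITS = 128            # work units in one EPYC batch (from the post)
PI_UNITS_AT_ONCE = 4   # one unit per Pi core
PI_WATTS = 5.0         # per-board figure used in the post, not measured here
PI_HOURS = 7.5
EPYC_WATTS = 250.0     # whole system: motherboard, memory and all
EPYC_HOURS = 2.5

# Number of Pis needed to run all 128 units in a single batch.
pis_needed = UNITS // PI_UNITS_AT_ONCE

pi_energy = batch_energy_wh(pis_needed * PI_WATTS, PI_HOURS)
epyc_energy = batch_energy_wh(EPYC_WATTS, EPYC_HOURS)
ratio = pi_energy / epyc_energy

print(f"Pis needed:      {pis_needed}")
print(f"Pi cluster:      {pi_energy:.0f} Wh ({pi_energy / UNITS:.2f} Wh per unit)")
print(f"EPYC 7742:       {epyc_energy:.0f} Wh ({epyc_energy / UNITS:.2f} Wh per unit)")
print(f"Pi / EPYC ratio: {ratio:.2f}")
```

The ratio comes out at 1.92, which is where the "about 2 times" figure comes from. If the real Pi draw under load is different from 5 W, change `PI_WATTS` and the result scales linearly.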

Cost... 32 Pis at about $60 each (including power supplies and cables, probably more) would be at least $1920.

The EPYC is about $4000+480+580, or $5060. (I paid $3000 less, that's retail on eBay.)

So the EPYC effectively costs less, because it uses about half the electricity for the same work.
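The hardware side can be sketched the same way. This is only a rough illustration using the prices quoted above. The "equal throughput" line is my own extra assumption: that you would simply add Pis until the cluster finishes 128 units in the same 2.5 hours as the EPYC, with perfect scaling and no extra cost for networking or storage.

```python
import math

PI_PRICE = 60.0                        # per Pi, including PSU and cables
PIS_FOR_ONE_BATCH = 32                 # 128 units / 4 cores per Pi
EPYC_PRICE = 4000.0 + 480.0 + 580.0    # the three figures quoted in the post
PI_HOURS = 7.5
EPYC_HOURS = 2.5

# Cost of the 32-Pi cluster that does the same batch, just slower.
pi_cluster_cost = PIS_FOR_ONE_BATCH * PI_PRICE

# Assumption: perfect scaling, so matching the EPYC's speed needs
# proportionally more boards.
speed_ratio = PI_HOURS / EPYC_HOURS
pis_for_equal_throughput = math.ceil(PIS_FOR_ONE_BATCH * speed_ratio)
equal_throughput_cost = pis_for_equal_throughput * PI_PRICE

print(f"32-Pi cluster:              ${pi_cluster_cost:.0f}")
print(f"EPYC system:                ${EPYC_PRICE:.0f}")
print(f"Pis to match EPYC speed:    {pis_for_equal_throughput}")
print(f"Cost of that many Pis:      ${equal_throughput_cost:.0f}")
```

Under those assumptions the Pi cluster is cheaper only while you accept it being 3 times slower. Matching the EPYC's throughput takes 96 boards, and the energy per unit of work does not change by adding boards, so the electricity gap stays the same.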

This seems WAY different from what I am seeing when people talk about ARM and efficiency and power usage. Please tell me where I am mistaken in my math, but don't be a jerk if you find the error of my ways. The run times are real. The Pi is at 7.5 hours and hasn't actually finished a unit yet, with no other tasks running on the Pi. And I am looking at several units on the 7742; one is at 97% in 2:21.

Edit: The first unit finished in 7:50, almost 8 hours. The next 2 units are at 8 hours and still running at 96 and 98%.

And my comment on "wins by a large margin" has to do with total power usage, which is a big deal in data centers, and for me.
