blackened23

The interesting question will be:

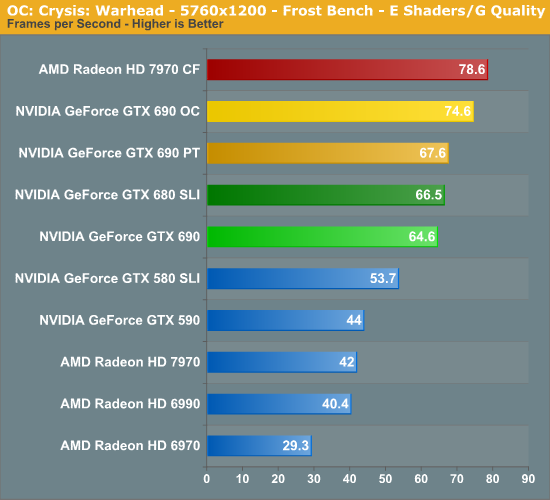

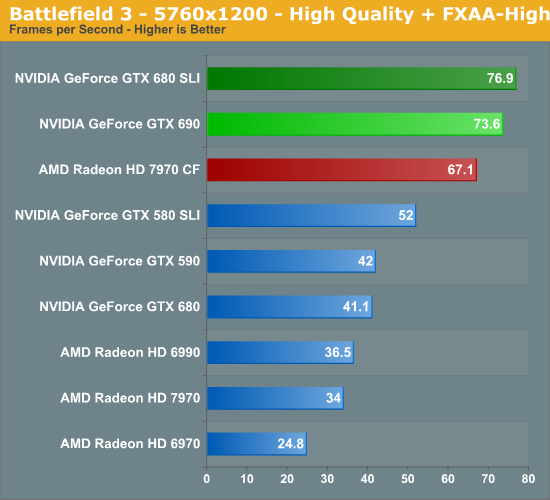

Will people be blinded by fps alone or will they take AFR woes into account? Where are these advanced frame time measurements? It's about time...

I've used both setups, 7970CF and 680 SLI, and can state there is no such microstutter on 7970CF on a single screen. I do know that Kyle commented on it at 3D surround resolutions, and I have seen others mention jerkiness in Eyefinity, so I don't know if it's limited to Eyefinity, but it's definitely not there on a single screen at 2560 resolution. Of course this won't stop people who haven't used both setups from using it as ammo to pounce, but I'm honestly confused when I see people mention it. I never saw it, ever. Most of the people commenting on it seem to be people who have never used either setup, so I won't say that I completely discount it, but I'm just confused. Shrug, I dunno. I really don't plan on messing with surround in the near future.
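For anyone wondering what frame time measurements would show that fps alone doesn't, here is a rough Python sketch. The numbers are made up purely for illustration and are not measurements of any card, and `summarize` and its "jitter" figure are my own hypothetical helper, not a standard benchmark metric. It just shows how two runs can have the same average fps while one alternates short and long frames the way AFR microstutter is usually described.

```python
from statistics import mean

def summarize(frame_times_ms):
    """Return (average fps, mean frame-to-frame change in ms)."""
    avg_ms = mean(frame_times_ms)
    avg_fps = 1000.0 / avg_ms
    # Mean absolute difference between consecutive frame times:
    # a crude stand-in for "microstutter", not a standard metric.
    deltas = [abs(b - a) for a, b in zip(frame_times_ms, frame_times_ms[1:])]
    jitter_ms = mean(deltas)
    return avg_fps, jitter_ms

# Made-up numbers for illustration only, not measurements of any card.
even = [20.0] * 8            # every frame takes 20 ms
uneven = [10.0, 30.0] * 4    # AFR-style short/long alternation

even_fps, even_jitter = summarize(even)
uneven_fps, uneven_jitter = summarize(uneven)

print(f"even:   {even_fps:.1f} fps, {even_jitter:.1f} ms frame-to-frame change")
print(f"uneven: {uneven_fps:.1f} fps, {uneven_jitter:.1f} ms frame-to-frame change")
```

Both runs average out to the same fps, so an fps bar chart can't tell them apart; only the per-frame numbers show the difference.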

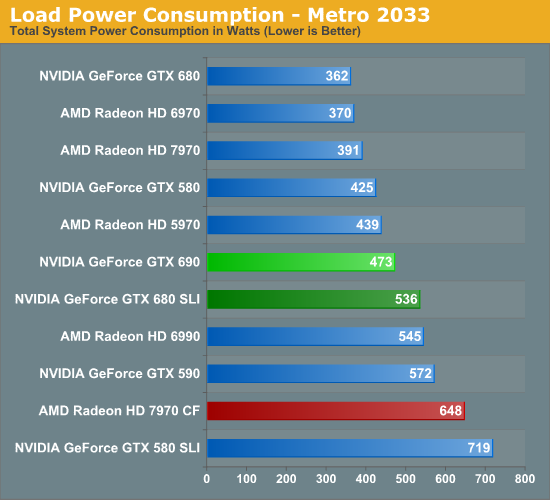

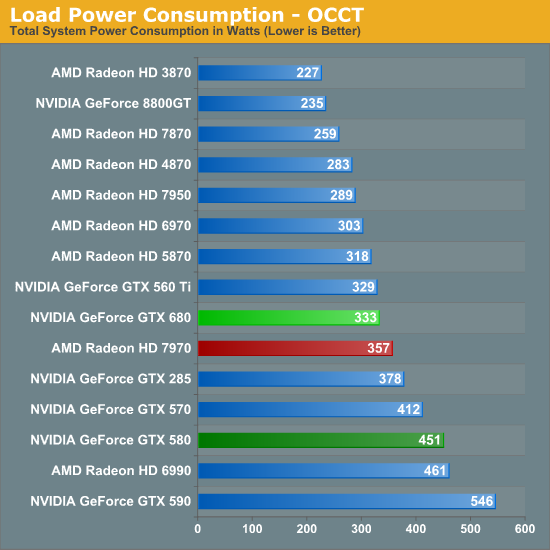

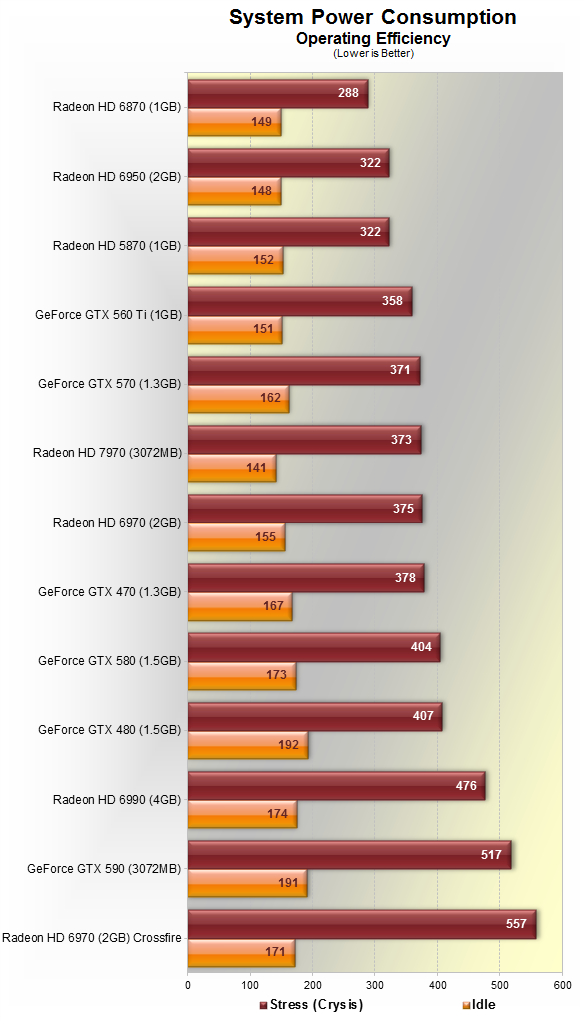

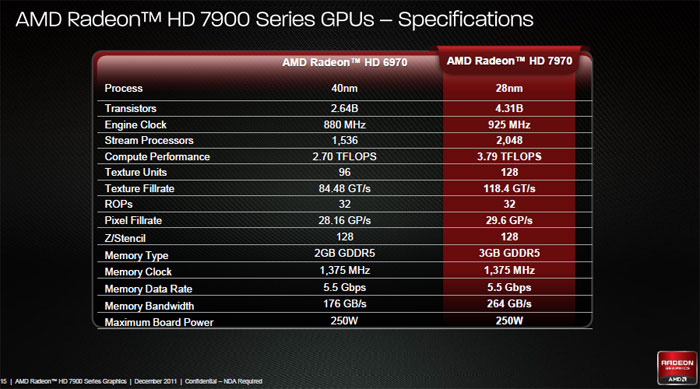

Anyway, if this news is true, the 7990 won't win an outright battle with the 690 because Kepler is a more efficient chip. However, there are definitely things that work in the 7990's favor: price ($849 rumored), possibly availability, overclockability, no voltage lock (you cannot overvolt the 690 at all), and Eyefinity tools (Eyefinity desktop management is said to be worlds better than NV Surround). Of course the 690 will win on other metrics as well: it will have better efficiency and all of the benefits of being in the NVIDIA ecosystem in terms of good software support. So the 690 might win overall, but there are definitely things to like about the 7990 if these rumors are true. I think it's certain this card will be great for water cooling because it doesn't impose any limitations on voltage. We'll have to wait and see if the rumored news is true, I guess.
