Will update this list as more come along.

ArsTechnica:

(Ryzen 5800X3D, Asus ROG Crosshair VIII Dark Hero, 64GB DDR4-3200, Windows ???)

https://arstechnica.com/gadgets/202...0-gpus-are-great-4k-gaming-gpus-with-caveats/

Gamers Nexus:

https://www.youtube.com/watch?v=We71eXwKODw

Guru3D:

(Ryzen 5950X, ASUS X570 Crosshair VIII HERO, 32 GB (4x 8GB) DDR4 3600 MHz, Windows 10)

https://www.guru3d.com/articles-pages/amd-radeon-rx-7900-xtx-review,1.html

Hardware Canucks:

(Ryzen 7700X, Asus X670E ROG Crosshair Hero, 32GB DDR5-6000, Windows 11)

https://www.youtube.com/watch?v=t3XPNr506Dc

Hardware Unboxed:

(Ryzen 5800X3D, MSI MPG X570S Carbon Max WiFi, 32GB DDR4-3200, Windows 11)

https://www.youtube.com/watch?v=4UFiG7CwpHk

Igor's Lab:

(Ryzen 7950X, MSI MEG X670E Ace, 32GB DDR5-6000)

https://www.igorslab.de/en/amd-rade...giant-step-ahead-and-a-smaller-step-sideways/

Jay's Two Cents:

https://www.youtube.com/watch?v=Yq6Yp2Zxnkk

KitGuruTech:

(Intel 12900K, MSI MAG Z690 Unified, 32GB DDR5)

https://www.youtube.com/watch?v=qThrADqleD0

Linus Tech Tips:

https://www.youtube.com/watch?v=TBJ-vo6Ri9c

Paul's Hardware:

(Ryzen 7950X, Asus X670E ROG Crosshair Hero, 32GB DDR5-6000, Windows 11)

https://www.youtube.com/watch?v=q10pefkW2qg

PC Mag:

(Intel 12900K, Asus ROG Maximus Z690 Hero, 32GB 5600MHz, Windows 11)

https://www.pcmag.com/reviews/amd-radeon-rx-7900-xtx

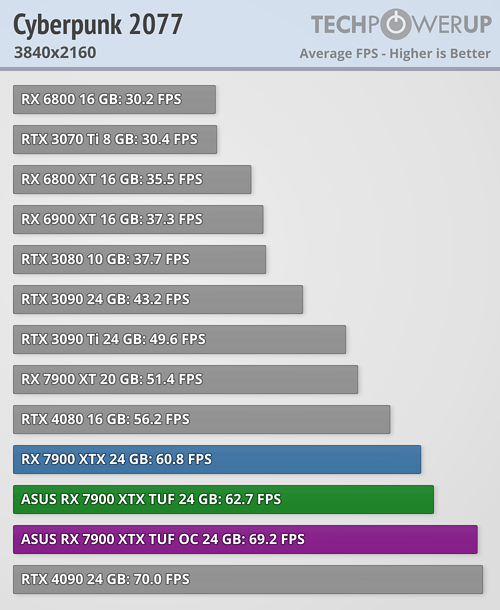

Tech Power Up:

(Intel 13900K, ASUS Z790 Maximus Hero, 2x 16 GB DDR5-6000 MHz, Windows 10)

AMD: https://www.techpowerup.com/review/amd-radeon-rx-7900-xtx/

ASUS: https://www.techpowerup.com/review/asus-radeon-rx-7900-xtx-tuf-oc/

XFX: https://www.techpowerup.com/review/xfx-radeon-rx-7900-xtx-merc-310-oc/

Tech Spot:

(Ryzen 5800X3D, MSI MPG X570S, 32GB of dual-rank, dual-channel DDR4-3200 CL14, Windows ???)

https://www.techspot.com/review/2588-amd-radeon-7900-xtx/

TechTesters:

(Intel 13900K, ASUS ROG Maximus Z790 HERO, 32GB DDR5-6000, Windows 11)

https://www.youtube.com/watch?v=3uQh4GkPopQ
