
Taking a look back at the leaks of Hamoa


First leak (November 2022), 20 months before X Elite's release

> In other news: Qualcomm's working on a 2024 desktop chip codename "Hamoa" with up to 12 (8P+4E) in-house cores (based on the Nuvia Phoenix design), similar mem/cache config as M1, explicit support for dGPUs and performance that is "extremely promising", according to my sources.

Second leak (January 2023), 17 months before X Elite's release

> First of all - the CPU.
>
> As I previously leaked, the highest model of Hamoa has 8 performance cores and 4 power efficient ones. Qualcomm is testing the chip at ~3.4GHz (performance cores) and ~2.5GHz (efficient cores)

The efficiency-core part is wrong. X Elite has only performance cores, 12 of them, and yes, the all-core clock is 3.4 GHz (except in the X1E-84, which runs at 3.8 GHz).

> Each block of 4 cores has 12MB of shared L2 cache. There is also 8MB of L3 cache.
>
> Additionally there is 12MB of system-level cache, as well as 4MB of memory for graphics use cases.

The L2 cache amount is correct. The L3 cache is wrong: there is no L3 cache. The system-level cache is 6 MB.

> As for RAM, the integrated controller supports up to 64GB of 8-channel LPDDR5x with optional low-power features at up to 4.2GHz

Correct.
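For a rough sense of what that memory configuration implies, the theoretical peak bandwidth can be estimated from the leaked figures. This is only a sketch: it assumes each LPDDR5x channel is 16 bits wide and that the quoted 4.2 GHz is a clock with two transfers per cycle, neither of which the leak states. The function name and parameters are hypothetical, chosen for illustration.

```python
def peak_bandwidth_gb_s(channels: int, clock_ghz: float,
                        bits_per_channel: int = 16,    # assumption: LPDDR5x channel width
                        transfers_per_clock: int = 2,  # assumption: double data rate
                        ) -> float:
    """Theoretical peak memory bandwidth in GB/s (decimal gigabytes)."""
    bus_bytes = channels * bits_per_channel / 8          # total bus width in bytes
    giga_transfers = clock_ghz * transfers_per_clock     # GT/s across the bus
    return bus_bytes * giga_transfers


# Figures from the leak: 8 channels at up to 4.2 GHz
print(peak_bandwidth_gb_s(channels=8, clock_ghz=4.2))
```

Under those assumptions the leaked configuration works out to roughly 134 GB/s of peak bandwidth.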

> The integrated GPU is Adreno 740 - the same one as in Snapdragon 8 Gen 2. Qualcomm will be providing support for DirectX 12, Vulkan 1.3, OpenCL as well as DirectML

Kinda correct, I suppose? The GPU in the X Elite identifies itself as the Adreno 741. It's based on the Adreno 740: same number of ALUs, but running at 1.5 GHz (twice the clock speed of the 740).

> The 12 core SKU also provides support for discrete GPUs over its 8 lanes of PCIe 4.0.
>
> There are also 4 lanes (configurable as 2x2) of PCIe 4.0 for NVMe drives, as well as some PCIe 3.0 lanes for the WiFi card and the modem.

Correct.

> The WiFi subsystem will support WiFi 7.
>
> As for the modem, Qualcomm seems to be recommending (external) X65.

Correct.

> For integrators not wanting to use NVMe for the boot drive, Qualcomm included a 2-lane UFS 4.0 controller with support of up to 1TB parts

Correct. The Samsung Galaxy Books are the only laptops to feature UFS storage so far.

> Qualcomm has also updated their Hexagon Tensor Processor to provide up to 45 TOPS (INT 8) of AI performance

Absolutely correct!

> The chip also provides ample user-accessible IO: two USB 3.1 10Gbps ports, as well as three USB 4 (Thunderbolt 4) ports with DisplayPort 1.4a.

Correct.

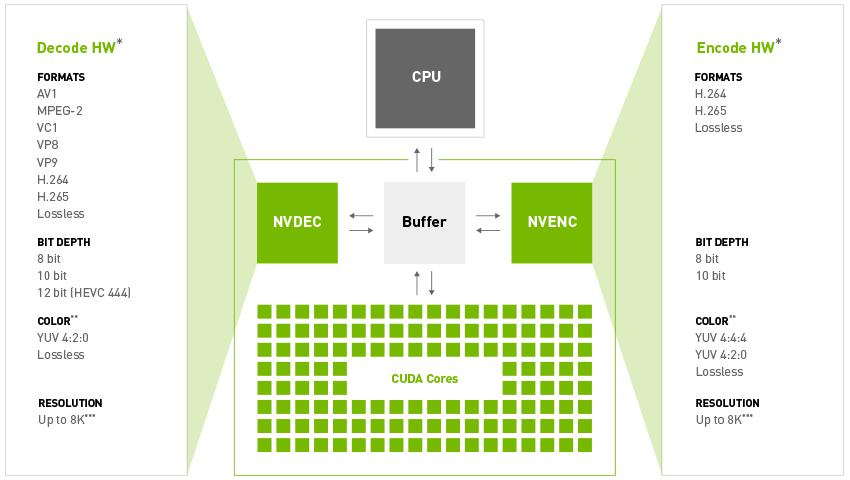

> The video encode/decode block has also seen great improvements:
>
> The chip can decode up to 4K120 and encode up to 4K60, including AV1 in both cases

Correct!

Overall, I am surprised how many things the leak got right (although there were several misses)!

Why did I write this? Because we just got the first leak of the next generation of Snapdragon X. We are 16-22 months away from its release (if the leak is to be believed that it'll come out in 2026H1).
