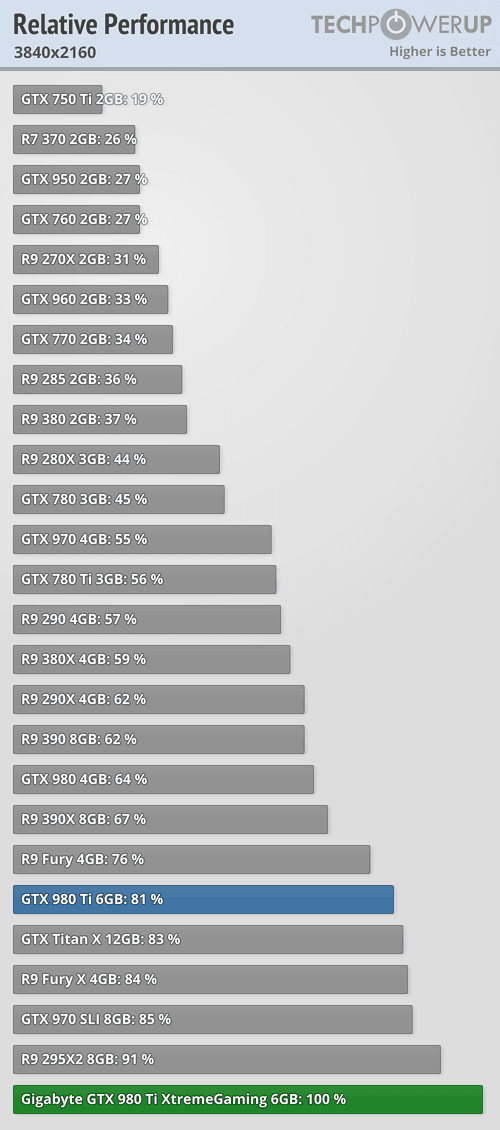

I think Polaris will regain the perf/mm² lead over Nvidia, even with normalized die sizes.

And perf/W goes to Polaris too, thanks to 14LPP's power advantage over 16FF+ (remains to be seen, but I bet that it will).

I think so as well, and for several reasons.

One is that GF's announced perf/watt improvements can be summarized (relative to their own 28nm SLP) as either:

- 0.286x the power at the same frequency

- or 1.989x the frequency at the same power.

The second is that TSMC announced (relative to their own 28nm HP) either:

- 0.3x the power at the same frequency

- or 1.65x the frequency at the same power.

Obviously GF shows the bigger improvements, but what matters even more is that their 28nm is 10-15% faster and 19-38% less leaky than TSMC's, so they improve more in percentage terms while starting from better specs.
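To put a rough number on that, here is a quick back-of-the-envelope sketch in Python. It assumes the foundry claims simply compound multiplicatively and that GF's 10-15% speed edge at 28nm applies to the same baselines the claims were quoted against, which is my simplification and not something either foundry states. The variable and function names are made up for illustration.

```python
# Figures quoted above; each gain is relative to the foundry's own 28nm node.
GF_FREQ_GAIN = 1.989                 # GF: new node vs GF 28nm SLP, same power
TSMC_FREQ_GAIN = 1.65                # TSMC: new node vs TSMC 28nm HP, same power
GF_28NM_SPEED_EDGE = (1.10, 1.15)    # GF 28nm claimed 10-15% faster than TSMC 28nm


def relative_frequency(edge: float) -> float:
    """GF new-node frequency relative to TSMC's at the same power.

    Assumption: the 28nm speed edge and the node-to-node gains
    multiply together cleanly (a simplification, not a foundry claim).
    """
    return edge * GF_FREQ_GAIN / TSMC_FREQ_GAIN


low, high = (relative_frequency(e) for e in GF_28NM_SPEED_EDGE)
print(f"GF vs TSMC frequency at same power: {low:.2f}x to {high:.2f}x")
```

Under those assumptions the gap works out to roughly 1.33x to 1.39x in frequency at the same power. Real silicon will not follow marketing ratios this neatly, so treat it as an upper-bound style estimate.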

Also, AMD's Tahiti, and surely Hawaii, use TSMC's 28nm HP, which is one reason for their higher TDP compared with their Nvidia counterparts. Those apparently use a customised 28nm HPM that is 25% less leaky and 20% faster according to TSMC's numbers; the operating voltages show this beyond dispute.

Had they used GF's 28nm (which wasn't ready in time), Hawaii would have improved by the same ratio as Kabini did when it was ported to GF.