Got it. Thanks for clarifying. In the meantime, if someone wants more than decent 1080p performance and/or barely adequate 1440p performance and minimum VR performance, AMD has nothing for them.

LOL! Coming from a guy who bought a GTX980 for $550-600 less than 2 years ago. Today, AMD's $199-229 card is within 10-15% of that overpriced garbage 980! Generation after generation these relative concepts seem completely foreign to you.

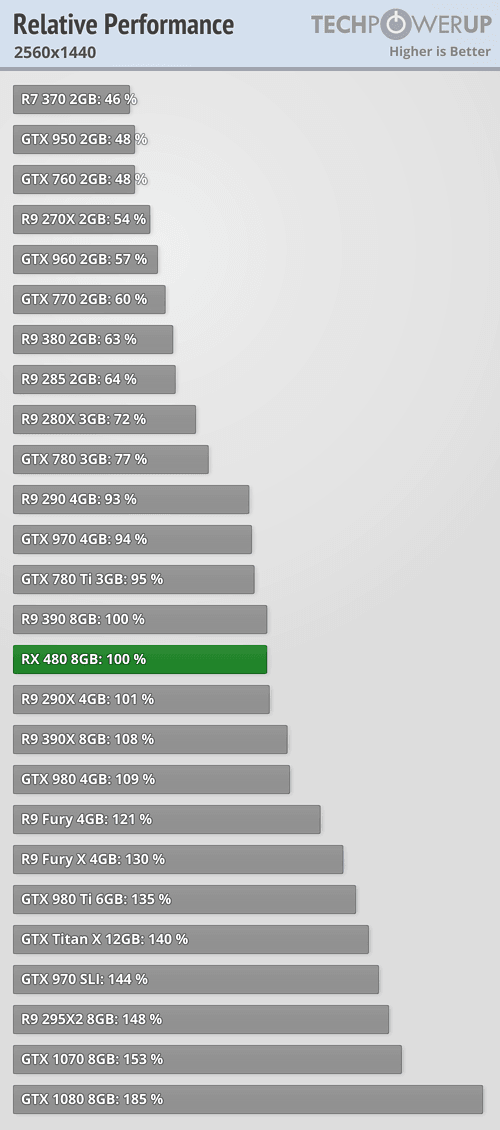

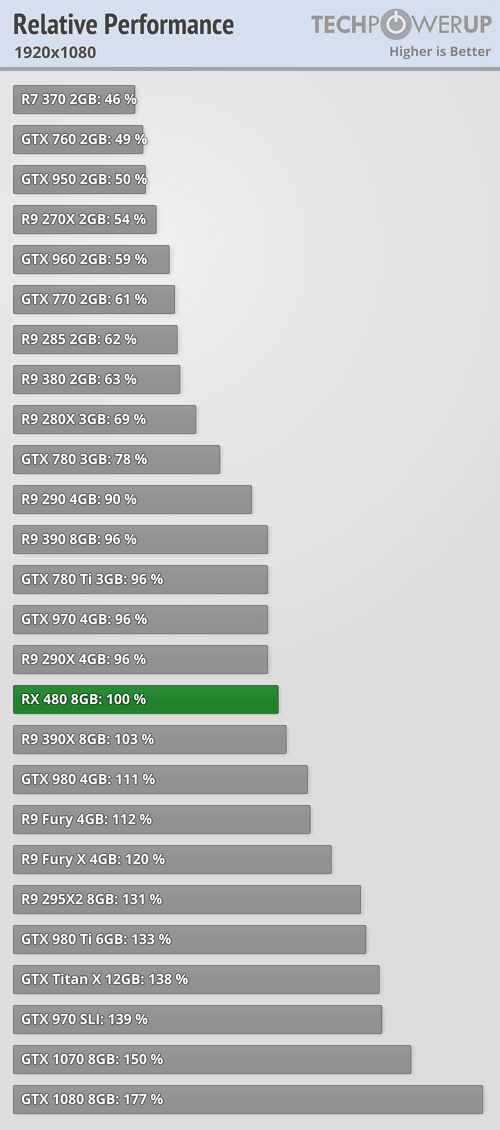

At 1080p, RX 480 is nearly 70% faster than a GTX960. How many times have you recommended R9 280X/380X/290 over GTX960? How many times have you recommended R9 270/270X over GTX750/750Ti?

https://www.techpowerup.com/reviews/AMD/RX_480/24.html

For the last 2 years, you defended/recommended GTX750/750Ti/950/960, while completely ignoring lack of longevity of NV cards and their horrible price/performance. If you actually cared about the best interests of PC gamers, you'd be objectively recommending some NV cards like GTX970 and 980Ti, while nailing the trash that they released in the last 10 years.

As I said before and it's only more evident: All you care about are metrics where NV wins, while constantly ignoring the price/performance metric as if it's irrelevant. Secondly, the ONLY reason you care for AMD to improve perf/watt and perf/mm2 is so that they give more competition to NV so that you pay less for NV cards.

On this forum, there have been many, many people who rightfully criticized AMD/ATI's cards over the years. You should be one of the LAST people to do so because you haven't bought an AMD/ATI card since the 9800 series.

That means, you managed to buy 3 of the worst NV architectures of all time:

FX5800/5900 series = pure trash

GeForce 7 = more trash

Fermi = the very architecture that failed in nearly every metric you keep discussing in modern times (perf/mm2, perf/watt, price/performance)

No offense, but your criticism against all AMD products has no merit in this case since you didn't purchase any excellent AMD/ATI cards since, what, 2003?

Your other thread, titled "Polaris vs. Pascal", is just as flawed or is intentionally misleading.

First, you compare ASICs (chips) but ignore that RX 480 and GTX1080 are videocards, not just chips. That means you are penalizing RX 480 for not having GDDR5X or alternatively, you are giving GTX1080 a huge advantage. Since RX 480 is a $199-239 product, it would have made no sense to have GDDR5X for it. Therefore, what you are really comparing is a RX 480 videocard vs. GTX1080 videocard, not GCN 4.0 architecture vs. Pascal.

Secondly, you assign no value whatsoever to exponential gains that come from shifting from 32 to 64 ROPs (hint: R9 290X vs. HD7970Ghz). This means the 314mm2 to 232mm2 comparison inherently assumes that a hypothetical 300mm2 Polaris 10 would scale non-linearly against the 232mm2. Another way to look at it, is if NV halved the number of ROPs in GTX1080 to 32 ROPs and used only GDDR5 and made a 250mm2 chip, it would get destroyed by the current GP104 1080 SKU. You don't understand the context of how videocards are made so you blindly compare perf/mm2. The biggest facepalm here is you bought GTX275 over HD4890, then GTX570 over HD6950/6970 and you bought GTX780 over R9 290, but like a broken record you keep discussing perf/mm2.

Additionally, in all of your comparisons of GTX970/980 to RX 480, you completely ignore DX12 performance where Maxwell bombs.

Let's face it, even if GTX1060 lost to RX 480 in the price/performance metric, and got gimped by 3GB of VRAM, you'd still recommend GTX1060 over RX 480. In the case of GTX1060 6GB vs. RX 480 4GB, you'd recommend people spend the extra $ on the 1060 6GB. Amiright?

I hate to say it, but I love all the hype and inevitable disappointment. It's unfortunate, but extremely entertaining. I wish AMD were able to be more competitive, but the reality is they are spread too thin on R&D and resources.

It's even sadder that someone gets satisfaction from less competition. Maybe this actually explains why you like posting so many anti-AMD posts -- you just like to stir things up as you get satisfaction from AMD failing.

It's interesting you want to discuss how a $199-239 card is a "failure" in all key ways but ignore how the mighty $650 780 and then $699 780Ti turned into giant turds!

I am pretty sure it's a bigger failure to have a $700 780Ti getting smoked by a reference $399 R9 290 in modern games. The $500-650 GTX780 looks laughable against a $299 R9 280X or a $399 R9 290. Shows how much of a failure the Kepler architecture was -- the very architecture you bought not once, but twice (!!) with GTX670 2GB and GTX780 3GB. Now you are advising AMD to leave the high-end?

It probably would have been best if they just abandoned the high end again and devoted more resources to making Polaris better, then using a Polaris X2 card to compete on the high end.

What do you care? You don't buy AMD graphics cards. Let AMD and objective/brand-agnostic PC gamers decide what's best for AMD. Even when AMD had a 6-month lead with HD5870, you didn't buy that. Then when AMD had clearly superior videocards with HD7950/7970, you still bought the 670 2GB. You even managed to buy a 780 -- easily one of the worst cards ever made -- instead of the R9 290. :sneaky:

Do you know how your posts actually appear for the outside world?

"ATI/AMD have failed .... blah blah blah. Buys NV"

"ATI/AMD have an amazing product....Buys NV"

What's the real reason you are so upset that AMD is failing? Because you'll be paying $600+ for a mid-range GTX1080, and deep down you aren't thrilled that you "have no choice" since "AMD forced you to pay those prices", amiright?

:thumbsup:

I wish AMD were able to be more competitive, but the reality is they are spread too thin on R&D and resources.

Ya, and how much have you personally contributed to AMD's R&D by buying their excellent cards over the last 10-13 years? Poor AMD. Even when HD6950 2GB unlocked into an HD6970 and made hundreds of dollars with Bitcoins, and cost $230-250, you still bought a $350 GTX570 1.28GB. They never stood a chance!

For crying out loud, you actually defended NV locking down voltage on Kepler as a way to reduce RMA on their cards. Who the hell cares about NV's RMA from overvoltage control unless you are an NV stockholder...

At least they support their hardware with updated drivers even after the new models are out. I bet the 980 ti falls off a cliff compared to the 1070 in the next year or two. Is that good business for those that paid 6 or 7 hundred dollars for a 980 ti?

$699 780Ti November 2013

$549 GTX980 September 2014

--

$239 RX 480 4GB is faster than GTX780Ti and is within 10% of GTX980 (and in future DX12 games, the 980 would probably get beaten).
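To put a rough number on that, here is a quick back-of-the-envelope sketch in Python. Only the prices come from the list above. Treating the GTX980 as a 1.00 baseline and reading "within 10%" as 0.90 for the RX 480 is my assumption, and `perf_per_dollar` is just an illustrative helper name, so take the result as a ballpark and not a benchmark.

```python
# Rough price/performance sketch using the launch prices quoted above.
# ASSUMPTION: GTX980 is the 1.00 baseline and "within 10%" is read as 0.90
# for the RX 480 4GB. These are illustrative figures, not benchmark results.

def perf_per_dollar(relative_perf: float, price_usd: float) -> float:
    """Relative performance delivered per dollar spent."""
    if price_usd <= 0:
        raise ValueError("price must be positive")
    return relative_perf / price_usd

cards = {
    "GTX980": {"relative_perf": 1.00, "price_usd": 549},
    "RX 480 4GB": {"relative_perf": 0.90, "price_usd": 239},
}

baseline = perf_per_dollar(**cards["GTX980"])
for name, spec in cards.items():
    ratio = perf_per_dollar(**spec) / baseline
    print(f"{name}: {ratio:.2f}x the perf per dollar of a GTX980")
```

Under those assumptions the RX 480 4GB lands at roughly twice the performance per dollar of the 980 at its launch price. Swap in whatever relative performance figure you trust from the review charts.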

Polaris 10's perf/watt is very disappointing to me but as a product aimed at the mainstream market, $199-239 is a good deal. In fact, given the level of performance of this videocard, I'd actually not hesitate at all to recommend the cheaper $199 4GB version and just use that as a stop-gap for 2 years; then upgrade to a newer $200-250 card in 2018. Looking at the level of performance for RX 480, I am not sure the premium for the 8GB card even makes sense for those planning to keep the card for 2 years or less.