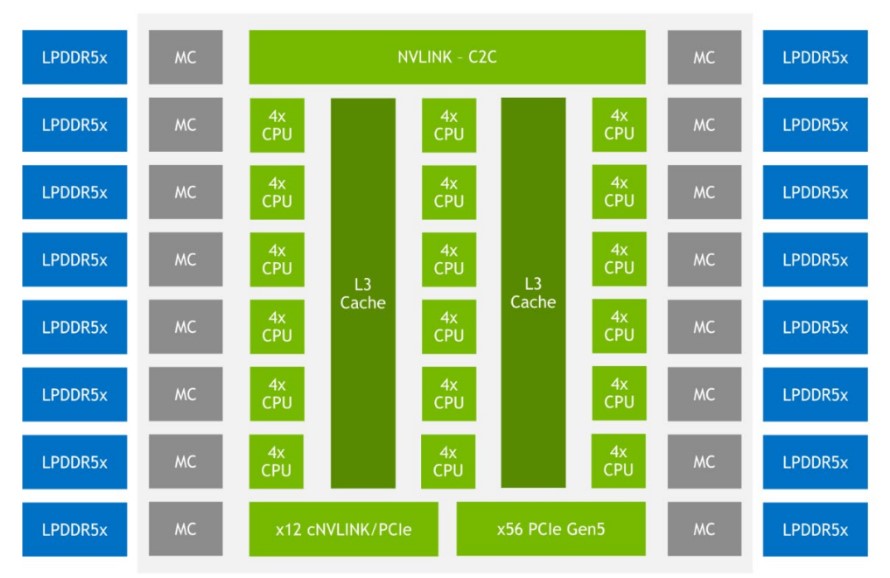

A very rough estimate from the blurry image in the STH article: Grace looks to be around 80% of the size of Hopper.

~650-675 mm^2

Not too far from the 698 mm^2 Skylake-XCC.
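
For anyone who wants to redo the arithmetic, here is a quick sketch. It assumes the commonly quoted 814 mm^2 figure for the Hopper (GH100) die, which is not from the image itself, and it treats the "around 80%" eyeball ratio as a range of 0.80 to 0.83. The function name is just for illustration.

```python
# Back-of-the-envelope die area estimate from an eyeballed size ratio.
# Assumption: Hopper (GH100) die area of 814 mm^2 (commonly quoted figure).
HOPPER_MM2 = 814.0
# Skylake-XCC figure as quoted in the post above.
SKYLAKE_XCC_MM2 = 698.0


def scaled_area(ratio, reference_mm2=HOPPER_MM2):
    """Area of a die that is `ratio` times the size of the reference die."""
    return ratio * reference_mm2


# The eyeballed ratio is fuzzy, so compute a low and a high bound.
low = scaled_area(0.80)
high = scaled_area(0.83)

print(f"Estimated Grace die area: {low:.0f}-{high:.0f} mm^2")
print(f"Relative to Skylake-XCC: {low / SKYLAKE_XCC_MM2:.2f}-{high / SKYLAKE_XCC_MM2:.2f}x")
```

With a rendering this blurry, the ratio is easily off by a few percent either way, so the result is only good to the nearest 25 mm^2 or so.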

Looking at it more closely, it might be another SPR (Sapphire Rapids) situation. Some of those cores/modules near the memory interfaces might actually be the memory controllers, which would take 8 out of your calculations and leave a more reasonable 4 spare cores for yield. My only basis for that is that they look a bit darker.

The image is almost certainly just a rendering; the STH article mentions that. I don’t know if the process it will be made on is even finalized yet. They might have based the image on the current state of the design work, but it could just as well be a completely fake graphic. You can’t base much on it.

Given the current data, it will not be usable for a lot of applications. Some applications do a significant amount of work on the CPU before sending data to the GPU, and this CPU will likely be too slow for many of them. There is no point in buying this if the expensive GPU portion would be underutilized due to insufficient CPU power. Not everything can easily be run on the GPU.
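
To put a rough number on the underutilization point, here is a toy model of a two-stage pipeline where the CPU prepares each batch and the GPU processes it. All timings are made up for illustration, and the model assumes the two stages run concurrently in steady state, so the slower stage sets the pace.

```python
# Toy steady-state model of a CPU-preprocess -> GPU-compute pipeline.
# All timings below are hypothetical; they are not measurements of any real part.

def gpu_utilization(cpu_s_per_batch, gpu_s_per_batch):
    """Fraction of time the GPU is busy when both stages run concurrently.

    The slower stage determines how often a new batch starts, so the GPU is
    busy for gpu_s_per_batch out of every max(cpu, gpu) seconds.
    """
    period = max(cpu_s_per_batch, gpu_s_per_batch)
    return gpu_s_per_batch / period


GPU_S = 0.010       # hypothetical: 10 ms of GPU work per batch
FAST_CPU_S = 0.008  # hypothetical: CPU keeps up with the GPU
SLOW_CPU_S = 0.020  # hypothetical: CPU takes twice as long as the GPU

print(f"fast CPU: GPU busy {gpu_utilization(FAST_CPU_S, GPU_S):.0%}")
print(f"slow CPU: GPU busy {gpu_utilization(SLOW_CPU_S, GPU_S):.0%}")
```

In this model a CPU that is twice as slow as the GPU stage leaves the GPU idle half the time, no matter how fast the link between them is.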

I suspect AMD and Intel will both have a similar solution. They will have much more powerful CPUs and likely GPUs of similar capability. They won’t have the CUDA lock-in, though. A lot of applications don’t actually need that much bandwidth to system memory, so they will be able to get by with PCI Express levels of bandwidth. It depends on the algorithm and the amount of processing required. For many things, the copy to GPU memory can be overlapped with the compute to get good utilization of the GPU. They can also use multiple smaller GPUs, each with its own separate link.
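
A rough sketch of why overlapping helps, as a simple timing model rather than real GPU code. It assumes double buffering (chunk i+1 is copied while chunk i is computed), a link of about 32 GB/s (roughly a PCIe 4.0 x16 link in one direction), and made-up chunk sizes and compute times.

```python
# Timing model: serial copy-then-compute vs. copy overlapped with compute.
# Assumptions: ~32 GB/s host-to-device link; chunk size and compute time are hypothetical.

def serial_time(n_chunks, copy_s, compute_s):
    """Each chunk is copied, then computed, with no overlap."""
    return n_chunks * (copy_s + compute_s)


def overlapped_time(n_chunks, copy_s, compute_s):
    """Double buffering: the copy of chunk i+1 runs during the compute of chunk i.

    The first copy and the last compute cannot be hidden; in between, the
    slower of the two stages sets the pace.
    """
    return copy_s + (n_chunks - 1) * max(copy_s, compute_s) + compute_s


LINK_GB_PER_S = 32.0  # assumed link bandwidth
CHUNK_GB = 1.0        # hypothetical chunk size
COMPUTE_S = 0.1       # hypothetical GPU time per chunk
N_CHUNKS = 10

copy_s = CHUNK_GB / LINK_GB_PER_S
t_serial = serial_time(N_CHUNKS, copy_s, COMPUTE_S)
t_overlap = overlapped_time(N_CHUNKS, copy_s, COMPUTE_S)
busy = N_CHUNKS * COMPUTE_S  # total time the GPU spends computing

print(f"serial:     {t_serial:.3f} s, GPU busy {busy / t_serial:.0%}")
print(f"overlapped: {t_overlap:.3f} s, GPU busy {busy / t_overlap:.0%}")
```

As long as the compute per chunk takes longer than the copy, the link is almost entirely hidden. Once the copy time exceeds the compute time, the link becomes the limit, and that is the case where a faster CPU-to-GPU connection would actually pay off.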