- Jun 1, 2017


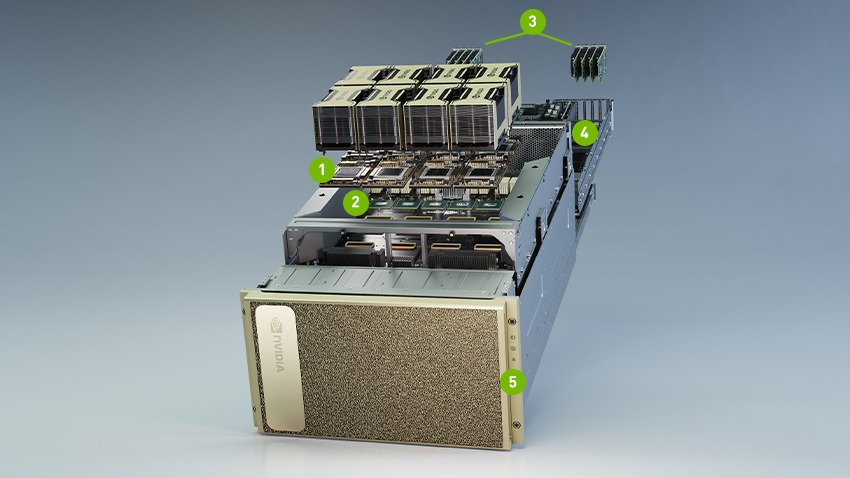

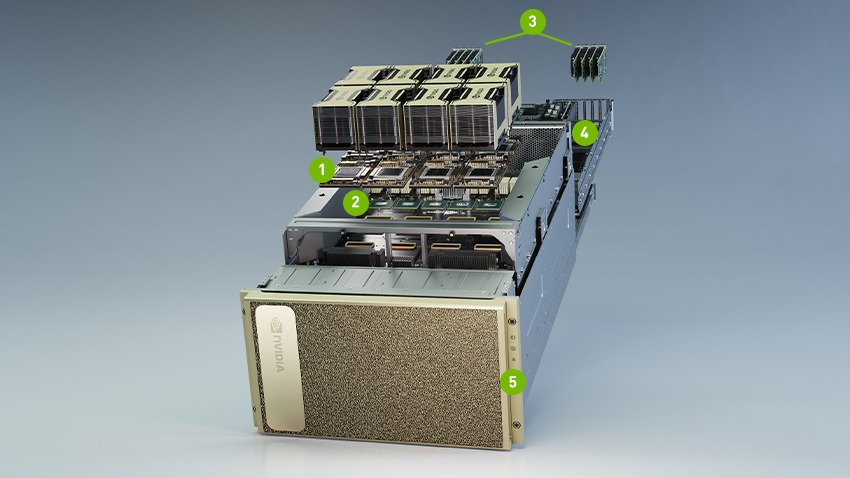

And that's quite a massive system they're offering there: "the universal system for all AI workloads".

Related yellow press reporting:

- 8X NVIDIA A100 GPUS WITH 320 GB TOTAL GPU MEMORY
  - 12 NVLinks/GPU, 600 GB/s GPU-to-GPU Bi-directional Bandwidth
- 6X NVIDIA NVSWITCHES
  - 4.8 TB/s Bi-directional Bandwidth, 2X More than Previous Generation NVSwitch
- 9X MELLANOX CONNECTX-6 200 Gb/s NETWORK INTERFACE
  - 450 GB/s Peak Bi-directional Bandwidth
- DUAL 64-CORE AMD CPUs AND 1 TB SYSTEM MEMORY
  - 3.2X More Cores to Power the Most Intensive AI Jobs
- 15 TB GEN4 NVME SSD
  - 25 GB/s Peak Bandwidth, 2X Faster than Gen3 NVME SSDs
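Several of the headline numbers in that list are just aggregates of per-component figures. A quick Python sketch shows how they add up, assuming 40 GB per A100, 50 GB/s bidirectional per third-generation NVLink, and a DGX-1 baseline of 2x 20 CPU cores for the "3.2X more cores" claim. None of those per-unit values are stated in the post. The NVSwitch figure also appears to simply be 8 GPUs times 600 GB/s, which is my reading rather than anything NVIDIA spells out here.

```python
# Back-of-the-envelope check of the DGX A100 aggregate figures quoted above.
# Per-unit values marked "assumption" are not from the post itself.

GPUS = 8
GB_PER_GPU = 40                # assumption: 40 GB A100 variant
NVLINKS_PER_GPU = 12
GBS_PER_NVLINK_BIDIR = 50      # assumption: 25 GB/s each way per 3rd-gen NVLink
NICS = 9
GBIT_PER_NIC = 200             # ConnectX-6 port speed in Gb/s
CPU_CORES = 2 * 64
BASELINE_CORES = 2 * 20        # assumption: DGX-1 with dual 20-core Xeons


def dgx_a100_totals():
    """Derive the headline aggregate numbers from per-component values."""
    gpu_memory_gb = GPUS * GB_PER_GPU
    nvlink_per_gpu_gbs = NVLINKS_PER_GPU * GBS_PER_NVLINK_BIDIR
    # Assumption: the NVSwitch fabric figure is the sum over all GPUs' NVLink bandwidth.
    nvswitch_fabric_tbs = GPUS * nvlink_per_gpu_gbs / 1000
    # Convert Gb/s to GB/s (divide by 8), then count both directions (times 2).
    nic_bidir_gbs = NICS * GBIT_PER_NIC / 8 * 2
    core_ratio = CPU_CORES / BASELINE_CORES
    return {
        "gpu_memory_gb": gpu_memory_gb,
        "nvlink_per_gpu_gbs": nvlink_per_gpu_gbs,
        "nvswitch_fabric_tbs": nvswitch_fabric_tbs,
        "nic_bidir_gbs": nic_bidir_gbs,
        "core_ratio": core_ratio,
    }


if __name__ == "__main__":
    for name, value in dgx_a100_totals().items():
        print(f"{name}: {value}")
```

With those assumptions the script lands on 320 GB, 600 GB/s, 4.8 TB/s, 450 GB/s and 3.2X, matching the marketing sheet.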
