cusideabelincoln

Diamond Member

- Aug 3, 2008

> Again, have a look at the results of the Phenom II X2 550 in the AlienBabelTech review.
>
> http://alienbabeltech.com/main/?p=22167
>
> You will see that both the GTX 480 and the HD 5870 exhibit the same behavior with the dual-core Phenom II X2 550, and you will not find a trend forming that would justify saying AMD cards perform better with dual-core CPUs.

Here's where I see it:

Left 4 Dead

It's minimal, but you can see it in the dual-core clock-speed scaling: the 5870 drops from 108 to 97 fps and the 480 drops from 104 to 91 fps (at 1920x1200).
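To put those two drops on a common scale, here's a quick sketch that converts each one into a percentage. It's just arithmetic on the Left 4 Dead numbers quoted above; the `percent_drop` helper is my own naming, not anything from the review.

```python
# Left 4 Dead dual-core scaling numbers quoted above, in fps:
# (faster CPU clock, slower CPU clock) for each card.
results = {
    "HD 5870": (108, 97),
    "GTX 480": (104, 91),
}

def percent_drop(high_fps: float, low_fps: float) -> float:
    """Relative frame-rate loss when moving to the slower CPU clock, in percent."""
    return (high_fps - low_fps) / high_fps * 100

for card, (high, low) in results.items():
    print(f"{card}: {high} -> {low} fps, {percent_drop(high, low):.1f}% drop")
```

That works out to roughly a 10.2% drop for the 5870 versus 12.5% for the 480, so the 480 loses a slightly larger share of its frame rate as the CPU slows down.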

X3:TC

This game is obviously heavily CPU-limited, so it doesn't show quite the same kind of scaling. What it does show is that when the 480 is CPU-constrained it ever so slightly trails the 5870. But if you look at the i7-920 @ 3.8 GHz results, you'll indeed see the 480 slightly ahead of the 5870.

Far Cry 2

The results speak for themselves, really.

World in Conflict

This game shows a similar pattern to X3:TC, but it's easier to see here. With the slowest processor the 5870 is clearly faster; with the fastest processor the 480 is clearly faster.

Just Cause 2

The 480 needs a Core i7 to best the 5870, otherwise it falls behind by a significant margin.

Resident Evil 5

Well, there are odd results in the Phenom II X4 numbers, but if you look at everything else, especially the lower resolutions, you can see the pattern. With an i7-920 at 2.6 GHz the 5870 is faster; with the i7-920 at 3.8 GHz the 480 is faster. And if you focus on just the dual-core scaling numbers, you'll see a pattern similar to X3:TC, where both cards are bottlenecked but the 480 is ever so slightly behind.

HAWX

There are some interesting things going on with a single AMD card on the Intel platform at lower resolutions. But if you look at 2560x1600 you can see the same pattern: the 5870 is ahead when paired with the slowest processor, and the 480 pulls ahead when paired with a faster one. If you focus on just the dual-core results at various clock speeds, you'll see the trend as well: both cards are bottlenecked and the AMD card is slightly faster, but the 480 does pull ahead at 3.8 GHz at the higher resolutions.

-----------------------------------

So it doesn't happen all the time, but it happens often enough that it shouldn't be dismissed.

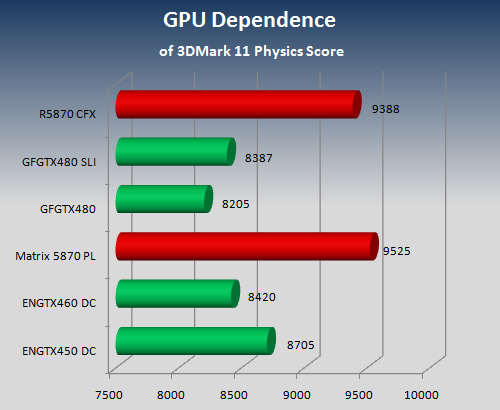

More testing needs to be done on midrange cards. We don't need to see every card, but it would be interesting to see the 6850, 460, 450, and 5750 tested with more reasonable CPU choices: an Athlon II X2, Athlon II X3, Core i3, Phenom II X4, Core i5, and Core i7.
