BFG10K


Introduction

I thought I’d do a quick write-up of the new DLDSR (Deep Learning Dynamic Super Resolution) just released in driver 511.23. DSR has been around for years, but this new version uses Tensor cores for the downscaling step instead of the shaders used by the old version.

I used an RTX 2070 with the 2.25x setting on a native 1440p display (4K effective render resolution) and the default 33% smoothing.
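The 2.25x factor refers to the total pixel count rather than each axis, which is why a 1440p display ends up rendering at 4K. Here is a minimal sketch of that arithmetic, assuming the factor scales each axis by its square root. The function name `dsr_render_resolution` is hypothetical and is not part of any nVidia API.

```python
import math


def dsr_render_resolution(native_w: int, native_h: int, factor: float) -> tuple[int, int]:
    """Return the internal render resolution for a DSR factor.

    Assumption: the DSR factor multiplies the total pixel count, so each
    axis is scaled by sqrt(factor). Rounding to the nearest integer is an
    illustrative choice, not necessarily what the driver does.
    """
    axis_scale = math.sqrt(factor)
    return round(native_w * axis_scale), round(native_h * axis_scale)


w, h = dsr_render_resolution(2560, 1440, 2.25)
print(w, h)                     # 3840 2160
print((w * h) / (2560 * 1440))  # 2.25
```

So 2.25x is 1.5x per axis, and the GPU shades 2.25 times as many pixels as native before any downscaling happens.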

Performance

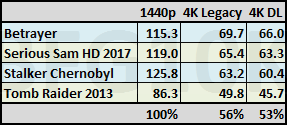

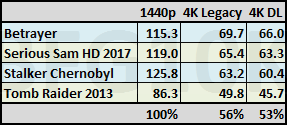

There’s a picture from nVidia showing Prey 2017 DLDSR 2.25x having the same performance as native, so let’s start there. I picked four older games that are still quite GPU limited at 1440p:

nVidia’s claim is clearly nonsense, and it’s obvious they cherry-picked a CPU-limited and/or frame-capped scene for their screenshot. If you’re rendering 4K worth of pixels, you’re going to take the 4K performance hit no matter how you downsample afterwards.

What’s even more interesting is that the new version is actually slower than the legacy version.

Image Quality

A quick subjective check in about half a dozen games shows DL is much sharper than legacy, because nVidia are employing their old trick of applying massive oversharpening to Tensor core output, à la DLSS. While it does look sharper, thin edges like ropes, cables and power lines show more pronounced stair-stepping with DL than with legacy.

nVidia’s claim that 2.25x DL image quality matches 4x legacy is also false. 4x has better image stability than 2.25x DL, both because it renders far more pixels (5120x2880 versus 3840x2160 on a 1440p display) and because it uses integer scaling (exactly 2x per axis).
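The difference between the two settings comes down to simple arithmetic on a 1440p display. The sketch below compares them, assuming (as above) that a DSR factor multiplies total pixels, so the per-axis scale is its square root. The helper `describe_dsr_factor` is hypothetical and is only for illustration.

```python
import math


def describe_dsr_factor(native_w: int, native_h: int, factor: float) -> dict:
    """Summarise render resolution, pixel count and per-axis scale for a DSR factor.

    Assumption: factor multiplies total pixels, so the per-axis scale is
    sqrt(factor). An integer per-axis scale means each native pixel covers a
    whole N x N block of rendered pixels; a fractional scale means rendered
    pixel boundaries don't line up with native ones.
    """
    axis_scale = math.sqrt(factor)
    render_w = round(native_w * axis_scale)
    render_h = round(native_h * axis_scale)
    return {
        "factor": factor,
        "render": (render_w, render_h),
        "pixels": render_w * render_h,
        "axis_scale": axis_scale,
        "integer_scale": axis_scale.is_integer(),
    }


for f in (2.25, 4.0):
    info = describe_dsr_factor(2560, 1440, f)
    print(info["factor"], info["render"], info["pixels"], info["integer_scale"])
# 2.25 (3840, 2160) 8294400 False
# 4.0 (5120, 2880) 14745600 True
```

4x renders roughly 1.78 times as many pixels as 2.25x, and its 2.0 per-axis scale maps each native pixel to an exact 2x2 block, while 2.25x works at a fractional 1.5x per axis.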

Conclusion

DLDSR has exactly the same problems as regular DSR. The biggest one is that the display drops to 60Hz in a lot of games, a problem that goes all the way back to Windows 7. I’m amazed nVidia still haven’t fixed this.

Other problems are that the UI in some games is too small, and DSR also messes with display scaling and/or the position of background windows.

In my opinion DLDSR is a complete waste of time.
