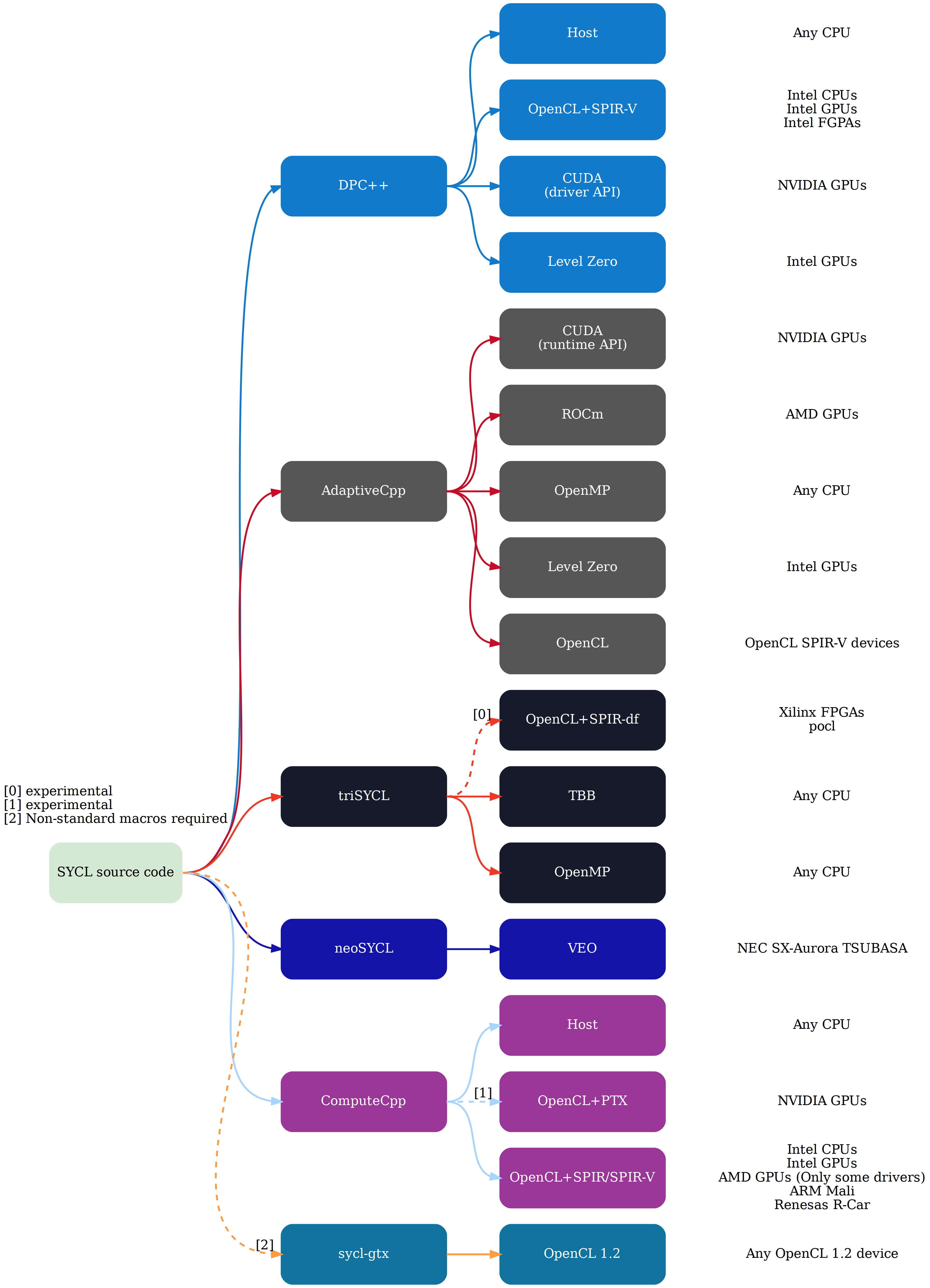

GPGPU for consumer workflows is basically dead, because we never got a good API that was well supported across all GPUs. Hell, OpenCL has had to pull features back out in an effort to get better support: they've basically abandoned OpenCL 2, and OpenCL 3.0 went back to building off 1.2, with the 2.x features made optional.

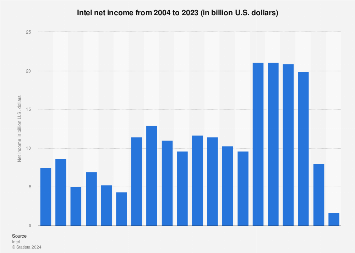

CUDA is great for applications where you control the target platform, like running on a specific supercomputer, a workstation that is guaranteed to have a Quadro, or a device that you ship with an Nvidia GPU. But when the vast majority of consumer devices run integrated graphics, and a sizeable minority of the gamer market runs on AMD, the economics just don't work. Why spend developer time on a proprietary API that most users can't benefit from?