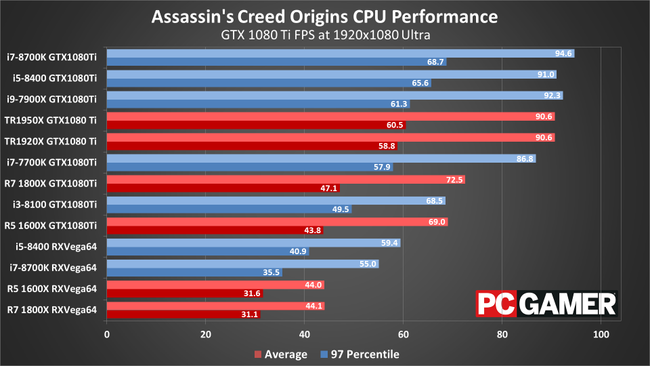

I was just browsing for the latest Assassin's Creed Origins benchmarks with the 1.03 patch and came across this:

http://www.pcgamer.com/assassins-creed-origins-performance-guide/

What concerns me most is that, on a game engine that scales very well across threads, Ryzen still seems to post disproportionately poor scores. By "disproportionate" I mean in terms of relative IPC: it shouldn't be that far behind the Intel chips. Is there some part of the Ryzen architecture that holds it back in certain games? Are some games just poorly optimised for Ryzen, or is there a technical flaw or bottleneck in Ryzen's design that makes it struggle in games like AC:O?

Please keep this thread civil. I'm not trying to bash Ryzen here; I'm looking for a technical discussion of why Ryzen's gaming performance can be inconsistent depending on the game engine.

On a side note, Threadripper does surprisingly much better than Ryzen in this game. Could the extra threads really make that much difference, or is there another explanation? Higher platform memory bandwidth, perhaps?

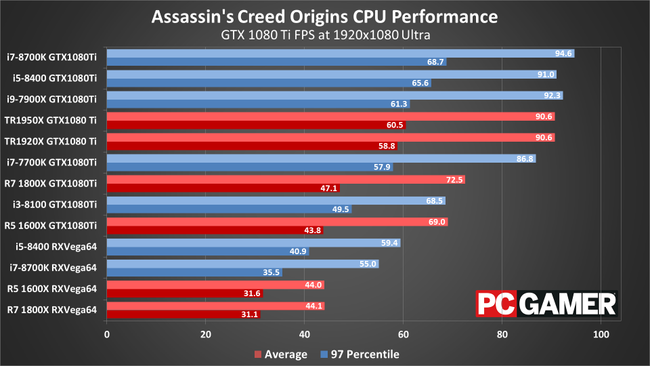