Question Is 10GB of Vram enough for 4K gaming for the next 3 years?



As long as the PC has at least 16 GB of VRAM, and 16 GB of main RAM, it will not need to prefetch any more than a console.

This is misleading, or a complete misunderstanding. The game code itself and the libraries it uses need to load into memory; that's how programs run. There's also a ton of memory usage outside of graphics: the AI, the calculations, basically everything happening in the game needs some sort of variable held in memory. Bring up Task Manager and look at the memory usage of your programs and games. The game itself will be using multiple GBs, and the OS also uses memory and keeps some in reserve. Bad things happen when the system runs out of memory. The 16GB of memory in the console will never be fully used by the GPU. In fact, the Series X has a weird memory setup where 10GB has 560 GB/sec of bandwidth and the other 6GB has 336 GB/sec. That tells me the design reserves 10GB for graphics and 6GB for the game and OS. I'm not even sure the full 10GB will go to graphics, actually; some games take up a lot of memory, and I'm not sure 6GB will be enough for both the game and OS.

^ Can't you also fix the stuttering by getting a real GPU instead of one with 4-year-old specs?

No, the stuttering is reported on new GPUs too, because of the memory configuration. The default of .8 (I think this is 80% of something, the total GPU memory?) is too high and it artificially causes unnecessary memory swaps. Besides, if the stuttering goes away because of a memory config change, the power of the GPU doesn't matter.

psolord

Platinum Member

- Sep 16, 2009

- 2,142

- 1,265

- 136

Thanks for the link, Digital Foundry is awesome.

The video shows that 2060 Super cards have better raytracing than the consoles on this particular game. This is fine, Watch Dogs Legion is a ray-tracing showcase for nVidia where every surface is reflective on purpose.

Aside from the RT, please note the differences that I mentioned in my initial message.

These are with the same configuration files and have nothing to do with RT whatsoever.

Those reflections are really beautiful on PC, but overall the game is not, just look at those ugly pipes everywhere. It doesn't hold a candle to the Unreal 5 demo, and it shows: as said in the DF video, WD:L is built on top of the same old engine as the previous games.

It's certainly not using Mesh Shaders and Sampler Feedback like the U5 Demo. Those, combined with a fast SSD on an optimized path to GPU memory, are the real game changers exclusive to the consoles for now. Just watch the Mark Cerny PS5 presentation again, when he talks about assets streaming. Once the usage of this kind of technique becomes common, current PCs will have to adapt somehow.

Ah yes, presentations. I also remember the E3 PS3 presentation... remember what they were showing? Meaning, don't believe everything console makers promise, because they always promise the stars and you end up with an LED light.

The DEMO itself has been run faster on a 2080 equipped laptop.

GeForce 2070 Super and NVMe SSD can run Unreal Engine 5 demo on PC

Epic Games recently revealed Unreal Engine 5 to the world. The demo was running on PlayStation 5, but it turns out it'll run "pretty good" on a PC with an NVIDIA GeForce RTX 2070 Super and an SSD.

If you're interested in Unreal Engine 5 games on PC, you should invest in a powerful GPU — like something from our best graphics card roundup — and NVMe SSD later in 2021 when the engine launches and games are possibly available. An NVIDIA RTX 2080 laptop managed to run the demo at 40 frames per second.

And what kind of power does a 2080 laptop have? It could be one with half the power of a desktop one.

Don't forget the Sony-Epic collaboration.

Sony buys $250 million stake in Fortnite creator Epic Games

Sony and Epic have worked closely together for years.

Meaning, don't be so surprised that Sweeney was so impressed by the PS5. Remember Carmack back in the PS4 days, who said the consoles can provide double the performance compared to a PC? I hear this crap even today! Have you ever seen anything like that? Because I have seen the opposite! Come on guys, read between the lines and don't gobble up everything the net and corp agreements serve you.

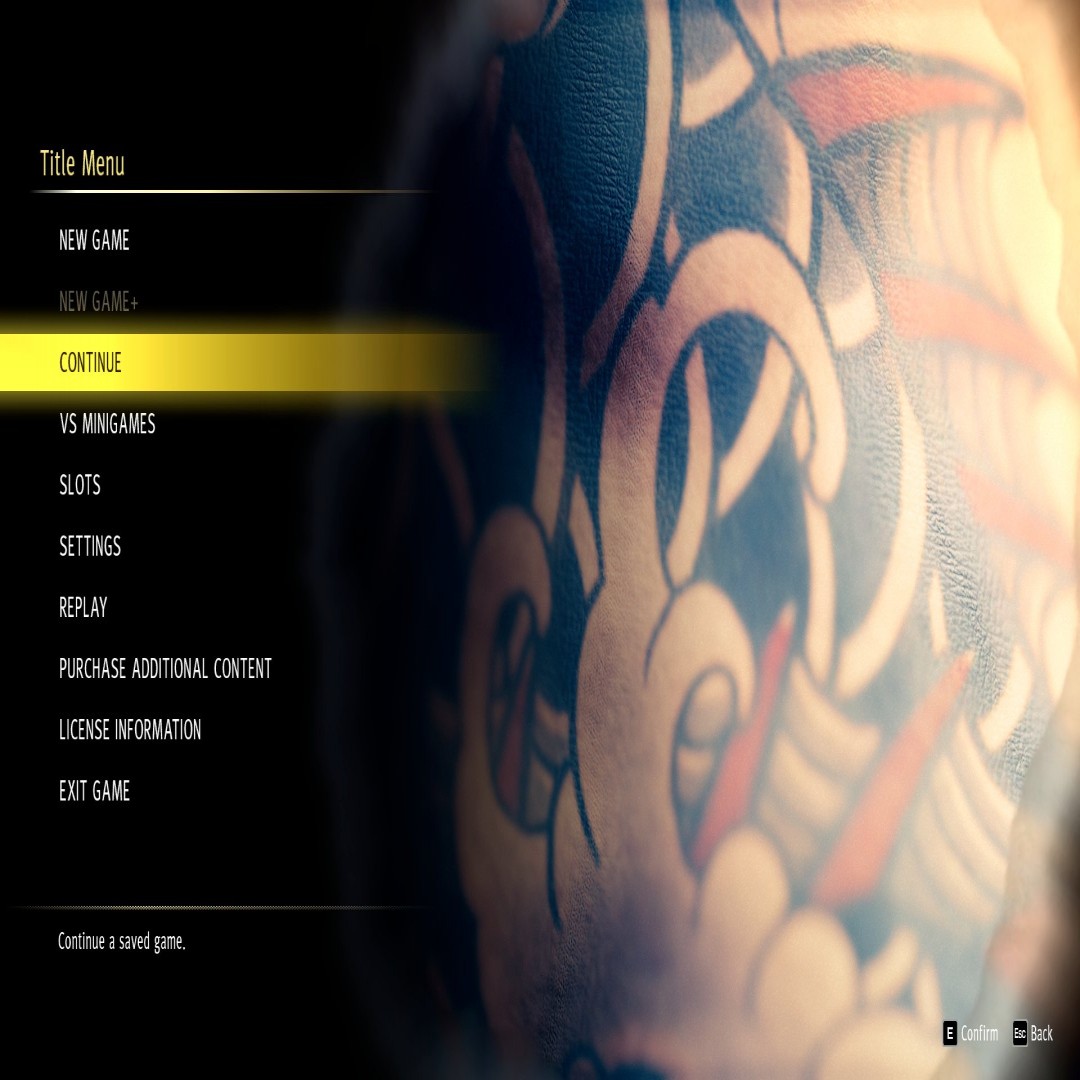

Case in point Yakuza Like a Dragon.

Again see Digital Foundry's test.

@6:49

1440p@60fps for normal mode, although there is a 1080p/60 mode for better sustainability of 60fps (lol?) but I'll leave it at 1440p/60 for this comparison.

And what do you need for 1440p/60 on the PC Ultra settings? A freakin 2060!

Here from gamegpu.

Yakuza Like a Dragon GPU/CPU test | RPG | GPU test

The Yakuza game series continues to develop actively, and now the developers have also decided to target the PC…

psolord


Let me put it another way: the launch of the new consoles is one of those very few events where we are guaranteed to have games using more VRAM going forward, and the best nVidia could do at the 700USD price point and below was LESS memory than the 3-year-old 1080 Ti.

I am not happy with the 10GBs of the 3080, but I DO belong to the camp that says 10GBs will be enough. Even so, if the RX 6800XT comes close to the 3080, is 50 coins cheaper AND has 60% more VRAM, I am not an idiot, I will go for the 6800XT for sure.

The 6800XT has double the specs of the PS5, while not having double the price. Yes you need the rest of the PC stuff, but I already have a lot of those.

Now, about saying that consoles are second rate hardware... maybe Nintendo consoles, because the Series X and the PS5 are quite something. The Series X consumes as much power as a 2060 Super alone (around 160w), while including 8 Zen2 cores (while not the latest, they're quite recent considering that game consoles have a large gestation period) plus really fast SSDs, chipset, and a GPU that AFAWK loses to a 2060S on RT but is pretty close to a 2080 on rasterisation. Not bad really for a fixed target that will be optimized to death in the coming years.

I wouldn't hold my breath on how great the next gen consoles are.

The 2060S is a 12nm last-gen card. I don't know what good it does to compare it with a next-gen console on a newer fab process. Let's wait for RDNA2 parts of equal performance to make a comparison, yeah?

As for the Zen2 cores, I wouldn't hold my breath either. You do know they have 8MB of L3, right? And do you know which Zen2 CPU this resembles? The 4700G! And do you know what kind of performance difference there is between an 8MB L3 Zen2 and a 32MB L3 one? This difference.

To make it easier for everyone, this is the performance delta.

NFS Heat: +11.11%

BF V: +17.36%

Far Cry New Dawn: +16.48%

Fallen Order: +20.65%

Resident Evil 3: +20.77%

AC Odyssey: +13.40%

Shadow of the Tomb Raider: +16.38%

Hitman 2: +15.18%

Overall average: +16.42% for the 32MB L3. Note that this is with the 4700G at 4.5GHz and the 3700X at 4.3GHz. Also don't forget that the consoles are essentially 7-core and not 8-core, since one core is reserved for the OS.
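For anyone who wants to check the arithmetic, here is a minimal sketch that recomputes the average from the eight per-game deltas listed above. The dictionary and function names are just illustrative; the numbers are copied from the list, and a plain arithmetic mean is assumed.

```python
# Per-game uplift (in percent) of the 32MB-L3 part over the 8MB-L3 part,
# copied from the list above.
deltas = {
    "NFS Heat": 11.11,
    "BF V": 17.36,
    "Far Cry New Dawn": 16.48,
    "Fallen Order": 20.65,
    "Resident Evil 3": 20.77,
    "AC Odyssey": 13.40,
    "Shadow of the Tomb Raider": 16.38,
    "Hitman 2": 15.18,
}


def mean_uplift(values):
    """Plain arithmetic mean of the percentage deltas."""
    values = list(values)
    return sum(values) / len(values)


average = mean_uplift(deltas.values())
print(f"Average uplift: +{average:.2f}%")
```

A geometric mean of the underlying frame rates would arguably be a stricter way to average, but with deltas this close together the result would barely move.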

Now if you also take into consideration the much lower clocks of the consoles plus their limited power budget, yeah I wouldn't be surprised to see quad cores running next gen games just fine.

psolord


Continuing from above *sigh*


Case in point, my 2500K running Godfall with my GTX 1070 at almost epic settings. (non monetized video, not clickbaiting)

The impressive thing here is not the 1070 running quite nicely, but rather the 2500K, which is pushing 50-60fps at 50% load. Meaning it would be pushing around 100fps at max load with a better video card. And that's on a 10-year-old CPU, paired with a 4.5-year-old graphics card.

Also where is the game for the PS4 hmmm?

I know for a fact that PS owners need to pay up 400/500+80 for the game, while I paid 40 for the game only (key shop).

Not to mention that it is still playable on my 6-year-old GTX 970. OK, at medium, but still pretty and still playable.

So, the question then is: how are PCs going to implement this same kind of asset streaming? Having more VRAM and prefetching data more aggressively? Having PCIe SSDs doing direct memory transfers to GPU memory using SAM? Using lower quality assets?

If you can upgrade your computer every year then this is not a problem, and I'd be glad for you. Unfortunately I know I can't upgrade so often, so I'd be really p***ed to spend 700USD on a video card just to end up using lower quality assets.

What kind of streaming do you think will be really required for next gen games? To put it differently, how big do you think games will get?

Let me give you another example and yes I ask to be forgiven because I will use again my own media, but honest to God I am not promoting anything here. My channel is hobbyist since 2006 and will remain that way. I just do what I do out of sheer love for PC gaming.

So... here is an article on some of the largest PC games.

25 Of The Biggest PC Games By File Size, Ranked

Which PC game is going to take up the biggest slice of your hard drive? These are. Here are the ten biggest PC games according to their file size.

I happen to have benchmarked some of those.

Quantum Break 175GB, i7-860 from 2009 + 1070

[video=youtube;rHf3v5HG3eg]https://www.youtube.com/watch?v=rHf3v5HG3eg[/video]

Gears of War 4 112GB, i7-860 from 2009 + 1070

[video=youtube;AmbnikWCYVE]https://www.youtube.com/watch?v=AmbnikWCYVE[/video]

CoD Black Ops III 113GB, 2500K from 2011 + 970

[video=youtube;dxDZkYXmDpk]https://www.youtube.com/watch?v=dxDZkYXmDpk[/video]

All of the above run from HDD and, as you can see, they run fine. So are you trying to tell me that even if games tripled in size compared to these, a simple SSD couldn't get the job done?

Even if you are considering something along the lines of Ratchet and Clank, how many games will be using portals to jump all over the place? Speaking for myself, I find this disorienting, but I'm willing to bet that games will retain their serial progression. Even so, we still have NVMe SSDs that are mighty fast, and we also have system RAM, which consoles do not have and which is plenty cheap nowadays.

You can easily get 32GBs of ram that will give the system 50GB/sec throughput. How does that even compare to any SSD, the console ones included?
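For a rough sense of scale, here is a minimal sketch of theoretical peak RAM bandwidth next to console SSD throughput. Only the roughly 50GB/sec figure comes from the post; the dual-channel DDR4-3200 configuration and the raw SSD figures (5.5 GB/s for the PS5, 2.4 GB/s for the Series X, as quoted by the vendors) are assumptions brought in for illustration.

```python
def ddr_bandwidth_gbs(transfers_mt_s, channels=2, bus_bytes=8):
    """Theoretical peak bandwidth in GB/s.

    transfers per second x bytes per transfer (64-bit bus) x channel count.
    """
    return transfers_mt_s * 1e6 * bus_bytes * channels / 1e9


# Assumption: dual-channel DDR4-3200, one common way to land near 50 GB/s.
ram_gbs = ddr_bandwidth_gbs(3200)

# Assumption: vendor-quoted raw (uncompressed) SSD throughput in GB/s.
ssd_raw_gbs = {"PS5 SSD": 5.5, "Series X SSD": 2.4}

print(f"Dual-channel DDR4-3200: {ram_gbs:.1f} GB/s")
for name, gbs in ssd_raw_gbs.items():
    print(f"{name}: {gbs} GB/s raw, RAM is about {ram_gbs / gbs:.1f}x faster")
```

This only compares peak transfer rates. The data still has to get into RAM from storage at some point, so the advantage applies to assets that are already cached in memory.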

If these are not the case, are we talking loading times? I mean even without the hardware decompressors, are we going to have a problem on the PC if we wait a couple seconds longer?


psolord


Seriously, Forza Horizon 4 loads faster on the PC, again according to a Digital Foundry video.

@3:55

They mention that the game needs to be recompressed to take advantage of the Velocity Architecture. So what? This will also come to the PC. And I play the game from HDD and it still runs fine. Sure, there are some waiting times, but they are barely enough to stretch my legs. Also, why do you need to recompress it if it already loads fine on the PC? How will this go? Create a need where there was none before?

There's too much being said about the SSDs and I will tell you why. They are trying to hide the rest of the shortcomings, like the ones I told you about above. It's always been like that, man. They busted our balls with the Cell processor and the RSX, and you had games of the time running twice as fast and at 1080p instead of 720p on an 8800GT. Meh.

edit: RTX to RSX, ffs


No, the stuttering is reported on new GPUs too, because of the memory configuration. The default of .8 (I think this is 80% of something, the total GPU memory?) is too high and it artificially causes unnecessary memory swaps. Besides, if the stuttering goes away because of a memory config change, the power of the GPU doesn't matter.

I think he was being facetious and suggesting that NVidia's VRAM capacities for everything below the 3090 are akin to GPUs from four years ago.

That's what I kind of thought... but even with the hard evidence that I have posted, I don't understand this kind of mentality. Whatever.

Rakehellion

Lifer

- Jan 15, 2013

- 12,181

- 35

- 91

I see Tarkov on this page mentioned. What are the other 2 please? Just curious.

I don't know if it was mentioned, but Final Fantasy XV does with texture packs.


So what's the consensus? If you want price for performance go with a 6800xt?

But if you want to run ray tracing go with a 3080 or wait until January for 3080ti.

The 3080Ti would most likely be a 6900 competitor, not a 6800XT competitor.


Buy whatever you can get, try it out, sell it at cost if you're not satisfied? Keep the free game(s) for your troubles?

16 GB is beneficial in Ghost Recon

In Ghost Recon Breakpoint, the 10 GB of the GeForce RTX 3080 is not enough for maximum performance, here the 16 GB of memory of the two Radeon graphics cards have a clear advantage. How does that show? With the average FPS, the Radeon graphics cards do not fare well, the Radeon RX 6800 XT is 10 percent slower than the GeForce RTX 3080. In the percentile FPS, the Radeon RX 6800 XT suddenly performs 2 percent better - there the game's highest texture level will fit in 16 GB of memory. That's why the Radeon RX 6800 can expand the 9 percent average FPS advantage over the GeForce RTX 3070 to a full 26 percent in percentile FPS. The 8 GB of the Nvidia graphics cards are even less than the 10 GB of the larger model. The Radeon can at least maintain a stable 30 FPS in Ultra HD, the GeForce RTX 3070 cannot.

AMD Radeon RX 6800 and RX 6800 XT review: clock speeds, benchmarks in Full HD, WQHD and Ultra HD, and ray tracing

AMD Radeon RX 6800 XT review: clock speeds, benchmarks in Full HD, WQHD and Ultra HD, and ray tracing / Test system and test methodology (www.computerbase.de)
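The quoted review leans on the gap between average FPS and percentile FPS, so here is a minimal sketch of how the two can diverge. The frame times are made-up illustrative data, and the 1% cut-off and the simple index-based percentile are assumptions; the review's exact methodology is not given in this thread.

```python
def average_fps(frame_times_ms):
    """Average FPS over a run: frames rendered divided by total time."""
    return 1000.0 * len(frame_times_ms) / sum(frame_times_ms)


def percentile_fps(frame_times_ms, worst_percent=1):
    """FPS at the (100 - worst_percent)th percentile frame time.

    Uses a simple index into the sorted frame times, so it is an
    estimate rather than an interpolated percentile.
    """
    ordered = sorted(frame_times_ms)
    index = min(len(ordered) - 1, len(ordered) * (100 - worst_percent) // 100)
    return 1000.0 / ordered[index]


# Hypothetical runs: same total time, but one has occasional long frames,
# the kind of hitch you might see when textures spill out of VRAM.
steady = [20.0] * 100
spiky = [18.0] * 95 + [58.0] * 5

for name, run in (("steady", steady), ("spiky", spiky)):
    print(f"{name}: avg {average_fps(run):.1f} FPS, "
          f"percentile {percentile_fps(run):.1f} FPS")
```

Both runs average the same frame rate, yet the spiky one drops sharply on the percentile metric, which is the kind of pattern the review describes between the two cards.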

sze5003

Lifer

- Aug 18, 2012

- 14,320

- 683

- 126

Based on VRAM alone, I would personally be more comfortable with a 6800xt. But I do want the ray tracing performance, and a 3080 is a no go for me with only 10GB. Gonna keep whatever card I end up with for a while.

I'm tempted to try a 6800xt with my gsync monitor and see how it fares. Although I would definitely go with a 3080Ti for the next 3 years.

I'm skeptical of the 6800xt ray tracing performance for the future. Regarding the performance compared to a 3080, it's super close and if rays weren't a thing I'd pick it right now.

If you have a GSync monitor I'd go with an NVidia card for that reason alone.

None of the cards released now are going to have good enough RT performance for the future. We probably need another two generations of doubling performance before we start to get there.


sze5003


That's how I'm leaning, so I guess I'll wait to see if the speculated 3080Ti is a thing. If not, I can put myself on the EVGA shopping queue for a 3080.


BestBuy has RTX cards popping in and out of stock currently. I just snagged a 3070 intended for my daughter's boyfriend's new build. If he decides he doesn't want it, I'm just going to offer it up for local trade for a 6800 or 6800XT and I'll throw in some cash.

So what's the consensus? If you want price for performance go with a 6800xt?

I think the consensus is just buy whatever one might happen upon in stock, and thank whatever deity you feel appropriate for said good fortune.


Do you know any that I can make sacrifices to in order to improve my chances of getting one?

I know someone who raises goats, but I'm not necessarily above going on a raid for some human captives if utterly necessary.

sze5003


Having wasted so much time and effort to finally get a PS5 console... I really am so tired of 2020 and this scarcity of products. I hate constantly fighting the bots/scalpers.

I doubt stock will be any better in January but I feel like I should put myself on EVGA's purchase list for a 3080. I just want to play cyberpunk with no compromises maxed out. Then sell that 3080 as soon as the 3080ti announcement comes out..wonder if I'll lose some money.

CastleBravo

Member

- Dec 6, 2019

- 185

- 424

- 136


EVGA is still working on providing 3080s to people who clicked the notify me button on launch day.


It's always like that with new consoles / products. I remember when the PS3 launched and you couldn't get those either. Hell, I stood outside overnight in the cold so I could pick up a Wii at launch, so it wasn't really any different a decade ago.

Getting anything at launch is more of a hassle than it's honestly worth. The only reason I bothered doing it for a Wii was because I hadn't done anything like that before and it was as much about the experience as getting the Wii.

Godfall using 14.7 GB of vram at 4k and 13.9 at 1440p with max settings and ray tracing with a 6800xt. I wonder how much of that is actually needed though. I guess we will see when they let Nvidia cards use ray tracing later.
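One rough way to look at those two numbers is to compare how the pixel count scales against how the reported VRAM scales. This is a minimal sketch that assumes "4k" means 3840x2160 and "1440p" means 2560x1440, and it only uses the two figures from the post; it cannot tell allocated memory apart from memory the game actually needs.

```python
def pixel_count(width, height):
    """Number of pixels in one frame at the given resolution."""
    return width * height


# Assumption: standard 16:9 resolutions for "4k" and "1440p".
pixel_ratio = pixel_count(3840, 2160) / pixel_count(2560, 1440)

# Reported VRAM use in GB, from the post above.
vram_4k_gb = 14.7
vram_1440p_gb = 13.9

vram_ratio = vram_4k_gb / vram_1440p_gb
extra_gb = vram_4k_gb - vram_1440p_gb

print(f"Pixels: {pixel_ratio:.2f}x more at 4K")
print(f"VRAM:   {vram_ratio:.3f}x more at 4K (+{extra_gb:.1f} GB)")
```

With 2.25x the pixels costing only about 6% more memory, most of the reported figure looks resolution-independent (textures, geometry, caches), which fits the suspicion that the game may simply be filling whatever VRAM is available.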
