Question: Is 10GB of VRAM enough for 4K gaming for the next 3 years?

Page 9 - Seeking answers? Join the AnandTech community: where nearly half-a-million members share solutions and discuss the latest tech.


StinkyPinky

Diamond Member

- Jul 6, 2002

- 6,986

- 1,283

- 126

That 20GB version is the one to get. I wouldn't ever have to think about VRAM again with a 20GB card. I've enjoyed that carefree experience with the 1080 Ti for almost 4 years, and I'd hate to be worrying about VRAM from day 1 of owning a new card. The 10GB version is a no-go and should be renamed the buyer's remorse version.

Exactly. Feels like the 3GB version of the 1060. I feel 12-16GB should be the sweet spot for high-end cards, but obviously they cannot do that on the 3080 and therefore took the cheaper option.

sze5003

Lifer

- Aug 18, 2012

- 14,320

- 683

- 126

That's what I'm thinking. Speaking of which, I need to start working on a bot script to try and get myself a 3090 next week, lol.

The 3080 w/ 20GB won't cost above $850 for the FE version.

Provided you can bot it.

Who am I kidding? I don't have any experience with that sort of thing despite being a developer. But it seems like something to look into for the future.

sze5003

Lifer

- Aug 18, 2012

- 14,320

- 683

- 126

This cracks me up

GeForce RTX 3080 get 420fps while gaming at 69hrtz my mom is the ceo of Nividia | eBay



And all this time I thought JHH was a man /s

Crazy world we live in!

StinkyPinky

Diamond Member

- Jul 6, 2002

- 6,986

- 1,283

- 126

I wonder if a 12GB 3090 will be released. It could be $150 cheaper, and I'd suggest that would be a popular card, since I think 12GB of VRAM is enough for the next 5 years (unlike, possibly, 10GB).

Heartbreaker

Diamond Member

- Apr 3, 2006

- 5,229

- 6,857

- 136

I wonder if a 12GB 3090 will be released. It could be $150 cheaper, and I'd suggest that would be a popular card, since I think 12GB of VRAM is enough for the next 5 years (unlike, possibly, 10GB).

More likely, a 12GB version will be the 3080 Ti, arriving a little later when yields improve; a cheaper die and less VRAM would get the price down to $999.

sze5003

Lifer

- Aug 18, 2012

- 14,320

- 683

- 126

This is useful for checking stock. You need to have Node.js installed.

TheF34RChannel

Senior member

- May 18, 2017

- 786

- 310

- 136

That RTX 3070 is basically obsolete right out of the box, IMO. 8GB should be for 3060-class cards for 1080p-1440p gaming. Is 8GB even enough for 1440p moving forward?

Heck no!

I'll have to bring to your attention the fact that people don't buy brand-new "flagship" cards only to have to lower settings to get playable performance on day one! If the cards simply came with a couple more gigs of RAM, this wouldn't even be worth talking about. However, it is worth talking about, because games are already pushing past 8GB and often come close to it even at 1440p. That's today. There is a Marvel comics (or whatever) game that already blows past 10.5GB at 4K using HD textures. You're telling me people spending $700+ on a new "flagship" GPU won't be pissed when they get hitching because they had the audacity to use an HD texture pack in a new game?

Forget 3 years; what about 1 year from now? This isn't going to get any better, and no amount of Nvidia IO magical BS (that needs developer support, am I right?) is going to solve the 10GB problem. The 2080 Ti was their last-gen flagship. It had 11GB of RAM. If the 3080 is their new flagship, why did they think it was a good idea to reduce that to 10GB? When in the history of PC hardware has a new flagship release ever had less of a component as critical as RAM? The answer is *never*. The 3080 is not the flagship. It's a 2080 replacement, suitable for 1440p gaming over the next few years. It's not a legit 4K card moving forward.

8800GTS to 8800GTS G92: 640MB to 512MB. Had both, the former being my first high-end card.

But yeah, well said with your post!!!
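The HD-texture point in the post above is easier to judge with some rough arithmetic. What follows is a minimal sketch, not a measurement from any game: it assumes block-compressed BC7 textures (16 bytes per 4x4 texel block, i.e. 1 byte per texel) versus uncompressed RGBA8 (4 bytes per texel), each with a full mip chain. The function names `level_bytes` and `texture_bytes` are hypothetical helpers for illustration.

```python
# Rough VRAM estimate for one 2D texture with a full mip chain.
# Assumptions (illustrative only, not taken from any specific game):
#   "rgba8": uncompressed, 4 bytes per texel
#   "bc7":   block-compressed, 16 bytes per 4x4 texel block (1 byte/texel)


def level_bytes(width: int, height: int, fmt: str) -> int:
    """Bytes used by a single mip level."""
    if fmt == "rgba8":
        return width * height * 4
    if fmt == "bc7":
        # Every level, even 2x2 or 1x1, occupies at least one 4x4 block.
        blocks_x = (width + 3) // 4
        blocks_y = (height + 3) // 4
        return blocks_x * blocks_y * 16
    raise ValueError(f"unknown format: {fmt}")


def texture_bytes(width: int, height: int, fmt: str) -> int:
    """Bytes for the base level plus every mip level down to 1x1."""
    total = 0
    w, h = width, height
    while True:
        total += level_bytes(w, h, fmt)
        if w == 1 and h == 1:
            return total
        w = max(1, w // 2)
        h = max(1, h // 2)


if __name__ == "__main__":
    for fmt in ("bc7", "rgba8"):
        mib = texture_bytes(4096, 4096, fmt) / 2**20
        print(f"4096x4096 {fmt}: {mib:.2f} MiB")
```

Under these assumptions a 4096x4096 BC7 texture takes 16 MiB for the base level and about 21.3 MiB with mips (the chain adds roughly a third), so a texture pack adding on the order of a hundred such textures would need about 2GB, which is the gap between an 8GB and a 10GB card.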

JohanYello

Junior Member

- Sep 23, 2020

- 5

- 0

- 6

sze5003

Lifer

- Aug 18, 2012

- 14,320

- 683

- 126

The 8800 I think was one of my first Nvidia cards. I came across the retail box last year when I was moving. Good memories.

Heck no!

8800GTS to 8800GTS G92: 640MB to 512MB. Had both, the former being my first high-end card.

But yeah, well said with your post!!!

elhefegaming

Member

- Aug 23, 2017

- 157

- 70

- 101

I'm gonna game at 1440p

Based on what I read here, I'm gonna get the 3080 10GB and save the difference for a CPU upgrade in a year.


TheF34RChannel

Senior member

- May 18, 2017

- 786

- 310

- 136

The 8800 I think was one of my first Nvidia cards. I recently came across the retail box last year when I was moving. Good memories.

I went from an Nvidia FX5200 to that 8800GTS 640. It was like going from crawling straight into a rocketship.

But yeah, that 20GB model: given the price gap, surely it'll be 1,000-1,100 USD, a bit steep for my liking.

I'm gonna game at 1440p

Based on what I read here, I'm gonna get the 3080 10GB and save the difference for a CPU upgrade in a year.

I'm in the same boat. 1440p 144Hz; I don't plan on moving to 4K anytime soon and am not worried about 10GB at 1440p. I figure I'll be using a 4080 or an RDNA3 GPU by the time I make the jump past 1440p anyway.
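For the 1440p-versus-4K decision in the posts above, a quick pixel count helps put the resolution jump in perspective. This is a minimal sketch assuming 4 bytes per pixel (e.g. an RGBA8 buffer); the `RESOLUTIONS` table and the function names are illustrative, and real engines allocate many render targets in varying formats, so the per-buffer figures are lower bounds rather than total VRAM use.

```python
# Pixel counts and the size of ONE render target at the resolutions
# mentioned in this thread. Assumption: 4 bytes per pixel (e.g. RGBA8).
# Engines keep many buffers in various formats, so these are per-buffer
# lower bounds, not a prediction of total VRAM use.

RESOLUTIONS = {
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "3440x1440 ultrawide": (3440, 1440),
    "4K": (3840, 2160),
}


def pixels(name: str) -> int:
    """Total pixel count for a named resolution."""
    width, height = RESOLUTIONS[name]
    return width * height


def render_target_mib(name: str, bytes_per_pixel: int = 4) -> float:
    """Size in MiB of a single render target at that resolution."""
    return pixels(name) * bytes_per_pixel / 2**20


if __name__ == "__main__":
    for name in RESOLUTIONS:
        ratio = pixels(name) / pixels("1440p")
        print(f"{name:>20}: {pixels(name):>9,} px, "
              f"{render_target_mib(name):6.2f} MiB per target, "
              f"{ratio:.2f}x 1440p")
```

4K has exactly 2.25x the pixels of 1440p, yet one 32-bit 4K buffer is only about 31.6 MiB. That suggests the resolution jump costs VRAM mainly through the many per-frame buffers an engine keeps and the higher-detail textures that tend to accompany 4K settings, rather than through any single framebuffer.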

sze5003

Lifer

- Aug 18, 2012

- 14,320

- 683

- 126

I'm currently at 3440x1440 @ 120Hz, so unless they come out with a 4K ultrawide I'm not changing resolution anytime soon. Ultrawide has been super productive for me working from home anyway.

I went from an Nvidia FX5200 to that 8800GTS 640. It was like going from crawling straight into a rocketship.

But yeah, that 20GB model: given the price gap, surely it'll be 1,000-1,100 USD, a bit steep for my liking.

While $1,000-1,100 sucks to pay for the 20GB model, I'm not planning on getting rid of it until 3 years down the line, and if it means I can crank all settings to max and enjoy the latest games with no issues, it's most likely worth it for that time frame.

elhefegaming

Member

- Aug 23, 2017

- 157

- 70

- 101

The 3080 w/ 20GB won't cost above $850 for the FE version.

Provided you can bot it.

I doubt it; look at GDDR6 prices from a year ago.

I think it will be $899.

- Jan 8, 2011

- 10,734

- 3,454

- 136

I'm not liking the reports of stability issues beyond 2GHz. It just feels like the chip is being pushed to its absolute limits and is on the ragged edge of failure. Combined with the RAM capacity issues, this is less and less attractive by the day.

elhefegaming

Member

- Aug 23, 2017

- 157

- 70

- 101

I'm not liking the reports of stability issues beyond 2GHz. It just feels like the chip is being pushed to its absolute limits and is on the ragged edge of failure. Combined with the RAM capacity issues, this is less and less attractive by the day.

Isn't the clock like 1.8GHz? I couldn't care less about OCing this.

TheF34RChannel

Senior member

- May 18, 2017

- 786

- 310

- 136

I'm not liking the reports of stability issues beyond 2GHz. It just feels like the chip is being pushed to its absolute limits and is on the ragged edge of failure. Combined with the RAM capacity issues, this is less and less attractive by the day.

I look at it this way: every chip has its limits, and it appears 2GHz is this one's. So we shouldn't go beyond that (do we even need to?). To me it's not an issue but merely a property of that chip family.

Heartbreaker

Diamond Member

- Apr 3, 2006

- 5,229

- 6,857

- 136

I look at it this way: every chip has its limits, and it appears 2GHz is this one's. So we shouldn't go beyond that (do we even need to?). To me it's not an issue but merely a property of that chip family.

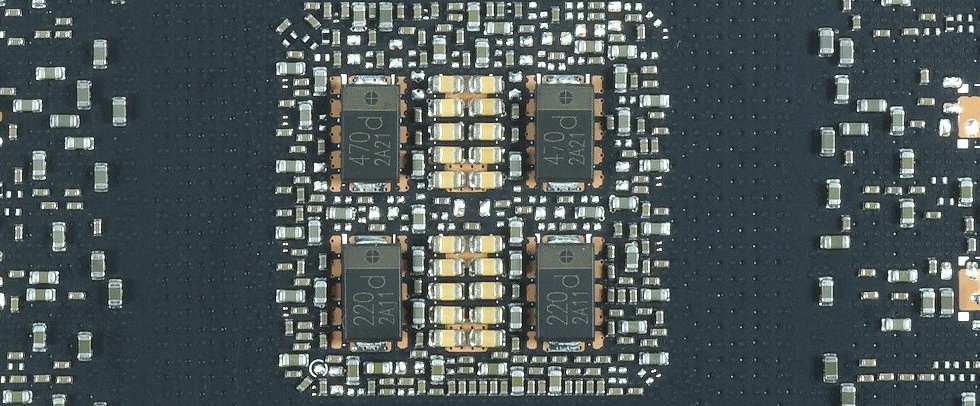

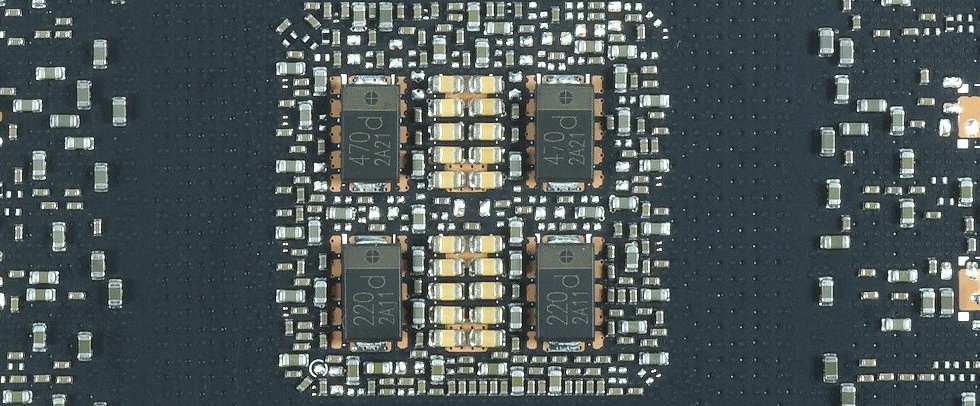

Igor's Lab posted an investigation that implicates the capacitor setup. No idea if this is reality or a red herring:

The possible reason for crashes and instabilities of the NVIDIA GeForce RTX 3080 and RTX 3090 | Investigative | igor´sLAB

Not only the editors and testers were surprised by sudden instabilities of the new GeForce RTX 3080 and RTX 3090, but also the first customers who were able to get board partner cards from the first…

TheF34RChannel

Senior member

- May 18, 2017

- 786

- 310

- 136

Igor's Lab posted an investigation that implicates the capacitor setup. No idea if this is reality or a red herring:

The possible reason for crashes and instabilities of the NVIDIA GeForce RTX 3080 and RTX 3090 | Investigative | igor´sLAB

Not only the editors and testers were surprised by sudden instabilities of the new GeForce RTX 3080 and RTX 3090, but also the first customers who were able to get board partner cards from the first…

www.igorslab.de

Excellent reply, I'll check it out. Maybe Samsung's lackluster 8nm process plays a role too? Either way, this may change my earlier opinion.

CakeMonster

Golden Member

- Nov 22, 2012

- 1,650

- 829

- 136

Do we know the Samsung 8nm is bad? I see it being repeated but do we have any chips that are remotely similar to compare performance to?

- Jan 8, 2011

- 10,734

- 3,454

- 136

Apparently, AIBs cheaping out with junk capacitors to save a few bucks is limiting the cards' ability to fully boost to their higher bins. I'm throwing this right out there now: they discovered the issue early, but not early enough to fix it before launch, thus explaining the extremely limited quantity of cards on release. Fewer RMAs, damage control, yada yada. They knew it was going to be discovered, and NO ONE spending $800 wants to get stuck with a card that won't give its best performance because it was built BELOW reference spec to save a buck. Whada pile of crap.

Actually, does that explain the limited quantity? I don't think it does, because some vendors built good cards and the FE cards are good, and those are also in short supply. Oh well, whatevs. I ain't buying one until I'm convinced they aren't all broken, lol.


Panasonic SP-Caps are hardly junk or cheap caps. Polymer caps often serve a different purpose than a ceramic cap, but a single SP-Cap is often more expensive than a bunch of ceramics. Unfortunately I can't understand the video, but his explanation is pretty garbage; saying that any board designer would know ceramics work better than low-ESR electrolytics is like saying any car buff knows Corvettes are better than Peterbilts.

Apparently, AIBs cheaping out with junk capacitors to save a few bucks is limiting the cards' ability to fully boost to their higher bins. I'm throwing this right out there now: they discovered the issue early, but not early enough to fix it before launch, thus explaining the extremely limited quantity of cards on release. Fewer RMAs, damage control, yada yada. They knew it was going to be discovered, and NO ONE spending $800 wants to get stuck with a card that won't give its best performance because it was built BELOW reference spec to save a buck. Whada pile of crap.

Actually, does that explain the limited quantity? I don't think it does, because some vendors built good cards and the FE cards are good, and those are also in short supply. Oh well, whatevs. I ain't buying one until I'm convinced they aren't all broken, lol.
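The ESR part of the SP-Cap versus ceramic argument above rests partly on a simple parallel-resistance rule: N identical capacitors in parallel have 1/N the ESR of one. Below is a minimal sketch of that rule only. The component values are hypothetical placeholders, not datasheet figures, and the model deliberately ignores inductance (ESL), capacitance and frequency response, which is where the "different purpose" of polymer versus ceramic caps mentioned in the post comes in.

```python
# Equivalent series resistance (ESR) of capacitors placed in parallel.
# Model: each cap's ESR is treated as a plain resistor, so
#     1 / ESR_total = sum(1 / ESR_i)
# This ignores ESL, capacitance and frequency dependence, all of which
# matter for real GPU power delivery. Values below are hypothetical.


def parallel_esr(esr_values_mohm: list[float]) -> float:
    """Combined ESR (milliohms) of capacitors wired in parallel."""
    if not esr_values_mohm:
        raise ValueError("need at least one capacitor")
    return 1.0 / sum(1.0 / r for r in esr_values_mohm)


if __name__ == "__main__":
    # Hypothetical placeholders: one bulk polymer cap vs. ten ceramics.
    polymer = parallel_esr([6.0])
    mlcc_array = parallel_esr([5.0] * 10)
    print(f"single polymer cap:    {polymer:.2f} mOhm")
    print(f"ten MLCCs in parallel: {mlcc_array:.2f} mOhm")
```

The point of the sketch is only that a bank of small parts can reach a low combined ESR even when each part on its own is unremarkable; it says nothing about which layout is better for a given board.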

Heartbreaker

Diamond Member

- Apr 3, 2006

- 5,229

- 6,857

- 136

Excellent reply, I'll check it out. Maybe Samsung's lackluster 8nm process plays a role too? Either way, this may change my earlier opinion.

Looks like confirmation from EVGA on the Capacitor issue:

https://forums.evga.com/Message-about-EVGA-GeForce-RTX-3080-POSCAPs-m3095238.aspx

...

During our mass production QC testing we discovered a full 6 POSCAPs solution cannot pass the real world applications testing. It took almost a week of R&D effort to find the cause and reduce the POSCAPs to 4 and add 20 MLCC caps prior to shipping production boards, this is why the EVGA GeForce RTX 3080 FTW3 series was delayed at launch. There were no 6 POSCAP production EVGA GeForce RTX 3080 FTW3 boards shipped.

But, due to the time crunch, some of the reviewers were sent a pre-production version with 6 POSCAP’s, we are working with those reviewers directly to replace their boards with production versions.

EVGA GeForce RTX 3080 XC3 series with 5 POSCAPs + 10 MLCC solution is matched with the XC3 spec without issues.

Also note that we have updated the product pictures at EVGA.com to reflect the production components that shipped to gamers and enthusiasts since day 1 of product launch.

Once you receive the card you can compare for yourself, EVGA stands behind its products!

Thanks

EVGA


AnandTech is part of Future plc, an international media group and leading digital publisher. Visit our corporate site.

© Future Publishing Limited Quay House, The Ambury, Bath BA1 1UA. All rights reserved. England and Wales company registration number 2008885.