Question: Is 10GB of VRAM enough for 4K gaming for the next 3 years?

- Thread starter moonbogg



Microsoft Flight Simulator (2020): PC graphics performance benchmark review (Page 4)

Are you ready to take a short flight with us? We look at one of the most anticipated games of 2020, Microsoft Flight Simulator, in a PC graphics performance and PC gamer way. We'll test the game on t...

We also ran a cross-check with the GeForce RTX 2080 Ti, and flying over a dense London resulted in full graphics memory usage close to 11GB.

IMO no.

- Jan 8, 2011

- 10,635

- 3,095

- 136

Yes, it is enough for 1440p and even for 4K. Again, let me remind you that PC games have various graphics presets like Low, Medium, High, Very High and Ultra.

Ultra is useless, as it is just for showing off. 8GB is enough for 4K at Medium or High settings unless you can prove otherwise.

I'll have to bring to your attention the fact that people don't buy brand new "flagship" cards just to have to lower settings to get playable performance on day one! If the cards simply came with a couple more gigs of RAM, this wouldn't even be worth talking about. However, it is worth talking about, because games are already pushing past 8GB and often getting close to it at 1440p. That's today. There is a Marvel Comics (or whatever) game that already blows past 10.5GB at 4K using HD textures. Are you telling me people spending $700+ on a new "flagship" GPU won't be pissed when they get hitching because they had the audacity to use an HD texture pack in a new game?

Forget 3 years; what about 1 year from now? This isn't going to get any better, and no amount of Nvidia IO magical BS (that needs developer support, am I right?) is going to solve the 10GB problem. The 2080 Ti was their last-gen flagship. It had 11GB of RAM. If the 3080 is their new flagship, why did they think it was a good idea to reduce it to 10? When in the history of PC hardware has a new flagship release ever had less of a component as critical as RAM? The answer is *never*. The 3080 is not the flagship. It's a 2080 replacement, suitable for 1440p gaming over the next few years. It's not a legit 4K card moving forward.

sze5003

Lifer

- Aug 18, 2012

- 14,183

- 625

- 126

Was that actual usage measured by an app in the background? Because if it showed orange in the game's settings page, I don't think that is memory that is actually used, but rather what the game "thinks" it will need. But I do agree: if you want all the bells and whistles on at higher than 1080p with ray tracing, you'll feel safer with more than 10GB.

I went back to Resident Evil 2 remake for another playthrough today. Full ultra 3440x1440, 12.5GB of VRAM usage and an orange warning (my card has 16GB). Marvel Avengers spiked above 8GB as well with settings below max. Just 10GB feels way too low for anything above 1080p to me.

psolord

Golden Member

- Sep 16, 2009

- 1,928

- 1,194

- 136

I went back to Resident Evil 2 remake for another playthrough today. Full ultra 3440x1440, 12.5GB of VRAM usage and an orange warning (my card has 16GB). Marvel Avengers spiked above 8GB as well with settings below max. Just 10GB feels way too low for anything above 1080p to me.

I did two tests on RE3 remake a while back. One on my 7950 and one on my GTX 970.

I uploaded the videos if anyone cares (not clickbaiting; non-monetized, non-sponsored, hobbyist channel).

The game estimates 12.34GB for maxed settings, yet both the 7950 with 3GB and the 970 with *ahem* 3.5GB did OK.

I tend to think that Capcom overestimates the VRAM requirements.

That is without ray tracing, which will come later.

Godfall is running at 70-100fps at 4K Ultra/Epic on the 3080. So it's OK, I guess.

It runs at 1440p/60 or 4K/30 on the PS5, I think, so it's A-OK...

Going back to my #6 point: take a 4K screen (3840*2160). An uncompressed image that size at 32 bits of color per pixel is 3840*2160*32 = 265,420,800 bits = 33,177,600 bytes ≈ 33.2MB, so 1GB can hold about 30 of these images. That's uncompressed. Are game developers so unoptimized that they're just using graphic assets without thinking about memory, optimizations, and reuse?
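The arithmetic above is easy to check. A minimal Python sketch that only restates the post's own numbers; note that "1GB" gives slightly different answers depending on whether it means 10^9 bytes or 2^30 bytes:

```python
# Size of one uncompressed 4K frame at 32 bits per pixel, and how many
# such frames fit in 1 GB (10**9 bytes) versus 1 GiB (2**30 bytes).
WIDTH, HEIGHT = 3840, 2160
BITS_PER_PIXEL = 32


def frame_bytes(width: int, height: int, bits_per_pixel: int) -> int:
    """Bytes needed for one uncompressed image."""
    return width * height * bits_per_pixel // 8


size = frame_bytes(WIDTH, HEIGHT, BITS_PER_PIXEL)
print(f"{size:,} bytes = {size / 1e6:.1f} MB")  # 33,177,600 bytes = 33.2 MB
print(f"frames per GB:  {10**9 / size:.1f}")     # 30.1
print(f"frames per GiB: {2**30 / size:.1f}")     # 32.4
```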

I guess I could make a game where each of my on-screen characters uses a unique texture file, where I need the entire file even if only a fraction of it will be displayed. Each inanimate object would also use distinct textures. Instead of 4K, why not use 16K-quality assets? That will make it look super uber detailed on a 1080p screen, right? Sure, memory will become a problem.

Games do compress textures, of course, and employ a lot of different tricks to save memory. The DirectX preferred texture formats are DXT1 for RGB textures and DXT5 for RGBA textures. A 4096x4096 texture consumes, respectively, 10.7MB and 21.3MB, including the generated mipmaps. But, games use textures for many things, even on the same object: color textures, normal maps, smoothness/metallic maps, subsurface scattering maps, ambient occlusion maps, detail textures, etc. Some of those can be combined in a single texture, but it's not uncommon for games to use 3 or more textures for a single object. Every object needs those maps, since they're crucial to make the object react realistically with the scene's light sources.
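The 10.7MB and 21.3MB figures check out if "MB" means binary megabytes (MiB) and the full mip chain is included. A small sketch, assuming the standard block layouts where DXT1 stores each 4x4 texel block in 8 bytes and DXT5 in 16 bytes; the three-map material at the end is a hypothetical example, not a specific game's asset:

```python
import math

# Bytes per 4x4 texel block for the two formats mentioned above.
BLOCK_BYTES = {"DXT1": 8, "DXT5": 16}


def texture_bytes(width: int, height: int, fmt: str, mipmaps: bool = True) -> int:
    """Size of a block-compressed texture, optionally including a full mip chain."""
    total = 0
    while True:
        # Every mip level occupies at least one 4x4 block, even at 2x2 or 1x1.
        blocks_x = max(1, math.ceil(width / 4))
        blocks_y = max(1, math.ceil(height / 4))
        total += blocks_x * blocks_y * BLOCK_BYTES[fmt]
        if not mipmaps or (width == 1 and height == 1):
            break
        width, height = max(1, width // 2), max(1, height // 2)
    return total


for fmt in ("DXT1", "DXT5"):
    print(f"{fmt}: {texture_bytes(4096, 4096, fmt) / 2**20:.1f} MiB")
# DXT1: 10.7 MiB
# DXT5: 21.3 MiB

# Hypothetical material: one DXT1 color map plus two DXT5 maps, all 4096x4096.
material = [("DXT1", 4096), ("DXT5", 4096), ("DXT5", 4096)]
print(f"material: {sum(texture_bytes(s, s, f) for f, s in material) / 2**20:.1f} MiB")
```

The last line shows why "3 or more textures per object" adds up quickly: a single such material already lands above 50 MiB.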

Games also generate many large textures at runtime as temporary buffers, each frame, for effects like shadows, motion blur, depth of field, ambient occlusion, etc. Those are compressed just to save bandwidth, but still consume just as much memory as uncompressed textures.
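To put a rough number on those per-frame buffers, here is a sketch that totals a hypothetical set of 4K render targets. The buffer list, formats, and the 4096x4096 shadow map are illustrative assumptions, not taken from any particular game or engine. Following the post, each allocation is counted at full width * height * bytes per pixel, regardless of any bandwidth compression:

```python
# Hypothetical 4K render-target set; names, formats and counts are assumptions.
W, H = 3840, 2160
MIB = 2**20

render_targets = {
    # name: (width, height, bytes per pixel)
    "HDR color (RGBA16F)": (W, H, 8),
    "depth (D32)": (W, H, 4),
    "G-buffer 0 (RGBA8)": (W, H, 4),
    "G-buffer 1 (RGBA8)": (W, H, 4),
    "G-buffer 2 (RGBA8)": (W, H, 4),
    "motion vectors (RG16F)": (W, H, 4),
    "shadow map (D32, 4096^2)": (4096, 4096, 4),
}


def target_bytes(width: int, height: int, bpp: int) -> int:
    # Bandwidth compression (if any) does not shrink the allocation itself.
    return width * height * bpp


total = sum(target_bytes(*spec) for spec in render_targets.values())
for name, spec in render_targets.items():
    print(f"{name:28s} {target_bytes(*spec) / MIB:7.1f} MiB")
print(f"{'total':28s} {total / MIB:7.1f} MiB")
```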

And then there is geometry and animation data, which also stays in VRAM, doubly so when RT is used. There is also the data used to simulate particle effects/NPCs on the GPU. The list of uses for VRAM can be quite large!

Anyway, next-gen consoles will be able to swap textures much faster than current PCs, so PCs will have to hold more textures in memory to compensate or offer worse image quality.

And that's another reason why nVidia messed up their line-up: a 500USD console will offer better image quality than a 700USD nVidia graphics card plus the added cost of a whole computer, just because they skimped on memory.

The same will be true when comparing Ampere to the corresponding Navi cards. The 3090 aside, the current Ampere line-up will age like fine milk. And don't get me wrong, I got a Radeon 5700, it's also fine milk.

mohit9206

Golden Member

- Jul 2, 2013

- 1,381

- 511

- 136

At what settings?

Usually there are many graphics presets in games namely Low, Medium, High, Very High and Ultra.

Since you are referring to 3080, I would imagine that anyone who buys that card would prefer to play at either Very High or Ultra. I am fairly confident that 10GB will be enough for both these settings at 4K for the next 3 years.

As for the 3070, one would have to make do with Very High or High toward the tail end of its life, as that one "only" has 8GB.


alcoholbob

Diamond Member

- May 24, 2005

- 6,271

- 323

- 126

Well you can look at it this way, console assets will be 4K native now, so your high or ultra texture quality should be the same between console and PC, and the Xbox Series X has 10GB of video optimized memory so that should be the cut-off point. It's enough for 4K. If there are higher ultra quality PC only options however, you probably want more than 10GB. This will probably be a very small subset of games.

Having only a yes and no option seems too limiting. My answers would be, "Yes for 99% of games, but there will be one or two before that point where 10 GB is a limiting factor."

How much of a deal that is probably depends on the game. I don't think it will be a major issue unless someone keeps the card for 5+ years and at that point people are likely running lower settings anyhow.

Even with consoles moving to 16 GB of memory now it's still going to take a while before games use that as a baseline.


- Jan 8, 2011

- 10,635

- 3,095

- 136

Having only a yes and no option seems too limiting. My answers would be, "Yes for 99% of games, but there will be one or two before that point where 10 GB is a limiting factor."

How much of a deal that is probably depends on the game. I don't think it will be a major issue unless someone keeps the card for 5+ years and at that point people are likely running lower settings anyhow.

Even with consoles moving to 16 GB of memory now it's still going to take a while before games use that as a baseline.

Choose the best answer and discuss the nuance in the comments.

Tup3x

Senior member

- Dec 31, 2016

- 965

- 951

- 136

The Xbox will have 10 GB of fast memory dedicated to the GPU. Games get to use 13.5 GB max, and the rest is for the OS and other background stuff. 10 GB will probably be fine for quite some time.

Having only a yes and no option seems too limiting. My answers would be, "Yes for 99% of games, but there will be one or two before that point where 10 GB is a limiting factor."

How much of a deal that is probably depends on the game. I don't think it will be a major issue unless someone keeps the card for 5+ years and at that point people are likely running lower settings anyhow.

Even with consoles moving to 16 GB of memory now it's still going to take a while before games use that as a baseline.

Saylick

Diamond Member

- Sep 10, 2012

- 3,172

- 6,410

- 136

I said "No" because I believe that in 3 years, we'll have console ports that leverage most, if not all, of the console's available RAM (~10-13 GB), and knowing how PCs are less optimized than consoles, those games will require more VRAM than they do on consoles.

The future of video games is to compress as much of the game assets as possible and then stream in the compressed data as fast as realistically possible, ideally in real time right before it's needed. Any data that needs to arrive faster than real-time streaming allows will need to be moved into RAM ahead of when it's actually needed, and given that PCs don't have dedicated compression and decompression hardware, I think it's likely that console-to-PC ports will take up a larger memory footprint than they do on consoles. In other words, games that are not designed to leverage SSDs and just-in-time streaming of assets will need to have more assets stored in RAM at any given time if they are to maintain the same asset quality as games that do leverage JIT streaming.
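The "must be in RAM ahead of time" point can be made concrete: whatever the drive cannot deliver before an asset is needed has to be resident already. A rough sketch; the HDD and SATA throughputs and the 2 GB within 0.5 s scenario are assumptions for illustration, while 2.4 GB/s is Microsoft's quoted raw figure for the Xbox Series X SSD. The `compression_ratio` parameter is a simplification that treats decompression as free:

```python
# Ballpark sequential read throughputs in GB/s (HDD and SATA values are assumptions).
DRIVES_GB_PER_S = {
    "HDD (assumed ~0.15 GB/s)": 0.15,
    "SATA SSD (assumed ~0.55 GB/s)": 0.55,
    "Xbox Series X SSD (2.4 GB/s raw)": 2.4,
}


def must_preload_gb(needed_gb: float, lead_time_s: float,
                    throughput_gb_s: float, compression_ratio: float = 1.0) -> float:
    """Data that cannot be streamed in time and so must already be in RAM/VRAM.

    compression_ratio > 1 models assets read compressed and expanded after the
    read, with decompression assumed to cost nothing.
    """
    streamable_gb = throughput_gb_s * compression_ratio * lead_time_s
    return max(0.0, needed_gb - streamable_gb)


# Hypothetical scenario: 2 GB of new assets needed within 0.5 s.
for name, bw in DRIVES_GB_PER_S.items():
    print(f"{name:34s} preload {must_preload_gb(2.0, 0.5, bw):.2f} GB")
```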


CastleBravo

Member

- Dec 6, 2019

- 119

- 271

- 96

I said "No" because I believe that in 3 years, we'll have console ports that leverage most, if not all, of the console's available RAM (~10-13 GB), and knowing how PCs are less optimized than consoles, those games will require more VRAM than they do on consoles.

The future of video games is to compress as much of the game assets as possible and then stream in the compressed data as fast as realistically possible, ideally in real time right before it's needed. Any data that needs to arrive faster than real-time streaming allows will need to be moved into RAM ahead of when it's actually needed, and given that PCs don't have dedicated compression and decompression hardware, I think it's likely that console-to-PC ports will take up a larger memory footprint than they do on consoles. In other words, games that are not designed to leverage SSDs and just-in-time streaming of assets will need to have more assets stored in RAM at any given time if they are to maintain the same asset quality as games that do leverage JIT streaming.

We know Ampere will support Microsoft DirectStorage, which is supposedly the JIT streaming tech in the Xbox Series X. I will be very disappointed in AMD if they don't include the same tech in RDNA2.

I chose no simply because if I were buying a 3080, I would be doing so to play at 4K with no compromises (as advertised), and I don't think that will be possible with 10 GB of RAM even over the next 2 years, at least not in a handful of AAA games. Now, I'm sure you could turn down some settings here and there, probably hardly notice a difference and be fine, but that's not what I would be buying the "flagship" model for.

Saylick

Diamond Member

- Sep 10, 2012

- 3,172

- 6,410

- 136

I wonder how games will manage then if you don't have a GPU or SSD capable of supporting JIT streaming. Will certain settings, e.g. high-res textures, simply be blocked off if the game detects that your system can't stream in the assets fast enough without bogging down the game? I suppose the alternative is that you get texture pop-in, but that can be alleviated by simply having more RAM, if I'm not mistaken, which goes against the idea of needing less RAM moving forward.

We know Ampere will support Microsoft DirectStorage, which is supposedly the JIT streaming tech in the Xbox Series X. I will be very disappointed in AMD if they don't include the same tech in RDNA2.

blckgrffn

Diamond Member

I wonder how games will manage then if you don't have a GPU or SSD capable of supporting JIT streaming. Will certain settings, e.g. high-res textures, simply be blocked off if the game detects that your system can't stream in the assets fast enough without bogging down the game? I suppose the alternative is that you get texture pop-in, but that can be alleviated by simply having more RAM, if I'm not mistaken, which goes against the idea of needing less RAM moving forward.

Exactly. That API works when you have hardware that meets a performance baseline. Is the game going to run a storage benchmark on the assets before it lets you flip that on? I mean, flipping that on when you have all your games on a spinning-rust drive is likely to have very little impact.

Even SATA SSDs are unlikely to have the raw throughput that games built around this API/console storage subsystems are expecting. To get similar performance, you'll need a solid SSD (for reads, anyway) on a PCIe interface for huge burst transfers with low latency.

That likely makes the number of normal gamer PCs that could really leverage this technology laughably low.

I bought a 2TB NVMe drive because I was tired of having any SATA connections at all in my rig, but I think it is much more fashionable right now to have an NVMe boot drive backed by one or more larger and cheaper SATA SSDs.

Practically speaking, most computers can really only run one NVMe drive at full bandwidth atm?

(To be clear, I feel like the inclusion of this API is nearly a throwaway at this point, to create some illusion of parity with the consoles. I could be wrong.)
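As for the "storage benchmark before it lets you flip that on" question, a crude version of such a check could look like the sketch below. It is hypothetical: `allow_fast_streaming` and the 2000 MB/s threshold are made up for illustration and are not part of DirectStorage or any game. It also carries a big caveat: a file already in the OS page cache reports RAM speed rather than drive speed, and the freshly written demo file here will almost certainly be cached.

```python
import os
import tempfile
import time


def read_throughput_mb_s(path: str, chunk_size: int = 8 * 1024 * 1024) -> float:
    """Sequential read throughput of one file in MB/s (10**6 bytes per MB)."""
    total = 0
    start = time.perf_counter()
    with open(path, "rb", buffering=0) as f:
        while True:
            chunk = f.read(chunk_size)
            if not chunk:  # empty bytes means end of file
                break
            total += len(chunk)
    elapsed = time.perf_counter() - start
    return total / 1e6 / max(elapsed, 1e-9)


# Hypothetical threshold for enabling a fast-streaming option.
FAST_STREAMING_MIN_MB_S = 2000.0


def allow_fast_streaming(path: str) -> bool:
    return read_throughput_mb_s(path) >= FAST_STREAMING_MIN_MB_S


# Demo on a 16 MiB temporary file (page-cache inflated, see caveat above).
with tempfile.NamedTemporaryFile(delete=False) as tmp:
    tmp.write(os.urandom(16 * 1024 * 1024))
try:
    mb_s = read_throughput_mb_s(tmp.name)
    fast_ok = allow_fast_streaming(tmp.name)
finally:
    os.remove(tmp.name)
print(f"{mb_s:.0f} MB/s, fast streaming allowed: {fast_ok}")
```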

CastleBravo

Member

- Dec 6, 2019

- 119

- 271

- 96

Exactly. That API works when you have hardware that meets a performance baseline. Is the game going to run a storage benchmark on the assets before it lets you flip that on? I mean, flipping that on when you have all your games on a spinning-rust drive is likely to have very little impact.

Even SATA SSDs are unlikely to have the raw throughput that games built around this API/console storage subsystems are expecting. To get similar performance, you'll need a solid SSD (for reads, anyway) on a PCIe interface for huge burst transfers with low latency.

That likely makes the number of normal gamer PCs that could really leverage this technology laughably low.

I bought a 2TB NVMe drive because I was tired of having any SATA connections at all in my rig, but I think it is much more fashionable right now to have an NVMe boot drive backed by one or more larger and cheaper SATA SSDs.

Practically speaking, most computers can really only run one NVMe drive at full bandwidth atm?

I don't have details on how the DirectStorage stuff works, but they might be able to leverage multiple tiers of storage, including system RAM, an SSD cache, and the actual storage (SSD or HDD) that the game is installed to, all of which will be compressed until it gets to the GPU. This way you would have uncompressed assets needed right now in the GPU's VRAM, compressed assets that might be needed very soon in system RAM, compressed assets that might be needed soonish on an SSD cache drive, and the rest of it compressed on spinning rust. Done right, PCs with 16+ GB of system RAM in addition to 8+ GB of VRAM might have a significant advantage over consoles that have less (V)RAM but maybe faster storage.
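The tiered idea above can be sketched as a simple placement rule keyed on how soon an asset is expected to be needed. This is purely hypothetical: the time thresholds, tier labels, and asset names are illustrative assumptions, not how DirectStorage actually decides anything.

```python
from dataclasses import dataclass

# Hypothetical tiers, ordered fastest first, each with a time-to-need limit in seconds.
TIERS = [
    (0.1, "VRAM (uncompressed)"),
    (2.0, "system RAM (compressed)"),
    (30.0, "SSD cache (compressed)"),
]
FALLBACK = "install drive, SSD or HDD (compressed)"


@dataclass
class Asset:
    name: str
    seconds_until_needed: float


def place(asset: Asset) -> str:
    """Return the slowest-acceptable tier for an asset, per the thresholds above."""
    for limit_s, tier in TIERS:
        if asset.seconds_until_needed <= limit_s:
            return tier
    return FALLBACK


for a in [Asset("wall_albedo", 0.0), Asset("next_room_mesh", 1.5),
          Asset("next_zone_textures", 20.0), Asset("far_zone_textures", 600.0)]:
    print(f"{a.name:20s} -> {place(a)}")
```

In this model, more system RAM simply lets the "soon" tier's threshold grow, which is the advantage the post suggests PCs could have.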


I didn't read the whole thing, but I think it's NVMe only?

Why NVMe?

NVMe devices are not only extremely high bandwidth SSD based devices, but they also have hardware data access pipes called NVMe queues which are particularly suited to gaming workloads. To get data off the drive, an OS submits a request to the drive and data is delivered to the app via these queues. An NVMe device can have multiple queues and each queue can contain many requests at a time. This is a perfect match to the parallel and batched nature of modern gaming workloads. The DirectStorage programming model essentially gives developers direct control over that highly optimized hardware.

DirectStorage is coming to PC - DirectX Developer Blog

Earlier this year, Microsoft showed the world how the Xbox Series X, with its portfolio of technology innovations, will introduce a new era of no-compromise gameplay. Alongside the actual console announcements, we unveiled the Xbox Velocity Architecture, a key part of how the Xbox Series X will...

devblogs.microsoft.com


I'd assume Nvidia's will just piggyback on Microsoft's.
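The NVMe-queue description quoted above is about keeping many read requests in flight at once instead of issuing them one at a time. The Python sketch below is only an application-level analogy, assuming a thread pool can stand in for queue depth; it does not use the DirectStorage API or talk to NVMe queues directly, and `queue_depth` is a hypothetical parameter rather than an NVMe or DirectStorage setting.

```python
import os
import tempfile
from concurrent.futures import ThreadPoolExecutor


def read_range(path: str, offset: int, length: int) -> bytes:
    # Each request opens its own handle so many requests can be in flight at once.
    with open(path, "rb") as f:
        f.seek(offset)
        return f.read(length)


def batched_read(path: str, requests: list[tuple[int, int]],
                 queue_depth: int = 32) -> list[bytes]:
    """Issue many (offset, length) reads with up to `queue_depth` outstanding.

    Results come back in request order (ThreadPoolExecutor.map preserves order).
    """
    with ThreadPoolExecutor(max_workers=queue_depth) as pool:
        return list(pool.map(lambda req: read_range(path, *req), requests))


# Demo: read a 4 MiB temporary file as 64 KiB requests.
CHUNK = 64 * 1024
data = os.urandom(4 * 1024 * 1024)
with tempfile.NamedTemporaryFile(delete=False) as tmp:
    tmp.write(data)
try:
    requests = [(off, CHUNK) for off in range(0, len(data), CHUNK)]
    chunks = batched_read(tmp.name, requests)
finally:
    os.remove(tmp.name)
print(len(requests), "requests,", sum(map(len, chunks)), "bytes read")
```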

StinkyPinky

Diamond Member

- Jul 6, 2002

- 6,766

- 784

- 126

I would not feel comfortable with 10GB of VRAM on a flagship-level card with new 4K consoles about to be released. That's just me, though. 8GB for the 3070 is just crazy IMO. I'd definitely get the 16GB version of that model.

Kinda reminds me of the 3GB vs. 6GB debate, and we all know how that panned out (spoiler alert: the 3GB version aged like milk).


sze5003

Lifer

- Aug 18, 2012

- 14,183

- 625

- 126

Gonna say no. I can tell MS Flight Sim 2020 is making my 1080 Ti chug. I've seen some other games like Resident Evil 2 and Call of Duty use up my VRAM pretty quickly if I want to turn up the settings like I used to.

I would prefer more, especially for 4K, but I currently game at 3440x1440 and sometimes in VR too.


StinkyPinky

Diamond Member

- Jul 6, 2002

- 6,766

- 784

- 126

Gonna say no. I can tell MS Flight Sim 2020 is making my 1080 Ti chug. I've seen some other games like Resident Evil 2 and Call of Duty use up my VRAM pretty quickly if I want to turn up the settings like I used to.

I would prefer more, especially for 4K, but I currently game at 3440x1440 and sometimes in VR too.

It's even worse when you consider that the 3070 is clearly a 4K card as well, but the VRAM limit really makes it a cautious choice.

I didn't read the whole thing, but I think it's NVMe only?

DirectStorage is coming to PC - DirectX Developer Blog

Earlier this year, Microsoft showed the world how the Xbox Series X, with its portfolio of technology innovations, will introduce a new era of no-compromise gameplay. Alongside the actual console announcements, we unveiled the Xbox Velocity Architecture, a key part of how the Xbox Series X will...devblogs.microsoft.com

I'd assume Nvidia's will just piggyback on Microsoft's.

The Nvidia guy in the Reddit AMA said it wasn't NVMe-exclusive, which is nice.

I'd wait for a review of it before I'd trust the Nvidia guy. Sure, it might somewhat work, but NVMe might be way faster?

Yeah, I might actually have misunderstood that. The storage might need to be PCIe-connected, which doesn't necessarily preclude SATA SSDs, but it might not be as simple.

I'd wait for a review of it before I'd trust the Nvidia guy. Sure, it might somewhat work, but NVMe might be way faster?

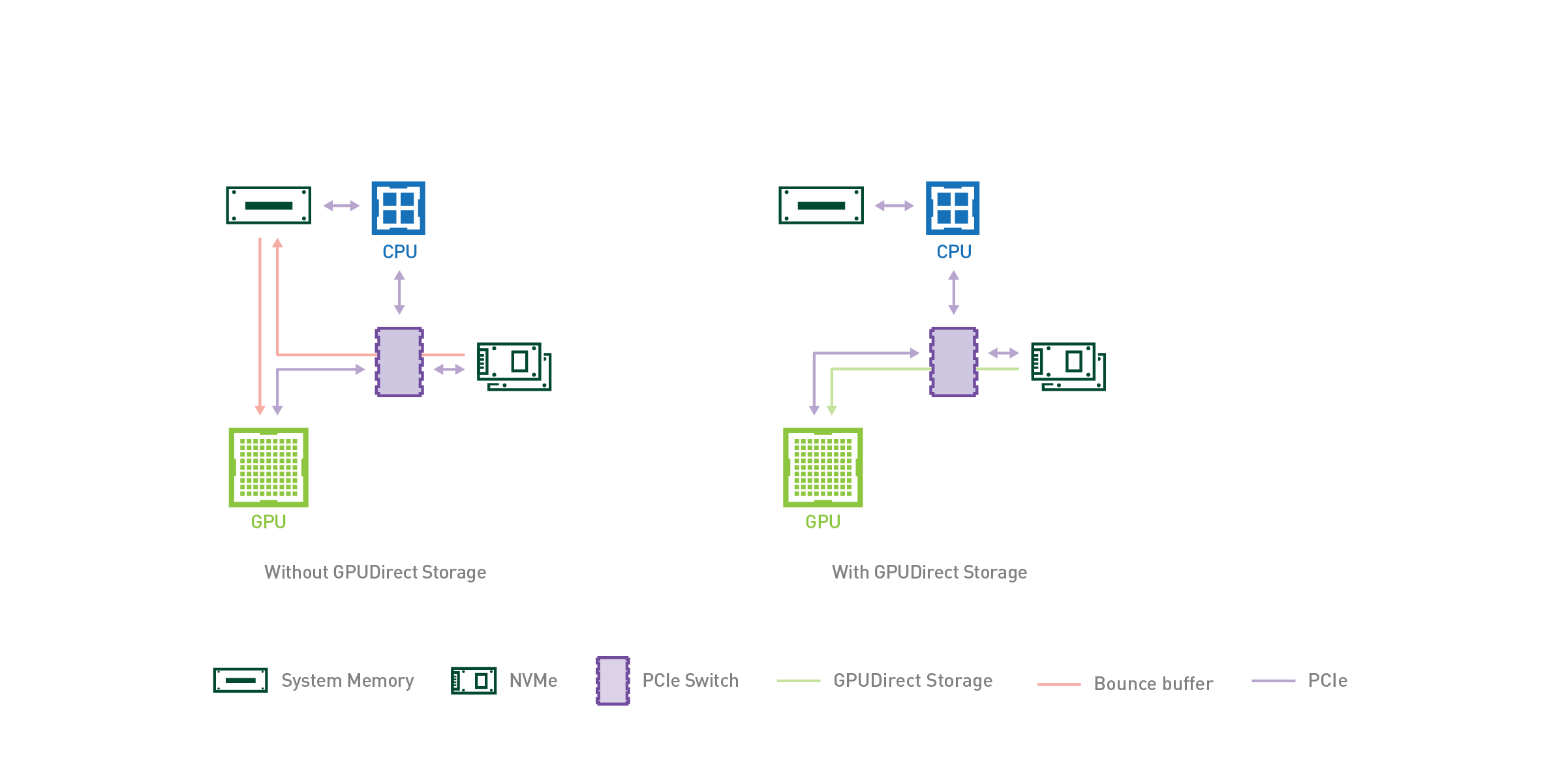

This is the page he linked about GPUDirect Storage:

GPUDirect Storage: A Direct Path Between Storage and GPU Memory | NVIDIA Technical Blog

As AI and HPC datasets continue to increase in size, the time spent loading data for a given application begins to place a strain on the total application’s performance. When considering end-to-end…

So, how does RTX IO work for NVMe drives that are directly connected to a CPU x4 interface? The data would obviously still need to be shuttled through the CPU, but it would still be a benefit, as it can move in compressed form and without the CPU having to process it beyond routing it to the x16 GPU interface?
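On the "it can move in a compressed form" point: if the drive-to-GPU path carries compressed data and decompression happens on the GPU, the uncompressed data delivered per second scales with the compression ratio. A back-of-the-envelope sketch, assuming decompression is never the bottleneck and the drive can saturate its link; the compression ratios are hypothetical, and the PCIe 3.0 figure uses 8 GT/s per lane with 128b/130b encoding.

```python
def pcie_gb_s(gigatransfers_per_s: float, lanes: int,
              encoding: float = 128 / 130) -> float:
    """Usable one-direction PCIe bandwidth in GB/s (PCIe 3.0/4.0 use 128b/130b)."""
    return gigatransfers_per_s * lanes * encoding / 8


def delivered_gb_s(link_gb_s: float, compression_ratio: float) -> float:
    """Uncompressed GB/s arriving at the GPU when the link carries compressed data."""
    return link_gb_s * compression_ratio


link = pcie_gb_s(8.0, 4)  # PCIe 3.0 x4, roughly 3.94 GB/s
for ratio in (1.0, 1.5, 2.0):  # hypothetical compression ratios
    print(f"{ratio:.1f}:1 -> {delivered_gb_s(link, ratio):.2f} GB/s of uncompressed data")
```

A real drive may not saturate its x4 link, in which case the drive's own read speed replaces `link` in the calculation.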


AnandTech is part of Future plc, an international media group and leading digital publisher. Visit our corporate site.

© Future Publishing Limited Quay House, The Ambury, Bath BA1 1UA. All rights reserved. England and Wales company registration number 2008885.