Assuming these prices are correct, this is one of the best examples of binning currently available.

11900K - $600 - 8C/16T, 16MB L3 - 10900K: 5.3 GHz on 2 cores, 4.9 GHz on all 10 cores

11900 - $510 - 8C/16T, 16MB L3 - 10900: 5.2 GHz on 2 cores, 4.6 GHz on all 10 cores

11700K - $485 - 8C/16T, 16MB L3 - 10700K: 5.1 GHz on 2 cores, 4.7 GHz on all 8 cores

11700 - $390 - 8C/16T, 16MB L3 - 10700: 4.8 GHz on 2 cores, 4.6 GHz on all 8 cores

Apart from yields and turbo modes, the sand is organized exactly the same in all of these. Well, I guess the sand is just a little better organized as you go up the stack.

Let's assume the 11th-generation turbo clocks will match the 10th-generation parts listed above, except for #1 below.

My questions/observations:

1. Since in the 11th generation both the 11900 and the 11700 will have 8 cores, there is nothing to distinguish them except clocks. At the 10900's 4.6 GHz all-core clock, the 11900 would trail the 11700K's 4.7 GHz, so it looks like Intel will have to raise the 11900's all-core clock to 4.8 GHz, or it will probably perform worse than the 11700K.

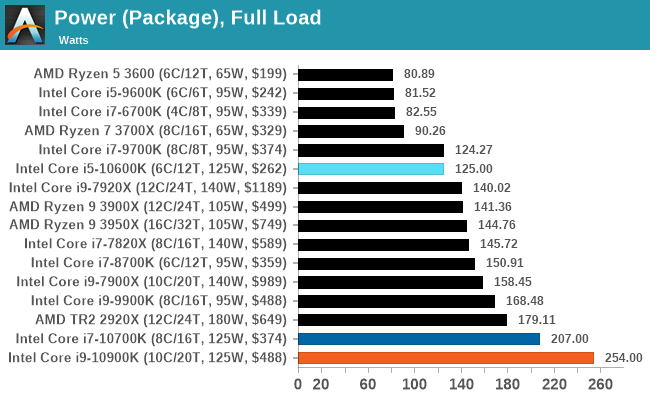

2. Moving up the stack, you pay huge percentage increases in price for tiny increases in performance: something like 2-3% more performance for 15% more money. Dollars per all-core GHz goes something like this down the stack: $122.45 (11900K), $106.25 (11900), $103.19 (11700K), $84.78 (11700), assuming #1 is correct. Pretty easy to see the price/performance champ here.

3. With Intel squeezing every last MHz out of their parts "automatically", given adequate cooling and power supply, what is the actual value of the K parts? How much headroom is there from the 11900 to the 11900K? Tests seem to show about 100 MHz, along with better power consumption numbers. Basically, "overclocking" guidelines are built into the parts these days, and the parts only need adequate cooling to reach them.

4. It will be interesting to see how close 11700Ks can clock to 11900Ks. As time progresses and yields improve, I wonder whether the gap will close, or whether both will simply clock better than early samples.
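A quick sketch of the arithmetic behind #1 to #3, in case anyone wants to plug in different prices. It assumes the listed prices, uses the 10th-gen all-core clocks except for the 11900 (set to 4.8 GHz per #1), and treats all-core clock as a rough stand-in for performance, which is only reasonable because all four parts share the same 8 cores and 16MB L3. The names `LINEUP`, `dollars_per_ghz` and `step_up` are made up for illustration.

```python
# Hypothetical sketch: prices from the post; all-core clocks assumed equal to
# the 10th-gen counterparts, except the 11900, assumed raised to 4.8 GHz (#1).
LINEUP = [
    # (model, price in USD, assumed all-core clock in GHz), top of stack first
    ("11900K", 600, 4.9),
    ("11900", 510, 4.8),
    ("11700K", 485, 4.7),
    ("11700", 390, 4.6),
]


def dollars_per_ghz(price: float, clock_ghz: float) -> float:
    """Price divided by all-core clock, rounded to cents."""
    return round(price / clock_ghz, 2)


def step_up(lower, upper):
    """Percent increase in (price, all-core clock) going from lower to upper part."""
    _, price_lo, clock_lo = lower
    _, price_hi, clock_hi = upper
    price_pct = (price_hi / price_lo - 1) * 100
    clock_pct = (clock_hi / clock_lo - 1) * 100
    return round(price_pct, 1), round(clock_pct, 1)


if __name__ == "__main__":
    for model, price, clock in LINEUP:
        print(f"{model}: ${dollars_per_ghz(price, clock):.2f} per all-core GHz")

    # Walk from the cheapest part up the stack, one step at a time.
    ascending = list(reversed(LINEUP))
    for lower, upper in zip(ascending, ascending[1:]):
        price_pct, clock_pct = step_up(lower, upper)
        print(f"{lower[0]} -> {upper[0]}: +{price_pct}% price, +{clock_pct}% clock")
```

Under these assumptions every step up the stack buys roughly 2% more all-core clock, while the price jumps range from about 5% (11700K to 11900) to about 24% (11700 to 11700K).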

www.mindfactory.de