Here is a huge Core M review: http://www.notebookcheck.com/Im-Test-Intel-Core-M-5Y70-Broadwell.129544.0.html

Just another Lenovo Yoga 3 Pro analysis. I was hoping for a different Core M product this time.

Lots of gaming benchmarks. Performance isn't groundbreaking; Haswell-U is much faster.

Interesting frequency log from Dota 2: http://www.notebookcheck.com/fileadmin/Notebooks/Sonstiges/Prozessoren/Broadwell/dota2.png

The GPU runs at only roughly 400 MHz most of the time and the CPU at only 800 MHz. No wonder performance isn't great. For consistent performance over several minutes or longer, Broadwell seems to require 10+ watts.

Sunspider is a short benchmark, favouring Core-M and its Turbo. The drop comes in longer benchmarks.
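To illustrate why a short benchmark flatters a bursty chip, here is a rough sketch of the average clock over a run that starts at turbo and then settles. The 800 MHz sustained figure is the one from the Dota 2 log above; the 2600 MHz burst clock is my assumption for the 5Y70's maximum turbo, and the 10-second burst window is purely hypothetical, not a measured value.

```python
def average_clock_mhz(duration_s, burst_mhz, sustained_mhz, burst_window_s):
    """Average clock of a run that bursts first, then drops to a sustained clock."""
    if duration_s <= 0:
        raise ValueError("duration_s must be positive")
    # Time spent at the burst clock is capped by the burst window.
    burst_time = min(duration_s, burst_window_s)
    sustained_time = duration_s - burst_time
    return (burst_time * burst_mhz + sustained_time * sustained_mhz) / duration_s


# Hypothetical inputs: 2600 MHz for the first 10 s, 800 MHz afterwards.
short_run = average_clock_mhz(5, 2600, 800, 10)    # run ends inside the burst window
long_run = average_clock_mhz(600, 2600, 800, 10)   # ten-minute run

print(f"short run: {short_run:.0f} MHz, long run: {long_run:.0f} MHz")
```

With these made-up inputs the short run never leaves turbo, while the ten-minute run averages barely above the sustained clock.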

Seems comprehensive, wish it was in English though. Is this the Lenovo Yoga 3 Pro at 3.5 W TDP? Perf/watt seems very good. Performance does not seem good vs. the reference designs.

http://tabtec.com/windows/lenovo-thinkpad-helix-2-intel-core-m-now-available-us-979/

This one has an aluminium back, so maybe less throttling.

But that only holds as a disadvantage if the iPad Air doesn't throttle (or considerably less).

Unfortunately Notebookcheck doesn't test the Air 2 the same way:

http://www.notebookcheck.net/Apple-iPad-Air-2-A1567-128-GB-LTE-Tablet-Review.129396.0.html

After 1 hour it throttles 15-30% in 3DMark.

But how is Broadwell doing in a similar chassis?

Those tests are as much a chassis test, then. And validating performance is a task only the OEM can do properly.

Finally, combined CPU and GPU stress wreaks predictable havoc on the machine, with CPU clock rates of around 500-600 MHz and the GPU reaching only around 300 MHz.

That is awful. CPU clocks at 1/5 of maximum and GPU clocks at 1/3 of maximum. It is even worse when you consider this thing has a fan.
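A quick sanity check of those fractions, as a minimal sketch. The observed clocks come from the quoted review text; the maximum clocks (2600 MHz CPU turbo, 850 MHz GPU) are my assumption for the Core M-5Y70 and are not stated in this thread.

```python
CPU_MAX_MHZ = 2600  # assumed maximum CPU turbo clock (not from the thread)
GPU_MAX_MHZ = 850   # assumed maximum GPU clock (not from the thread)


def fraction_of_max(observed_mhz, max_mhz):
    """Observed clock as a fraction of the maximum clock."""
    return observed_mhz / max_mhz


# Observed clocks under combined CPU + GPU stress, from the quoted review.
cpu_low = fraction_of_max(500, CPU_MAX_MHZ)
cpu_high = fraction_of_max(600, CPU_MAX_MHZ)
gpu = fraction_of_max(300, GPU_MAX_MHZ)

print(f"CPU: {cpu_low:.0%} to {cpu_high:.0%} of max, GPU: {gpu:.0%} of max")
```

Under those assumed maximums, the CPU lands at roughly a fifth of its peak and the GPU at roughly a third.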

In addition, the SoC gets destroyed by Tegra K1 in 3DMark.

And 6h battery life while browsing is on the lower end of the spectrum.

If Intel continues this way, it will be overrun by the ARM competition in the not too distant future. Its chances in the mobile space are slim as well.

Cortex-A57 is available in actual designs. And that is just run-of-the-mill, fully synthesizable ARM IP. In other words, the ARM competition is not even trying.

The strangest part is that the post you quoted is right underneath this post:

You know what the strange part is? If ARM chips like the S800 were given a load comparable to Furmark + Prime you would see similar behaviour.

Yes, the ceteris paribus principle is very important.

The article even made the point that normal operation was quick enough. For its intended use, throttling was not a problem. I have said it before, but I still think people are expecting way too much performance from such a low-wattage chip. The problem I see is that despite the low TDP, the battery life is not exceptional, and the price is very high of course. Personally it does not appeal to me, but for a business traveller doing primarily e-mail, office use, internet and light productivity and who wants a sleek, "impressive" package with the company paying for it, it could have a market.

It's throttling because of artificially low power limitations set by Lenovo, not because of heat. The fan is largely irrelevant.

For the idle use case this is expected at 14nm. It will get worse with 10nm. There is not much you can do about it, aside from using smaller cores. That is the basic idea behind the big.LITTLE concept as featured by many ARM designs (see the new Exynos using A57/A53).

Yeah, the idle battery usage is way too high on these machines. That is almost certainly affecting battery life more than anything else. Compare it to the MacBook Air (running Haswell-U), which draws a fraction of that at idle. Is this a limitation of Windows or lazy design decisions by OEMs?

Lenovo shouldn't have let this product see the light of day, to be honest. It regresses compared to last year's model in other areas as well.

This argument does not fly very far. The device was measured at 42 degrees at the hot spot. How hot, in your opinion, should Lenovo have allowed the device to get?

14nm transistors are already the best transistors in the world in all aspects. So what do you mean it will only get worse at 10nm, when they are going to have III-V compound semiconductor fins?

Has an ultra high-k candidate been found yet?

I mean at some point you also need to reduce the thickness of the gate dielectric, where high-k materials are already used to extend Moore's law.

Oh dear, please do not comment on topics you are apparently no expert in.

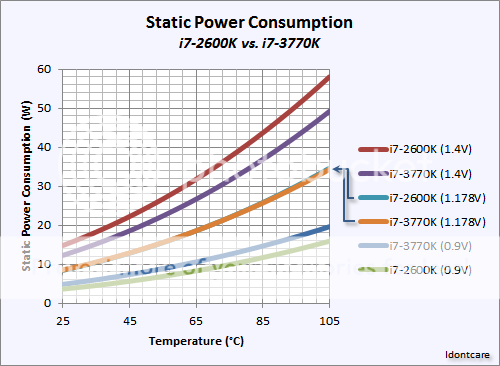

Let me first state that when idle, leakage is the single biggest contributor to power. In other use cases active power is (still) dominating. The ratio between these two values, however, shifts in favor of leakage with each smaller process node. What you should also know is that leakage does not just double when moving down half a node. Leakage is one of the main effects working against Moore's law.
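To put rough numbers on the idle-versus-active point, here is a toy sketch using the textbook first-order CMOS model: dynamic power as activity × C × V² × f, static power as V × I_leak. Every value below is hypothetical and chosen only to show the shape of the effect; none of it is measured data for any real process or chip.

```python
def dynamic_power_w(activity, capacitance_f, voltage_v, freq_hz):
    """First-order switching power: activity * C * V^2 * f."""
    return activity * capacitance_f * voltage_v ** 2 * freq_hz


def static_power_w(voltage_v, leakage_a):
    """First-order leakage power: V * I_leak."""
    return voltage_v * leakage_a


def leakage_share(activity, capacitance_f, voltage_v, freq_hz, leakage_a):
    """Fraction of total power that is due to leakage."""
    dyn = dynamic_power_w(activity, capacitance_f, voltage_v, freq_hz)
    stat = static_power_w(voltage_v, leakage_a)
    return stat / (dyn + stat)


# Hypothetical chip: 1 nF switched capacitance, 0.8 V, 800 MHz, 0.1 A leakage.
C, V, F, I_LEAK = 1e-9, 0.8, 8e8, 0.1

idle_share = leakage_share(0.01, C, V, F, I_LEAK)  # almost nothing switching
busy_share = leakage_share(0.20, C, V, F, I_LEAK)  # moderate switching activity

print(f"leakage share idle: {idle_share:.0%}, busy: {busy_share:.0%}")
```

The model ignores power gating and clock gating entirely, so treat it as an illustration of why leakage dominates once switching activity drops, nothing more.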

The main reason for going to 3D transistors/FinFETs is to have more control over the geometric layout of the gate channel and the electric field controlling the channel, thus reducing leakage current significantly.

To give you some idea: 22nm FinFET has much lower leakage than 28nm planar. I estimate that 14nm FinFET is about the same as 28nm planar for logic but already worse for SRAM. 14nm FinFET is in any case worse than 22nm FinFET, because you cannot defeat physics.

At this point you need to think about clever designs, for instance the big.LITTLE concept. Keep in mind Intel is already past the general FinFET/tri-gate gain with respect to leakage.

Care to enlighten me how this is supposed to improve when going down to 10nm, even if you consider that you are using compound materials? I mean, at some point you also need to reduce the thickness of the gate dielectric, where high-k materials are already used to extend Moore's law.

What amazes me, and this is the message I hope people absorb in reading this, is that Intel was able to shrink the physical geometry of the circuits themselves in going from 32nm to 22nm (transistor density goes up) and yet managed to keep the static leakage roughly the same at any given temperature and/or voltage as the much less dense (and less likely to leak) 32nm circuits.

I am not an expert at all but this is very interesting to me and I was wondering if you could answer a question: What lithography would you say is best for leakage? You said that 22nm FF was better than 14nm FF. Where does TSMC 20nm planar and Samsung 20nm FF Exynos 5433 fit in there? Better, worse?

From what I can gather, big core designs will run into problems below 14nm? It seems Apple has taken the big core approach with the A8X and Samsung is going big.LITTLE. I wonder if the smaller cores can give Samsung an advantage when leakage becomes an issue. Would you say Samsung has the right approach with big.LITTLE vs. big core?