Intel Broadwell Thread

Page 118


DrMrLordX


Fair enough. I would consider it if

a) I had the money (questionable),

b) there were more to learn from the chip (it's been examined well enough by now), and

c) someone who didn't charge me tax were selling it.

Still up in the air about b), but near-10% sales tax is a deal killer sometimes.

Also bummed by Broadwell not having task scheduling and task preemption for GPGPU, though that's really pretty niche . . . Kaveri has the same problem, and I bought one of those. For 1/4 the price!


richierich1212


IntelUser2000


I'm aware that 3DMark 11 scores are not representative of game scores; in this case the 20% is entirely due to eDRAM.

I know people like to think about "what ifs", but the reality is that it's a minute detail. It's the same as people thinking "what if" Nvidia's Maxwell had HBM, or AMD's Fury had a strong front-end or Maxwell's power efficiency. The actual products, though, end up being pretty similar to each other; things like "HBM" and "better architecture" do not co-exist, and each is merely a differentiator that makes them equally viable competitors.

You are assuming. The problem is that Carrizo basically isn't widely available to test your *theory* out. You could be right about 50W, be slightly wrong, or be entirely wrong. Not to mention no one is playing LuxMark. LuxMark isn't even a 3D benchmark anyway.

Actually, the GPU uses more power than the CPU. Iris Pro is not so powerful that the CPU must be at full throughput to load it at 100%. In the Hardware.fr link you can check the LuxMark power consumption in GPU mode with the CPU unloaded: that's a 50W difference when losses are accounted for. With CPU + GPU loading, the CPU adds 21W...

You can't reliably prove that, because you are assuming the difference between 36W and 28W is entirely due to the SoC. Increasingly, the entire platform is becoming dynamic in power usage. The only reason I quoted 10W is that it's just as inaccurate as the max power figure you are quoting. It could be a figure shown shortly after the test.

The stress test starts briefly at 36W and the device quickly drops to 28W, so the deltas at the SoC level are 24W and 17.5W, to which we can add the 0.5-0.7W that is already included in the idle power consumption.

Indeed, those figures correlate perfectly with your numbers. Of course the 5010U can be set to a strict 15W or 10W, but then it won't achieve the same scores.

You should realize that if 10W were feasible at 2.1GHz, then the Y variants, which run at 1GHz, would be at less than 2.5W real TDP.

Yet they are rated at 4.5W, and applying a raw square law points to almost 20W at 2.1GHz; let's assume it's 18W, since the uncore doesn't scale as much.

When Intel specs the same line, that is the 2C/4T parts, from 1.9GHz to 2.5GHz, it's obvious that the latter will have a TDP that is (2.5/1.9)^2 = 1.73x higher than the former, yet they are all specced at 15W, which is physically impossible...
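The square-law arithmetic in the last three paragraphs can be reproduced in a few lines. This is a sketch that assumes, as the post does, that power scales with the square of clock frequency; that is a simplification (real parts also change voltage, and uncore power does not scale the same way), and the helper name `scale_power` is hypothetical.

```python
# Square-law scaling as used in the post: power is ASSUMED to grow with the
# square of clock frequency. Voltage changes and non-scaling uncore power
# are ignored, so these are rough illustrations, not real TDP figures.

def scale_power(p_ref_w: float, f_ref_ghz: float, f_new_ghz: float) -> float:
    """Scale a reference power figure to a new frequency using P ~ f^2."""
    return p_ref_w * (f_new_ghz / f_ref_ghz) ** 2

# 10 W at 2.1 GHz scaled down to a 1 GHz Y-series part
y_from_10w = scale_power(10.0, 2.1, 1.0)

# The 4.5 W Y-series rating at 1 GHz scaled up to 2.1 GHz
u_from_4_5w = scale_power(4.5, 1.0, 2.1)

# Power ratio between the 2.5 GHz and 1.9 GHz 2C/4T parts
ratio_25_vs_19 = (2.5 / 1.9) ** 2

print(f"10 W @ 2.1 GHz -> {y_from_10w:.2f} W @ 1 GHz")
print(f"4.5 W @ 1 GHz -> {u_from_4_5w:.1f} W @ 2.1 GHz")
print(f"(2.5/1.9)^2 = {ratio_25_vs_19:.2f}")
```

Under this assumption the three results line up with the post: under 2.5W, just under 20W, and roughly 1.73x.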

Better to acknowledge that their ratings are a mess than to keep denying the laws of physics, because that's the only thing left to try "explaining" the unexplainable...

While performance will be lower, it won't be to a significant degree (10-20%). That means, however, far lower power use because of the lower average operating frequency and voltage. The same thing was shown by AMD: gains at desktop TDPs are pathetic, while being quite impressive at lower voltages.

It can't be more efficient than Carrizo's GPU, by virtue of the same laws I explained above; it starts from too low to close the gap if it uses the same process as BDW.

1) There's no Carrizo product to test that out; that's why I had to use the 7870K. Oh, and it's desktop to desktop, making it fair.

That's due to process, not uarch, and at high frequencies Intel has quite an advantage. You had to resort to Kaveri to try to make a point, but what differentiates this chip from Carrizo is essentially a more efficient process at average frequencies.

2) Minute detail, as I said in the beginning. There's always an assumption that someone will make a "dream device" combining everyone's advantages.

The only thing I can tell you: Intel has a process advantage, AMD has HBM, and Nvidia has a good architecture. None of them has all three. I highly doubt you will get all that in one product, sadly. Someone *always* has something that the others don't.


IntelUser2000


In what laptop have you ever seen a Broadwell SoC? It was intended for microcloud servers and the like. But yes, the 25W quad-core version could happily be repurposed for laptops.

CPU World also says that the Broadwell SoC's new stepping brings a feature called "KR functionality", but they don't know what the hell it is.

An article at S|A answers that question: http://semiaccurate.com/2012/06/11/netronome-lays-out-their-22nm-nfp-6xxx-line/

One feature that makes standalone operation easier: the NFP-6xxx line is KR compliant so they can plug directly in to a backplane.

https://en.wikipedia.org/wiki/10_Gigabit_Ethernet#10GBASE-KR

10GBASE-KR: This operates over a single backplane lane and uses the same physical layer coding (defined in IEEE 802.3 Clause 49) as 10GBASE-LR/ER/SR.

So that's Xeon D (Broadwell) specific functionality.

You can't reliably prove that, because you are assuming the difference between 36W and 28W is entirely due to the SoC. Increasingly, the entire platform is becoming dynamic in power usage. The only reason I quoted 10W is that it's just as inaccurate as the max power figure you are quoting. It could be a figure shown shortly after the test.

1.5W for the SSD at most.

1.5W for the single DDR3L RAM stick.

1.5W at most for the rest of the components.

That's 4.5W which is included in the 6.4W idle power, so all of the delta is due to the SoC. It's not a laptop: there's no screen, not even Wi-Fi + Bluetooth, and RAM consumption is static; it's the memory controller that uses power when writing to/reading from the RAM.

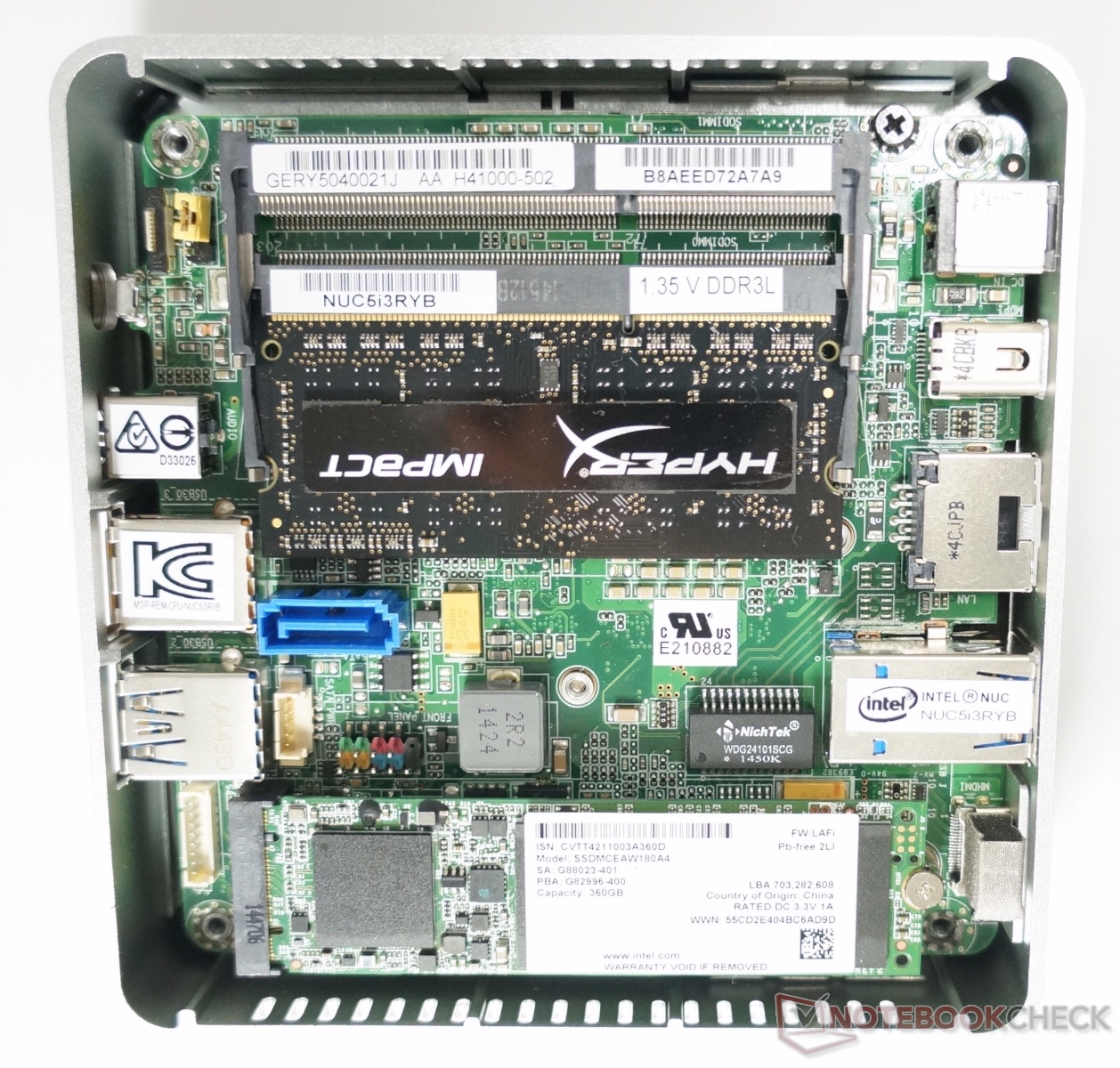

http://www.notebookcheck.com/Test-Intel-NUC5i3RYK-Mini-PC-Broadwell-Core-i3-5010U.147828.0.html
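The component budget above can be tallied in a few lines. This sketch uses only the post's own upper-bound estimates (1.5W each for the SSD, the RAM stick and the other components, 6.4W idle) and the 36W/28W stress-test readings quoted earlier in the thread; the names are hypothetical. It computes raw wall-power deltas over idle. The post's 24W and 17.5W SoC-level figures are lower than these, and the post does not show how that adjustment (for example, for power-supply losses) was made.

```python
# Hypothetical breakdown built from the post's upper-bound estimates for the
# NUC5i3RYK's non-SoC components (not measured values).
NON_SOC_W = {
    "ssd": 1.5,
    "ddr3l_stick": 1.5,
    "other_components": 1.5,
}
IDLE_W = 6.4  # whole-system idle figure cited in the post

non_soc_total = sum(NON_SOC_W.values())
soc_idle_share = IDLE_W - non_soc_total  # what remains for the SoC at idle

def load_delta(load_w: float, idle_w: float = IDLE_W) -> float:
    """Raw wall-power increase over idle, which the post attributes to the SoC."""
    return load_w - idle_w

# Stress-test readings: the brief 36 W start and the settled 28 W level
for reading in (36.0, 28.0):
    print(f"{reading:.1f} W load -> {load_delta(reading):.1f} W above idle")

print(f"non-SoC total {non_soc_total:.1f} W, leaving {soc_idle_share:.1f} W of idle")
```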

1) There's no Carrizo product to test that out, that's why I had to use 7870K. Oh, and its Desktop to Desktop, making it fair

You used system power, and the Kaveri platform uses 10W more at idle. Looking at the deltas, it uses 40W at the GPU level, while Iris Pro is at 50W for a 20% better LuxMark score...

As for Carrizo, we know that its GPU scores 2750 in 3DMark11 at 24W, while a Kaveri 7850K GPU scores 2458 at 32W. The extrapolation to LuxMark is straightforward: Iris Pro scores 10% better, as in 3DMark11, at twice the power consumption.
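The efficiency argument in these two paragraphs reduces to score divided by power. A minimal sketch using only the numbers quoted in the post (3DMark11 GPU scores of 2750 at 24W for Carrizo and 2458 at 32W for Kaveri, and a ~20% LuxMark lead for Iris Pro at ~50W versus ~40W at the GPU level); none of these figures are independently verified here, and `perf_per_watt` is a hypothetical helper.

```python
# Performance-per-watt comparison from the figures quoted in the thread.
# Scores and power draws are as posted, not independently verified.

def perf_per_watt(score: float, watts: float) -> float:
    """Benchmark points per watt of GPU-level power."""
    return score / watts

carrizo = perf_per_watt(2750, 24)  # 3DMark11 GPU score at 24 W
kaveri = perf_per_watt(2458, 32)   # Kaveri 7850K GPU score at 32 W
print(f"Carrizo: {carrizo:.1f} pts/W, Kaveri: {kaveri:.1f} pts/W")
print(f"Carrizo/Kaveri efficiency ratio: {carrizo / kaveri:.2f}")

# LuxMark, normalized to Kaveri = 1.0: Iris Pro scores ~1.2x at ~50 W
# versus Kaveri's ~40 W, per the GPU-level deltas quoted above.
iris_vs_kaveri = perf_per_watt(1.20, 50) / perf_per_watt(1.00, 40)
print(f"Iris Pro/Kaveri LuxMark efficiency ratio: {iris_vs_kaveri:.2f}")
```

On the post's numbers, Carrizo comes out roughly 1.5x more efficient than Kaveri, while Iris Pro's LuxMark lead is slightly outweighed by its higher power draw (a ratio just under 1).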

The only thing I can tell you: Intel has a process advantage.

This is undeniable and is about the only reason for their dominance; a Kaveri on a comparable process would have much better CPU perf/W than its i3 counterparts.

AMD has HBM, and Nvidia has a good architecture. None of them has all three. I highly doubt you will get all that in one product, sadly. Someone *always* has something that the others don't.

Nvidia doesn't have a better uarch, quite the contrary: their performance is based on higher frequencies, and those are possible because they use a custom process at TSMC. Anyway, the process playing field will be leveled next year, and AMD is the one that will gain the most from this evolution.

DrMrLordX


You are assuming. The problem is that Carrizo basically isn't widely available to test your *theory* out. You could be right about 50W, be slightly wrong, or be entirely wrong. Not to mention no one is playing LuxMark. LuxMark isn't even a 3D benchmark anyway.

Actually, The Stilt has a cTDP-unlocked Carrizo test system that he can reprogram to any P-state settings he likes. So if there's something you want tested, and it is of sufficient interest to him, he might just do it. He's already producing a good amount of useful data with the system, and from the looks of things, it's pretty nice. So far, in fixed-clockspeed tests, it's averaging ~11% faster than Kaveri at the same clockspeed in FP-heavy benchmarks.

However, this is a Broadwell thread. If we really want an in-depth examination of what Carrizo can do, I would recommend starting an entirely-new thread (no, not the pre-release thread) to address that topic.

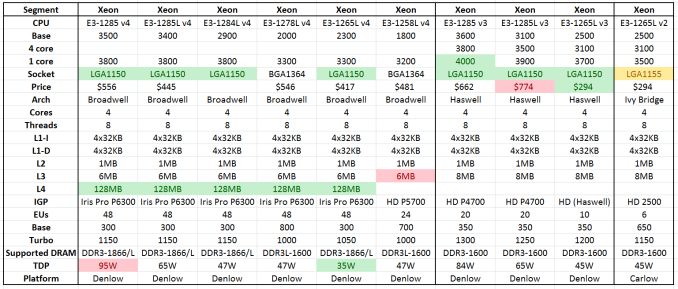

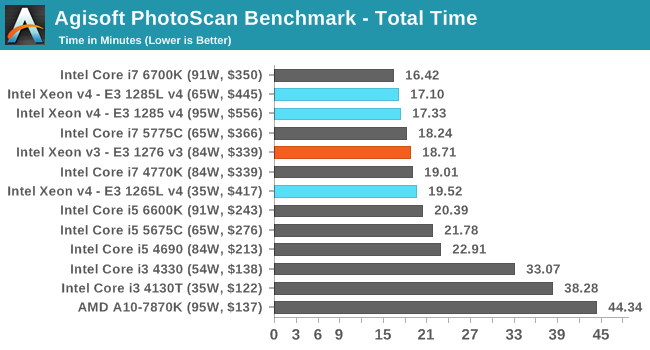

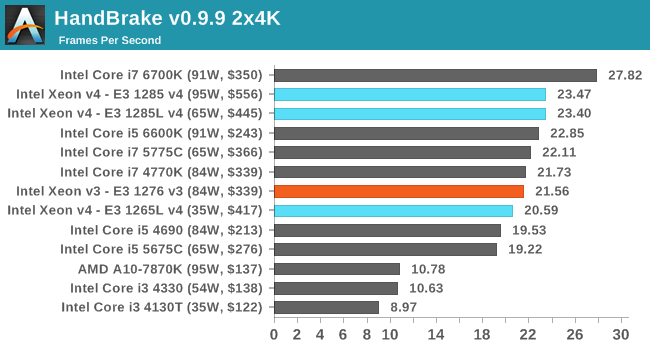

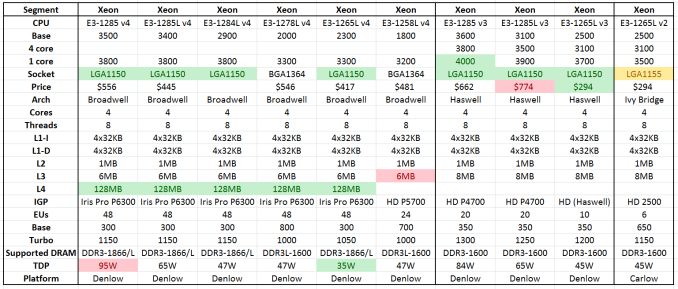

AnandTech: The Intel Broadwell Xeon E3 v4 Review: 95W, 65W and 35W with eDRAM

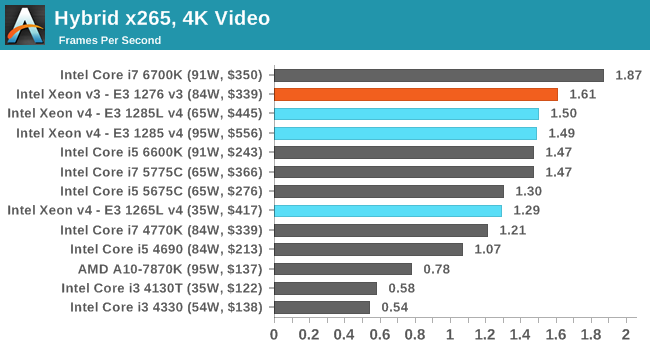

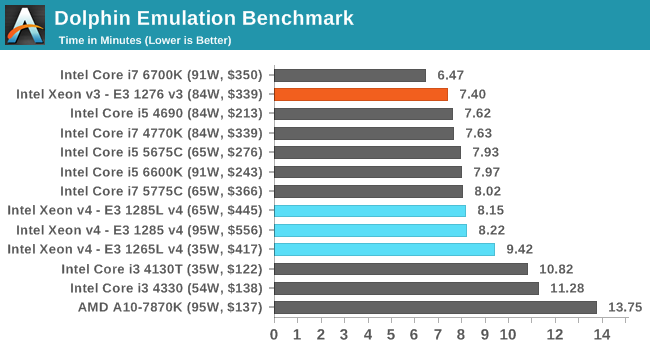

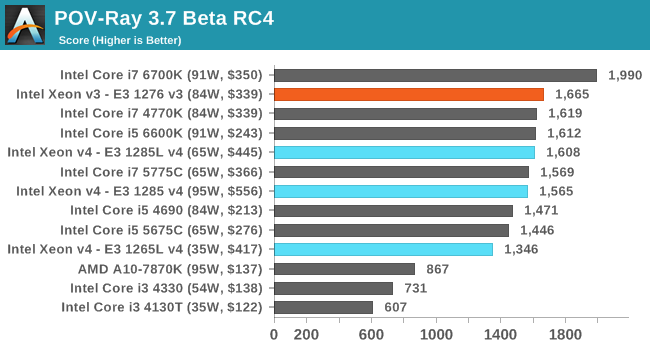

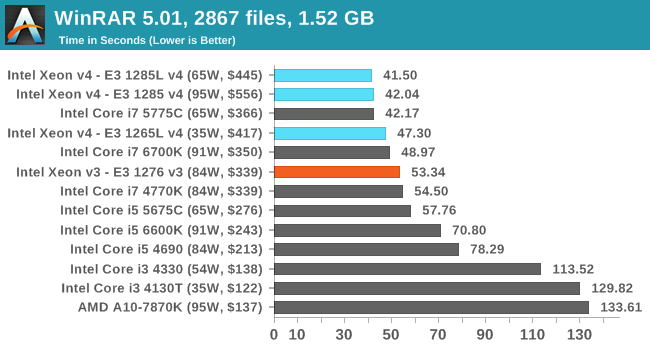

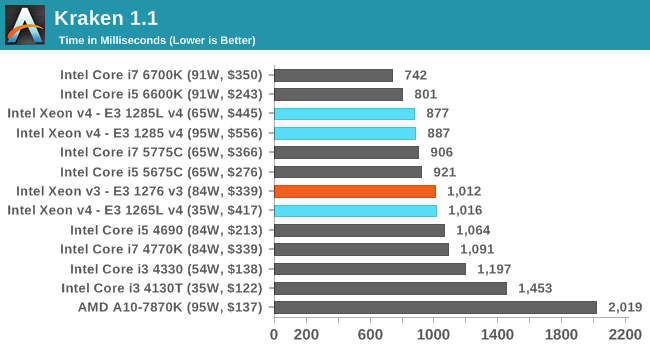

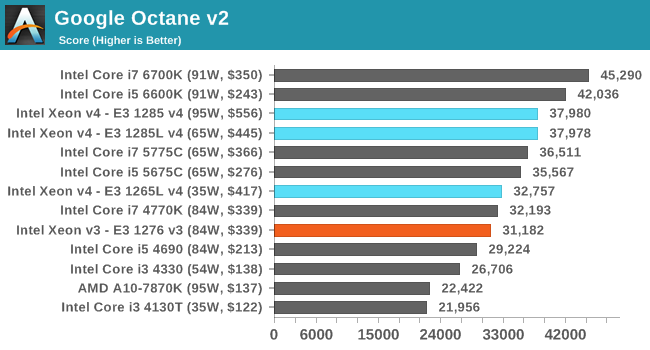

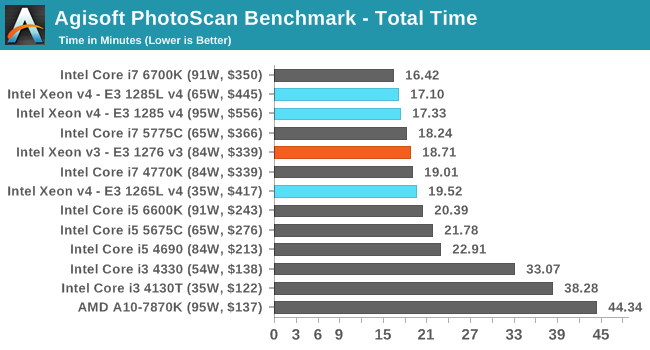

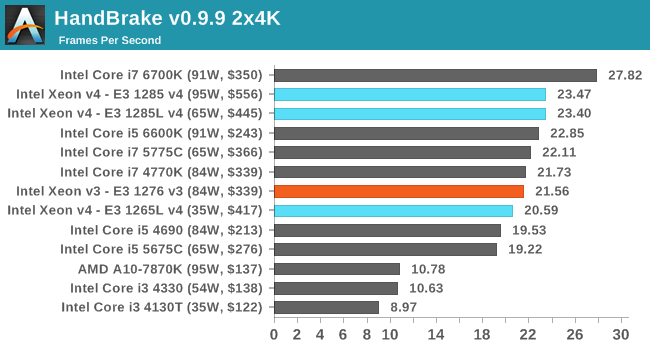

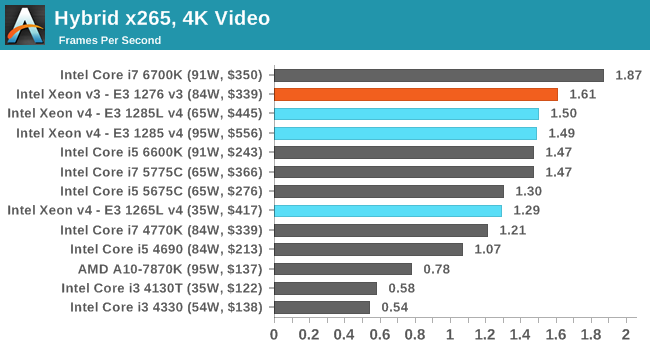

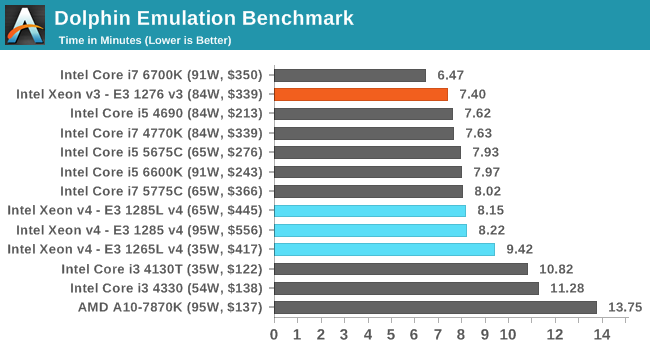

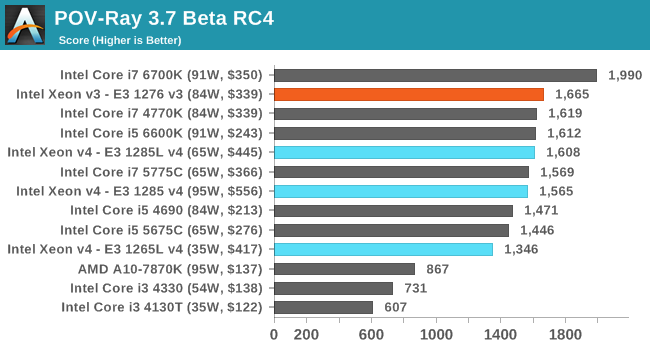

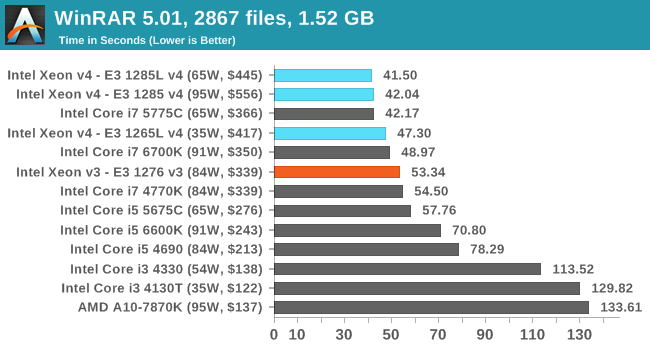

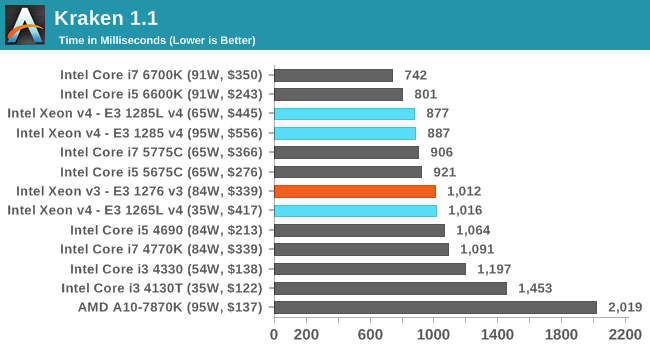

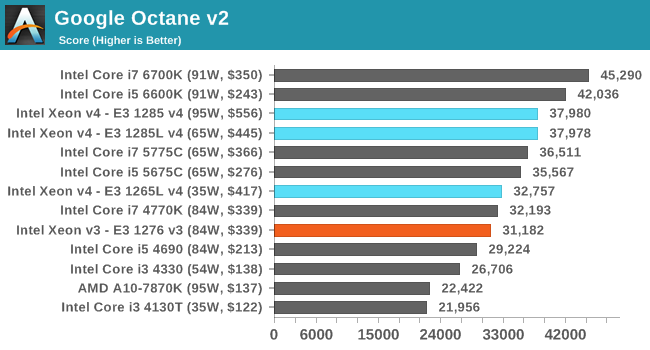

3.5-3.9GHz Haswell (Core i7 4770K) vs 3.5-3.8GHz 4C/8T Broadwell with eDRAM (Xeon E3 v4 1285) vs 4C/8T 4.0-4.2GHz Skylake (Core i7 6700K):

www.anandtech.com/show/9532/the-intel-broadwell-xeon-e3-v4-review-95w-65w-35w-1285-1285l-1265

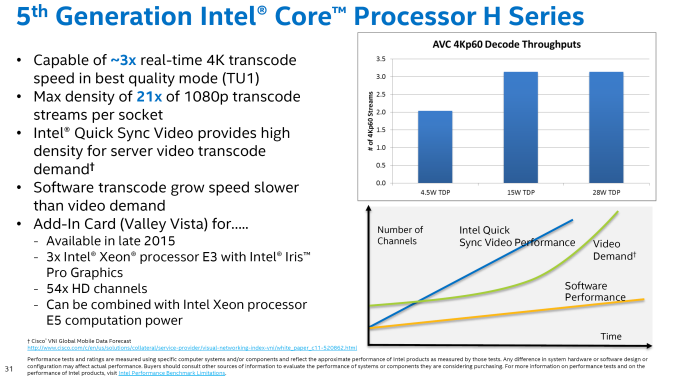

At IDF San Francisco this year, an announcement passed almost everyone by. Intel described an add-in card coming in Q4 2015 that features three Broadwell-H E3 Xeon processors on a single PCB, each with Iris Pro graphics.

Valley Vista is designed to allow for high-density, workload-specific work, in particular AVC transcoding. Aside from the slide above, there have been no real details on how this card will work: whether there's a PCIe switch for communication, whether it runs in a virtualized layer, how the card is powered, or whether each of the processors on the card will have a fixed amount of DRAM associated with it. So far, Supermicro has announced in a press release that one of their Xeon Phi platforms is suitable for the cards when they launch later this year.


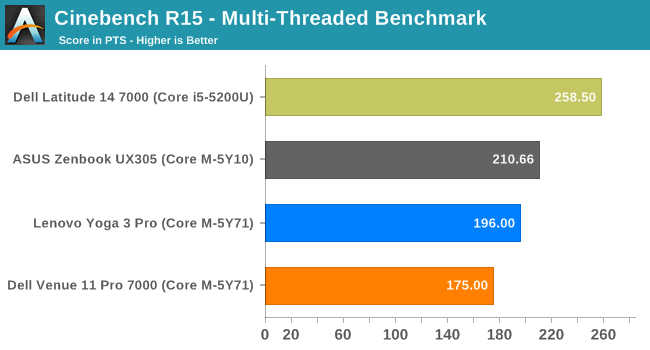

ASUS managed to get better performance with a slower Core M model inside a fanless design.

This reminded me of something. A question, actually: who bears the cost of warranty claims involving Intel CPUs sitting inside OEM systems? Are there normally burden-sharing clauses in agreements with OEM designers?

The implications are obvious for less tried-and-true innovations like the Core M, because there is the potential that the entire line of processors may start failing en masse a few years down the line (heat is the obvious culprit in this scenario).

But the CPU is never too hot from a reliability point of view. It throttles, or in the worst case shuts down, before that.

ShintaiDK


This reminded me of something. A question, actually: who bears the cost of warranty claims involving Intel CPUs sitting inside OEM systems? Are there normally burden-sharing clauses in agreements with OEM designers?

The implications are obvious for less tried-and-true innovations like the Core M, because there is the potential that the entire line of processors may start failing en masse a few years down the line (heat is the obvious culprit in this scenario).

Intel, assuming it's run within specs. But the OEM handles the warranty, so the end user has to make the claim against the OEM.

ClockHound


I sold my 4790K to my nephew for $200 plus a G3258 (he got a deal, I think*) so I want to either get a 5775C or just ditch the Z97 platform and go Skylake.

*The G3258 is the one I had up to 4.9, so I didn't do horribly bad to get it back.

You're a very generous uncle. Does your nephew know what a famous chip he has? The world (Anand forum) champ of quad cores in the overclocked CB 11.5 competition: a 5GHz beast that was never OC'd except the one time to claim the gold.

It seems a lot of CPUs from Intel end up being very scarce in the USA. It was the same with the later i3 35W CPUs. They promised the Iris Pro 6200 CPUs for desktop, but you can hardly find them anywhere near Intel's price per 1000 units.

If you are going to way overpay for the i7 may as well just get the XEON???


zir_blazer


Xeon E3 v4s are drastically more expensive than the Core i7 5775C. It's nowhere near comparable to the Xeon E3 v2 and v3 lines, where a lot of models overlapped with Core i5/i7 models at around the same price.

ShintaiDK


Still at this point skeptical about Broadwell-E. If it does get released it'll probably be low volume. 3DXP is such a big deal I think companies will wait for Purley instead.

Broadwell-E/EP will still be big in mainstream 2P servers.

coercitiv


Fingers crossed for a golden(ish) chip, in the interest of... science!

TOO MUCH! :embarrassed:


AnandTech is part of Future plc, an international media group and leading digital publisher. Visit our corporate site.

© Future Publishing Limited Quay House, The Ambury, Bath BA1 1UA. All rights reserved. England and Wales company registration number 2008885.