Introducing the Intel® Extension for PyTorch* for GPUs

Get a quick introduction to the Intel PyTorch extension, including how to use it to jumpstart your training and inference workloads. (www.intel.com)

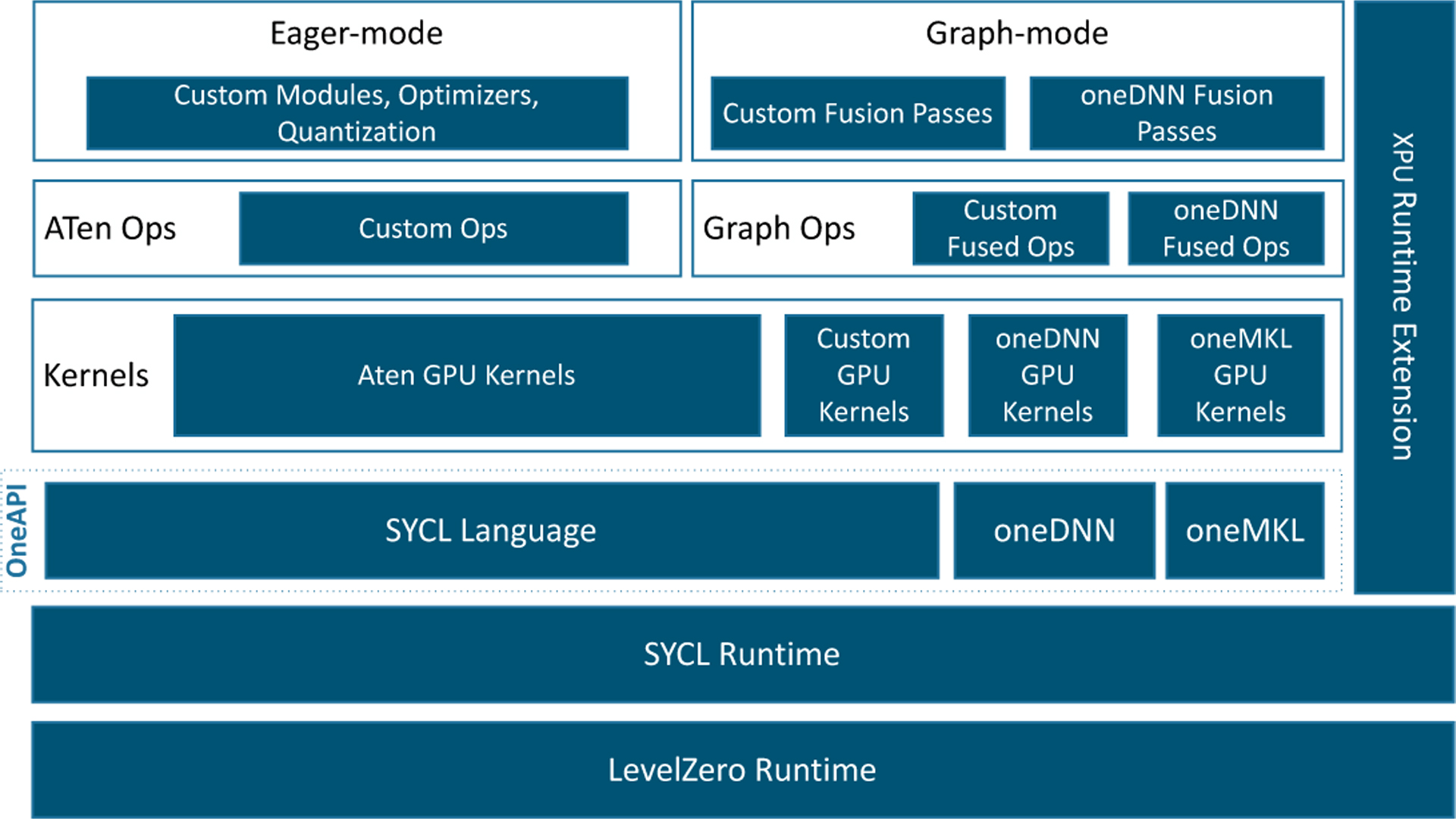

Intel® Extension for PyTorch* — Intel® Extension for PyTorch* 2.8.10+xpu documentation

This website introduces Intel® Extension for PyTorch*. (intel.github.io)

Intel Xe Matrix Extensions (XMX, needed for Intel Extension for PyTorch) are working on Arc according to this post: https://towardsdatascience.com/util...trix-extensions-xmx-using-oneapi-bd39479c9555

They don't explicitly state it, but these are for Linux.

However, I did get their Docker container working. I have no idea what I did wrong the first time, but I blame lack of sleep.

Anyway, moving the training to the Arc GPU was easy once I got in. The attached screenshot shows training of an older NN (one of my first) that I had been running on the CPU, with a very small batch size (10). Utilisation is pretty poor, and it is much slower than my 3080 at these small batch sizes. Huge batch sizes (1000s) are working much better.
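For anyone wanting to try the same thing, here is a rough sketch of what moving a training loop to the Arc GPU involves. It is not the exact code from the screenshot. The tiny model, the random data, and the `pick_device` and `train_one_epoch` helpers are made up for illustration. It assumes a PyTorch build that exposes the Intel GPU as the `xpu` device (older setups need `intel_extension_for_pytorch` imported first to register it) and falls back to the CPU otherwise.

```python
import torch
import torch.nn as nn

try:
    # On older stacks this import is what registers the "xpu" device.
    # It is optional here; the sketch still runs without it.
    import intel_extension_for_pytorch  # noqa: F401
except ImportError:
    pass


def pick_device() -> torch.device:
    """Return the Intel GPU ("xpu") if PyTorch can see one, else the CPU."""
    if hasattr(torch, "xpu") and torch.xpu.is_available():
        return torch.device("xpu")
    return torch.device("cpu")


def train_one_epoch(model, data, targets, batch_size, device, lr=0.01):
    """Hypothetical helper: one pass over the data in mini-batches."""
    model.to(device)  # move the weights before building the optimizer
    model.train()
    optimizer = torch.optim.SGD(model.parameters(), lr=lr)
    loss_fn = nn.MSELoss()
    last_loss = 0.0
    for start in range(0, data.shape[0], batch_size):
        # Each batch has to live on the same device as the model.
        x = data[start:start + batch_size].to(device)
        y = targets[start:start + batch_size].to(device)
        optimizer.zero_grad()
        loss = loss_fn(model(x), y)
        loss.backward()
        optimizer.step()
        last_loss = loss.item()
    return last_loss


device = pick_device()
model = nn.Sequential(nn.Linear(16, 32), nn.ReLU(), nn.Linear(32, 1))
data = torch.randn(100, 16)      # placeholder inputs
targets = torch.randn(100, 1)    # placeholder targets
loss = train_one_epoch(model, data, targets, batch_size=10, device=device)
print(f"device={device.type} last_loss={loss:.4f}")
```

With a batch size of 10 each step does very little work per host-to-device copy and kernel launch, which is consistent with the poor utilisation at small batch sizes.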

I will need to set up a proper benchmark to compare them. There is very little data about this online.
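A starting point for that benchmark might look something like the sketch below. The model, the batch sizes, the step counts, and the helper names (`sync`, `samples_per_second`, `available_devices`) are all placeholders rather than a settled methodology. It times training steps per device and batch size, with a few warm-up steps and an explicit synchronize so that queued GPU work is counted. It assumes the Intel GPU shows up as `xpu` and an NVIDIA card as `cuda`. In practice the Arc and the 3080 will probably need separate environments, so the same script would be run once in each.

```python
import time

import torch
import torch.nn as nn


def sync(device: torch.device) -> None:
    """Wait for queued GPU work so the timer measures finished work."""
    if device.type == "xpu":
        torch.xpu.synchronize()
    elif device.type == "cuda":
        torch.cuda.synchronize()


def samples_per_second(device, batch_size, steps=20, warmup=3):
    """Time `steps` training steps on a small placeholder network."""
    model = nn.Sequential(nn.Linear(64, 128), nn.ReLU(), nn.Linear(128, 1))
    model.to(device)
    optimizer = torch.optim.SGD(model.parameters(), lr=0.01)
    loss_fn = nn.MSELoss()
    x = torch.randn(batch_size, 64, device=device)
    y = torch.randn(batch_size, 1, device=device)
    start = time.perf_counter()
    for step in range(warmup + steps):
        if step == warmup:
            # Restart the clock after warm-up so one-off setup is excluded.
            sync(device)
            start = time.perf_counter()
        optimizer.zero_grad()
        loss_fn(model(x), y).backward()
        optimizer.step()
    sync(device)
    elapsed = time.perf_counter() - start
    return batch_size * steps / elapsed


def available_devices():
    """CPU always; add whichever GPUs this PyTorch build can see."""
    devices = [torch.device("cpu")]
    if torch.cuda.is_available():
        devices.append(torch.device("cuda"))
    if hasattr(torch, "xpu") and torch.xpu.is_available():
        devices.append(torch.device("xpu"))
    return devices


results = {}
for device in available_devices():
    for batch_size in (10, 100, 1000):
        rate = samples_per_second(device, batch_size)
        results[(device.type, batch_size)] = rate
        print(f"{device.type:>4} batch={batch_size:<5} {rate:,.0f} samples/s")
```

Reporting samples per second rather than time per step makes the small and huge batch sizes directly comparable.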