Fascinating how a scheduler hotfix gain of up to 10% has been magically reduced to 1% by a guy who calls others names...

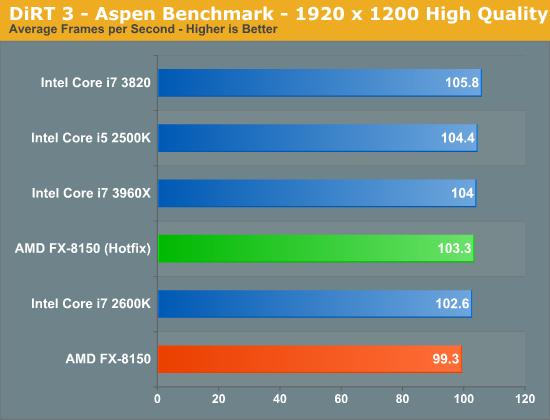

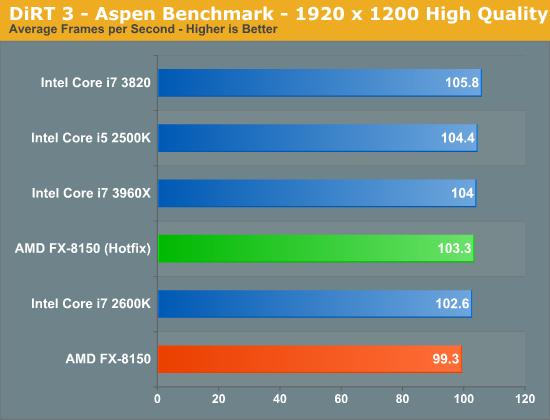

As shown by AnandTech, the FX hotfixes can increase the framerate of some games by up to 10%. Several games gain about 5%.

Using adequate memory speeds accounts for another 5%. Adding up just those two effects already gives about a 10% increase for the same chip.

This 10% is about the average performance difference, reported above, between the FX-8350 and the i7-3770K. It is about the improvement you get going from one generation of chips to the next.
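
For anyone who wants to check the arithmetic behind the "about 10%" figure, here is a minimal sketch. It assumes the hotfix gain and the memory gain are independent and simply compound, which is my assumption and not something the benchmarks prove. The function name `combined_gain` is made up for illustration.

```python
def combined_gain(*gains):
    """Compound fractional gains, e.g. 0.05 means +5%.

    Assumes the gains are independent and multiply (an assumption,
    not a measured result).
    """
    total = 1.0
    for g in gains:
        total *= 1.0 + g
    return total - 1.0


hotfix_gain = 0.05  # typical hotfix gain quoted above
memory_gain = 0.05  # gain from adequate memory speeds quoted above

# Print the combined gain as a percentage
print(f"{combined_gain(hotfix_gain, memory_gain):.2%}")
```

Whether you add the two 5% figures or compound them, the result lands at roughly 10%, so the rounding in the post does not change the conclusion.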

