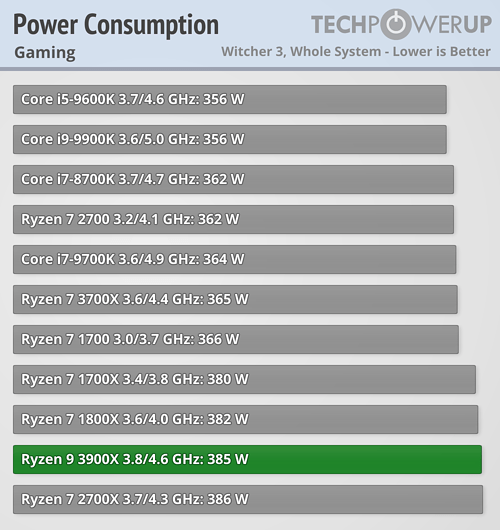

To hit a given level of performance, in theory all of the above will draw a similar-ish amount of power for the same-ish gaming results, right? Maybe apart from the 3900X, which has two chiplets.

I'm sure in Cinebench etc. it would be a different story, with all cores synthetically maxed out. But for gaming purposes, I assume the difference would be negligible? I'm just worried that my current cooling setup is inadequate (I currently have a 3700X, which doesn't boost beyond 4 GHz, so I'm selling it), and that upgrading to a 3900X would be a big mistake in terms of thermals and power.