Yes, it's a theme AMD fans have known for years: chips clocked way past their efficiency curve for minor performance gains just to compete, destroying the processor's efficiency for little benefit.

They opened themselves up to a panning in the tech forums from unforgiving Intel/Nvidia shills.

You are going to see more of this from Intel from now on, with AMD fans giving it back to them now that the shoe is on the other foot.

Welcome to crazy town!

The difference is performance, IMHO. We've seen these eras:

Athlon XP vs P4 Willamette.

Total AMD domination in price, performance, and upgrade path. Socket 423 was shocking in all the wrong ways.

Athlon XP vs P4 Northwood.

Intel led in performance, particularly once it hit the sweet spot at 3.0-3.2GHz. Athlon was still very viable, with amazing overclocking from cheap 1700+ chips, etc.

Socket 940 Opteron / A64 vs P4s. Suddenly AMD had top performance with superior efficiency. A 3000+ and a P4B/C @ 3.2GHz have nearly identical performance, but AMD's platform is just superior here. Intel released the pointless and overall bad Prescott. NetBurst scaling was just poor past ~3.4GHz in any case.

A64 X2 vs Pentium D. AMD's domination continued, and Intel's S775 NetBurst dual cores just looked ridiculous next to the hugely more efficient Athlon X2s. AMD figured out they were dominating and raised prices to ~$1k for flagship models. $300 got you a 3800+ X2, though.

A64 vs Conroe. A holy crap moment for sure. Overnight, both NetBurst and AMD's existing platforms were made obsolete: roughly 2:1 IPC over NetBurst, and 1.5:1 over the best X2/Opteron SKUs. E.g., a lowly 1.6GHz Conroe was equal to or better than a 2.4GHz A64 X2 or a 3.2GHz Pentium D, while sipping power. Once clocked much beyond 2GHz, it was basically untouchable by all previous products.

AMD released a bunch of disappointing comeback products that never really turned the corner. The closest they came was the Phenom II X4/X6 era, where they were truly competitive with the best Intel products (I went Phenom II here to save $). The timing was bad, though, as soon after:

The Core i7 920, followed by socket 1156, meant Phenom II never had much time as a relevant enthusiast product. Then Sandy Bridge came and made a mockery of everything. AMD released more laughable products like the FX series: huge power consumption from the top models and overclocked figures, but the crucial difference was that it didn't come with top-tier performance, making it especially ludicrous.

Intel got fat and lazy over the next 6 years, giving us tiny increases in IPC and features, edging prices up, and generally being greedy. Owning a 2700K @ 4.8GHz, I find even a 7700K is mostly better only for the platform improvements; the actual CPU performance hardly moved at all.

Ryzen truly came and rocked the world. Almost a Conroe moment: untouchable multicore performance on a single socket, great prices, and only let down minimally by gaming/single-thread (ST) performance, where it certainly wasn't bad, just not better than the existing products. For general use and heavy multithreaded (MT) work, it vaulted to #1 with a bullet, and it instantly moved consumers beyond quad cores.

Make no mistake, Ryzen is the only reason we were given hex-cores with Coffee Lake, and now we're seeing an octo-core halo product.

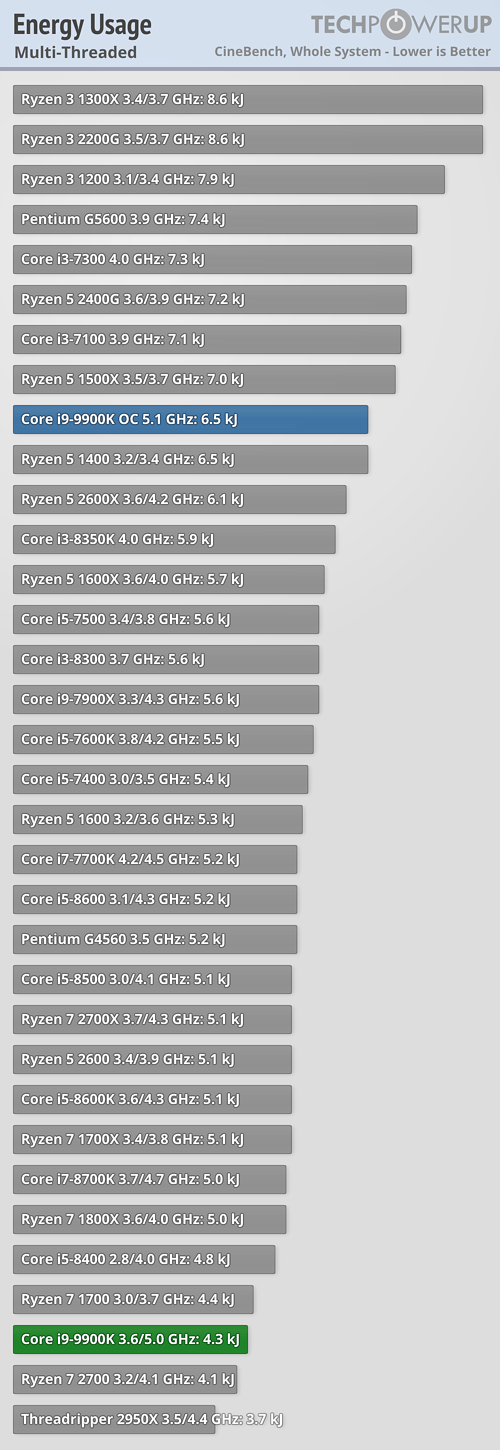

It's thirsty, yes. It's also a pretty bad economic prospect, honestly. I'm not going to buy one. But it's nowhere near as embarrassing as Willamette, Prescott, Phenom I, or FX. It actually delivers halo performance for halo pricing, albeit with a lot of heat and fairly high power draw. I just think it's poor value. Still pretty impressive for 14nm.

CPUs are fun again, and much (most) of that is due to AMD being competitive at many levels again.