There are a couple of really nice articles from Puget Systems about the CPU and GPU requirements.

Lightroom hardware

That link confirms what I have seen in other articles about Lightroom performance.

With a 1200.00 budget, I would go for something like a 4790K/6700K, and a GTX 960/970. For heavy productivity use like this, I think an i7 is definitely worth the cost.

If it's an i7, and it probably will be, why go for the 4790? The 6700 is a newer processor (benchmarks say it's more powerful) and uses a newer socket (1151). It's more future "proof", meaning it'll be easier to find a cheap, beefed-up processor in a couple of years if I decide I want an upgrade. Unless the 4790 has something that the 6700 doesn't.

As far as the GPU selection goes, try to squeeze $10-20 to get into the R9 290/390/970 territory. Right now all of the GPUs in the $180-230 range are bad value compared to the 3 cards I listed. If you set a strict $200-230 budget on a GPU and end up with a 960 4GB/R9 380X, you stand to lose a huge chunk of performance by not spending just a small fraction above that. Be on the lookout for hot deals and various discounts on Newegg, such as $25 off $200 with AMEX, etc.

I understand what you're saying, and I can raise the budget to get something that makes such a huge difference. Both the i5 and the i7 have GPUs in them, so I can grab one and start working, and postpone selecting a VGA for a while (a few weeks or a couple of months).

try to squeeze $10-20 to get into the R9 290/390/970 territory.

I have really not looked at VGAs at all, so I'm not sure I get which "models" you mention there. Can you please confirm that these are the ones?

AMD Radeon R9 290 4GB RAM

AMD Radeon R9 390 8GB RAM

Nvidia GeForce GTX 970 4GB

All of these cards fall in the 350-380 EUR price range (I live inside the Euro zone) at local stores.

I mentioned USD in my earlier posts because a CPU that costs 275 USD in the US costs about 275 EUR in the Euro zone. This trend holds for most PC components.

With VGAs: an Nvidia GTX 960 2GB starts at 200 EUR, or 230 EUR if you want 4GB. An Nvidia GTX 970 4GB starts at 350 EUR. But then I need to buy from a store that has good RMA, and those stores carry Asus, MSI, and Gigabyte GTX 970s, which start from 420 EUR. Damn!

An EVGA Nvidia GTX 970 4GB costs around 300 USD on Newegg, but Newegg doesn't ship to where I live. Amazon does though. The cheapest GTX 970 that I can see there right now is around 360 USD, plus shipping costs. And if it breaks I have to ship it abroad. Damn. Maybe it's best if I postpone buying a VGA for a while.

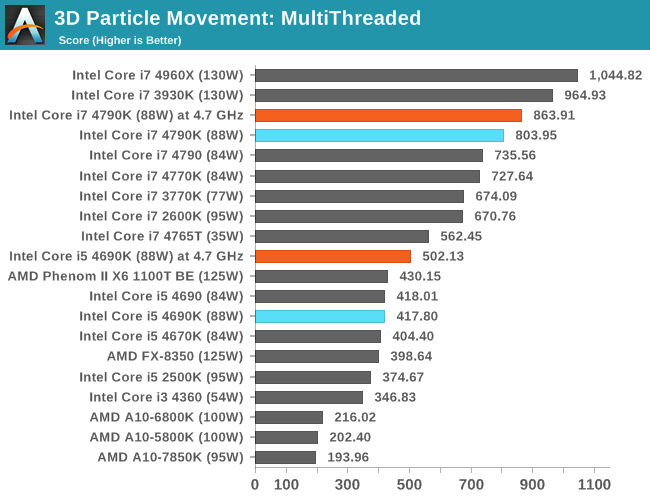

For productivity and an overall gaming setup over 4-5 years, the i7 5820K @ 4.4-4.5 GHz is way better than the i7 6700K. Why do you guys keep recommending him the i7 6700K? If multi-threaded performance is key, a 5820K OC will crush the 6700K. Also, the X99 platform has support for 8/10-core Broadwell-E and 18-core Xeons. Z170 is a dead end as far as big performance gains go.

While the 6700K OC is barely faster than the i7 4790K despite a big price increase, the i7 5820K OC actually smashes the 6700K in multi-threaded apps while delivering most of the gaming performance. With current pricing, the i7 6700K sits in no-man's land imo.

I never, ever, ever, ever overclock my machines. I don't like the idea of stressing the machine and upping the heat.

The extra heat is also the reason that I'm hesitant to go with a 'K' processor. The i7-6700K is rated at a 91 W TDP, the i7-6700 at 65 W.

I still remember the day that I unplugged my AMD Athlon XP from my motherboard and saw that the CPU socket had a slight rotation because the temperature had made the plastic soft! That day I swore I would never again have a hot CPU in my system. It makes me feel (it's not a rational decision) unsafe and uneasy. The stuff I do when I sit at my computer is important, and anything that might even slightly undermine what I'm doing there is something I would really like to avoid. OC has come a long way since the late 90s. It's gone so mainstream that today (hell, even 10 years ago!) it is pretty trivial and safe to OC your CPU. I'll definitely need to check on OC at some point in the future.

I use LR a lot (on a 4770K @ 4.4) and cannot believe how slow it is. It's the only software that makes my computer feel slow. On average, the pixel count in DSLRs has only risen 2-3x during the last ten years. Still, Adobe is incapable of creating software that runs well on modern i7 CPUs.

According to this test, LR is insensitive to hyperthreading and seems to scale reasonably well up to six physical cores. I've seen evidence that HT sometimes hurts performance.

http://www.sweclockers.com/test/20862-intel-core-i7-6700k-och-i5-6600k-skylake/9#content

Is it also slow in Develop mode? When switching from one photo to the next? When opening a new subfolder? When selecting all photos having a keyword? Do you use the CC version? (I'm on an old 3.x version).

I think Adobe has gotten lazy with LR. We're going to need some competition if we're to see a faster LR.

Would be interested to see what other RAW workflow progs are out there that have at least some of the Lightroom facilities I like: nested keywords, don't touch RAW files and write everything to XMP files, adjustment snapshots, rename based on date when importing (there are probably more).

This topic deserves its own thread. If you're inclined, open one and send me a PM to point me to it. Or if you have tried any other RAW prog, send me a PM mentioning it. I'll definitely return to this.