You caught me. I say you're trolling. You asked.

I predict the return of cartridge based CPU's (poll inside)

Page 2 - Seeking answers? Join the AnandTech community: where nearly half-a-million members share solutions and discuss the latest tech.


firewolfsm

Golden Member

- Oct 16, 2005

- 1,848

- 29

- 91

The system clearly works for GPUs, which are even larger and more energy intensive. And bandwidth, with PCIe4 and eventually 5, will start to become sufficient even for CPUs. I could see the card system being shared by both GPUs and CPUs for server farms which are going for density.

If you had say, 64 lanes of PCIe per CPU, it could connect to the two nearest GPUs with 16 lanes each, and keep 32 lanes for RAM. These three components could be interleaved across the motherboard and stick to their neighbors for most communication.
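As a rough sanity check on the lane split described above, here is a minimal Python sketch. The 64/16/32 lane figures come from the post; the per-lane throughput numbers are approximations derived from the PCIe 4.0 and 5.0 signalling rates (16 and 32 GT/s with 128b/130b encoding) and ignore protocol overhead. The names `lane_budget` and `link_bandwidth_gbps` are made up for illustration.

```python
# Approximate usable bandwidth per lane, per direction, in GB/s:
# raw rate (GT/s) * 128b/130b encoding efficiency / 8 bits per byte.
GBPS_PER_LANE = {
    "PCIe 4.0": 16 * 128 / 130 / 8,
    "PCIe 5.0": 32 * 128 / 130 / 8,
}


def lane_budget(total_lanes=64, gpus=2, lanes_per_gpu=16):
    """Split a CPU's lanes between neighbouring GPUs and RAM (hypothetical layout)."""
    gpu_lanes = gpus * lanes_per_gpu
    if gpu_lanes > total_lanes:
        raise ValueError("GPUs need more lanes than the CPU provides")
    return {"gpu": gpu_lanes, "ram": total_lanes - gpu_lanes}


def link_bandwidth_gbps(generation, lanes):
    """Approximate one-direction bandwidth of a link, ignoring protocol overhead."""
    return GBPS_PER_LANE[generation] * lanes


if __name__ == "__main__":
    budget = lane_budget()
    for gen in GBPS_PER_LANE:
        bw = link_bandwidth_gbps(gen, budget["ram"])
        print(f"{gen}: {budget['ram']} RAM lanes, about {bw:.1f} GB/s per direction")
```

This only covers peak bandwidth; it says nothing about how long an individual access over such a link would take.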


moinmoin

Diamond Member

- Jun 1, 2017

- 5,248

- 8,463

- 136

> The system clearly works for GPUs

Which have RAM on board for a reason. While new PCIe versions multiply bandwidth, latency is usually still measured in thousands of ns, a far cry from the sub-100 ns common for accessing RAM (which is what CPUs need the many pins for).
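To put the latency gap in perspective, here is a small Python sketch using the textbook average memory access time formula (hit time plus miss rate times miss penalty). The 100 ns and 1000 ns figures are the round numbers from the post above; the cache hit time and miss rate are purely hypothetical values picked for illustration, not measurements.

```python
def amat_ns(hit_time_ns, miss_rate, memory_latency_ns):
    """Average memory access time: hit time + miss rate * miss penalty."""
    return hit_time_ns + miss_rate * memory_latency_ns


# Hypothetical cache behaviour, chosen only to illustrate the formula.
HIT_TIME_NS = 1.0
MISS_RATE = 0.02

# Round figures from the post: ~100 ns for local RAM, ~1000 ns over PCIe.
local_ram = amat_ns(HIT_TIME_NS, MISS_RATE, 100.0)
over_pcie = amat_ns(HIT_TIME_NS, MISS_RATE, 1000.0)

if __name__ == "__main__":
    print(f"local RAM: {local_ram:.1f} ns average per access")
    print(f"over PCIe: {over_pcie:.1f} ns average per access")
    print(f"slowdown:  {over_pcie / local_ram:.1f}x")
```

Under these assumed numbers, even a small miss rate lets the tenfold latency difference dominate the average access time.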

DrMrLordX

Lifer

- Apr 27, 2000

- 23,147

- 13,240

- 136

> Which have RAM on board for a reason. While new PCIe versions multiply bandwidth, latency is usually still measured in thousands of ns, a far cry from the sub-100 ns common for accessing RAM (which is what CPUs need the many pins for).

AMD used to have an "HT over PCIe" function for older server platforms. I think the only iteration that ever saw the light of day was HTX. They used to use it for InfiniBand controllers and similar.

> Which have RAM on board for a reason. While new PCIe versions multiply bandwidth, latency is usually still measured in thousands of ns, a far cry from the sub-100 ns common for accessing RAM (which is what CPUs need the many pins for).

Nothing says you couldn't put RAM slots on the back of the CPU cartridge. It would probably wind up faster, and motherboard makers would rejoice, as it'd cut down their end cost.

> Nothing saying you couldn't put ram slots on the back of the cpu cartridge. Probably would wind up faster and MB makers would rejoice as it'd cut down their end cost.

You will need to use SO-DIMMs so the cartridge will be a reasonable size.

Arkaign

Lifer

- Oct 27, 2006

- 20,736

- 1,379

- 126

If done well, it could be a really cool thing that solves a number of problems. In fact, with the right design, it could even be badass for enthusiasts, if you could get good bidirectional cooling going (e.g., have the CPU die package use a conductive shell that faces both directions, perhaps 16 cores on side 'A' and 16 cores on side 'B'), with the HSF radiating in both directions.

TheELF

Diamond Member

- Dec 22, 2012

- 4,029

- 753

- 126

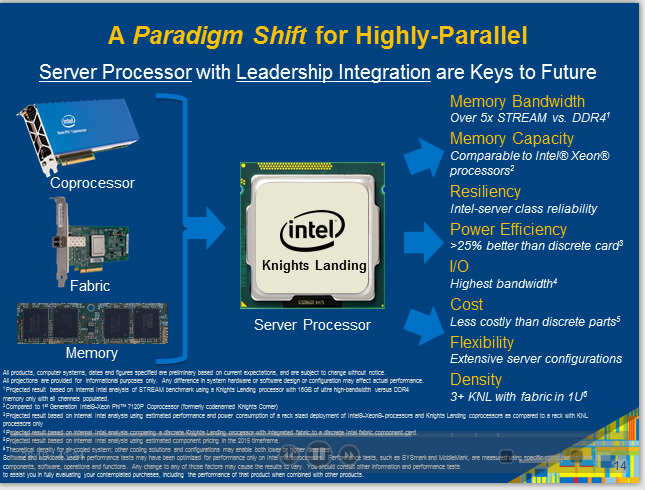

They already did a card-based CPU in Knights Landing / Xeon Phi. They don't need to reinvent the wheel; if hell ever freezes over and this becomes attractive for the mainstream market, they already have a working model.

For now these are completely useless for normal people.


Veradun

Senior member

- Jul 29, 2016

- 564

- 780

- 136

Chiplet design (whatever you want to call it) is the new cartridge design.

They used that design to pull cache off die, the limiting factor being the process technology. We are now facing the same problems with miniaturisation and the chiplet design is our new and better answer.


XMan

Lifer

- Oct 9, 1999

- 12,513

- 49

- 91

Heh. I still remember putting nickels between my Athlon's cache memory and the heat spreader.

Yes, just because I think that would be cool to see again. The PII/III era was back when I was assembling computers for a living; I probably installed several thousand Slot CPUs in my lifetime.

- Jan 8, 2011

- 10,734

- 3,454

- 136

Right. Also this. Just sayin'. Also, this. Know what I mean?

https://www.guru3d.com/news-story/qnap-releases-mustang-200-computing-accelerator-card.html


NTMBK

Lifer

- Nov 14, 2011

- 10,498

- 5,959

- 136

> Right. Also this. Just sayin'. Also, this. Know what I mean?

> https://www.guru3d.com/news-story/qnap-releases-mustang-200-computing-accelerator-card.html

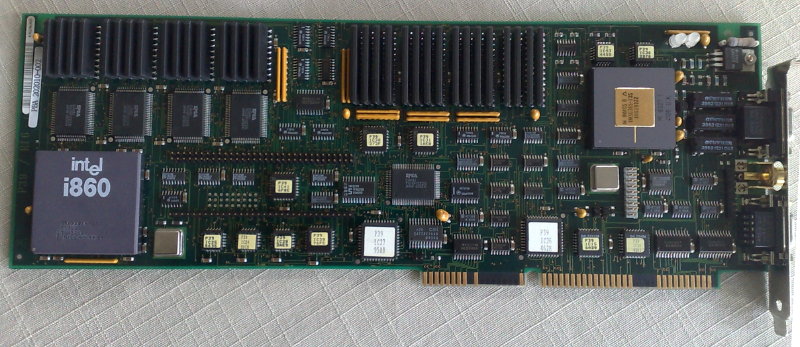

That's not the host CPU on a cartridge, that's an accelerator card that happens to use CPUs. About as relevant as the old graphics cards powered by an Intel i860.

EDIT: Or the Intel Visual Compute Accelerator, that put 3 Xeon E3s on one card so that you could use their Quicksync encoders:

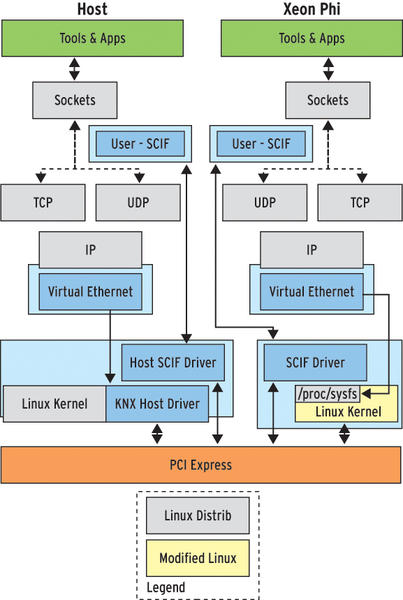

Or the Xeon Phi, that hosted an entire separate Linux OS on the PCIe card (even if the host was Windows!).


Batmeat

Senior member

- Feb 1, 2011

- 807

- 45

- 91

The last time we saw cartridge-style CPUs, I bet many of the newer users on here weren't even born yet.

Remember flip chips? You'd hook a socket-based CPU onto a board that you would then insert into the cartridge slot. It allowed faster CPUs in motherboards that otherwise wouldn't support them... if my memory serves me right.

TheELF

Diamond Member

- Dec 22, 2012

- 4,029

- 753

- 126

> That's not the host CPU on a cartridge, that's an accelerator card that happens to use CPUs. About as relevant as the old graphics cards powered by an Intel i860.

> Or the Xeon Phi, that hosted an entire separate Linux OS on the PCIe card (even if the host was Windows!).

What's that got to do with anything? Windows can only see so many CPUs/cores, and that won't change even with cartridge CPUs. If you go for too many cores for Windows to see, you will have to run some software layer for it.

NTMBK

Lifer

- Nov 14, 2011

- 10,498

- 5,959

- 136

> What's that got to do with anything? Windows can only see so many CPUs/cores, and that won't change even with cartridge CPUs. If you go for too many cores for Windows to see, you will have to run some software layer for it.

My point is that these accelerator boards aren't extra cores that the OS can just schedule an arbitrary thread on; they're standalone devices that are managed by drivers and run their own software internally. The old-school cartridge CPUs, by contrast, were just the same as a socketed CPU; they just happened to have a weird-shaped socket.

EDIT: From the Mustang-200 product description:

With a dual-CPU processor and an independent operating environment on a single PCIe card (2.0, x4),

These are standalone compute systems, which can communicate with the host over PCIe.


NTMBK

Lifer

- Nov 14, 2011

- 10,498

- 5,959

- 136

> Come on man. This is happening. Totally happening. You know this.

CPUs are way too memory bandwidth heavy, you can't get enough pins on the edge of a card. Only way the CPU would move onto a "card" is if main memory moved onto it too.

Also bear in mind that I'm talking about mainstream, high performance CPUs. There's plenty of examples out there in embedded computing of "compute modules" that put the CPU and memory onto a daughterboard, which slots into a bigger board that is basically just an I/O expander. Take a look at COM Express, or Q7.
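For a rough feel of the bandwidth side of this argument, the sketch below estimates how many PCIe 4.0 lanes it would take to match the peak bandwidth of a few DDR4-3200 memory channels. DDR4-3200 is an assumed example memory speed (it is not from the post), `lanes_to_match` is a hypothetical helper name, and the comparison uses peak one-direction numbers only, ignoring protocol overhead and latency.

```python
# Peak bandwidth of one 64-bit DDR4-3200 channel:
# 3200 million transfers/s * 8 bytes per transfer, in GB/s.
DDR4_3200_CHANNEL_GBPS = 3200e6 * 8 / 1e9

# Approximate PCIe 4.0 bandwidth per lane, per direction, in GB/s:
# 16 GT/s * 128b/130b encoding efficiency / 8 bits per byte.
PCIE4_LANE_GBPS = 16e9 * (128 / 130) / 8 / 1e9


def lanes_to_match(channels):
    """PCIe 4.0 lanes needed to equal the peak bandwidth of `channels` DDR4-3200 channels."""
    return channels * DDR4_3200_CHANNEL_GBPS / PCIE4_LANE_GBPS


if __name__ == "__main__":
    for channels in (2, 4, 8):
        total = channels * DDR4_3200_CHANNEL_GBPS
        print(f"{channels} channels: {total:.1f} GB/s, "
              f"about {lanes_to_match(channels):.0f} PCIe 4.0 lanes")
```

By this estimate, a dual-channel desktop already needs more than a full x16 link's worth of lanes, which is consistent with the point that main memory would have to move onto the card along with the CPU.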

- Jan 8, 2011

- 10,734

- 3,454

- 136

Please refer to post #23 of this thread.


> I'm with you, Bogg. I've suggested something similar in the past too. I think it makes a LOT of sense, at least to me.

> And for those claiming that there aren't enough pins, what about two or three stacked card-edge connectors? I think that the Xeon slotted CPUs utilized two rows, and Neo-Geo cartridges definitely did. Although, if CPU "carts" get that large, hmm, dunno. Maybe an AMD mega-APU? 300W with integrated water-cooling? GPU and 32-core CPU in one?

> Edit: If CPUs were in the form factor of a Neo-Geo cart (roughly VHS-sized, if you've never seen one), it might make it more likely for them to be upgraded during the lifetime of the PC. Or it could allow for just-in-time assembly of PCs: build them, and slot in the CPU just before shipping. Either way, you wouldn't need a tech, or to be a tech (like us), to swap CPUs.

> Edit: With the larger-sized carts, maybe they could include HBM2 or 3D XPoint NVDIMM-P soldered to the CPU cart, much like the L2 cache was soldered on to the Pentium II Slot 1 carts.

Looks like we nailed it, Larry. When will the naysayers learn to never question my unmatched wisdom?

AnandTech Forums: Technology, Hardware, Software, and Deals


Also, don't even worry about it, guys. You can edit your poll choices any time.


Cooling is going to be the biggest issue. A vertical slot-based CPU like in the olden days isn't going to work with today's cooling requirements. Athlons and PIIIs had super simple coolers and you still ran into space issues really quickly. You can't really get away with a 200 W CPU in that setting. The only setting where something like this would work is a PXI-like setup, with maybe some super high bandwidth connection to the master unit like PCIe 5.0, and full-on expansion boards with everything, including memory, on board. This would only work in a server setting like HPC. But you basically already have the GPU market rushing in to fill this market, and Intel is developing GPUs for this very task as well. So no. Even in the current socket setup, with EPYC and TR and their crazy number of pins, we could see 128-core CPUs as it advances, and these aren't ultra-low-power, simple cores. There isn't an onus to create a solution for a slot-based CPU.

On desktops it's even more of a mess. How do you handle retention without drastically increasing the price of boards?

Third, people need to remember why Slot 1 and Slot A existed. They existed because it seemed like the best way to connect an external cache to the CPU when the technology for including it on the same substrate as the CPU, among other things, hadn't been worked out. Now AMD and Intel, and even IBM/Microsoft with the Xbox 360, have shown on several occasions that multichip (without talking out and back in) can be done without needing a whole new interface. Zen 2 proves you can do it right without losing performance compared to a monolithic die. The use case for a slot-based CPU is extremely niche.


> Looks like we nailed it, Larry. When will the naysayers learn to never question my unmatched wisdom?

I don't know, I wouldn't call what Intel announced a slot CPU. What they actually did is reverse the direction of the PCI slot and put the whole computer (minus PCIe and aux I/O) on the daughterboard. If you look at the back of the card, it has Ethernet and USB... it's a full computer.

As for the original post, I think the limitation is pins. If we're talking high-end computing (which the OP is, since he says we're running out of space), then we need a lot of pins; look to HEDT for where pin requirements will be in the future. By including the whole computing platform on the card (minus PCIe and other I/O), they get around the pin problem, because it's not just the CPU on the slot.

Also, a different solution than slots was found: stacking, which seems to be the way the industry is going. Although, perhaps cerberos? lol

DrMrLordX

Lifer

- Apr 27, 2000

- 23,147

- 13,240

- 136

> I don't know, I wouldn't call what Intel announced a slot CPU. What they actually did is reverse the direction of the PCI slot and put the whole computer (minus PCIe and aux I/O) on the daughterboard. If you look at the back of the card, it has Ethernet and USB... it's a full computer.

Isn't that what older Phi cards were like? Kinda? Sorta? Just without the ethernet/USB . . .

> Isn't that what older Phi cards were like? Kinda? Sorta? Just without the ethernet/USB . . .

Yep, so kinda nothing new here? Well, perhaps this will do better than the Phi.

NTMBK

Lifer

- Nov 14, 2011

- 10,498

- 5,959

- 136

> Please refer to post #23 of this thread.

> Looks like we nailed it, Larry. When will the naysayers learn to never question my unmatched wisdom?

> Also, don't even worry about it, guys. You can edit your poll choices any time.

That's not a CPU on a cartridge, that's the entire CPU, chipset and memory on a daughterboard. No different from the Xeon Phi, as discussed previously.

> That's not a CPU on a cartridge, that's the entire CPU, chipset and memory on a daughterboard. No different from the Xeon Phi, as discussed previously.

I think one of the limitations of slot/cartridge-based CPUs is the cooling. These days, if you look at how tall coolers are, it would not be trivial on high-end CPUs to do the same when the CPU is orientated horizontally. Yes, they could use heat pipes, or AIOs, or something else. But the designs would always have to work around some "safe zone" so that they didn't intrude on memory or PCI slots. Basically, there is no benefit over having the CPU package with all its pins on the board like we have now.

*edit* Oh, and think about the weight of the current norm for coolers, how do you support 2+lbs of copper horizontally?
