Does anyone know of any websites that do more realistic gaming-focused testing of SSDs (level load times, stutter elimination, etc)? I know "real usage" itself is going to differ from person to person, but very few SSD "reviews" even remotely reflect my own usage. I have one 512GB SSD (soon to be 1TB) used for OS, programs & games + one secondary 3TB HDD used for data, music, video, photos, downloads, etc. After everything is installed, set up and configured during week 1, the bulk of day-to-day SSD operations from then on are typically 90-95% reads of static data, with the 5-10% of writes being little more than OS data, game saves and web browser cache. I rarely uninstall anything, and the total quantity of post-install data written is so low that on a 512GB SSD the "average block-erase count" is still in single digits after a year. Most "bulk data" is on the HDD. So tests like...

- Copying large videos, music files, etc, back & forth is utterly irrelevant to me since they're all on the much larger 3TB HDD (to maximize precious SSD space for games)

- Synthetics that max out sequential performance do not really reflect the typically lower install speed of games which are installed from a compressed installer (sometimes single-threaded) from a backup / data drive, or for older retail games even an optical disc, where they'll typically be written slower than a pure file copy / synthetic write due to a decompression phase. Likewise, if I'm ripping / burning a CD / DVD / Blu-Ray, it'll go on the HDD as the bottleneck either way is the optical drive speed. The bottleneck for video / audio encoding is the CPU, etc.

- 30-minute continuous "consistency tests" that write 150-500GB without any break do not really apply to me, or to many others who may install one 0.1-50GB game, then have a delay before installing the next one, etc. And that's assuming you put all your games on at once. The same goes for regular people putting, say, Office & Photoshop Elements on a laptop, then not much else. "Consistency" tests should really be renamed "saturation" tests.

- HTPC "trace usage" that uses wildly exaggerated demands (eg, accessing 7-8 files simultaneously in a house with 2-3 people). In our house, if we record 2 things and watch a 3rd whilst downloading a 4th, then it'll peak at 4 (normally it's 1-2). But even then, 5,400rpm HDDs in various set-top boxes can handle 3 HD streams at once (record 2 + watch 1), and every single person we know with a custom-built HTPC has a small boot SSD and either a local 2-4TB HDD, or pulls it from a NAS / media server (with a couple of 2-4TB WD Reds) where the bottleneck for multiple streams will be the Gigabit network.

- Continuous QD32 "4k"-only tests are not realistic for anything I use. Most of my queue depths are single digits, and although the web browser cache is the nearest thing that matches, it gets written at a far slower rate and many files are nearer 128k than 4k. You load a page, a dozen small files get written to / read from cache, then there's a break whilst you read the page, etc. This is neither a constant load nor one queued 32 deep, let alone both for 10-30mins solid.
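To put the "saturation" point from the consistency-test bullet above into numbers, here is a rough back-of-envelope sketch. It only uses the figures quoted in that bullet (150-500GB written in 30 minutes, games of up to 50GB); the function names are made up for illustration and it assumes decimal units (1GB = 1000MB).

```python
def sustained_rate_mb_s(total_gb, minutes):
    """Average write rate in MB/s needed to write total_gb within the given minutes.

    Assumes decimal units (1 GB = 1000 MB).
    """
    return total_gb * 1000 / (minutes * 60)


def equivalent_installs(total_gb, game_gb):
    """How many back-to-back installs of a game_gb-sized game equal total_gb."""
    return total_gb / game_gb


if __name__ == "__main__":
    for total_gb in (150, 500):
        rate = sustained_rate_mb_s(total_gb, 30)
        installs = equivalent_installs(total_gb, 50)
        print(f"{total_gb}GB in 30mins = {rate:.1f} MB/s sustained, "
              f"or {installs:.0f} x 50GB installs with no pause")
```

That works out at roughly 83-278 MB/s held for half an hour, i.e. the same as installing 3 to 10 of the very largest games back to back without a single pause.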

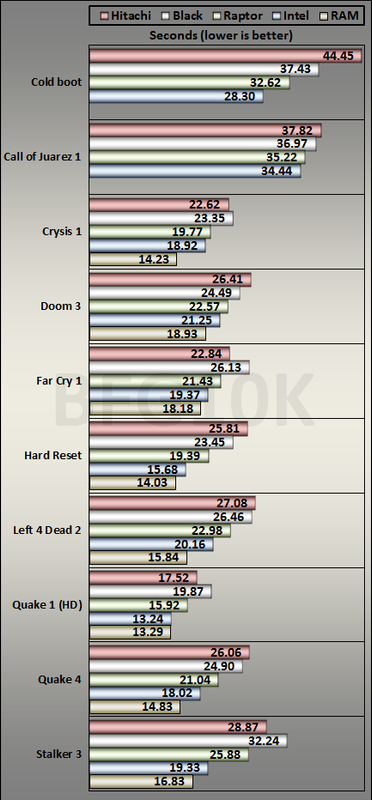

To give one extreme example of the massive "synthetic vs observable reality" disparity, the Samsung 950 PRO is highly impressive in being 3-4x theoretically faster than most SATA bottlenecked drives, and yet is actually losing to the cheap MX200 in multiple games:-

http://techreport.com/review/29221/samsung-950-pro-512gb-ssd-reviewed/4

Now I expected the "real world" gap to be narrower, but a budget MX200 loading levels faster than a 110% more expensive 950 PRO M.2 during actual gaming? And some review sites conclude that "the 512GB 950 PRO will be popular amongst gamers" (over a 1TB MX200 / Samsung EVO for less money)...

Is there some site out there that actually attempts to seriously address this stuff in detail beyond token "PCMark 7 trace" 'realism' tests? Like a clean install of 20 games with a range of "many small files" and "few large files" installs, then measuring performance, level load times, stutter elimination in open-world games that "burst stream" in data, etc? Or benchmarking game install times from actual packed game installers (eg, a large downloaded GOG exe) rather than simple file copies or synthetically produced data? So far the Tech Report is the only one that's actually tried to measure even simple things like level load times.
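As a very rough idea of the kind of measurement I mean, here's a minimal Python sketch that reads every file under a game's install folder once and reports how long it took, plus how many of the files were "small". It is only a crude proxy for a level load (a real game reads selectively and decompresses as it goes), the function name and the 128k "small file" cut-off are my own assumptions, and the numbers only mean anything on a cold run, i.e. after a reboot or with the OS file cache otherwise emptied.

```python
import time
from pathlib import Path


def time_directory_read(root, chunk_size=1 << 20, small_file_limit=128 * 1024):
    """Read every file under root once; return file counts, bytes read and seconds taken.

    small_file_limit is an arbitrary illustrative cut-off for "small" files.
    """
    # Build the file list before starting the clock so directory walking is not timed.
    files = sorted(p for p in Path(root).rglob("*") if p.is_file())
    total_bytes = 0
    small_files = 0
    start = time.perf_counter()
    for path in files:
        size = 0
        # buffering=0 avoids Python-level buffering; the OS cache still applies.
        with open(path, "rb", buffering=0) as handle:
            while True:
                chunk = handle.read(chunk_size)
                if not chunk:
                    break
                size += len(chunk)
        total_bytes += size
        if size < small_file_limit:
            small_files += 1
    elapsed = time.perf_counter() - start
    return {
        "files": len(files),
        "small_files": small_files,
        "bytes": total_bytes,
        "seconds": elapsed,
    }
```

Run it against one "many small files" install and one "few large files" install on each drive, and you'd at least have something closer to game usage than a QD32 synthetic.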

Surely I can't be the only one who wants at least one review website to try something a little different from the usual: "7 out of 8 pages contain unrealistic workloads for any client use but we included them because..." comprising 90% of the review, with a single page of half-hearted "real" data tucked in somewhere and treated almost like an aberration (in the 1 review out of 5 that even bothers with that), openly contradicting everything previously written, before ending with some very iffy untested conclusions for "gamers"...
