Irony much?

The key to using less power while watching videos online is hardware decode, in case you hadn't noticed.

Edit: And while I've heard that online streaming (uploading) gets better quality from software ENCODING than from hardware ENCODING, I've never heard anyone say bad things about hardware DECODING, as long as the format is one the hardware is capable of decoding.

Spend some time in the madVR thread on Doom9. madshi is forever going back and forth with Intel, AMD, and Nvidia over their decode issues. If you care about accuracy you should not be using hardware decode.

So moms don't share large, high-quality original photos of a wedding or any number of other events, or large video files of their family?

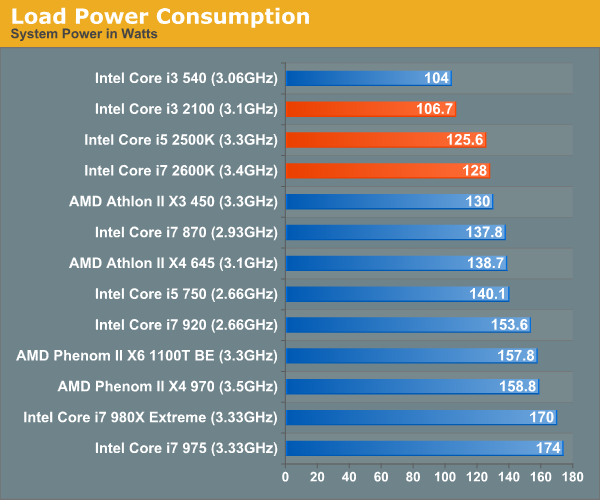

Power management has gotten a lot better over the years, and you have to factor in the whole system.

We're talking about using it through 2024. The hardware will be at least 12 years old at that point, and things will fail.

And VirtualLarry covered the hardware decode part.

Why do people continually seem to think that Sandy is the same as a dual-socket 771 server? An HP DL380 G5 will pull ~500W; that doesn't mean Sandy does.

AnandTech's review doesn't list system power for the 2200G, but package power alone is 53W, which is in the same range as the i5-2500.

Also, in my experience AMD systems last around half as long as Intel ones, so a new AMD system isn't likely to give you more longevity than a used Intel one.

I have a stable full of Lynnfields, Sandys, and Ivies all running like new, while my Phenom IIs are all junked.