- Jan 15, 2013

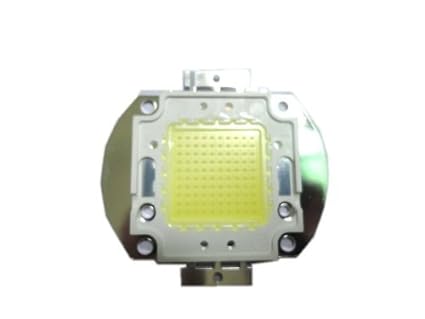

Problem: I have a projector with an LED chip lamp. I want to measure the current output of the projector's power supply and thus ascertain the LED's wattage.
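Once the current is known, the wattage follows from P = V × I, where V is the voltage across the LED and I is the current through it. Below is a minimal sketch of that relationship. The function name and the readings in the usage line are hypothetical, not measurements from this projector:

```python
def led_power_watts(voltage_v: float, current_a: float) -> float:
    """Electrical power drawn by the LED: P = V * I, in watts."""
    return voltage_v * current_a

# Hypothetical readings, for illustration only: 12 V across the LED, 1.5 A through it.
print(led_power_watts(12.0, 1.5))  # 18.0
```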

I can't measure the current from the power supply directly: my multimeter reads 0 A (possibly because the meter draws too much current, so the power supply treats it as a short and shuts itself off). I don't have a Kill-A-Watt.
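For context: on its current range a multimeter has very low resistance, so connecting it directly across a supply's output is effectively a short circuit. To read the load current, the meter has to be in series with the LED. An alternative, offered here as an assumption rather than something from the original post, is to place a small known resistor (a "shunt") in series with the LED, measure the voltage across it on the meter's voltage range, and apply Ohm's law, I = V / R. A sketch with a hypothetical function name and hypothetical values:

```python
def current_from_shunt(v_shunt_v: float, r_shunt_ohm: float) -> float:
    """Current through a series shunt resistor via Ohm's law: I = V / R, in amps."""
    if r_shunt_ohm <= 0:
        raise ValueError("shunt resistance must be positive")
    return v_shunt_v / r_shunt_ohm

# Hypothetical example: 0.2 V measured across a 0.1 ohm shunt in series with the LED.
print(current_from_shunt(0.2, 0.1))
```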

1. How can I measure the current output without a multimeter? One unscientific method would be to light the LED from a known power supply and compare its brightness with that from the unknown power supply, but I don't have a light meter either.

2. How far can an LED chip lamp safely be overdriven? For example, if it's rated for 20 W, can I run it at 30 W with enough cooling?
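The overload in the second question is simple arithmetic: 30 W on a 20 W rating is 1.5 times the rated power, i.e. 50% over. A small helper (hypothetical name) that expresses any overdrive as a percentage above the rating:

```python
def overdrive_percent(rated_w: float, applied_w: float) -> float:
    """Percentage by which the applied power exceeds the rated power."""
    return (applied_w / rated_w - 1.0) * 100.0

# The example from the question: 30 W applied to a lamp rated for 20 W.
print(overdrive_percent(20.0, 30.0))  # 50.0
```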
