http://wccftech.com/hitman-lead-dev-dx12-gains-time-ditching-dx11/

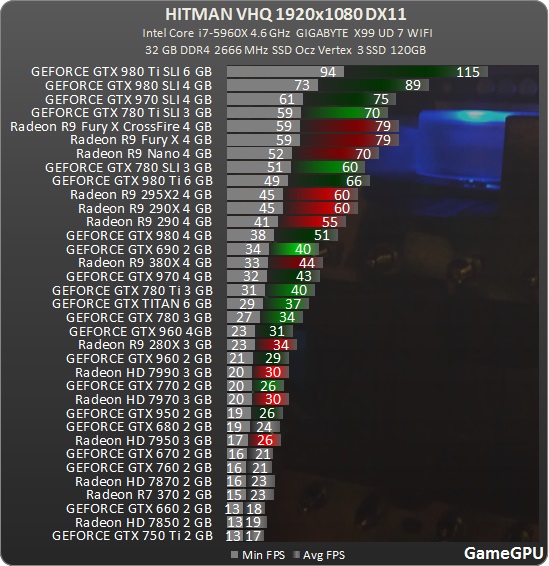

HITMAN on DirectX 12 only offers approximately a 10% performance boost on AMD cards. Are you planning to improve DirectX 12 performance and/or add any further DX12 features via patch and if so, which ones?

We don't have any DX12-specific improvements planned for our subsequent releases. While we see a 10% improvement when completely GPU bound, I think there are gains above that when you're CPU bound.
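The difference between GPU-bound and CPU-bound gains can be illustrated with a toy model. This is a minimal sketch that assumes CPU and GPU work overlap perfectly, so each frame takes as long as the slower of the two. The function names and all millisecond figures are hypothetical and are not measurements from HITMAN. The sketch shows that cutting CPU overhead (the main thing a low-level API like DX12 targets) does nothing while the GPU is the bottleneck, but raises the frame rate directly once the CPU is the bottleneck.

```python
def frame_time_ms(cpu_ms: float, gpu_ms: float) -> float:
    """Toy model (assumption): CPU and GPU overlap perfectly,
    so the slower side sets the frame time."""
    return max(cpu_ms, gpu_ms)


def fps(cpu_ms: float, gpu_ms: float) -> float:
    """Frames per second implied by the toy frame-time model."""
    return 1000.0 / frame_time_ms(cpu_ms, gpu_ms)


# Hypothetical per-frame costs in milliseconds (not HITMAN measurements).
# GPU-bound scene: trimming CPU overhead alone changes nothing...
gpu_bound_dx11 = fps(cpu_ms=10.0, gpu_ms=20.0)
gpu_bound_cpu_only = fps(cpu_ms=6.0, gpu_ms=20.0)
# ...only making the GPU side faster (20 ms -> 18 ms) helps.
gpu_bound_dx12 = fps(cpu_ms=6.0, gpu_ms=18.0)

# CPU-bound scene: the same kind of CPU savings shows up directly in frame rate.
cpu_bound_dx11 = fps(cpu_ms=25.0, gpu_ms=12.0)
cpu_bound_dx12 = fps(cpu_ms=15.0, gpu_ms=12.0)

for label, value in [
    ("GPU bound, baseline", gpu_bound_dx11),
    ("GPU bound, CPU savings only", gpu_bound_cpu_only),
    ("GPU bound, faster GPU side", gpu_bound_dx12),
    ("CPU bound, baseline", cpu_bound_dx11),
    ("CPU bound, CPU savings", cpu_bound_dx12),
]:
    print(f"{label}: {value:.1f} fps")
```

Real engines do not overlap CPU and GPU work perfectly, so this only captures the intuition behind the answer, not actual DX12 behavior.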

NVIDIA cards, on the other hand, have basically the same performance under DX11 and DX12. You mentioned during your GDC 2016 talk that you're working with NVIDIA to improve their Async Compute implementation. How are things looking on that end, and do you have an ETA?

I don't have any news on that front, sorry.

Async Compute in particular has received a lot of attention from PC enthusiasts, specifically with regard to NVIDIA GPUs lacking hardware support for it. However, in the GDC 2016 talk you said that even AMD cards only got a 5-10% boost, and you described Async Compute as "super hard to tune" because too much async work can turn it into a penalty. Is it fair to say that the importance of Async Compute has been overstated compared to other factors that determine performance? Do you think NVIDIA may be in trouble if Pascal doesn't implement a hardware solution for Async Compute?

The main reason it's hard is that every GPU ideally needs custom tweaking: the bandwidth-to-compute ratio is different for each GPU, so the amount of async work ideally has to be tuned for each one. I don't think it's overstated, but obviously YMMV (your mileage may vary). In its current state, Async Compute is a nice and easy performance win. In the long run, it will be interesting to see whether GPUs get better at running parallel work, since we could potentially get even bigger wins.

Several DirectX 12 games are out now, but the first results aren't nearly as positive as Microsoft's claims (up to 20% more performance from the GPU and up to 50% more performance from the CPU). Do you think it's just a matter of time before developers learn how to use the new API, or have the performance benefits been somewhat overestimated?

I think it will take a bit of time, and the drivers and games need to mature and do the right things. Just reaching parity with DX11 is a lot of work. 50% more performance from the CPU is possible, but it depends a lot on your game, the driver, and how well they work together. Improving performance by 20% when GPU bound will be very hard, especially when you have a DX11 driver team trying to improve performance on the platform as well. It's worth mentioning that we only did a straight port. Once we start using some of the new features of DX12, it will open up a lot of new possibilities, and then those gains will definitely be possible. We probably won't start on those features until we can ditch DX11, since a lot of them require fundamental changes to our render code.

Do you believe HITMAN's DirectX 12 performance could have been better if the game had been built entirely on it, rather than having DX11 as the min spec?

Yes, it obviously would have. DX12 includes new hardware features, which I think over time will make it possible for us to make games run even better.

Another low-level API was recently released: Vulkan. What do you think of it in terms of performance and features? Do you have any plans to add Vulkan support to HITMAN?

Vulkan is a graphics programmer's wet dream: a high-performance API, like D3D12, for all platforms. With that said, we don't have any plans to add Vulkan support to Hitman. Also, unfortunately, it looks like Vulkan won't be supported on all platforms.

Finally, as a developer, what are your thoughts on the Universal Windows Platform?

I'm not qualified to talk about UWP; I don't know anything about it. What I can say is that I really like the Win32 API: it's been around forever, it's well documented, and it works.
