- Mar 27, 2009

Here is a chart (which I found on another forum, posted by user "bladerash") showing when Intel and AMD each reached every process node over the years:

Intel

| Node | Date | Chip |
|---|---|---|
| 250nm | January 1998 | Deschutes |
| 180nm | 25 October 1999 | Coppermine |
| 130nm | July 2001 | Tualatin |
| 90nm | February 2004 | Prescott |
| 65nm | January 2006 | Cedar Mill |
| 45nm | January 2008 | Wolfdale |
| 32nm | 7 January 2010 | Clarkdale |
| 22nm | January 2012 (?) | Ivy Bridge |

AMD

| Node | Date | Chip |
|---|---|---|
| 250nm | 6 January 1998 | K6 "Little Foot" |
| 180nm | 23 June 1999 | Athlon (original) |
| 130nm | 10 June 2002 | Thoroughbred |
| 90nm | 14 October 2004 | Winchester |
| 65nm | 5 December 2006 | Brisbane |
| 45nm | 8 January 2009 | Deneb |
| 32nm | 30 June 2011 | Llano |

If this chart is correct, AMD and Intel were once on par with each other (with AMD actually beating Intel to the 180nm node).

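But look at things today: AMD is 18 months behind Intel on the 32nm node. Furthermore, Intel is about to release its 22nm FinFET process technology, which will probably widen the gap even more (see quote below).

http://www.anandtech.com/show/4318/intel-roadmap-ivy-bridge-panther-point-ssds/1

> The shrink to 22nm and 3D transistors (FinFET) almost represents a two-node process technology jump, so we expect performance at various power levels to increase quite a bit.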
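The node-by-node gaps in the chart can be checked with a little date arithmetic. This Python sketch is not part of the original chart; it assumes the 1st of the month wherever the chart gives only a month, and it leaves out Intel's 22nm entry because that date is marked as uncertain. It reports how many months after Intel AMD reached each node, with a negative number meaning AMD got there first.

```python
from datetime import date

# Dates transcribed from the chart. Where only a month is given (most Intel
# entries), the 1st of that month is ASSUMED. Intel 22nm is omitted because
# the chart marks its date as uncertain.
INTEL = {
    250: date(1998, 1, 1),
    180: date(1999, 10, 25),
    130: date(2001, 7, 1),
    90: date(2004, 2, 1),
    65: date(2006, 1, 1),
    45: date(2008, 1, 1),
    32: date(2010, 1, 7),
}
AMD = {
    250: date(1998, 1, 6),
    180: date(1999, 6, 23),
    130: date(2002, 6, 10),
    90: date(2004, 10, 14),
    65: date(2006, 12, 5),
    45: date(2009, 1, 8),
    32: date(2011, 6, 30),
}

DAYS_PER_MONTH = 365.25 / 12  # average month length


def amd_lag_months(node_nm: int) -> float:
    """Months by which AMD reached `node_nm` after Intel (negative = AMD first)."""
    return (AMD[node_nm] - INTEL[node_nm]).days / DAYS_PER_MONTH


if __name__ == "__main__":
    for node in sorted(INTEL, reverse=True):
        print(f"{node:>4} nm: AMD {amd_lag_months(node):+6.1f} months vs Intel")
```

With these assumptions the 32nm gap comes out at about 17.7 months, consistent with the "18 months" figure, and 180nm is the only node where AMD comes out ahead (by about 4 months).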

This brings me to my question:

We've all heard about using the GPU for energy-efficient computing, but what about AMD adding Bobcat CPU cores to the equation, alongside its large CPU cores? Could this help close the gap in battery run time?

A good example of mixing large and small CPU cores is ARM's upcoming "big.LITTLE" arrangement, which couples Cortex-A15 cores with Cortex-A7 cores.

http://www.arm.com/products/processors/technologies/bigLITTLEprocessing.php

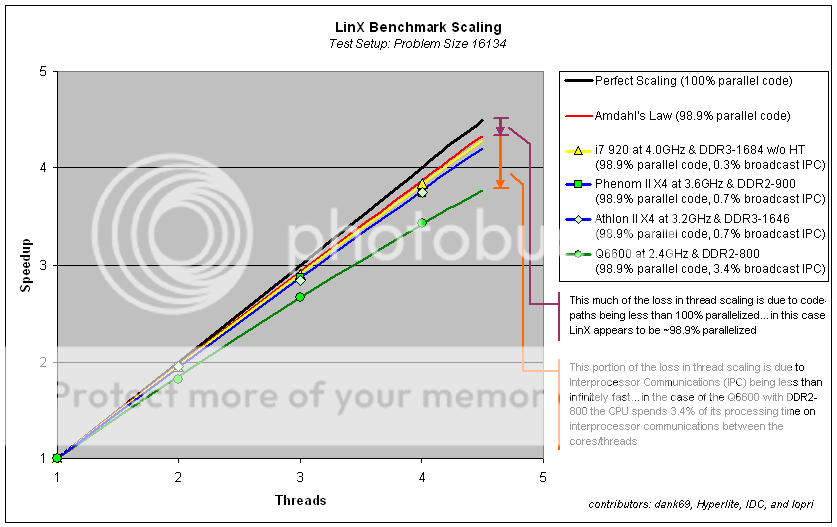

Even though the graph above has no scale, I'd imagine the power savings would be significant.

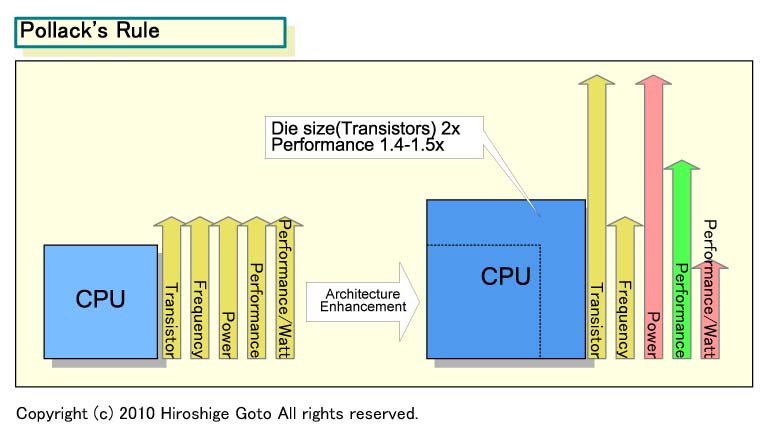
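To make the big.LITTLE idea concrete for a chip that pairs large cores with Bobcat cores, here is a minimal Python sketch of one possible switching policy. It is a hypothetical illustration, not ARM's or AMD's actual scheduler: the `Switcher` class and both utilization thresholds are made up for this example. Light loads stay on the small cores, and work moves to the big cores only when utilization crosses a high threshold.

```python
from dataclasses import dataclass

# HYPOTHETICAL thresholds, chosen only for illustration.
UP_THRESHOLD = 0.85    # move work to the big cores above this utilization
DOWN_THRESHOLD = 0.30  # move work back to the small cores below this


@dataclass
class Switcher:
    """Sketch of a big/small cluster-switching policy with hysteresis.

    Starts on the small (low-power) cores and moves to the big cores only
    when utilization is high. The gap between the two thresholds keeps a
    load that hovers near one threshold from bouncing between clusters.
    """
    on_big: bool = False

    def update(self, utilization: float) -> str:
        if not self.on_big and utilization > UP_THRESHOLD:
            self.on_big = True
        elif self.on_big and utilization < DOWN_THRESHOLD:
            self.on_big = False
        return "big" if self.on_big else "small"


if __name__ == "__main__":
    s = Switcher()
    trace = [0.1, 0.5, 0.9, 0.6, 0.4, 0.2, 0.1]
    print([s.update(u) for u in trace])
```

For that trace the policy stays on the small cores until the 0.9 sample, stays on the big cores through 0.6 and 0.4, and drops back at 0.2. Keeping the thresholds far apart matters because every switch costs time and energy to migrate work, which would eat into the savings.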

The downside for AMD of a "big.LITTLE"-style design would be increased die area, but Bobcat cores are pretty small: about 4.6 mm² each on the 40nm node.
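A quick back-of-envelope for that die-area cost, as a Python sketch. The 4.6 mm² per core at 40nm comes from the post; the ideal (feature size squared) shrink to 32nm and the 200 mm² host die are assumptions for illustration only. Real shrinks usually fall short of ideal scaling, and the per-core figure may not include shared resources such as L2 cache.

```python
BOBCAT_CORE_MM2_40NM = 4.6  # Bobcat core area on 40nm, as quoted in the post
HOST_DIE_MM2 = 200.0        # HYPOTHETICAL host die size, for illustration only


def ideal_shrink(area_mm2: float, from_nm: float, to_nm: float) -> float:
    """Scale an area by (to_nm / from_nm) ** 2, i.e. an ideal optical shrink.

    Real designs rarely shrink this well, so treat this as a best case.
    """
    return area_mm2 * (to_nm / from_nm) ** 2


def added_area_mm2(cores: int, node_nm: float = 40.0) -> float:
    """Extra silicon for `cores` Bobcat-sized cores on `node_nm`."""
    return cores * ideal_shrink(BOBCAT_CORE_MM2_40NM, 40.0, node_nm)


if __name__ == "__main__":
    for cores in (1, 2, 4):
        for node in (40.0, 32.0):
            extra = added_area_mm2(cores, node)
            share = 100 * extra / HOST_DIE_MM2
            print(f"{cores} core(s) @ {node:.0f}nm: {extra:5.1f} mm^2 "
                  f"({share:.1f}% of a {HOST_DIE_MM2:.0f} mm^2 die)")
```

Two cores come to 9.2 mm² at 40nm, or about 5.9 mm² with an ideal shrink to 32nm.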
