Thanks for spotting this, it looks like pretty good news. I was beginning to think AMD was having it all their way, when what I'd really like is good competition between the two, giving us better cards and choice. Look forward to the 69xx series and how they compare.

GTX580 reviews thread



Very impressive. If nvidia had come out with this card at their 480 launch like they were supposed to, it could have made up for them being nearly half a year late. It's still a welcome boost, though.

Think about this for a second: if Nvidia had come out with this card back in April, then the gtx470 would have had the performance of what is now a gtx480. That means Nvidia would have had no reason to gimp gf104 and would have released a full on 384 shader card, which would have been faster than an hd5870. So Nvidia would have had 3 GPUs faster than AMD's single best GPU.

Things would have been extremely lopsided from April 2010 till 28nm.

happy medium

Lifer

- Jun 8, 2003

- 14,387

- 480

- 126

About the limiter.......

"At this time the limiter is only engaged when the driver detects Furmark / OCCT, it is not enabled during normal gaming. NVIDIA also explained that this is just a work in progress with more changes to come. From my own testing I can confirm that the limiter only engaged in Furmark and OCCT and not in other games I tested."

Die size?

"According to NVIDIA, the die size of the GF110 graphics processor is 520 mm²."

--------------------------------------------------------------------------------

"According to NVIDIA, the die size of the GF110 graphics processor is 520 mm²."

A MASSIVE 10mm^2 smaller than gf100!

If the average power draw at load under gaming is 197w, then 2 of these bad boys can definitely be put on one card, down clocked to ~725, to make one ultra expensive, ultra fast x2 card.

Daedalus685

Golden Member

- Nov 12, 2009

- 1,386

- 1

- 0

Think about this for a second: if Nvidia had come out with this card back in April, then the gtx470 would have had the performance of what is now a gtx480. That means Nvidia would have had no reason to gimp gf104 and would have released a full on 384 shader card, which would have been faster than an hd5870. So Nvidia would have had 3 GPUs faster than AMD's single best GPU.

Things would have been extremely lopsided from April 2010 till 28nm.

Yeah but ifs and buts and all that.

It is just as likely we would have seen a 5890 in the summer if Fermi was full powered out of the gate. Alternatively, AMD likely expected Fermi to be this powerful in the first place (at least in an "oh no" sort of way) and were fully prepared to start a 4800 style price war.

That and there is no guarantee the 460 was released without the extra cores for positioning vs binning. It may have been the same card we see right now regardless.

muskie32

Diamond Member

- Sep 13, 2010

- 3,115

- 7

- 81

Pretty flippin' impressed, thing is a screamer.

.

Same comment :awe:

Daedalus685

Golden Member

- Nov 12, 2009

- 1,386

- 1

- 0

If the average power draw at load under gaming is 197w, then 2 of these bad boys can definitely be put on one card, down clocked to ~725, to make one ultra expensive, ultra fast x2 card.

Perhaps. One of these is still more power hungry than a 5970, a card many thought would never be made due to power constraints, and is handily under clocked to fit into them.

happy medium

Lifer

- Jun 8, 2003

- 14,387

- 480

- 126

If the average power draw at load under gaming is 197w, then 2 of these bad boys can definitely be put on one card, down clocked to ~725, to make one ultra expensive, ultra fast x2 card.

Rumor has 2 GTX 570s on a dual-GPU card.

Yeah but ifs and buts and all that.

It is just as likely we would have seen a 5890 in the summer if Fermi was full powered out of the gate. Alternatively, AMD likely expected Fermi to be this powerful in the first place (at least in an "oh no" sort of way) and were fully prepared to start a 4800 style price war.

The 5890 never came out because the 5870 does not scale well with clock speed increases. Otherwise they definitely would have released it since there was a huge pricing gap between the hd5870 and hd5970.

That and there is no guarantee the 460 was released without the extra cores for positioning vs binning. It may have been the same card we see right now regardless.

Who are you kidding? The only reason Nvidia never released a fully unlocked gf104 card was because it would have completely rendered the gtx470 a worthless purchase.

Rumor has 2 GTX 570s on a dual-GPU card.

Rumors also pegged GF110 to be released in January with either 768 shaders or 512 super-scalar shaders (ala GF104) and Barts to have 2+2 shader configuration. So about those rumors....

PingviN

Golden Member

- Nov 3, 2009

- 1,848

- 13

- 81

Rumor has 2 GTX 570s on a dual-GPU card.

Not another "rumor" indicating a dual fermi board... Now it's just getting silly.

happy medium

Lifer

- Jun 8, 2003

- 14,387

- 480

- 126

Rumors also pegged GF110 to be released in January with either 768 shaders or 512 super-scalar shaders (ala GF104) and Barts to have 2+2 shader configuration. So about those rumors....

I think they are revising the gf104 chip in January, just like the gf100 was with the gf110.

Daedalus685

Golden Member

- Nov 12, 2009

- 1,386

- 1

- 0

The 5890 never came out because the 5870 does not scale well with clock speed increases. Otherwise they definitely would have released it since there was a huge pricing gap between the hd5870 and hd5970.

No, it does not scale well with clocks. However, one cannot make blind assumptions about could-haves on one side while assuming the other side stays static. Many things could have happened differently, including a 5890 that scales better with clocks due to "minor tweaks".

The fact is this was not how things went, and had they changed for one team they could have been drastically different for the other... What if 32nm at glofo worked and the true 6000 series released in the fall, with a true 5000 refresh months ago? What if ATI had a refresh planned that scaled well but scrapped it given the market and the need to rework the now-scrapped 32nm parts for 40nm? It is a silly thing to speculate on.

Who are you kidding? The only reason Nvidia never released a fully unlocked gf104 card was because it would have completely rendered the gtx470 a worthless purchase.

I am not kidding anyone. I have no evidence they could have at any time, so why would I believe it when they didn't? There are likely many reasons the 460 was not a fully enabled GPU. As it was, the 460, at stock and overclocked, killed off the 465 and 470 anyway. If it were up to me I would have entirely killed the 470 if it meant more sales over the 5870 on the whole, if I were able to. Obviously it is not up to me.

Until they release a GF104 with full cores I have no evidence that they are capable at all, so why would I believe they could have all along? It certainly would have destroyed the 6800s if they had released it before, as the 580 is before the 6900s.

happy medium

Lifer

- Jun 8, 2003

- 14,387

- 480

- 126

No, it does not scale well with clocks. However, one cannot make blind assumptions about could-haves on one side while assuming the other side stays static. Many things could have happened differently, including a 5890 that scales better with clocks due to "minor tweaks".

The fact is this was not how things went, and had they changed for one team they could have been drastically different for the other... What if 32nm at glofo worked and the true 6000 series released in the fall, with a true 5000 refresh months ago? What if ATI had a refresh planned that scaled well but scrapped it given the market and the need to rework the now-scrapped 32nm parts for 40nm? It is a silly thing to speculate on.

I am not kidding anyone. I have no evidence they could have at any time, so why would I believe it when they didn't? There are likely many reasons the 460 was not a fully enabled GPU. As it was, the 460, at stock and overclocked, killed off the 465 and 470 anyway. If it were up to me I would have entirely killed the 470 if it meant more sales over the 5870 on the whole, if I were able to. Obviously it is not up to me.

Until they release a GF104 with full cores I have no evidence that they are capable at all, so why would I believe they could have all along? It certainly would have destroyed the 6800s if they had released it before, as the 580 is before the 6900s.

Come on dude? All this and nothing about the gtx580? start a new thread.:\

cusideabelincoln

Diamond Member

- Aug 3, 2008

- 3,275

- 46

- 91

Well now I hope reviewers stop testing Furmark power consumption. I thought this number was useless before, but now it most definitely is. Give us power numbers from real life gaming situations, please and thank you.

And as a request to AT: I would like to see average power consumption under a gaming situation along with maximum figures.


happy medium

Lifer

- Jun 8, 2003

- 14,387

- 480

- 126

Well now I hope reviewers stop testing Furmark power consumption. I thought this number was useless before, but now it most definitely is. Give us power numbers from real life gaming situations, please and thank you.

And as a request to AT: I would like to see average power consumption under a gaming situation along with maximum figures.

+1

Well now I hope reviewers stop testing Furmark power consumption. I thought this number was useless before, but now it most definitely is. Give us power numbers from real life gaming situations, please and thank you.

And as a request to AT: I would like to see average power consumption under a gaming situation along with maximum figures.

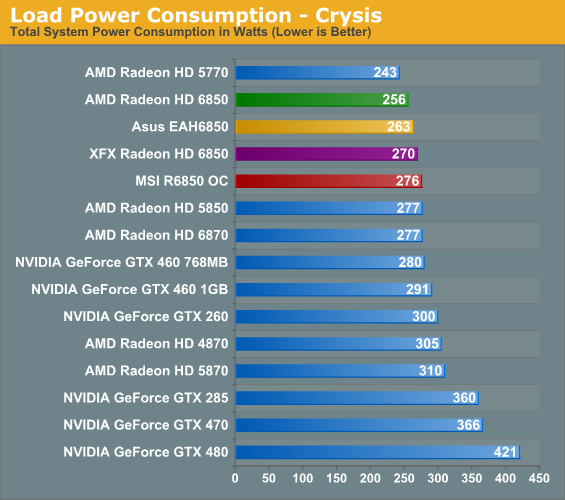

AT already does this. They give power load under furmark and crysis.

GaiaHunter

Diamond Member

- Jul 13, 2008

- 3,731

- 428

- 126

Well now I hope reviewers stop testing Furmark power consumption. I thought this number was useless before, but now it most definitely is. Give us power numbers from real life gaming situations, please and thank you.

And as a request to AT: I would like to see average power consumption under a gaming situation along with maximum figures.

erm...

Daedalus685

Golden Member

- Nov 12, 2009

- 1,386

- 1

- 0

Come on dude? All this and nothing about the gtx580? start a new thread.:\

I'll grant you that it was off topic, but it does not deserve a new thread simply to respond to something that started "on topic" in this one. On topic does tend to diverge. It is natural, and I feel no need to avoid pointing out and discussing points raised by others.

I have given my words for the 580, it is a great product.

Though, what is more off topic, the post claiming off topic and nothing else, or a direct response to other comments in this thread?

I will avoid continuing an off topic discussion though,

My apologies to all.

Trading blows with the 5970. Speaking of 5970s... they are slowly being taken off newegg...

Noticed it too. Must be to make space for 6990...

Pretty flippin' impressed, thing is a screamer.

Still looks to trail the 5970 overall though.

If we assume it's 2x 470 clocked down to fit into power/thermal envelope - I bet it is - it's pretty obvious it won't be faster than 5970, rather match it, best case scenario...

...until 6970/6990 arrives next week, of course.

I have to agree with Charlie here, it's mostly a showoff for the investor call, it's probably way more expensive to make than even a GTX480. We'll have to see the availability first - if it's low then it's just a smokescreen.

cusideabelincoln

Diamond Member

- Aug 3, 2008

- 3,275

- 46

- 91

AT already does this. They give power load under furmark and crysis.

Furmark is useless, and their Crysis test does not give me what I want in the average.

Let me clarify, since you obviously didn't get what I was saying: I want the maximum power consumption under a typical gaming situation. You are right, AT already does this with the Crysis test. However, I also want the average power consumption over time. So AT could log the power usage over a 5 minute Crysis demo. This will incorporate the highs, lows, and middles of power consumption for a typical card. With this information you can more accurately estimate total energy use; you can't just use the maximum or peak value to do this.
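To make the average-versus-peak point concrete, here is a minimal sketch of how a logged power trace could be turned into an average and a total energy figure. Everything in it is an assumption for illustration: the function name `energy_and_average`, the (seconds, watts) log format, and the sample readings are made up and are not measurements of any card. It integrates power over time with the trapezoid rule and divides by the run length.

```python
def energy_and_average(samples):
    """Return (energy in joules, average watts, peak watts).

    samples: list of (time_s, watts) pairs sorted by time.
    Hypothetical log format, chosen only for this example.
    """
    if len(samples) < 2:
        raise ValueError("need at least two samples")
    duration_s = samples[-1][0] - samples[0][0]
    if duration_s <= 0:
        raise ValueError("samples must span a positive amount of time")

    # Trapezoid rule: area under the power curve between each pair of samples.
    energy_j = 0.0
    for (t0, p0), (t1, p1) in zip(samples, samples[1:]):
        energy_j += 0.5 * (p0 + p1) * (t1 - t0)

    avg_w = energy_j / duration_s
    peak_w = max(p for _, p in samples)
    return energy_j, avg_w, peak_w


# Made-up readings over a 5 minute run, one sample per minute.
log = [(0, 150.0), (60, 200.0), (120, 250.0),
       (180, 200.0), (240, 150.0), (300, 150.0)]

energy_j, avg_w, peak_w = energy_and_average(log)
print(f"average {avg_w:.0f} W, peak {peak_w:.0f} W, "
      f"energy {energy_j / 3600:.1f} Wh")
```

With these invented numbers the average comes out well below the peak, which is why an energy estimate based on the peak alone would overstate what the card actually uses over the run.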

I'm really shocked why a few of you didn't get what I was saying. I first said Furmark was useless. Then I said I wanted maximum values. I should have spelled it out for you guys, but I meant maximum under a gaming situation, not maximum under Furmark. Because... yeah... I stated with vehemence that Furmark was useless... Jesus.


happy medium

Lifer

- Jun 8, 2003

- 14,387

- 480

- 126

If we assume it's 2x 470 clocked down to fit into power/thermal envelope

I was assuming 2 fully clocked gtx570's (as fast as a gtx 480 each) to compete with a 6990 dual card in December. 6970 and 6950's are next week correct?
