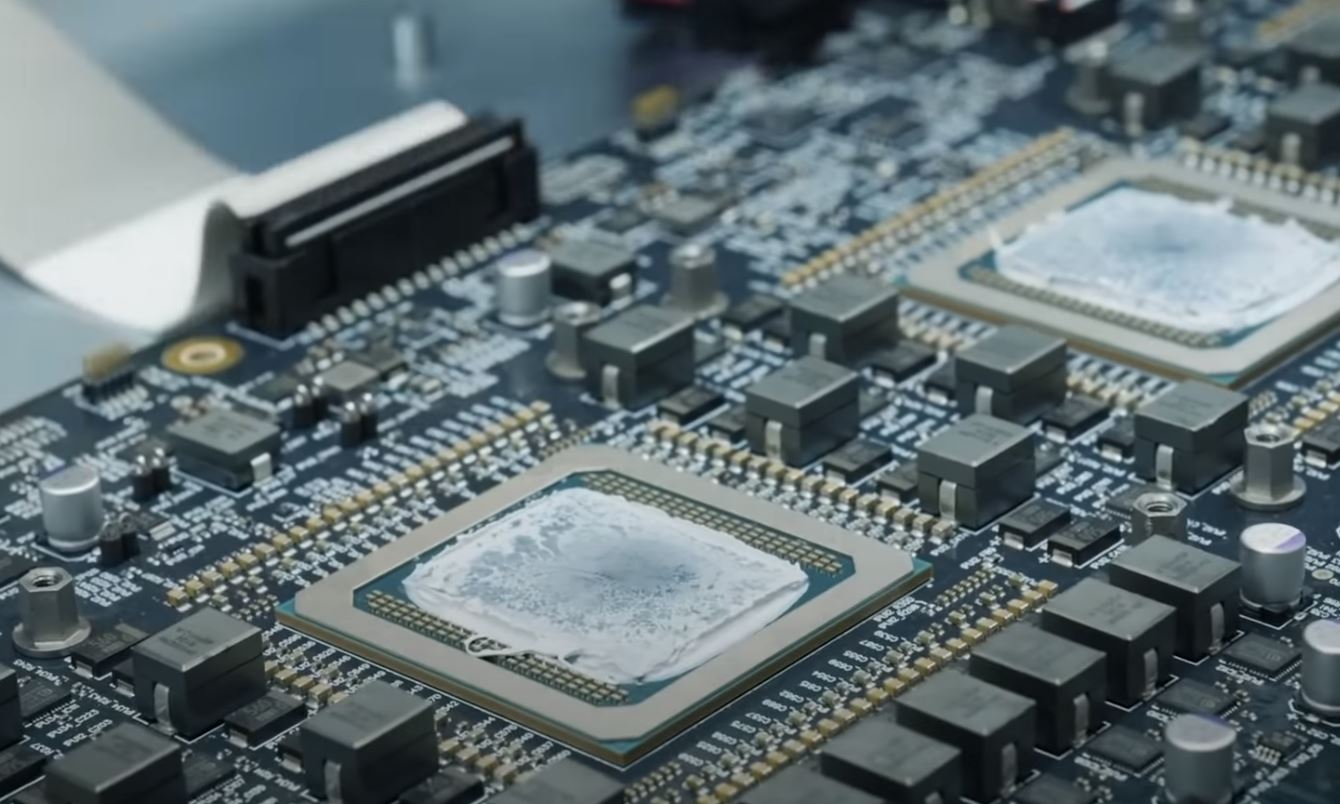

Graphcore just announced its 250 TFLOPS FP16 monster AI chip. Nvidia beware...

Link here to more details on it.

Edit: the announcement is very slippery about exactly what a single chip does.

I don't think it's 250 TFLOPS FP32 per chip; I think that figure is per rack of 4 chips, each doing 62.5 TFLOPS.

FP16 figures are projected to be 4x higher than that, so effectively 250 TFLOPS FP16 per chip (4 x 62.5).
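
For anyone who wants to check the arithmetic, here is a back-of-envelope sketch. The rack-of-4 split and the 4x FP16 ratio are my reading of the announcement, not confirmed specs, and the variable names are purely illustrative.

```python
# Back-of-envelope check of the per-chip figures discussed above.
# ASSUMPTIONS (not confirmed by the announcement):
#   - the 250 TFLOPS FP32 figure covers a rack of 4 chips
#   - FP16 throughput is 4x the FP32 throughput
RACK_FP32_TFLOPS = 250.0
CHIPS_PER_RACK = 4
FP16_OVER_FP32 = 4.0

# FP32 throughput of one chip if the headline number is per rack
fp32_per_chip = RACK_FP32_TFLOPS / CHIPS_PER_RACK

# FP16 throughput of one chip under the assumed 4x ratio
fp16_per_chip = fp32_per_chip * FP16_OVER_FP32

print(f"FP32 per chip: {fp32_per_chip} TFLOPS")
print(f"FP16 per chip: {fp16_per_chip} TFLOPS")
```

If either assumption is off (say the rack holds a different number of chips), change the constants and the per-chip numbers move accordingly.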
