- Sep 9, 2010


For those who didn't catch this on the main page...

http://www.anandtech.com/show/7166/nvidia-announces-quadro-k6000

If the rumors of AMD's GPU refresh coming in October/November are true, then I think we'll see a fully loaded GK110 on the GeForce side, too. Nvidia played this game with Fermi, releasing cut-down parts just fast enough to be clearly faster than AMD's (in part because they probably had to with their first-gen Fermi parts). This time they obviously had more wiggle room and time with GK110 (and they probably needed the time to get GK110 right), but I can definitely see AMD matching the GTX 780 and forcing Nvidia to drop its price and release a GTX 785 that will actually outperform Titan in games.

Sounds really boring to me. In a few months we'll be nearly 2 years into this generation, and we're getting such tiny bumps.

Would these cards really entice users who've owned a 680 or 7970 this long to upgrade? I guess GPUs are becoming like CPUs... people keep them longer and longer.

Anyone else surprised this was rated at 225 W? Clocks weren't listed, but they figure 900 MHz.

Really specially binned chips, then, or what?
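For context on where a 225 W figure can come from: the PCI Express spec caps an x16 graphics slot at 75 W, a 6-pin auxiliary connector at 75 W, and an 8-pin at 150 W. Here's a quick sketch of that connector budget; the connector layouts fed in are assumptions for illustration, not confirmed K6000 specs.

```python
# Power limits from the PCI Express spec (x16 graphics slot and aux connectors).
PCIE_LIMITS_W = {"slot_x16": 75, "6-pin": 75, "8-pin": 150}


def max_board_power(aux_connectors: list[str]) -> int:
    """Upper bound on board power: slot budget plus each auxiliary connector.

    aux_connectors is a hypothetical connector layout, e.g. ["6-pin", "8-pin"].
    """
    return PCIE_LIMITS_W["slot_x16"] + sum(PCIE_LIMITS_W[c] for c in aux_connectors)


if __name__ == "__main__":
    # Assumed layouts, for illustration only.
    for layout in (["6-pin", "6-pin"], ["6-pin", "8-pin"], ["8-pin", "8-pin"]):
        print(layout, max_board_power(layout), "W")
```

So a 225 W rating fits within the slot plus two 6-pin connectors, or the slot plus a single 8-pin.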

Wait, so Titan was their midrange card as well?!

I wouldn't say "less power"... but yes, they do have lots of headroom.

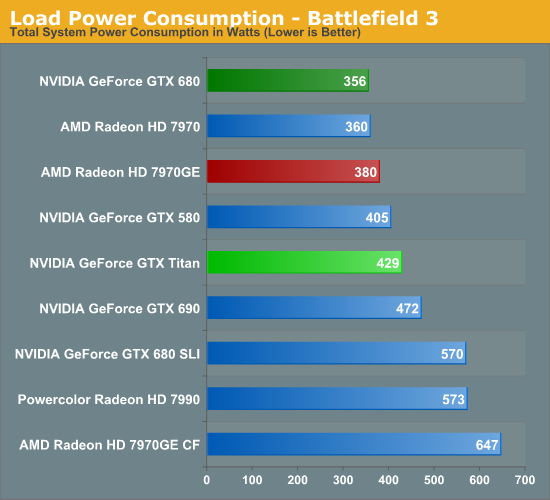

It's total system power consumption. Not Titan's.

Like that makes a difference. It doesn't matter what makes it draw more power; your PC will draw more power with a Titan in the system than with a 7970GE. Maybe there is increased chipset traffic, maybe there is increased CPU load from more driver overhead, who knows what it is. The fact of the matter is that system power consumption is more relevant than GPU power consumption alone.

How are Tom's and TPU measuring card power on its own? Sure they can measure power usage from the two external power connectors, but they cannot measure power going through the PCI-E port.
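To spell out why the slot matters: card-only power is the sum of V × I over every rail feeding the card, which means the external connectors plus the slot's own 12 V and 3.3 V pins. Clamping only the connectors undercounts it. A minimal sketch, assuming you somehow have per-rail voltage and current readings (the rail names and numbers below are hypothetical, not measurements from Tom's or TPU):

```python
from dataclasses import dataclass


@dataclass
class Rail:
    name: str     # hypothetical label, e.g. "slot 12V" or "8-pin 12V"
    volts: float
    amps: float

    @property
    def watts(self) -> float:
        return self.volts * self.amps


def board_power(rails: list[Rail]) -> float:
    """Total card power: sum of V*I over every rail, slot included."""
    return sum(r.watts for r in rails)


def connector_only_power(rails: list[Rail]) -> float:
    """What you'd see measuring only the external connectors (slot rails skipped)."""
    return sum(r.watts for r in rails if not r.name.startswith("slot"))


if __name__ == "__main__":
    # Hypothetical readings, for illustration only.
    rails = [
        Rail("8-pin 12V", 12.0, 10.0),
        Rail("6-pin 12V", 12.0, 5.0),
        Rail("slot 12V", 12.0, 4.0),
        Rail("slot 3.3V", 3.3, 1.0),
    ]
    print(f"connectors only: {connector_only_power(rails):.1f} W")
    print(f"full board:      {board_power(rails):.1f} W")
```

With these made-up numbers the connectors alone would read 180 W while the card actually pulls about 231 W, which is the gap that slot power leaves open.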

As for the Quadro K6000, a max of 225W sounds very good considering it has 12GB of GDDR5. I thought the memory would use a fair bit all by itself: from measurements I did for bitcoin mining (SHA256), the 3GB 7950 I tried consumed about 30W less at the wall with the memory set to 310MHz than at 1500MHz. That was with one of the newer Gigabyte Windforce models, which doesn't report memory voltages.
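Since that 30W was measured at the wall, part of it is PSU conversion loss. If the PSU runs at efficiency η, then DC load = η × wall draw, so the DC-side saving is roughly η × 30W. A back-of-envelope sketch, assuming η stays constant between the two memory clocks (the 0.88 below is an assumed value, not something I measured):

```python
def dc_delta_from_wall(wall_delta_w: float, psu_efficiency: float) -> float:
    """Convert a change in wall (AC) draw into the change in DC load.

    Assumes PSU efficiency is the same at both operating points.
    """
    if not 0.0 < psu_efficiency <= 1.0:
        raise ValueError("efficiency must be in (0, 1]")
    return wall_delta_w * psu_efficiency


if __name__ == "__main__":
    # 30 W wall delta from the 7950 memory test; 0.88 efficiency is assumed.
    print(f"{dc_delta_from_wall(30.0, 0.88):.1f} W at the card/board")
```

In practice efficiency shifts a little with load, so treat this as a rough estimate rather than the memory's actual draw.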