I'm going to offer an uninformed view on why nVidia focused on strong x64 performance: to offer more quality, so there are fewer undersampling, cracking, aliasing and shimmering artifacts. Be gentle: I don't know.

Well that cracked me up.


Amen,

They repeat it over and over, hoping it becomes true.


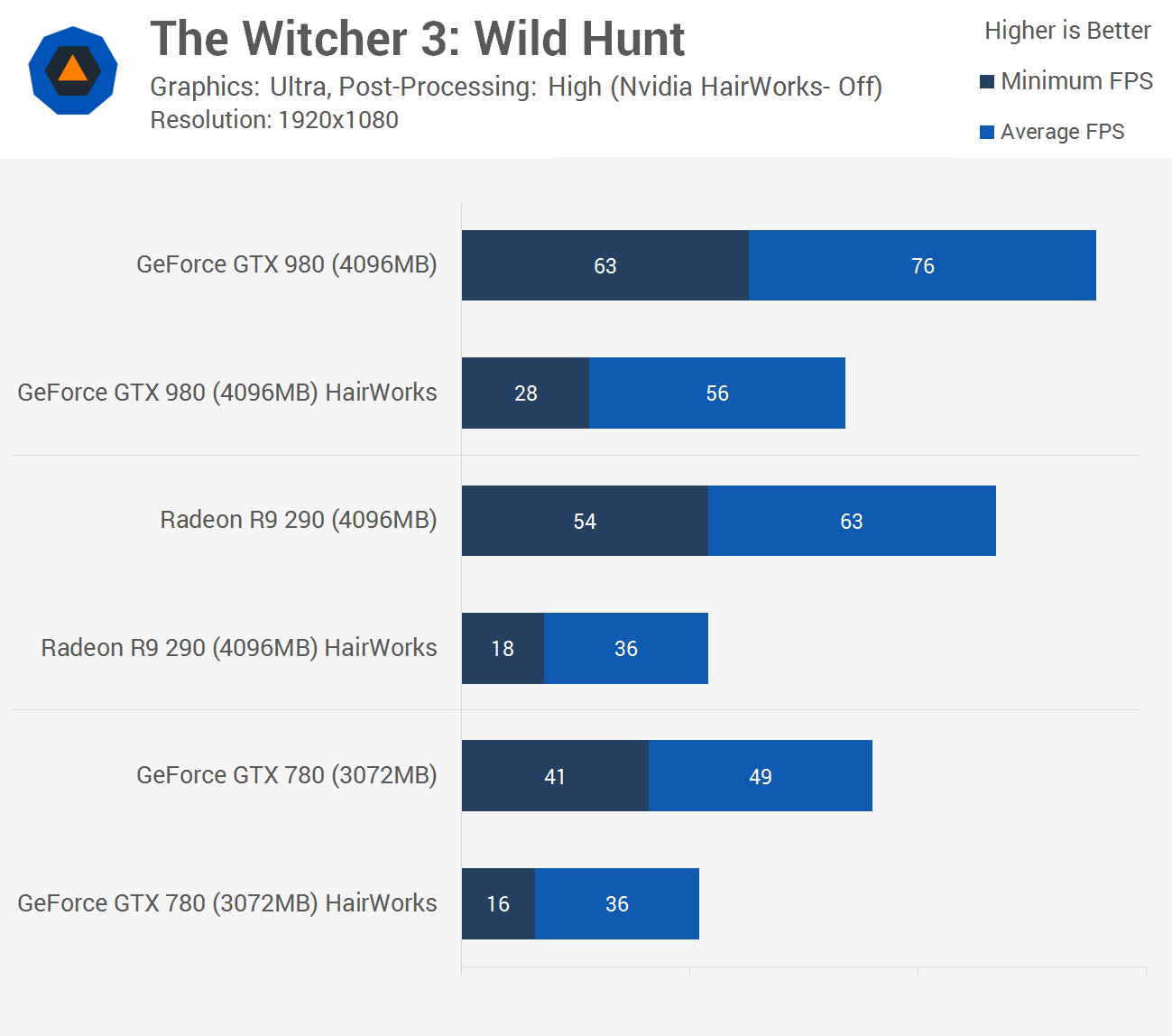

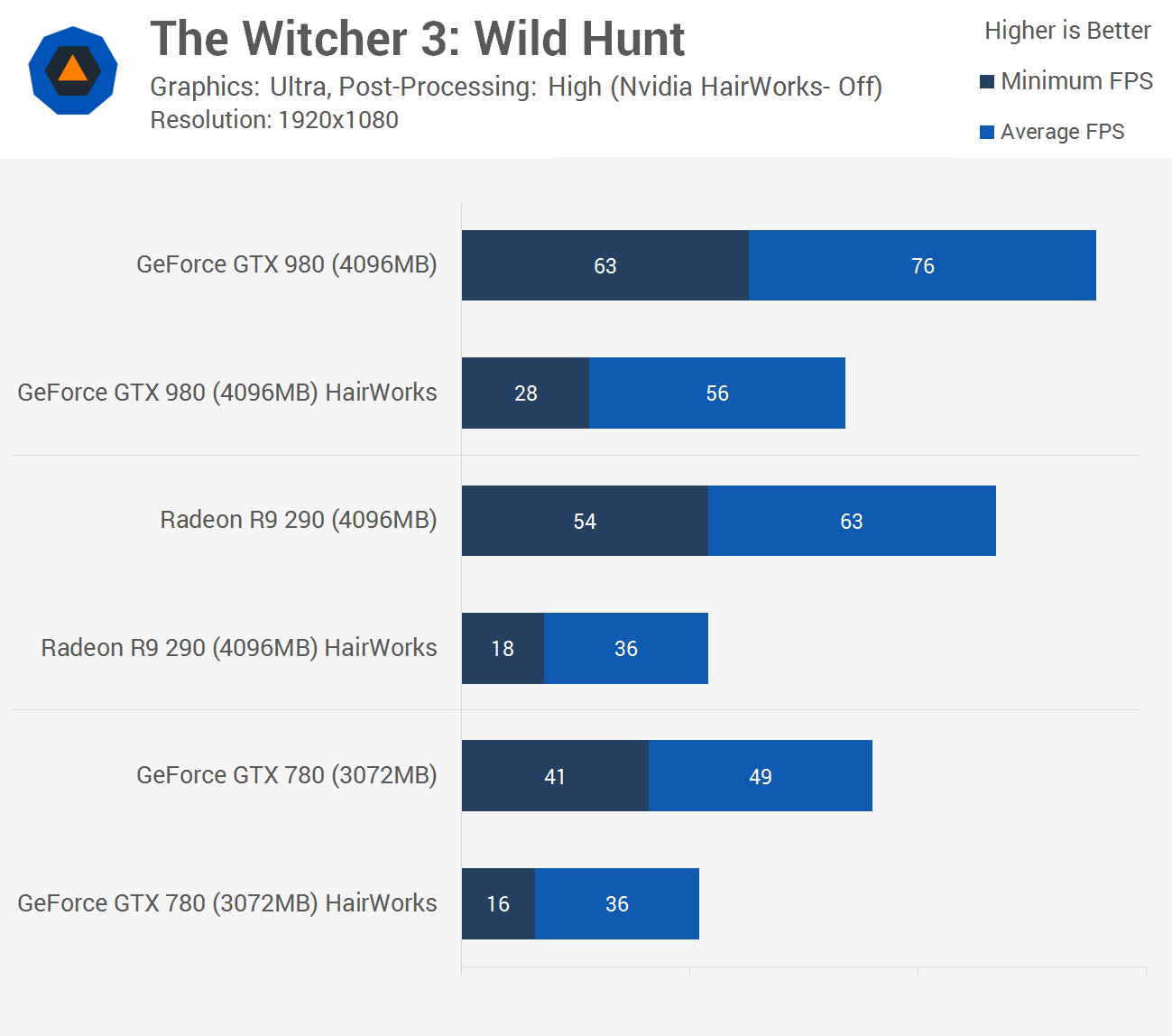

980, HairWorks off: 76 fps vs. HairWorks on: 56 fps

76 - 56 = 20 fps

-26.3% impact

780, HairWorks off: 49 fps vs. HairWorks on: 36 fps

49 - 36 = 13 fps

-26.5% impact
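The impact percentages above are the lost frames divided by the HairWorks-off baseline. A minimal sketch that reproduces them from the posted fps figures (the function name is just for illustration):

```python
def percent_drop(fps_off: float, fps_on: float) -> float:
    """Performance lost by enabling an effect, as a percentage of the effect-off frame rate."""
    return (fps_off - fps_on) / fps_off * 100.0

# Figures posted in this thread (The Witcher 3, HairWorks off vs. on).
results = {
    "980": (76, 56),
    "780": (49, 36),
}

for card, (off, on) in results.items():
    print(f"{card}: -{off - on} fps, -{percent_drop(off, on):.1f}%")
```

The two relative drops come out within 0.2 percentage points of each other, even though the absolute fps losses (20 vs. 13) differ.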

The performance issue for Kepler in The Witcher 3 is not Hair(Game)Works.

I post this, others have posted similar results, and yet it will continue to be ignored. I guess it just doesn't matter anymore; people just say whatever, over and over. Valid or not, they don't care.

I entered this thread with no judgment. I just wanted to hear both sides of the argument before forming an opinion. I mostly favor Nvidia hardware and may continue to do so. But it has become obvious to me that GameWorks was designed first and foremost to give NV an edge over AMD (with nice graphics for their customers as an afterthought). And that seems to include using code that unnecessarily hampers performance on AMD (with possible Kepler collateral damage for the time being). It's purely a business practice that seeks to gain the upper hand for NV by hook or by crook. I think the main point of keeping it proprietary was to lock AMD and game devs out of improving it. I have an uneasy feeling that Nvidia could greatly improve the code for both their hardware and AMD's, but would prefer it to remain in a lesser state just to give them that extra edge in benchmarks.

Personally, I don't care much. I'm not as emotionally invested as others may be. I recall being a happy gamer with discrete cards that were inferior to today's IGPs. If both Nvidia and AMD went out of business, I have a feeling that PC gaming wouldn't suffer that much. Game devs could just focus on IGPs, with little or no conflicting interests. People would just dial down the effects and focus on the gameplay. Hell, I may even be happier for it... no more hardware expenses, no more bulky GPUs crammed onto motherboards, no more frenzied rants in forums over which side sucks or is better than the other.

Wrong.

Based on your numbers:

HairWorks on vs. off for the 980: the 980 remains playable at 1080p.

HairWorks on vs. off for the 780: the 780 is no longer playable at 1080p.

40 fps or greater is considered the minimum for enjoyable gameplay. This should not be considered a stretch; most gamers want more than 40 fps. Let's also not miss the significance of 1080p as the baseline resolution for many gamers. At this resolution, HairWorks breaks the 780 (Kepler). That's big, and it's why folks are talking about it.
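The argument combines the 980 and 780 numbers quoted in this thread with a 40 fps floor. A small sketch of that check, treating 40 fps as the poster's stated minimum rather than any formal standard, and taking the figures as the 1080p results the reply describes:

```python
# The poster's threshold for "enjoyable" gameplay, not a formal standard.
MIN_PLAYABLE_FPS = 40

# HairWorks-off and HairWorks-on fps, as quoted in the thread.
results = {"980": (76, 56), "780": (49, 36)}

def is_playable(fps: float, floor: float = MIN_PLAYABLE_FPS) -> bool:
    """True if the frame rate meets the chosen minimum ("40 fps or greater")."""
    return fps >= floor

for card, (off, on) in results.items():
    print(f"{card}: HairWorks off playable={is_playable(off)}, on playable={is_playable(on)}")
```

Only the 780 with HairWorks on (36 fps) falls below the floor; both cards stay above it with HairWorks off.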

HairWorks as implemented by nVidia did cripple Kepler performance in TW3, dragging a 780 below 40 fps at 1080p. And it did so for a nearly immeasurable visual benefit over simply turning the tessellation down to a reasonable level. nVidia knew these numbers and performance deltas, so the question is: why did nVidia implement HairWorks this way? From a gamer's point of view there is hardly a single good reason to implement HairWorks like this. For nVidia, however, HairWorks may trick some folks into buying a new nVidia GPU (the obvious goal nVidia has for its HairWorks implementation).

This is the core of the dilemma for gamers vs. GameWorks from Nvidia. Is nVidia intentionally harming many gamers' experience by putting black-box GameWorks code into big-title games in order to sell more GPUs?

Yes.

I'm not saying this is the end of the world, just pointing out the obvious. You can always turn off HairWorks, obviously, but folks who bought 780s or other $400-500 cards are not going to be happy when nVidia GameWorks disables their ability to turn on new, heavily touted and marketed features in AAA games.

What does this have to do with anything?

Again, instead of actually discussing the issue, people like to link to something vaguely related that seems to support their point, which only clouds the issue.

All the pictures I looked at showed no difference. What on earth are you even talking about? As has been said: these are subpixel differences which cannot be seen. And it doesn't even affect the clothing...?

And the fact that this is FORCED on all users is even worse.

I think I will just paste this TBH:

"Yea just keep repeating this while completely ignoring everyone's point that Hairworks is using heavy tessellation for no IQ improvement.

Answer this one question: What's the point of severely hampering performance on all hardware by using x64 tessellation when it offers no improvement over x16?"
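To put the x16 vs. x64 question in numbers: suppose each hair strand is split into as many equal line segments as the tessellation factor. That mapping is an assumption made for illustration; how HairWorks actually uses its tessellation factor is not shown in this thread. Under that assumption, the on-screen length of each segment shrinks in proportion to the factor. A rough sketch with a hypothetical strand length in pixels:

```python
def pixels_per_segment(strand_length_px: float, tess_factor: int) -> float:
    """On-screen length of each segment if a strand is split into tess_factor equal pieces.

    Assumption for illustration only: one segment per unit of tessellation factor.
    """
    return strand_length_px / tess_factor

# Hypothetical value: a hair strand spanning 32 pixels on screen.
STRAND_PX = 32.0

for factor in (16, 64):
    seg = pixels_per_segment(STRAND_PX, factor)
    note = "sub-pixel" if seg < 1.0 else "at least one pixel"
    print(f"x{factor}: {seg:.2f} px per segment ({note})")
```

With these made-up numbers, going from x16 to x64 produces four times as many segments per strand, while each segment drops from 2 px to half a pixel, which is the "subpixel differences" argument in concrete form.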

In gameplay a person would be hard pressed to see any visual difference between the above images.

In screenshots, 64x looks best. At first glance they're very similar, but looking closer there is more detail in 64x, although it's subtle. Look at the shoulder and the weapons: they look similar, but there is more detail with 64x.

Worth the performance hit? Nope.

As an NVIDIA user, I'd want an in-game slider or an option in the control panel to force 16x.
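A "force 16x" option would amount to capping whatever tessellation factor the game requests. The sketch below is hypothetical: the function and parameter names are made up for illustration and are not an NVIDIA control-panel or GameWorks API.

```python
def clamp_tess_factor(requested: float, user_cap: float) -> float:
    """Return the factor actually used: the game's request, limited by the user's cap.

    Hypothetical sketch of an in-game or driver override; not a real NVIDIA API.
    """
    if user_cap <= 0:
        raise ValueError("user_cap must be positive")
    return min(requested, user_cap)

# A game asking for x64 with the user forcing a 16x maximum:
print(clamp_tess_factor(64, 16))  # 16
# Requests already below the cap pass through unchanged:
print(clamp_tess_factor(8, 16))   # 8
```

Capping rather than scaling leaves any content that already asks for a low factor untouched, so only the heaviest requests are reduced.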

Are they tessellating Geralt or the weapons? Horrible low-res texture aside, I see the same bump shading throughout, granted at a slightly different angle.

The big things that make me suspect they are not actually tessellating Geralt are the faceting on the mid-back and the identical seam between the shoulder and the neck.

If they were, the facet, made more pronounced by the texture on his back, would not appear at all...

Funny thing is, with 64x tessellation you can see obvious facets in Geralt's hair that are not there at 16x... Reversed labels?

Edit: the first big white clump of hair, about 2 cm from the top of his head.

Performance improvements for the following:

The Witcher 3: Wild Hunt - Up to 10% performance increase on single GPU Radeon R9 and R7 Series graphics products

Project Cars - Up to 17% performance increase on single GPU Radeon R9 and R7 Series graphics products

AMD just released a new beta driver.

Once again, two games that AMD claimed were crippled and impossible for them to optimize suddenly show improvements once the effort is made.

Wake up, guys. Stop being pawns in AMD's GameWorks war. They are doing it solely to stop the market-share bleeding, because their product isn't going to do it.

So Nvidia's big performance hit from HairWorks is a myth?

Plenty of Nvidia users are not happy with it.

Not sure, but it kind of looks like it... One has to be pretty anal to see it, that's for sure.


When have they said they were unable to optimize without the source?


All of this gets culled from the pipeline.

Build better hardware. Hardware which is better suited for geometry processing.

Here is a bit about the HAWX 2 complaint:

http://techreport.com/review/19934/nvidia-geforce-gtx-580-graphics-processor/8

AMD complained about something that didn't exist. nVidia's hardware was, and is, simply "superior" when it comes to geometry processing.

The same goes for Crysis 2. The performance impact of tessellation was around 18% on Fermi: http://www.hardware.fr/articles/838-7/influence-tessellation-son-niveau.html

On the other hand, the performance impact of Forward+ in Dirt: Showdown was 58% on Kepler:

http://www.hardware.fr/articles/869-14/benchmark-dirt-showdown.html

How is this okay? This is an even bigger performance impact than people see with HairWorks in The Witcher 3. :|

The point is that AMD just optimized two more games that they claimed were crippled and impossible for them to optimize.

Does it get culled from the frame where you are looking? Or only at the sides and behind your viewpoint?

You're talking about occlusion culling, right?
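The two kinds of culling being asked about behave differently: frustum culling discards geometry outside the view (off to the sides, behind the camera, beyond the far plane), while occlusion culling discards geometry hidden behind something nearer, even right in the middle of the frame. The sketch below is deliberately simplified and all names in it are hypothetical: screen-space points with a per-pixel depth comparison stand in for real triangles and a real z-buffer.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Sample:
    """A simplified screen-space sample: pixel coordinates plus view depth."""
    name: str
    x: int
    y: int
    depth: float  # distance from the camera; smaller is nearer, negative is behind it

def frustum_cull(samples, width, height, near, far):
    """Keep samples inside the viewport and between the near and far planes."""
    return [s for s in samples
            if 0 <= s.x < width and 0 <= s.y < height and near <= s.depth <= far]

def occlusion_cull(samples):
    """Keep only the nearest sample per pixel, like a depth test against a z-buffer."""
    nearest = {}
    for s in samples:
        key = (s.x, s.y)
        if key not in nearest or s.depth < nearest[key].depth:
            nearest[key] = s
    return list(nearest.values())

scene = [
    Sample("hair in view", 10, 10, 5.0),
    Sample("hair behind the head", 10, 10, 6.0),  # same pixel, farther away
    Sample("hair off-screen", -3, 10, 5.0),       # outside the viewport
    Sample("hair behind camera", 12, 12, -1.0),   # behind the viewpoint
]

in_view = frustum_cull(scene, width=64, height=64, near=0.1, far=100.0)
visible = occlusion_cull(in_view)
print([s.name for s in in_view])
print([s.name for s in visible])
```

Only the occlusion step removes the strand hidden behind the head. In a real GPU pipeline, clipping and culling of triangles happen after the tessellation stages, so geometry that is culled there has usually already been tessellated unless the application discards it earlier.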

Show me exactly where AMD ever claimed that. Hint: you can't, because it doesn't exist.

HairWorks is garbage, and nobody said the performance hit was a myth.
