poofyhairguy

Lifer

- Nov 20, 2005

It's like calling the HD 7850 1GB or GTX 650 Ti Boost 1GB "trash" when they were introduced.

I had a 1GB 7850 and it was trash. The experience was so bad that it turned me off AMD for a few years.

That's funny. The 7 series from AMD was all the rage. Maybe you had a defective card.

You completely miss the point.

The issue is that in DirectX 12 the ability for Nvidia to basically fix the mistakes of developers is gone.

We have already seen in both Hitman and Tomb Raider that VRAM usage at 1080p can go over 3GB in DirectX 12 mode. If that is a sign of things to come, then soon maybe even 4GB of VRAM won't be enough.

I haven't seen any evidence that DX12 will somehow make it impossible for AMD or Nvidia to perform memory usage optimization at a driver level, so no idea where that idea comes from.

I don't know that we have actually seen VRAM issues in Hitman

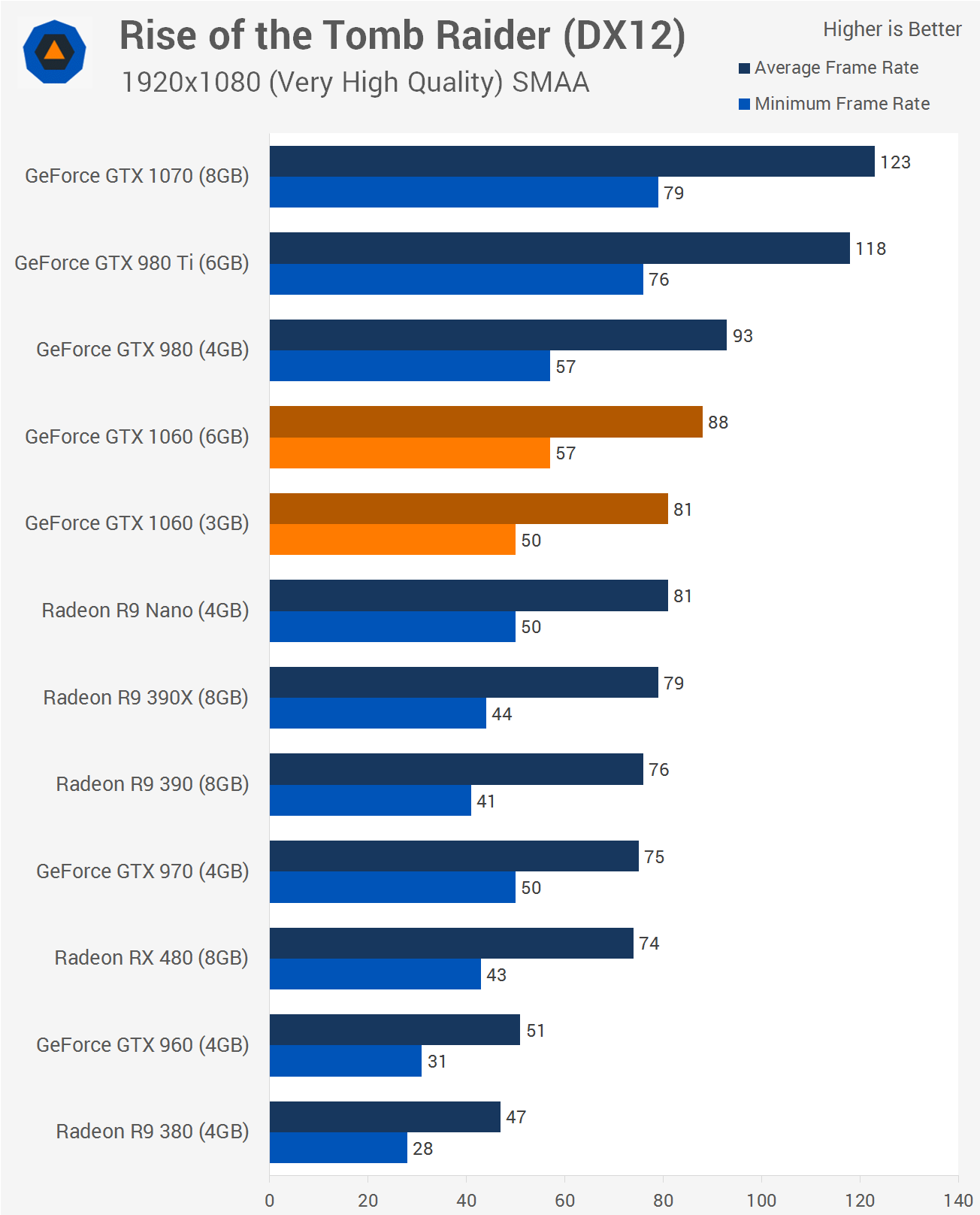

In all of the benches here, there is no evidence to suggest that the 3GB framebuffer causes issues - except in one title, Hitman. Here, the performance drop-off on GTX 1060 3GB is significant - and we strongly suspect that DX12, where the developer takes over memory management duties, sees the card hit its VRAM limit. Notably, this does not happen on the AMD cards with four gigs of memory.

The Tomb Raider VRAM issue is murky at best. It's only a single scene out of three, it's only seen by Eurogamer, not by ABT, and even then the 1060 3GB still manages ~50 FPS.

The common wisdom has been that if you have a card with more VRAM, you need more RAM. We've never ascribed to that belief, as we never, ever saw that play out in the real world of our game benchmarking. But the results we show above truly astounded us: video cards with less VRAM (in this case 4GB) actually forced the game to allocate far more system RAM to the game engine. Our guess is that because Rise of the Tomb Raider was originally coded for the PS4 and Xbox One, it expects 8GB of dynamic system RAM, and when ported over to the PC, developers had to find a way to access that much RAM if required. Now, we're not saying that this affected performance in any measurable way, but it's possible that the sky-high minimums of the 390X under DX12 may not have been a fluke. It in fact requires the least system RAM. And here's an interesting fact we realized after looking at the data a second time: all five systems require 14GB of total memory when using DX12. Coincidence? We think not!

Really there is currently zero indication that 3GB of the 1060 won't be enough, unless you can't accept anything below 60 FPS, but if that's the case you should be buying a 1070 at minimum anyway, since even the 6GB 1060 dips below 60 FPS all of the time. It's certainly possible that there will be issues in the future, but normally when people talk about VRAM issues, they are not referring to a card that dips down to 50 FPS in my experience.

I don't even think the HD 7870 had as many threads/posts dedicated to it.

reading your post, and then checking the prices.

Because not everyone has an unlimited budget like I do. I could sneer at everyone not buying a 1080 but that doesn't help them.

I disagree 100% that it's somehow evil to say "if you can afford it, get the 6 GB but the 3 GB will work with these limitations: ....".

People buy a 750ti, 950, 1050 (once available) when the card suits their needs and their budget. The 1060 3GB is $50-100 less than the 6 GB at a cost of <10% speed difference now and an unknown % in the future.

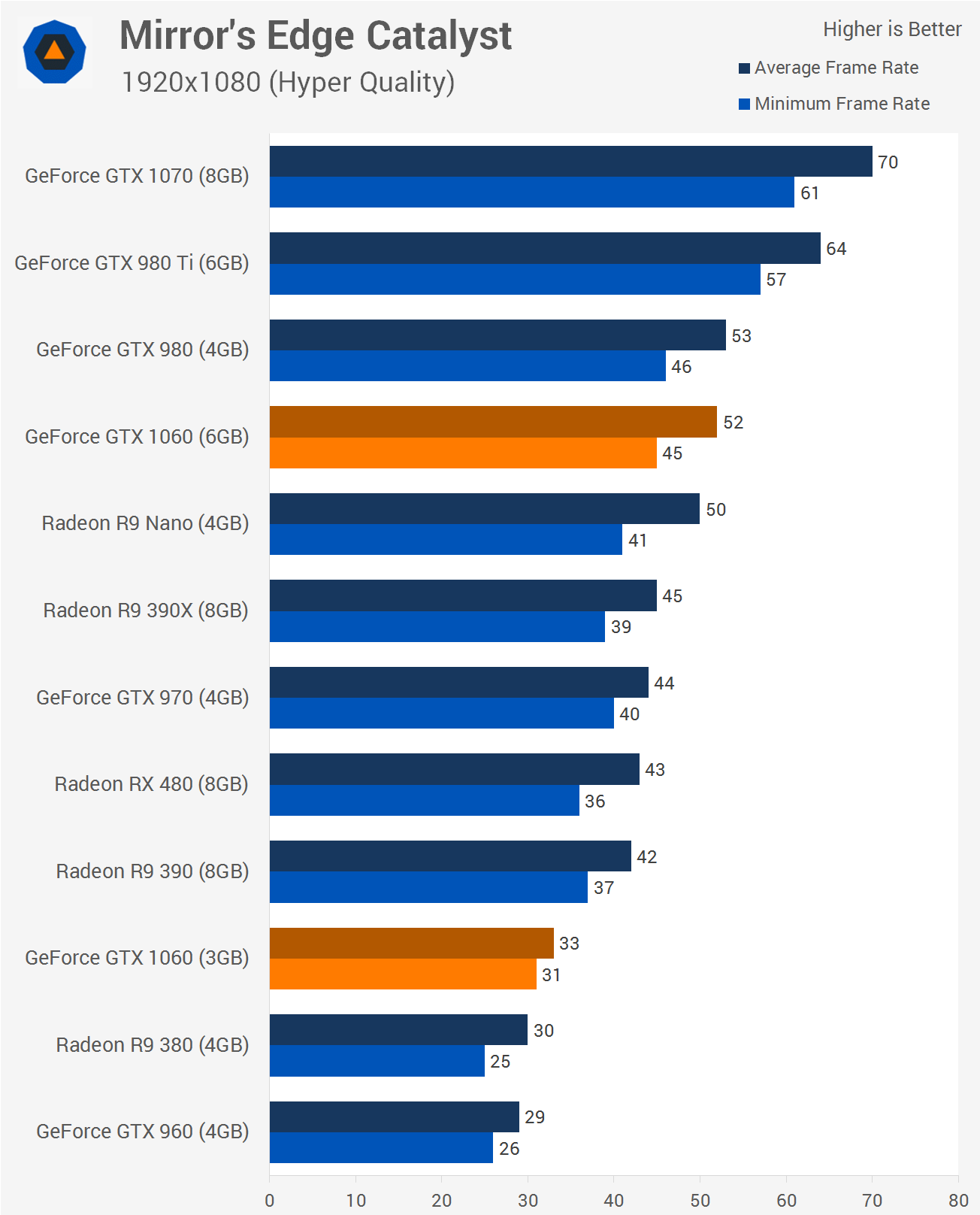

>> Makes me think maybe the VRAM thing isn't the issue I thought it might be. Then we move to Mirror's Edge and holy crap:

Setting: (Hyper Quality)

That sounds like it's dialed up to 11, and isn't so kind to the 480 8GB either.

You could point to Mirror's Edge Catalyst and suggest that this is how future games will look, and you might even be right. I personally feel this is an extreme example and it will still be some time before it becomes the norm.

For those wondering, turning Mirror's Edge Catalyst down to the ultra-quality preset saw the 3GB GTX 1060 perform within 10% of the 6GB model. Not only that, but I can't really tell the difference in visual quality between ultra and hyper, making the latter pointless in my opinion.

1152 CUDA cores running at 1.7 GHz isn't going to let you max settings in the next 2-4 years either.

But once again, I don't argue that if you can afford to spend $250+ then the 1060 6GB is a better choice than the 3GB. It's just that if you can't, the 3GB seems fine at 1080p except for some "Crysis at Ultra" / "Doom3 at Ultra" card-killer settings that improve quality a tiny bit for a huge performance hit. That's not the same as it being "trash."

Exactly. Thread after thread in these forums analyzes price to performance down to the last penny and frame per second when it fits the poster's agenda.

But now, suddenly, the 1060 3GB card is being called trash and a crime to sell or approve of even slightly, simply because it is not as fast as the 6GB model, and price is being totally ignored. Duh people, the 6GB model is at least 25% more expensive than the 3GB model, and in some cases, a lot more than that.

As I showed in my earlier post, and even in nearly all the more recently presented data, the 6GB model is not even close to 25% faster. And in the only game which seems to show clear tanking due to VRAM issues, Mirror's Edge at 1440p, *both* the 480 4GB and the 1060 3GB are unplayable (even the 1060 6GB is barely playable), and in fact even though they are both unplayable, the 1060 3GB actually performs better than the 4GB 480. So I guess by that metric, any 4GB card is "trash" as well.
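To put rough numbers on the price versus speed argument, here is a minimal Python sketch. The prices and frame rates are hypothetical placeholders chosen to match the claims in this thread (a 25% price premium and a roughly 10% speed gap); they are not benchmark results, and `perf_per_dollar` is just an illustrative helper name.

```python
def perf_per_dollar(avg_fps: float, price_usd: float) -> float:
    """Frames per second delivered per dollar spent."""
    return avg_fps / price_usd


# Hypothetical figures for illustration only, not measured data.
cards = {
    "1060 3GB": {"price_usd": 200.0, "avg_fps": 60.0},
    "1060 6GB": {"price_usd": 250.0, "avg_fps": 66.0},  # 25% pricier, 10% faster
}

value = {
    name: perf_per_dollar(c["avg_fps"], c["price_usd"]) for name, c in cards.items()
}
price_premium = cards["1060 6GB"]["price_usd"] / cards["1060 3GB"]["price_usd"] - 1
speed_gain = cards["1060 6GB"]["avg_fps"] / cards["1060 3GB"]["avg_fps"] - 1

for name, v in value.items():
    print(f"{name}: {v:.3f} fps per dollar")
print(f"price premium: {price_premium:.0%}, speed gain: {speed_gain:.0%}")
```

Under those assumed numbers the cheaper card delivers more frames per dollar. That is the whole argument in one line, and it only holds as long as the speed gap stays smaller than the price gap.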

Uh, the whole point of DirectX 12 is to be a low-level API that puts the developer in the driver's seat. Eurogamer even addresses that in the article along with this:

You didn't read the article I take it. Direct quote (bolding done by me):

They tell us clear as day that DirectX 12 Hitman causes VRAM issues, and that developers are in charge of memory management.

Averages don't tell the story on a card like this. If you get spikes of low frame rates due to VRAM in intense scenes, it doesn't make you feel any better that the average is high because the game runs at 80 fps (on your 60 Hz screen) in a basic landscape scene.

Plus we have had evidence that Tomb Raider in DirectX 12 mode is a VRAM hog for a while now:

http://techbuyersguru.com/first-look-dx12-performance-rise-tomb-raider?page=1

The test wasn't at 1080p though so it's hard to tell if that is what we are seeing here with the 3GB 1060. A good review with minimums listed would go a long way.
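As a rough illustration of why minimums matter more than averages here, the sketch below computes both from a list of frame times. The frame-time capture is made up for the example (mostly smooth 80 fps frames with a handful of 100 ms stutters), and `fps_summary` is a hypothetical helper, not something taken from any review's methodology.

```python
from statistics import mean


def fps_summary(frame_times_ms: list[float], low_fraction: float = 0.01) -> dict[str, float]:
    """Average FPS plus the FPS over the slowest `low_fraction` of frames."""
    if not frame_times_ms:
        raise ValueError("need at least one frame time")
    avg_fps = 1000.0 / mean(frame_times_ms)
    # Sort so the longest (worst) frame times come first.
    slowest_first = sorted(frame_times_ms, reverse=True)
    n_low = max(1, int(len(slowest_first) * low_fraction))
    low_fps = 1000.0 / mean(slowest_first[:n_low])
    return {"avg_fps": avg_fps, "low_fps": low_fps}


# Hypothetical capture: 990 smooth frames at 12.5 ms (80 fps), 10 stutters at 100 ms.
frames = [12.5] * 990 + [100.0] * 10
summary = fps_summary(frames)
print(f"average: {summary['avg_fps']:.1f} fps, 1% low: {summary['low_fps']:.1f} fps")
```

With this made-up capture the average still looks healthy while the slowest 1% of frames is a slideshow, which is the kind of thing an average-only chart would hide.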

VRAM is simply about turning up the texture settings. Sure, with Hairworks or Godrays on high you can kill a 6GB 1060, but it would also kill a 980 or a 480 or any card from that class. The issue is that in the same class of cards (i.e. below the 1070) this 3GB model might not be able to use the same quality of textures as the other cards in this class. We saw something like that last generation where the 390 could run Mirror's Edge with Hyper textures (at playable framerates) while the 970 couldn't.

Plus quite frankly I hate the implied concept (not by you but in general) of "well that is a budget card anyway, those peons should have to accept they have to turn the settings down. If they didn't want to turn down settings they should buy a high-end Nvidia card every two years like I do." In my opinion small differences matter at this price level, because by definition people have (or are willing to spend) fewer resources and so they need to get more for their money to maximize the experience.

In that light the 3GB 1060 is a clear winner for a "budget gamer" who gets games at Steam sales and doesn't touch AAA games until a year or more after their release. For those people, by the time they have more DirectX 12-only games than not, the 1060 will be a dinosaur. But the 470 might be a better choice for someone who wants to buy and play new AAA games as they come out, as they will get the most out of the DirectX 12 titles that are increasingly being released. At this level your use case matters, but when you buy a 1070 or a 1080 or Titan or whatever you are basically paying extra to not have to consider the use case.

Doesn't handle Mankind Divided well either:

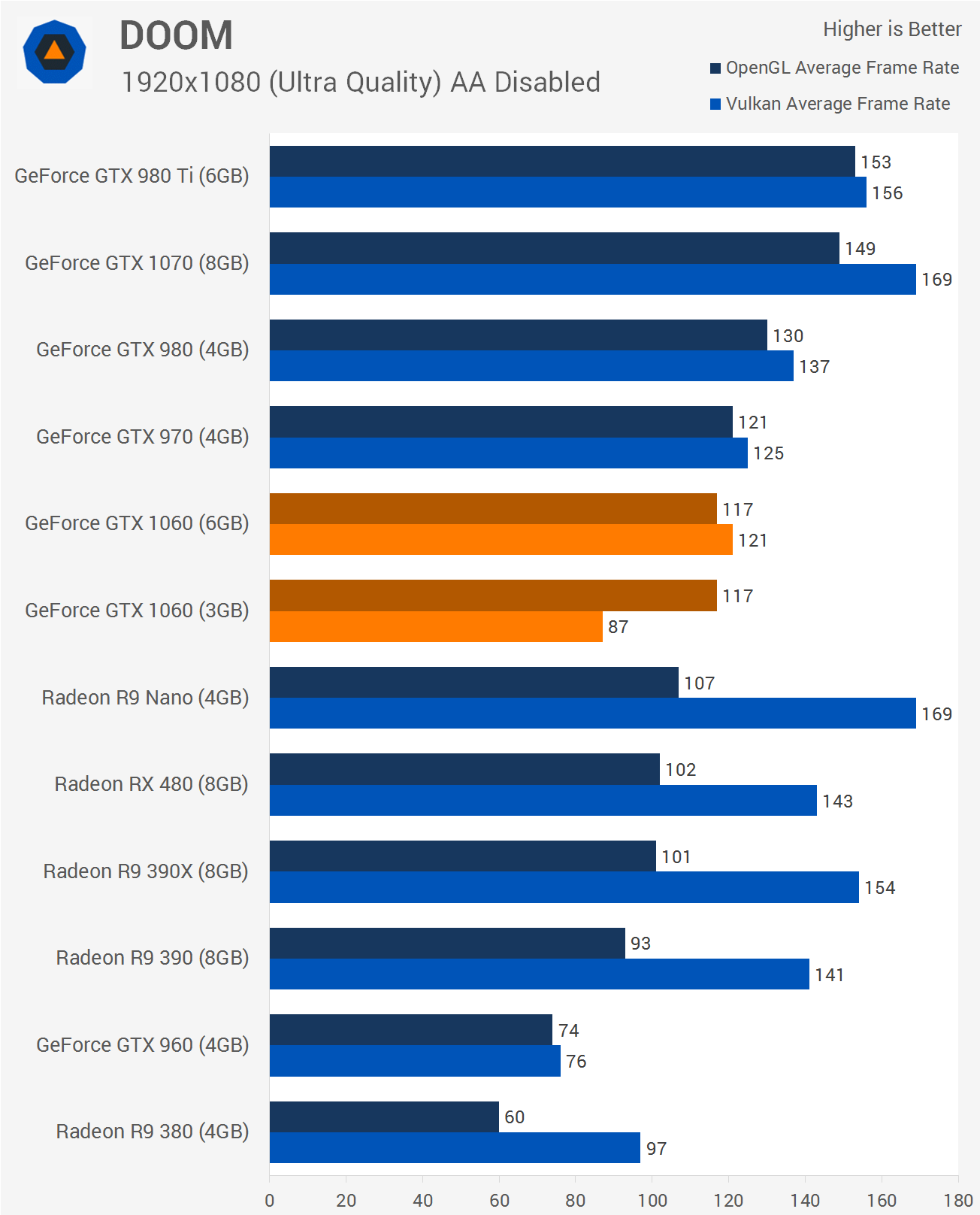

Slower in Doom Vulkan as well compared to OpenGL vs 6gb:

But if you want to see how the memory will come into play... look at Mirror's Edge:

Any card without the VRAM drops like a rock

Makes me think maybe the VRAM thing isn't the issue I thought it might be. Then we move to Mirror's Edge and holy crap:

The 6GB model is playable while the 3GB model slums it with a 380. And that is a game that likes Nvidia cards (notice the 970 is even with the 390X, so 3.5 GB is enough).

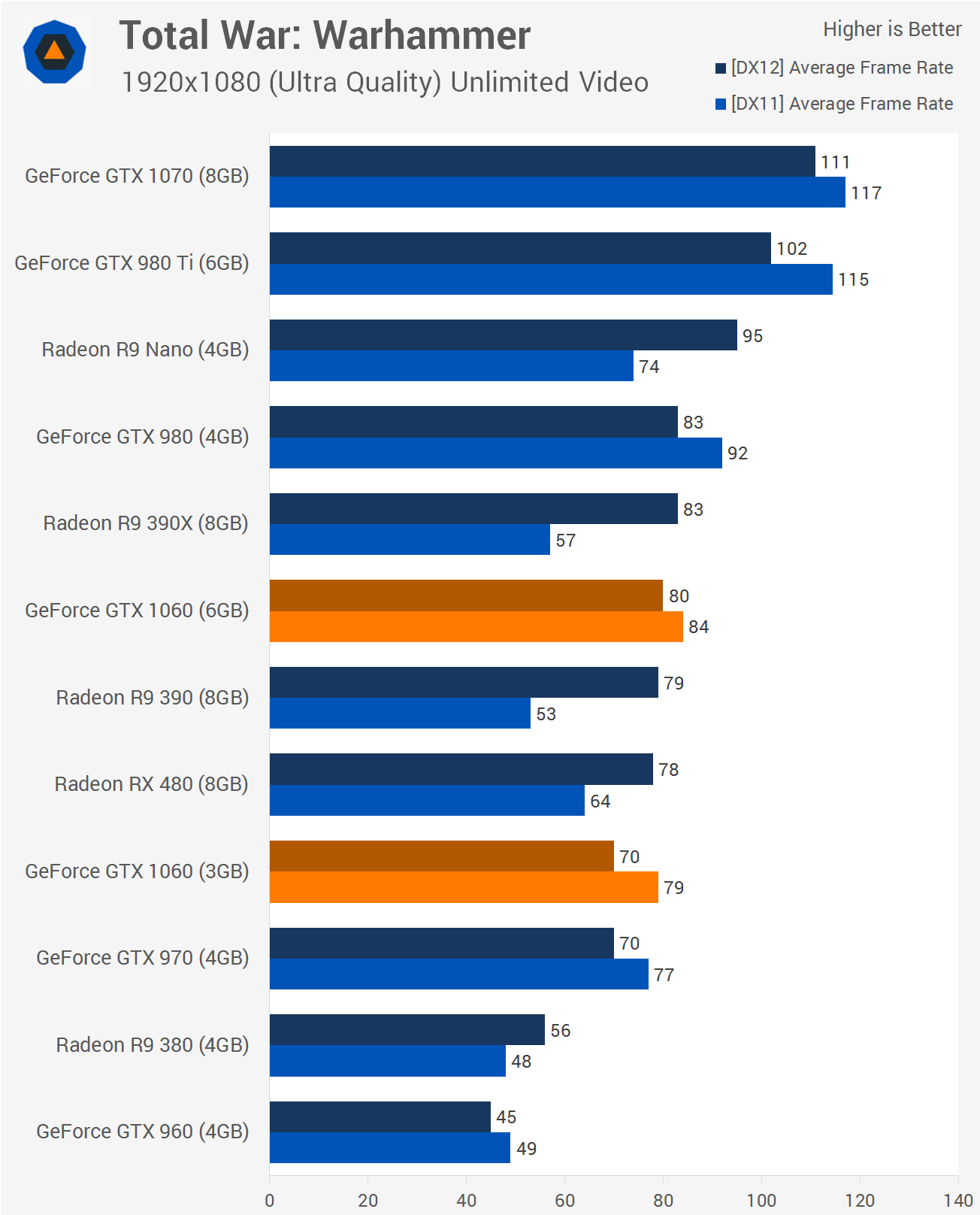

Also notice it loses more performance than the 970 does when DirectX 12 mode is turned on in Warhammer:

It also completely falls apart in Vulkan:

Also falls behind the 970 in that game too.

There is no dual-fan $200 3GB 1060, so the cheapest one with a decent cooler is $210-220. I have seen clearance 970s go for $200 and sometimes less recently, so I think people wanting to play yesteryear's games (aka the 3GB 1060's forte) get a better deal with a cheap 970. That is unless the VRAM black hole theory on the 970 is legit.

You know as well as I do that's not at all the argument the people you are criticizing are making. Misrepresenting the argument is pretty weak. It's pretty clear from a brief 2-minute skim of any thread on the 1060 3GB that people who don't recommend it do so because they believe: 1) 3GB is barely enough in 2016 and won't be enough very soon, 2) 1060 3GB is a misleading name.

Notably absent is anyone saying it's bad because it's slow. So no, that's a strawman.
