- Mar 27, 2009

In the absence of a Windows Fusion drive, here is my guess at what is most likely for a U.2 hard drive:

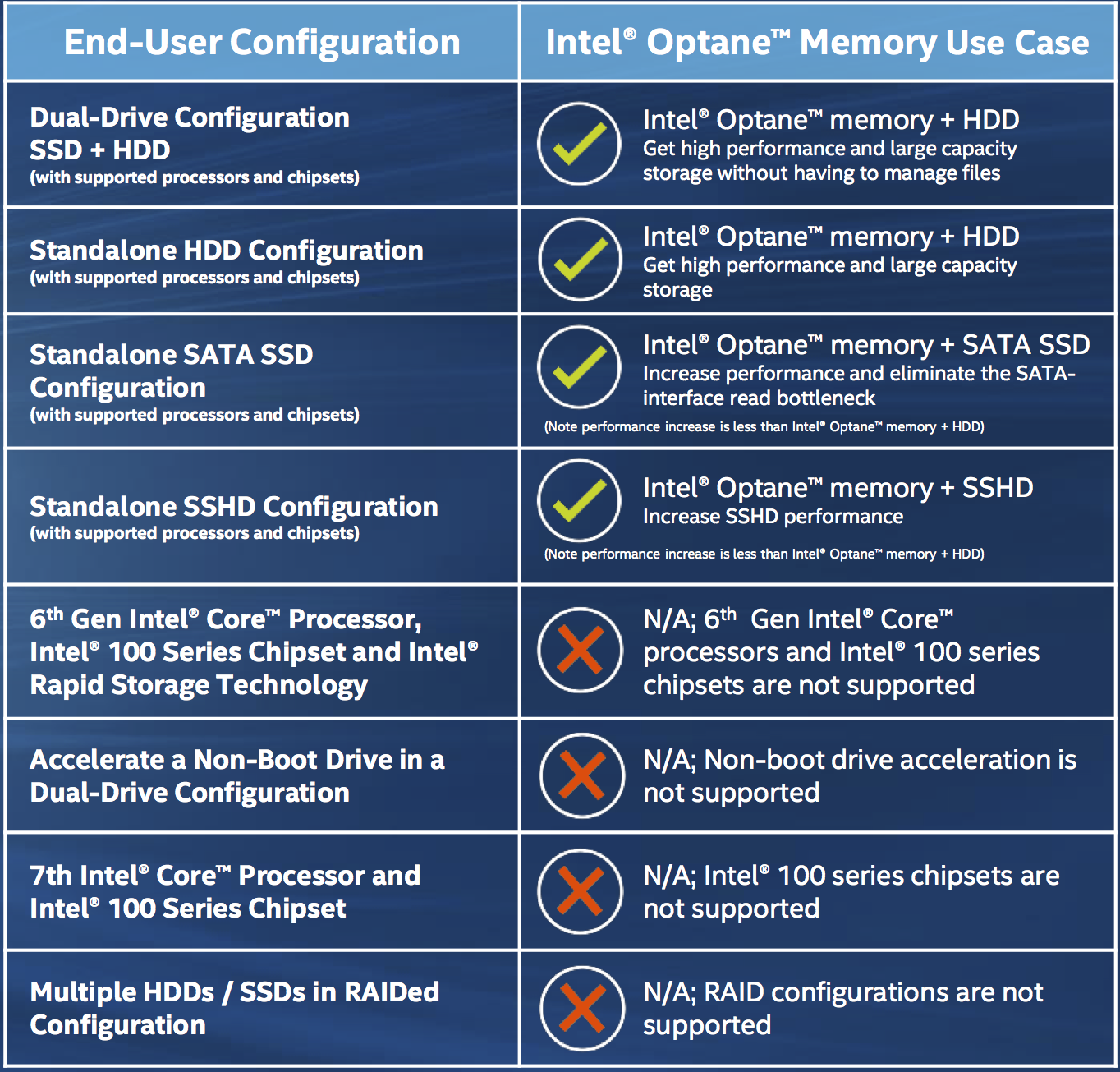

1. NAND-based SSHD (it's going to take 240GB of NAND, using the small dies, to approach saturating PCIe 3.0 x4, though)

2. Optane-based SSHD (should only take 32GB of Optane to approach saturating PCIe 3.0 x4)
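For scale, here is a rough sketch of the link arithmetic behind those two capacity figures. The PCIe 3.0 numbers (8 GT/s per lane, 128b/130b encoding) are standard. The 240GB and 32GB capacities are just my estimates from the list above, and the function names are made up for illustration. Protocol overhead above the line encoding is ignored, so real-world throughput would be somewhat lower.

```python
# Raw PCIe 3.0 link parameters (standard values).
GT_PER_S_PER_LANE = 8e9      # 8 GT/s per lane
ENCODING = 128 / 130         # 128b/130b line encoding
LANES = 4                    # x4 link, as used by U.2


def pcie3_bandwidth_bytes_per_s(lanes: int = LANES) -> float:
    """Usable bytes per second after line encoding (no packet overhead)."""
    return GT_PER_S_PER_LANE * ENCODING * lanes / 8


def implied_mb_per_s_per_gb(capacity_gb: float) -> float:
    """Throughput each GB of media must deliver to fill the link.

    capacity_gb is an assumed figure (240 for NAND, 32 for Optane here).
    """
    return pcie3_bandwidth_bytes_per_s() / 1e6 / capacity_gb


link = pcie3_bandwidth_bytes_per_s()
print(f"PCIe 3.0 x4: {link / 1e9:.2f} GB/s")
print(f"NAND   @ 240GB: {implied_mb_per_s_per_gb(240):.1f} MB/s per GB")
print(f"Optane @  32GB: {implied_mb_per_s_per_gb(32):.1f} MB/s per GB")
```

Put another way, if both guesses hold, the Optane would need to deliver 7.5x the throughput per GB that the NAND does.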

For #2, I also wonder if the Optane can be used for the hard disk controller's DRAM buffer. If so, then that is another chip that could be removed from the PCB.

With that noted, there could certainly also be a dual drive (operating as separate volumes, of course) with at least 1TB of NAND. (re: a 16-die package of 512Gb (64GB) 3D TLC = 1TB).
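The package math above, spelled out as a quick sketch. The helper name is made up for illustration, and the result is raw capacity only (no over-provisioning or spare area accounted for).

```python
def package_capacity_gb(die_gbit: float, dies: int) -> float:
    """Raw capacity in GB of a package built from identical dies.

    die_gbit is the per-die density in gigabits; 8 bits per byte.
    """
    return die_gbit / 8 * dies


per_die_gb = package_capacity_gb(512, 1)    # one 512Gb die
package_gb = package_capacity_gb(512, 16)   # 16-die package
print(f"{per_die_gb:.0f}GB per die, {package_gb:.0f}GB per package")
```

So one 16-die package of 512Gb 3D TLC gives 1024GB, i.e. 1TB raw.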
