Nope, sources have tested:

- Control
- MechWarrior 5
- Wolfenstein: Youngblood

You can see whatever you like, but all sources have concluded that DLSS 2 is equal to or better than native res + TAA, whether Digital Foundry (in their Control and Wolfenstein analyses), TechSpot, Overclock3d, or the dozens of other sources on YouTube and tech sites. It's also my personal observation. You are free to think whatever you want, but that's probably your tainted view, nothing more, and it goes against what testers have experienced.

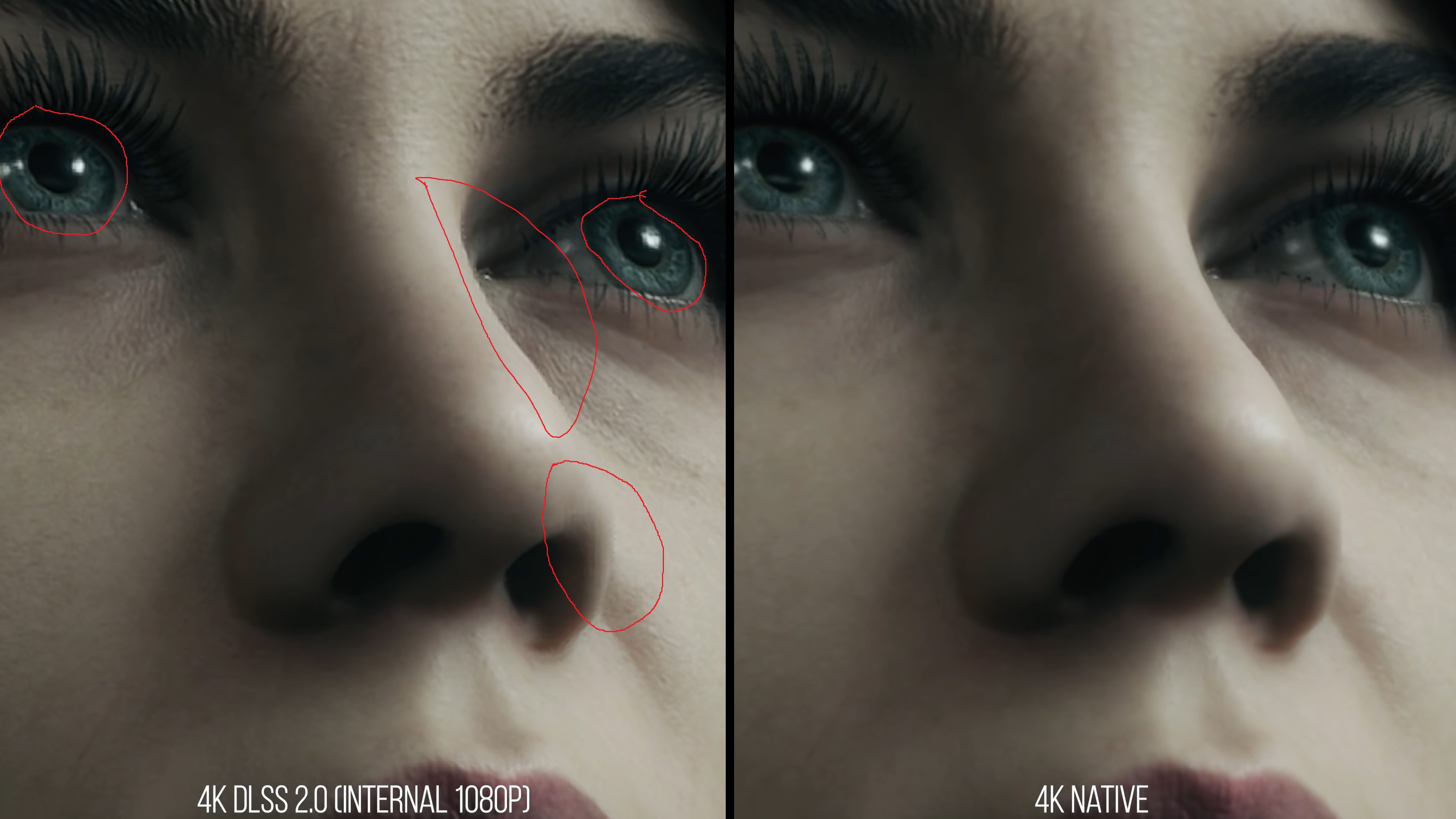

Control, from the TechSpot source YOU provided: first picture, LOWER quality than native; second picture, LOWER quality than native; third picture, LOWER quality than native.

In every single picture, DLSS 2.0 textures are darker, have reduced detail and are oversharpened. In every single picture it has lower quality.

Wolfenstein: Youngblood: again, in the first comparison picture DLSS looks darker and the texture colors look more monotone, but I'd grant that it looks sharper. TSSAA does look blurrier. So that's worse textures, worse colors and a darker image, but a sharper look.

In the second image DLSS does look darker, but texture quality is very similar, although the colors in that scene are already far too monotone (bright white and black). DLSS once again looks sharper than the TSSAA 8x they are using.

Fourth picture (I'm skipping the third because it's way too dark to discern much from it): here we have TSSAA 8x, DLSS Quality and SMAA. TSSAA does look blurry, but it definitely has softer edges. There are no jagged edges; it looks polished, but blurry. DLSS looks sharper again, but even in this rather dark scene it tends to darken the image even further, with a noticeable loss of detail: you can clearly see more dirt, rust and worn textures with TSSAA and especially SMAA. Again, these are small things and not too obvious, but IT'S THERE, it's LOWER QUALITY. It is removing some detail, narrowing the color palette and range, and darkening things to make it process faster.

SMAA vs DLSS 2.0: SMAA wins. It looks sharper, has higher detail and a broader color palette, though it does have slightly more jagged edges than DLSS 2.0. So SMAA at native resolution wins.

Again, this is why even Nvidia doesn't make the absurd claim that DLSS has BETTER image quality than native; ONLY YOU in the whole world claim this nonsense. Nvidia would rather people focus on the performance aspect than the quality one, but leave it to Nvidia worshippers to make a giant fuss about how DLSS 2.0 is the second coming of Christ, better than sliced bread, the best thing invented since hot water, etc.

Again, kudos to Nvidia for being able to lower quality and mask it decently to offer more performance. Fine. But it is definitely worse looking in general than native + any AA I've seen tested. The biggest consistent themes are darker textures, loss of detail, and a fairly monotone color palette that makes objects look bland. It is, however, sharper to a certain extent than some AA methods, and can look better in that respect.

As I said in my previous post, it's like a pretty decent art student who is just starting out vs. a seasoned professional painter. DLSS is working with a smaller color palette and a much narrower range of detail and quality. It's still good for a novice painter, but it's imitating original art.