First of all, we are talking about applications, not gaming. Games behave differently from all other applications. Haswell Core i3 is only faster than Kaveri APUs in Cinebench; they lose or are equal in the vast majority of MT workloads out there.

I'm talking about stuff that's worth choosing a faster CPU for. A few highly multithreaded programs that get <1% of wall time on a PC aren't worth that. If you spend most of your time in those applications, then fine, but that's not typical.
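To put a rough number on the "<1% of wall time" point, here is a minimal sketch using Amdahl's law. The 1% share and the speedup factors are illustrative assumptions, not measurements, and `overall_speedup` is just a name I made up for it.

```python
def overall_speedup(fraction, local_speedup):
    """Amdahl's law: speedup of the whole workload when only `fraction`
    of total wall time runs `local_speedup` times faster."""
    return 1.0 / ((1.0 - fraction) + fraction / local_speedup)


if __name__ == "__main__":
    # Assumed: the heavily multithreaded programs get 1% of wall time.
    for s in (2.0, 4.0, 8.0):
        gain = overall_speedup(0.01, s)
        print(f"{s:.0f}x faster on 1% of wall time -> {gain:.4f}x overall")
```

Even an unbounded speedup on that 1% cannot push the overall figure past 1/0.99, which is why it only matters if those programs are where your time actually goes.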

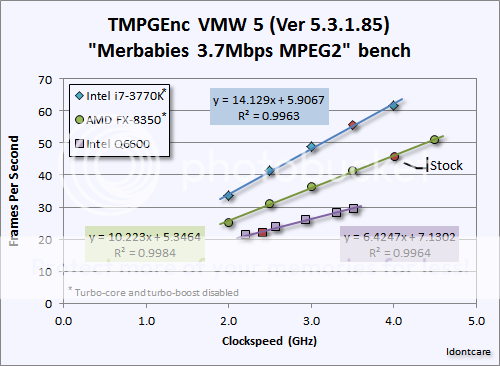

All applications behave differently. Some are just easy to scale out horizontally, like video encoding, and file compression if you accept a substandard ratio (with LZMA or LZMA2, for instance, a many-thread compression job will not get as good results as one with fewer threads; my VM backups can be hundreds of MB smaller with fewer threads). Again, if that's what you do most of the time, then get whatever will work best for your budget. If you don't, though, being able to do it faster doesn't make a CPU a better one. And, again, most people's "typical" use today is going to be limited by RAM or HDD more than anything else, with any decent CPU, and that situation is better for AMD than it is for Intel. I.e., Intel's CPUs being better overall, and BD being a failure for AMD, does not make an AMD CPU bad or insufficient; it merely makes it not the common choice, due to Intel serving more of the x86 markets better.
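On the compression remark, here is a minimal sketch of why splitting a job into independently compressed pieces can cost ratio. It is a deliberately exaggerated toy, not how any real multithreaded LZMA2 tool splits its input: the data is one random block repeated, the chunk size is chosen to match the block, and the helper names are hypothetical. It only uses Python's standard `lzma` module.

```python
import lzma
import random


def make_data(block_size=64 * 1024, repeats=16, seed=0):
    """Build test data with long-range redundancy: one random block, repeated.
    (Assumed toy input, chosen to make the effect obvious.)"""
    rng = random.Random(seed)
    block = bytes(rng.getrandbits(8) for _ in range(block_size))
    return block * repeats


def single_stream_size(data):
    """Compress everything as one stream, so later repeats can reference
    earlier data through the shared dictionary."""
    return len(lzma.compress(data))


def chunked_size(data, chunk_size):
    """Compress each chunk independently, as a stand-in for per-thread work.
    No chunk can reference data that lives in another chunk."""
    total = 0
    for start in range(0, len(data), chunk_size):
        total += len(lzma.compress(data[start:start + chunk_size]))
    return total


if __name__ == "__main__":
    data = make_data()
    print("original bytes:     ", len(data))
    print("single stream bytes:", single_stream_size(data))
    print("16 chunks bytes:    ", chunked_size(data, 64 * 1024))
```

Real tools use much larger blocks per thread, so the penalty is far smaller than in this toy, but the mechanism is the same: redundancy that spans a block boundary can't be exploited.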

Secondly, there aren't any Steamroller products, like Kaveri APUs, in those links you provided above.

Blame reviewers for that. I get annoyed at that segmentation in reviews, myself. For some reason, a Core i3 with improved 4th-gen IGP is worth testing with a dGPU, but hey, nobody's going to buy an FM2+ CPU and add a dGPU, so why bother (just ignore the newer chipsets, cheaper mobos, and nearly the same pricing per core as the FX line)? Likewise, nobody looking at an i3 and dGPU is going to give an AMD a second thought, so why bother comparing them? And that says nothing of i5s. If nothing else, testing them together would make it easier to compare the relative bang/buck for desktop machines. I'd like more sites to test a more representative range, rather than most of them testing a handful of models near one end of the price spectrum, and skipping some models because they see them as being in different markets. Also, I wish video card reviews of non-high-end models would compare to a common IGP, for a good reference point.