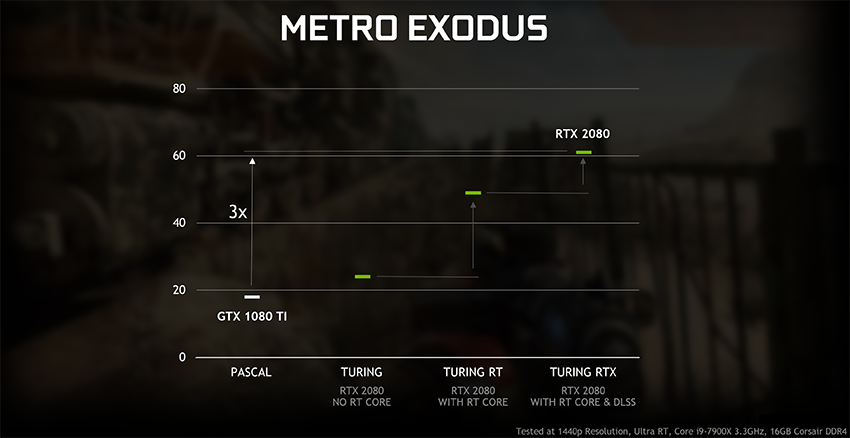

Also, I'm not sure why you take BF5 as the reference, a title where only the reflections on reflective surfaces are considered. Why not take a title like Metro Exodus as the reference, where all the GI calculations are done on RT cores and where they have to shoot a photon at almost every pixel? As far as I am concerned, using ray tracing for GI and shadows has a much bigger impact than reflections.

I'm taking BF5 as the reference because it's the only real game I've seen benchmarked on both Turing and Volta with ray tracing enabled. Metro Exodus would be a useful addition. I too feel GI and shadows are much more important than reflections, but my limited experience says these can be implemented with a lower performance impact (GI especially should respond better to downsampling and other performance-enhancing tricks which would otherwise likely ruin image quality in reflections).

I am sure that the NVidia engineers did precisely such an estimation when deciding to go for RT cores. Current indications are that a 45% speed-up is on the low side, and your estimate of a 20-30% performance gain at iso-area looks to be on the high side of the spectrum. That is still a discrepancy of 15-25%, mind you, which cannot be easily mitigated within the same technology.

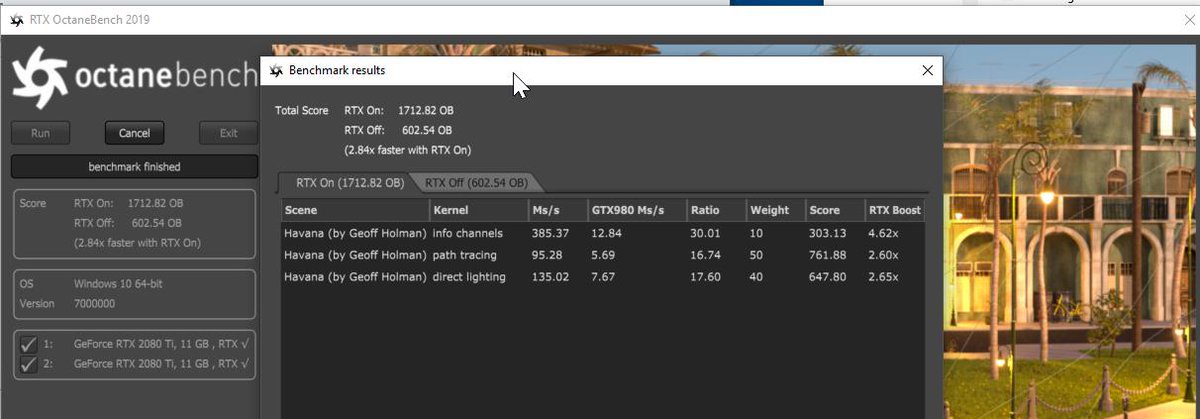

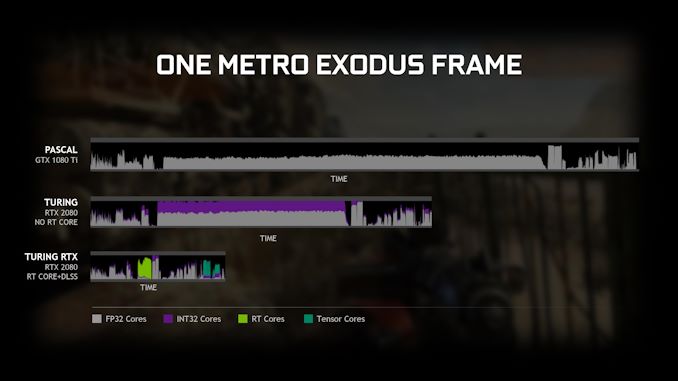

RT cores are definitely faster than general cores, much faster than 45%. Use the GPU to render a fully ray-traced scene and Turing probably obliterates Volta, but that process won't be in real time anymore. If we want real-time, we use hybrid rendering, with RT taking up only a fraction of the time needed to render the frame. The rest of the time is directly dependent on raster performance. In the example from Nvidia the RT portion takes only roughly 10% of the frame time.

If we were to assume Turing was 100% faster at RT than Volta, the frame above would only take 10% longer to render on Volta. We can tilt this ratio to favor RT cores, but doing so tanks overall performance hard enough that only benchmarks can afford it.
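To put numbers on that, here is a rough sketch of the frame-time split. The 10% RT share is the figure from the Nvidia example above; the function name and the flat "RT work takes twice as long on Volta" input are illustrative assumptions, not measurements, and the model ignores any overlap between RT and raster work.

```python
def relative_frame_time(rt_share: float, rt_slowdown: float) -> float:
    """Frame time relative to the baseline when only the RT portion changes speed.

    rt_share:    fraction of the baseline frame spent on ray tracing (0..1)
    rt_slowdown: how many times longer the RT work takes on the other card
    """
    raster_share = 1.0 - rt_share  # raster time is assumed to be unchanged
    return raster_share + rt_share * rt_slowdown


# Baseline: a Turing frame with ~10% of its time spent on RT.
# Assumption: Volta needs twice as long for the same RT work.
volta = relative_frame_time(rt_share=0.10, rt_slowdown=2.0)
print(f"Volta frame time: {volta:.2f}x the Turing frame")
```

With these inputs the RT portion grows from 10% to 20% of the original frame while the remaining 90% stays put, which is where the 10% figure comes from.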

As for my estimates of 20-30% perf gain at iso-area, keep in mind I was talking about a jump from Volta to non-RTX Turing - from a compute oriented arch to a "nimble" gaming oriented product. Take a look at them side by side and judge for yourself:

There are FP64 units and Tensor cores in there which, if dropped, could arguably free up plenty of room for more compute units, even with added FP16 units. I'm inclined to believe a 10% increase going from Volta to non-RTX Turing would not only be possible, but rather on the low side of the spectrum. Then comes the frequency question. Titan RTX lists 10%+ higher base clocks and 20%+ higher boost clocks than Titan V. Again, a 10% increase in clocks in power-limited scenarios seems adequate. Add these two together and a 20% perf increase is already probable.

We should also keep in mind that performance increases in general compute cores pay off twice in frame time (as opposed to specialized hardware), since they lower both RT and raster time. For the example above (with a 1:9 RT-to-raster ratio), a 20% increase in overall performance may allow the general compute card to spend 100-200% more time on ray tracing than it did before, assuming the same performance target. On the other hand, a 200% increase in RT core performance would yield a ~7% improvement in FPS.
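Both figures can be checked with a few lines of arithmetic. This is a minimal sketch that assumes the 1:9 split above, a frame where RT and raster work simply add up, and hypothetical function names; real frames overlap work and hit bandwidth limits, so treat it as a back-of-the-envelope check only.

```python
RT_SHARE = 0.10      # RT portion of the baseline frame (the 1:9 split)
RASTER_SHARE = 0.90  # raster portion of the baseline frame


def fps_gain_from_rt_speedup(rt_speedup: float) -> float:
    """FPS multiplier when only the RT portion gets faster."""
    new_frame_time = RASTER_SHARE + RT_SHARE / rt_speedup
    return 1.0 / new_frame_time


def rt_budget_at_same_fps(overall_speedup: float) -> tuple:
    """RT headroom when the whole card gets faster and the frame time is kept.

    Returns (time multiplier, work multiplier) relative to the baseline RT portion.
    """
    raster_time = RASTER_SHARE / overall_speedup  # raster finishes sooner
    rt_time = 1.0 - raster_time                   # the rest of the frame goes to RT
    rt_work = rt_time * overall_speedup           # RT itself also runs faster
    return rt_time / RT_SHARE, rt_work / RT_SHARE


# A 200% increase in RT performance means the RT portion runs 3x faster.
gain = (fps_gain_from_rt_speedup(3.0) - 1.0) * 100
print(f"RT-only 3x speedup: +{gain:.1f}% FPS")

# A 20% faster general-purpose card at the same frame time.
time_mult, work_mult = rt_budget_at_same_fps(1.2)
print(f"RT time budget: {time_mult:.1f}x, RT work budget: {work_mult:.1f}x")
```

Under these assumptions the faster general card gets 2.5x the wall-clock time for RT (150% more) and can do 3x the RT work (200% more), which sits inside the 100-200% range quoted above, while the 3x RT-only speedup lands at about 7% more FPS.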

It's only when the RT ratio starts to go up, when we use it for reflections, GI, shadows and maybe more at the same time, that the numbers make a lot more sense for specialized hardware. One can argue that won't be happening in the near future, as today's RTX cards wouldn't be able to handle such a load anyway. For now, all we can reasonably do is look at RT-enabled games running at decent FPS and try to extrapolate where that threshold is. To me, the 45% performance advantage Turing has over Volta is not convincing enough, for the reasons outlined above, especially considering it turns into a 25% perf advantage if we run Low RT details.
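One way to get a feel for that threshold is to ask at what RT share of the frame a faster RT block matches a faster general-purpose card. The sketch below reuses two figures from this discussion as illustrative inputs only (a 20% general speedup and a 3x RT-only speedup); the function names and the simple additive frame model are assumptions.

```python
def fps_gain_rt_only(rt_share: float, rt_speedup: float) -> float:
    """FPS multiplier when only the RT share of the frame is accelerated."""
    return 1.0 / ((1.0 - rt_share) + rt_share / rt_speedup)


def breakeven_rt_share(general_speedup: float, rt_speedup: float) -> float:
    """RT share of the frame at which both upgrades give the same FPS.

    Solves 1 / ((1 - s) + s / rt_speedup) == general_speedup for s.
    Requires rt_speedup > 1.
    """
    return (1.0 - 1.0 / general_speedup) / (1.0 - 1.0 / rt_speedup)


share = breakeven_rt_share(general_speedup=1.2, rt_speedup=3.0)
print(f"break-even RT share: {share:.0%}")
print(f"FPS gain from RT hardware at that share: {fps_gain_rt_only(share, 3.0):.2f}x")
```

With these inputs the break-even lands at roughly a quarter of the frame time: below that share the general-purpose gain wins in this model, above it the dedicated RT hardware pulls ahead.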

Give me an offline render scenario and I'll beg for Turing. Give me a modern, fast-paced game, and I'll start asking questions.