[computerbase] Ashes of the Singularity Beta1 DirectX 12 Benchmarks

Page 45


airfathaaaaa

Senior member

- Feb 12, 2016

- 692

- 12

- 81

What I was trying to say is that this "DX12.1" doesn't exist, simply because it was already in DX11.3. As Microsoft has pointed out, DX12 as we know it is just DX11.3 but with the low-overhead commands.

Yes, that is what I am trying to explain to that new poster.

Some support full 11.3, some only the DX12 part. Technically both are correct, but both are wrong too.

PhonakV30

Senior member

- Oct 26, 2009

- 987

- 378

- 136

Behrouz, remember Nvidia DX11.1 and AMD DX11.2? And it's far from the last time for both.

Ah, I remember. Fair enough.

3DVagabond

Lifer

- Aug 10, 2009

- 11,951

- 204

- 106

They never said "full support".

FL12_1 is for advanced graphics effects. AoS is an outdated game with literally no graphics and a huge amount of compute processing.

Still in denial?

http://blogs.nvidia.com/blog/2015/01/21/windows-10-nvidia-dx12/

Now please deny it again. Say this is a lie.

Is that how you admit you were wrong? Because I'm having a hard time figuring it out.

xthetenth

Golden Member

- Oct 14, 2014

- 1,800

- 529

- 106

Oxide will give loads of excuses for why they did not use these features and only went with AC (Async Compute), because it clearly gives the advantage only to AMD, which is totally fine; AMD can do that. Some users will troll and complain when AMD cards stutter and deliver low fps in games like Just Cause 3, Rise of the Tomb Raider, Quantum Break and other upcoming Nvidia and MS titles, simply because they cannot use DX12.1 features.

I simply refunded AotS because it is totally meant for AMD gamers, so they can enjoy this game.

There's one very obvious reason why they used the features they did. It lets them make the game they wanted to make.

As far as the rest of your post, as an AMD user I hope my card underperforms like it does in Rise of the Tomb Raider, because it's underperforming faster than the NV equivalent. Maybe by the end of the generation my 290 will underperform the heck out of the 980.

I'm sorry that you're stuck with a card that's getting beaten by its counterpart's underperformance.

Silverforce11

Lifer

- Feb 19, 2009

- 10,457

- 10

- 76


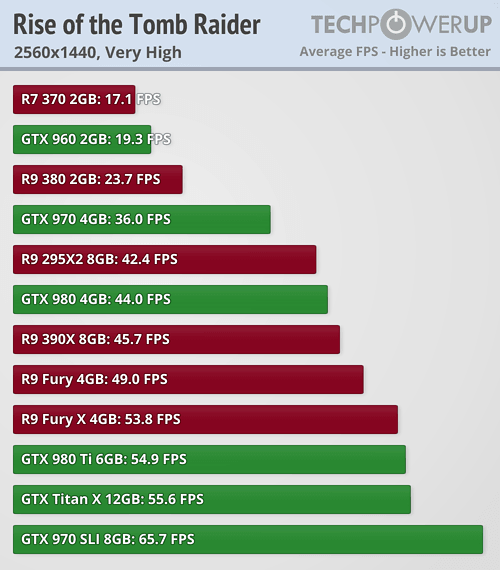

Yeah, the 390/X (or rather, a non-reference 290/X) is under-performing so much in new DX11 games that it's trashing the 970 and even the 980 in most of the major AAA titles lately!

Hopefully it keeps on under-performing.

Even the 970 at over 1.5 GHz struggles against the under-performing old Hawaii chip.


What a cherry-picked benchmark.

Surely even you know that 99% of people who buy a GTX 970 do not use it at 1440p.

Here are the benchmarks:

http://www.pcgameshardware.de/The-Division-Spiel-37399/Specials/Beta-Benchmark-Test-1184726/

http://www.pcgameshardware.de/Far-Cry-Primal-Spiel-56751/Specials/Benchmark-Test-1187476/

http://www.pcgameshardware.de/The-Division-Spiel-37399/Specials/Benchmarks-1186853/

Even AMD's Roy said that PCGamesHardware.de is a very good benchmark site.

Why are you posting HardOCP benchmarks when you said they are paid by Nvidia? Do you not have any principles?


Mahigan

Senior member

- Aug 22, 2015

- 573

- 0

- 0


That is an absolutely and unequivocally untrue, unfounded statement.

While both AMD and NVIDIA GPUs did suffer from stuttering and performance issues when Just Cause 3 (DX11) launched, none of that had to do with DX12 FL12_1 support (as you attempted, and failed, to argue in the Pascal thread).

For AMD,

Two issues affected Just Cause 3 upon launch:

1. Systems with 8GB of memory stuttered because the game used nearly 10GB.

2. A lack of QA on the developer's end, due to GameWorks, to ensure proper AMD support via drivers, which caused visual anomalies.

AMD rectified these issues with their 15.11.1 hotfix drivers.

For NVIDIA,

One issue affected Just Cause 3 upon launch:

1. Systems with 8GB of memory stuttered because the game used nearly 10GB.

Contrary to your claims, these issues had nothing to do with a lack of Conservative Rasterization (CR) or Rasterizer Ordered Views (ROVs).

If anything, Rise of the Tomb Raider under DX12 should run quite well on AMD graphics cards due to the inclusion of multi-threaded rendering. CR might bring performance benefits when running VXAO (voxel-based ambient occlusion) in this title.

Most likely, AMD will need a driver to tune framebuffer usage on their 4GB GPUs, but that shouldn't lead to the sort of sensationalist claims you're making.

I also have not heard of Remedy implementing Conservative Rasterization or ROVs in Quantum Break. I may be wrong but I haven't read or found anything which justifies this claim.

CR and ROVs aren't performance-enhancement options per se. ROVs are a drain on performance, whereas CR can enable new post-processing effects with a lower performance hit: effects you can toggle on and off, like AO.

As for your opinions on AotS, Oxide, etc., they're unfounded, unproven conspiracy theories which contradict all of the information I have been able to source. You're free to hold those opinions, but since they aren't based on evidence, they're baseless.

thesmokingman

Platinum Member

- May 6, 2010

- 2,302

- 231

- 106


They are; it is marketed with Nvidia.

https://www.youtube.com/watch?v=kAQRwtfhtFU.

99% of the time, MS games are marketed with Nvidia.

Mahigan

Senior member

- Aug 22, 2015

- 573

- 0

- 0

Even if they're marketed by NVIDIA, it doesn't mean they use CR or ROVs.

Microsoft has a contract with NVIDIA. Anytime they hold an event, NVIDIA GPUs are used to power the games.

I also see no mention of Quantum Break being a GameWorks title: http://remedygames.com/quantum-break-pre-orders-and-previews-launch-windows-10-version-announced/


It will be, and the result will be the same as GOW. You wanna bet?

Mahigan

Senior member

- Aug 22, 2015

- 573

- 0

- 0

You do realize that Quantum Break was developed for the Xbox One, right? The PC version is being developed in-house at Remedy (not by some cheap, know-nothing third-party developers).

If anything, Quantum Break might show parity between NVIDIA and AMD.


Rise of the Tomb Raider was developed for the Xbox One.

Alan Wake was Nvidia-sponsored. Remedy has a history with Nvidia, and 99% of MS games end up Nvidia-sponsored.

https://twitter.com/RiotRMD/status/697879718478086145

He did not comment or say no, and the same happened when Alan Wake launched.

Silverforce11

Lifer

- Feb 19, 2009

- 10,457

- 10

- 76


pcgameshardware.de uses heavily overclocked models for their NV cards, with high boost clocks; nice cherry-picking, though. And their Tomb Raider result is invalid: it uses the pre-release alpha build, not the actual release build, which fixed AMD performance.

Maybe you weren't aware of that and thought it was legit. PCPer, TPU and Computerbase.de used the release build to benchmark, and the 390 is heaps faster than the 970.

The 970 can't even enable Very High textures without crazy stutters: https://www.youtube.com/watch?v=Gki0dV2kcqM

This was before AMD's optimized drivers a few days after release:

That 390X ~= non-reference 290X. Under-performing so much!

How about a video run-through, so you can't deny it, even at your favorite 1080p?

https://www.youtube.com/watch?v=cMUbHdQBSiQ

https://www.youtube.com/watch?v=fq6GyUzyuJQ

https://www.youtube.com/watch?v=Jne8VWuE2a4

These guys (Digital Foundry) even use non-reference 970 models, custom ones that, as you know, already boost above reference clocks. And the 970 still gets wrecked.

So much under-performance there from GCN! -_-


Yes, you are totally right. The GTX 980 Ti should be underclocked to 1050 MHz and its memory to 500 MHz to make the benchmark fair, because it is Nvidia's fault that their cards are extreme overclockers and the Fury X is not.

So true, Silverfox, it is the post of the year.

Mahigan

Senior member

- Aug 22, 2015

- 573

- 0

- 0


Yes,

And once AMD released their 16.1.1 hotfix drivers:

http://m.hardocp.com/article/2016/02/15/rise_tomb_raider_video_card_performance_review/1

Basically,

AMD wins everywhere except at the top end (and loses only by a bit there). This is under a DX11 title. We've seen what happens to AMD's performance under DX12, even without Async Compute.

As for the VRAM limits of the Fury series, HardOCP revisited that later and found that they were wrong: http://m.hardocp.com/article/2016/02/29/rise_tomb_raider_graphics_features_performance/

HardOCP are big time NVIDIA supporters too. Check out their forums.

So I'm not sure what you're getting at... Tomb raider performance at launch?

As for the rest of your post: mostly assumptions, no evidence, thus baseless.

Silverforce11

Lifer

- Feb 19, 2009

- 10,457

- 10

- 76

@desprado

In your world, you think it's fair to pit factory-OC models that boost above 1.4 GHz against stock cards.

Were you around when GCN in the 7800 and 7950 series did massive 50% overclocks? Would you have thought it fair back then if review sites had compared them, running such massive overclocks, against stock NV GPUs?

This is why there are standards in tech journalism: OC ability varies from one generation to another, and if you start pitting massively overclocked models against stock cards, you lose credibility because the data you generate is biased. Hence sites like AnandTech, TPU, Guru3D and Computerbase.de continue to be the standard that gamers respect, because they are consistently fair.

Btw, the Computerbase results even include factory-OC 970 and 980 Strix/Gaming cards that boost above reference 970/980 clocks.

They still got trashed.

You must be one of the few still denying that GCN is maturing well and performing great in new games.


Why don't you post benchmarks of Rise of the Tomb Raider, GOW and other 2016 games?

You are talking about the GTX 970 and GTX 980 while posting 1440p benchmarks.

So you mean to say that journalists should underclock the GTX 980 Ti to Fury X levels, like 1050 MHz core and 500 MHz memory, right?


Silverforce11

Lifer

- Feb 19, 2009

- 10,457

- 10

- 76

Why don't you post benchmarks of Rise of the Tomb Raider, GOW and other 2016 games?

You are talking about the GTX 970 and GTX 980 while posting 1440p benchmarks; that is the reason even developers do not take the AMD community seriously.

So you mean to say that journalists should underclock the GTX 980 Ti to Fury X levels, like 1050 MHz core and 500 MHz memory, right?

I did post Rise of the Tomb Raider. Do you have a problem with English charts?

Results from sites that tested the pre-release build (the one gamers did not have access to) are invalid. Only sites that tested the actual release/Steam build have correct data.

You haven't heard of VSR/DSR? 1080p gamers can enjoy better image quality too. -_-

The videos I linked are all 1080p, in fact, and they are 2016 games. Not sure what you are getting at; maybe you just don't care for facts.

I meant to say tech journalists should do reference cards vs reference cards like they used to do and like reputable sites still do. If they want to do OC vs OC, then do an article on that separately.

Dial the clock back a few years: the 7950 with its 50% OC trashed the 680 OC for half the price. Yet it would be very unfair to pit OC AMD GPUs against stock NV GPUs, so reputable sites did not do that.

What if, next gen, Polaris is an amazing overclocker like the 7800/7900 series? Do you want review sites to throw OC AMD GPUs against stock NV GPUs? Don't throw OC GPUs into a stock lineup; it's clearly biased. Do you not understand?

That's why we have standards.

Review sites don't do that because even a normal person knows the Fury X cannot be overclocked, and if it is, the results are only 5% better. It is a waste of the review site's time to show a GTX 980 Ti OC vs a Fury X OC.

Silverforce11

Lifer

- Feb 19, 2009

- 10,457

- 10

- 76


Fury X and the 980Ti aren't the only GPUs gamers care about.

With +vcore (which has been available in Afterburner for a while), it can reach at least 1.2 GHz: not great, but not 5%. -_-

Now, you didn't address the important point about having standards and being neutral, and why sites with a good reputation have maintained this standard practice.

Do you not understand that randomly throwing a massively overclocked GPU into a stock lineup is biased?

Would you be OK with it if Polaris overclocked amazingly like the 7800 and 7900 series, and sites started throwing OC Polaris GPUs into their charts to compare against stock NV GPUs?


A 15% OC for a 10% in-game performance gain; pretty good, I'd say.
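For reference, the arithmetic behind that claim can be sketched in a few lines of Python. This is only an illustration under stated assumptions: "scaling efficiency" (performance gain divided by clock gain) and the helper names `oc_scaling_efficiency` and `clock_gain_pct` are hypothetical, not something anyone in the thread defined. The 15%/10% figures and the 1050 MHz stock / ~1.2 GHz overclocked Fury X core clocks come from the posts above.

```python
def oc_scaling_efficiency(clock_gain_pct: float, perf_gain_pct: float) -> float:
    """Hypothetical metric: fraction of an overclock that shows up in-game.

    1.0 would mean performance scales perfectly with core clock; lower
    values mean part of the clock increase does not translate into fps.
    """
    if clock_gain_pct <= 0:
        raise ValueError("clock gain must be positive")
    return perf_gain_pct / clock_gain_pct


def clock_gain_pct(stock_mhz: float, oc_mhz: float) -> float:
    """Percentage increase from a stock clock to an overclocked clock."""
    return (oc_mhz - stock_mhz) / stock_mhz * 100.0


# "15% OC for 10% in game perf gain", as stated in the thread.
eff = oc_scaling_efficiency(15.0, 10.0)
print(f"scaling efficiency: {eff:.2f}")  # prints "scaling efficiency: 0.67"

# Fury X stock core clock (1050 MHz) vs. the ~1.2 GHz reached with +vcore.
print(f"Fury X core OC: {clock_gain_pct(1050, 1200):.1f}%")  # prints "Fury X core OC: 14.3%"
```

So roughly two thirds of the clock increase carries through to in-game performance, and 1050 to 1200 MHz is indeed about a 14 to 15% overclock, far more than the 5% claimed earlier.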



AnandTech is part of Future plc, an international media group and leading digital publisher.

© Future Publishing Limited Quay House, The Ambury, Bath BA1 1UA. All rights reserved. England and Wales company registration number 2008885.