- Jan 15, 2000

- 7,052

- 0

- 0

I'm studying some practice problems for a programming interview and wanted to get some guidance from you guys. The question asks to write a method that will print out the path to the deepest leaf node in a binary tree. I've figured out how to do that, but wanted to make sure I'm computing the run-time of my algorithm correctly.

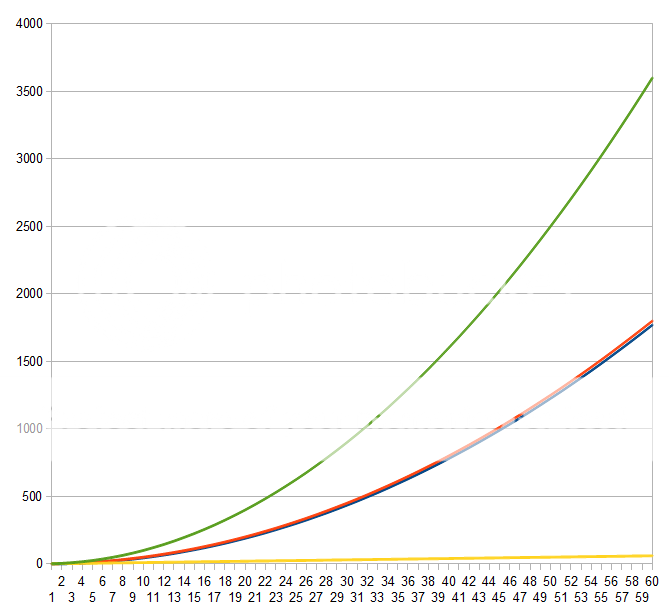

From my observation, the runtime of this algorithm will be O(n^2). It's n^2 because in the worst case (unbalanced tree) we will visit every node, and for each node we must revisit (n-1), (n-2), (n-3) nodes again and computing their heights as we traverse down the tree. Is this correct or am I missing something here?

From my observation, the runtime of this algorithm will be O(n^2). It's n^2 because in the worst case (unbalanced tree) we will visit every node, and for each node we must revisit (n-1), (n-2), (n-3) nodes again and computing their heights as we traverse down the tree. Is this correct or am I missing something here?

Code:

void PrintStringToDeepestNode(Node *pRoot)

{

if (!pRoot)

{

// Root is NULL, base case to break out of recursion

return;

}

// Print current node value

printf("%c\n", pRoot->c);

// Determine which child subtree has the greater height then recursively

// traverse down that path. If both heights are equal, the right subtree

// is automatically chosen

if (ComputeHeight(pRoot->left) > ComputeHeight(pRoot->right))

{

PrintStringToDeepestNode(pRoot->left);

}

else

{

PrintStringToDeepestNode(pRoot->right);

}

}

int ComputeHeight(Node *pRoot)

{

if (!pRoot)

{

// If root is NULL, return -1 to indicate no height

return -1;

}

else if (!pRoot->left && !pRoot->right)

{

// If root has no children, return 0

return 0;

}

else

{

// Determine node height as follows:

// height = 1 + height(max_child_subtree)

return std::max(1 + ComputeHeight(pRoot->left),

1 + ComputeHeight(pRoot->right));

}

}