ASUS has done a tremendously good job engineering a robust video card that takes the AMD Radeon RX 480 GPU to new heights. The ASUS ROG STRIX RX 480 O8G GAMING supports a high voltage setting, and it works: combined with the excellent DirectCU III cooling and the Power Limit slider, it let us sustain a high, consistent overclocked clock speed. The DirectCU III cooling solution works very well, keeping the GPU cool while staying quiet. Even when overclocked, with the fan speed manually increased, the noise level was more than tolerable and temperatures were excellent. You could probably lower the fan speed from what we set and still hold the overclock we achieved.

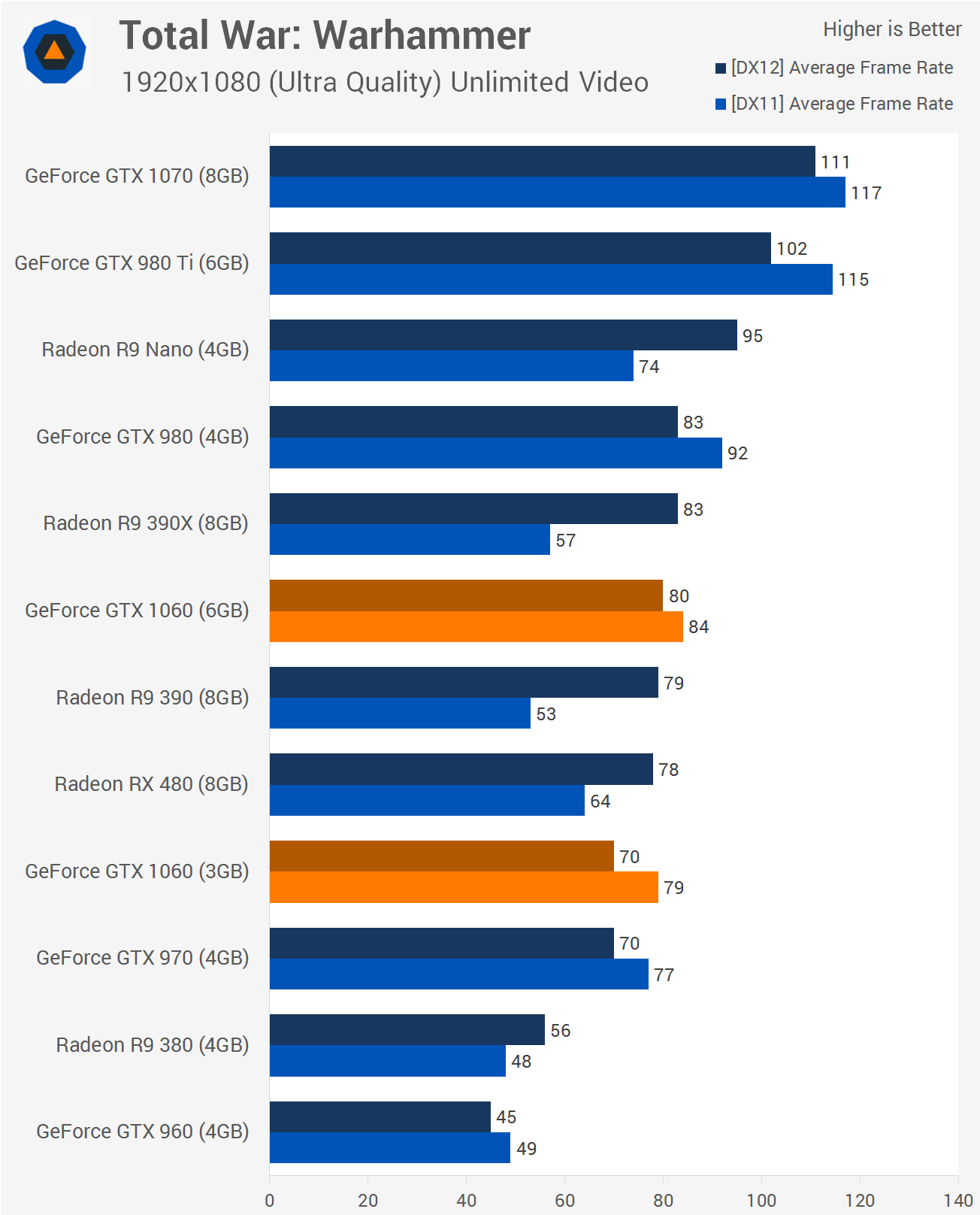

As we mentioned, this is the highest overclock we’ve achieved yet with any AMD Radeon RX 480 GPU. The overclock improves performance a great deal over both the card’s default out-of-box clock speeds and a reference AMD Radeon RX 480. It provides a real, tangible benefit to the gameplay experience. With the overclock, the ASUS ROG STRIX RX 480 O8G GAMING video card is strong competition for highly overclockable GeForce GTX 1060 video cards, as we have shown.

The ASUS ROG STRIX RX 480 O8G GAMING video card carries an MSRP of $299, but you do get a lot of potential and customized hardware for that price. Thankfully, current price cuts and rebates make this video card less expensive: it can be had for $259.99 after a $20 mail-in rebate (MIR) at both Amazon and Newegg.

At $260, this video card competes well with highly overclockable GeForce GTX 1060 video cards like the ones we compared it against in this evaluation. At that price, you know you’ll be getting a video card that has what it takes to push the AMD Radeon RX 480 GPU to its performance limits.

If you are looking for one of the best AMD Radeon RX 480-based video cards out there this holiday season, the ASUS ROG STRIX RX 480 O8G GAMING should be on your short list.