What an understatement

And it looks like it doesn't want to die. Yet.

Yes, A13 is competitive against Intel chips but the emulation tax is about 2x. So given that A13 ~= Intel, for emulated x86 programs you'd get half the speed of an equivalent x86 machine. This is one of the reasons they haven't yet switched.
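To make that arithmetic explicit, here is a minimal sketch. The function name is made up for illustration, and both inputs are the rough figures quoted above (A13 ~= Intel, ~2x emulation tax), not measurements.

```python
def emulated_speed(native_ratio: float, emulation_tax: float) -> float:
    """Speed of emulated x86 code relative to an equivalent native x86 machine.

    native_ratio:  how fast the ARM chip runs native code versus the x86 chip
                   (1.0 means parity, the assumption made in the comment above).
    emulation_tax: slowdown factor when running x86 code under emulation
                   (2.0 is the rough figure quoted above, not a benchmark).
    """
    return native_ratio / emulation_tax


if __name__ == "__main__":
    # Parity on native code, ~2x emulation tax: half the speed of the x86 box.
    print(emulated_speed(1.0, 2.0))
```

The same function shows how the conclusion moves if either assumption changes: a smaller tax or a faster native chip shrinks the gap.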

Another reason is that it would prevent the use of Windows on their machines, something some say is very important.

The level of ignorance in this thread would be shocking if it weren't depressing.

Let's state some basics:

(a) History. Apple has never let backward compatibility limit what they do. They are not Intel, they are not Windows. They don't sell perpetual compatibility as a feature. Christ, the big weeping and wailing THIS YEAR (every year there's a big weeping and wailing...) is about Catalina dropping all 32-bit compatibility.

Apple has switched from 68K to PPC to x86 (to ARM on iDevices). They have switched from 32 to 64 bits multiple times. Every one of those transitions was smooth. Most people never even noticed. Of course there is that very particular internet personality who is still whining five years later how some transition killed his cat and ate his dog... Do you want to base your analysis of the future on Mr Grumpy, or on the lived experience of most users?

(b) Why don't most users notice? Because

- most users only use Apple apps. I cannot stress enough how important this is. Apple apps cover most of what people want, and they are very good. And Apple apps will transition to ARM COMPLETELY when Apple transitions. Apple is not MS, randomly leaving bits and pieces of the OS to rot away and never recompiling them.

- most Apple developers are used to change. If they're not, they're bankrupt. Apple tells them years in advance to adopt some technologies and stop using others, and if they refuse to do so THEIR APPS STOP WORKING. (Which includes, as a side effect, that people stop paying for them...) If you ship a GL app today, you're an idiot. If you ship a Carbon app today, it won't work on Catalina. If you ship a PPC assembly app, well, good luck being part of your little community of three other fanatics living in the past. Apple developers have apps written in high-level languages, not assembly. So they retarget by recompiling.

- Apple has long experience in how to allow their developers to easily create fat binaries that run on multiple architectures. They'll have a version of Xcode that does this as appropriate (probably announced at WWDC next year) and any developer that's not actually dead will be shipping fat binaries by the time the consumer ARM Macs ship.

- Apple is used to shipping emulators, JITs, and similar machinery that can handle old binaries. Apple doesn't have much interest in supporting this long-term. But they will provide such support for the first few years. People's old x86-64 apps will work (not as fast as before, but they will work) for a year or so, while people who rely on them find an alternative.
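For anyone who hasn't looked inside one, a fat binary is just a small header followed by one complete executable per architecture. Here's a rough Python sketch of that header layout. The magic number and CPU type constants are the standard Mach-O ones; the slice offsets and sizes are made up for illustration, and `build_fat_header` / `list_architectures` are hypothetical helper names, not any Apple API. On a real Mac you would just ask a tool like `lipo` instead.

```python
import struct

# Standard Mach-O constants (big-endian on disk).
FAT_MAGIC = 0xCAFEBABE
CPU_TYPE_X86_64 = 0x01000007
CPU_TYPE_ARM64 = 0x0100000C
CPU_NAMES = {CPU_TYPE_X86_64: "x86_64", CPU_TYPE_ARM64: "arm64"}

FAT_HEADER_SIZE = 8   # magic + number of slices
FAT_ARCH_SIZE = 20    # cputype, cpusubtype, offset, size, align


def build_fat_header(slices):
    """Build a synthetic fat header.

    slices: list of (cputype, cpusubtype, offset, size, align) tuples.
    The values used in the demo below are invented, not from a real file.
    """
    header = struct.pack(">II", FAT_MAGIC, len(slices))
    for entry in slices:
        header += struct.pack(">IIIII", *entry)
    return header


def list_architectures(data):
    """Return the architecture names listed in a fat header."""
    magic, count = struct.unpack_from(">II", data, 0)
    if magic != FAT_MAGIC:
        raise ValueError("not a fat binary header")
    names = []
    for i in range(count):
        (cputype,) = struct.unpack_from(">I", data, FAT_HEADER_SIZE + i * FAT_ARCH_SIZE)
        names.append(CPU_NAMES.get(cputype, hex(cputype)))
    return names


if __name__ == "__main__":
    # Two hypothetical slices: one x86_64, one arm64.
    demo = build_fat_header([
        (CPU_TYPE_X86_64, 3, 0x4000, 0x10000, 12),
        (CPU_TYPE_ARM64, 0, 0x14000, 0x10000, 14),
    ])
    print(list_architectures(demo))
```

The loader picks the slice matching the machine it is running on, which is why users never see the difference.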

(c) Why is this all true for Apple but not Microsoft? Because, repeat after me kids, APPLE IS NOT MICROSOFT!

I'm not interested in arguing about the values of perpetual compatibility and caution vs perpetual change and risk taking. Both have their place, both attract different personalities. It's just a fact that MS is one, Apple is the other. One consequence is that when Apple makes a change like this, it goes all in. Once Apple has decided on ARM Macs, the entire line will be ARM Macs within a year. Within ~three years the old x86 Macs will stop getting new APIs. After ~five years they'll stop getting OS updates, after ~seven years they'll stop getting security updates. Meanwhile MS still can't decide 20 years later whether it wants to fully support .NET and CLR or not. It remains wishy-washy about its ARM support.

This has consequences. It means that Apple developers are used to rebuilding every year, whereas many Wintel developers never even bother trying to compile for ARM. It means few Apple users are running code that was compiled more than a year ago --- because such code, even if it's still sorta compatible, doesn't work well on the newest devices; and because the kinds of developers who don't update their code frequently don't make any money on Macs.

(d) This may come as a shock to you, but the number of people who actually give a damn about Windows running on their Macs is minuscule. Why would they? Everything they want exists in Mac form. Sure, if you hang out in certain places it sounds like this is a super-important issue --- just like if you hang out in certain places everyone will insist that gaming on Linux is like, totally, the economic foundation of the computer entertainment industry...

(e) For those people who absolutely insist on still running Windows on their Macs, here's another surprise: It's no longer 1995, and we have new technologies!

If you require x86+Windows in the modern world, the way you will get it is through a PC in a thumb drive that will communicate with the host Mac's virtualized IO and screen. Basically what Parallels does today, but with the x86 code running on a thumb PC and communicating via USB-C. Apple probably won't even do the work, they'll just let Parallels or VMware write the code and drivers for both ends and sell the thumb PCs; all Apple will do is work with them if there are any particular technical issues that come up. Expect to see one or both of them demo-ing such a solution when Apple announces the ARM Macs to consumers.

NO-ONE is going to bother with some sort of hydra chip that either executes both ARM and x86 code, or even that puts an x86 core on the ARM SoC.

There is a constant thread going through the people who say Apple can't do this: they are people who haven't studied the history of Apple, and who don't use Apple products very much. They look at this from the point of view of how it would fall out if MS did this. But, what did I say, kids, APPLE IS NOT MS!

Apple USERS are not MS users -- different expectations, different software on their machines.

Apple DEVELOPERS are not MS developers -- used to change, used to supporting multiple OSs and ISAs.

As for why Apple would do this:

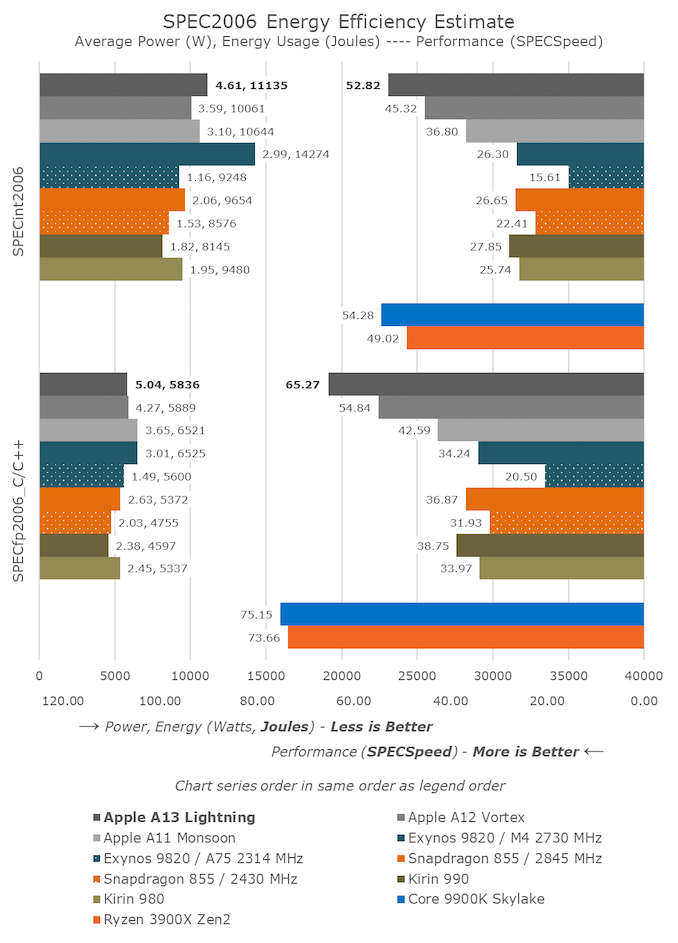

(a) performance. Apple ALREADY matches the best performance Intel has in their "standard" cores. Look at the numbers. The phone cores do this under phone constraints (most significantly a current constraint). The iPad cores tend to run 5..10% higher performance partially because the current constraint is not so strict, partially because they have wider memory. Obviously the Mac cores would be at the very least the iPad cores, with that wider memory, and with even less severe current constraints.

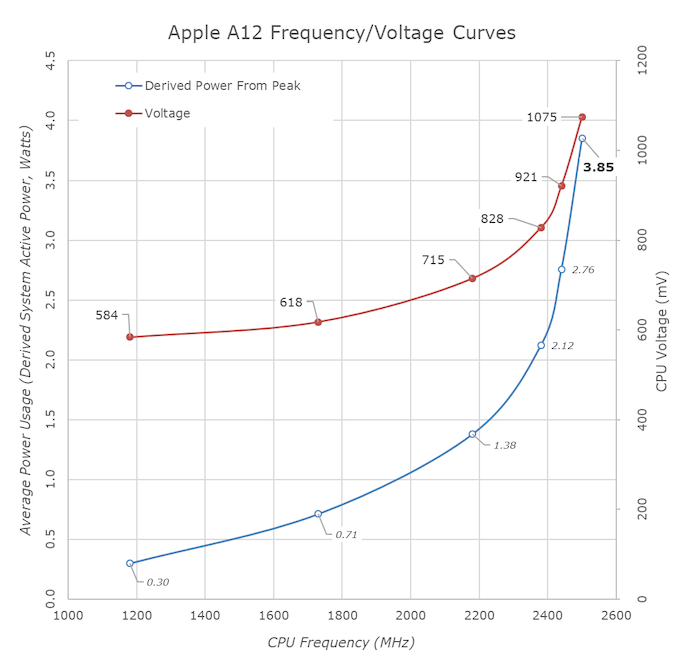

Apple does this by IPC, not frequency, and if you insist that frequency matters, all you're showing is your ignorance. Intel built themselves a massive hole (that they now apparently can't get out of) because they obsessed over frequency too much (apparently learning NOTHING from how the same obsession failed with the P4).

The one place Apple still lags Intel in a measurable way, for some tasks, is code that can use wide vectors (i.e. AVX-512). Presumably Apple will soon (maybe even next year) have SVE which takes care of that.

(b) SMT. Another sure sign of the ignorant.

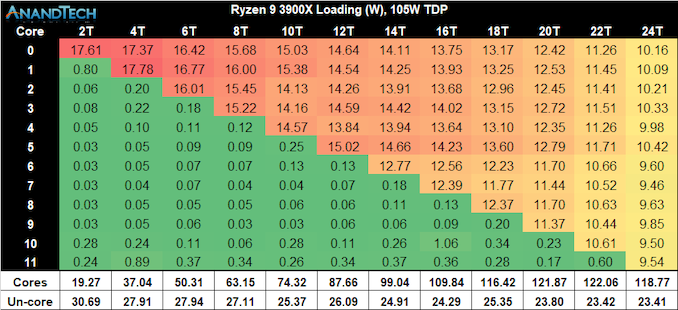

SMT is a DUD. It gives 25% extra performance (so your 4 cores have the throughput of five cores -- for some types of code) at the cost of enormous validation and security headaches.

There are ways to add SMT-like functionality that are much smarter than SMT (in particular forget the "symmetric" part, have fibers that are user-level controlled, not OS controlled, so they never cross protection boundaries) and I certainly hope that if ARM or Apple ever go down the "SMT-like" path, they do that, not Intel-style SMT.

But for now, the ARM/Apple "answer" to SMT is small cores. If you need extra throughput, use the small cores. An A12X (4 large, 4 small cores) has the throughput of ~5 large cores --- the same, effectively, as SMT's 25% boost. Different ways to solve the same problem of optionality --- how do I ship a system that maximizes for all of latency, throughput, AND power? SMT gives you some flexibility for the first two, big.LITTLE gives you that same flexibility but for all three.
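If you want the throughput comparison spelled out, here is a back-of-the-envelope sketch. The 25% SMT boost and the "~5 large cores" figure are the rough numbers from this post, the 4 large + 4 small layout is the A12X, and the function names are made up for illustration. The only derived quantity is what each small core must be worth for the two approaches to come out equal.

```python
LARGE_CORES = 4
SMALL_CORES = 4      # A12X layout: 4 large + 4 small cores
SMT_BOOST = 0.25     # rough figure quoted above; only holds for some code


def smt_throughput(cores: int, boost: float) -> float:
    """Throughput of an SMT machine, in units of one large core."""
    return cores * (1 + boost)


def big_little_throughput(large: int, small: int, small_per_large: float) -> float:
    """Throughput of a big.LITTLE machine, in units of one large core."""
    return large + small * small_per_large


def implied_small_core_value(large: int, small: int, target: float) -> float:
    """How much of a large core each small core must deliver to hit target."""
    return (target - large) / small


if __name__ == "__main__":
    smt = smt_throughput(LARGE_CORES, SMT_BOOST)
    per_small = implied_small_core_value(LARGE_CORES, SMALL_CORES, smt)
    print("SMT throughput (large-core units):", smt)
    print("implied value of one small core:", per_small)
    print("big.LITTLE throughput:", big_little_throughput(LARGE_CORES, SMALL_CORES, per_small))
```

In other words, the claim only needs each small core to deliver about a quarter of a large core's throughput, and the small cores do that at far lower power, which is the third axis SMT can't help with.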

(c) Control. Look at what Apple has done with control of their own SoC. Obviously continual performance improvements.

Much better security. (Including IO security.)

Continually adding features to GPU, plus adding NPU, plus now adding what's apparently for on-machine learning (AMX).

Other functionality we don't even really know yet (U1).

Against that, with Intel all they get is more of the same, year after year. Intel turnaround times are SO slow. Upgrades appear to have actually stopped. Security remains an on-going issue.

Sure Apple can try to deal with some of this through heroic interventions like adding the T1 then T2 chips to retrofit some security. But god, what a pain!

Look at things like how long Apple wanted to use LPDDR4 in Macs --- but couldn't because Intel. I'm sure at least part of the delay for the new Mac Pros was that Apple kept hoping (or being promised by Intel) that soon Intel would be shipping something with PCIe4. But no, still waiting on that.