Very interesting, thank you all.

I wonder when we'll actually start seeing more cards/processors designed for specific tasks in the way described?

So this is admittedly a niche market for an Afterburner-type FPGA/ASIC... (1) Do you think we might see someone else (Blackmagic Design, other) have the capability to design and manufacture such a card for sale? (2) Any chance one may be able to buy the Afterburner card and get it supported on a Windows PC? (I mean, it is PCIe after all, and Adobe Premiere / DaVinci Resolve are being coded to support it on Mac.)

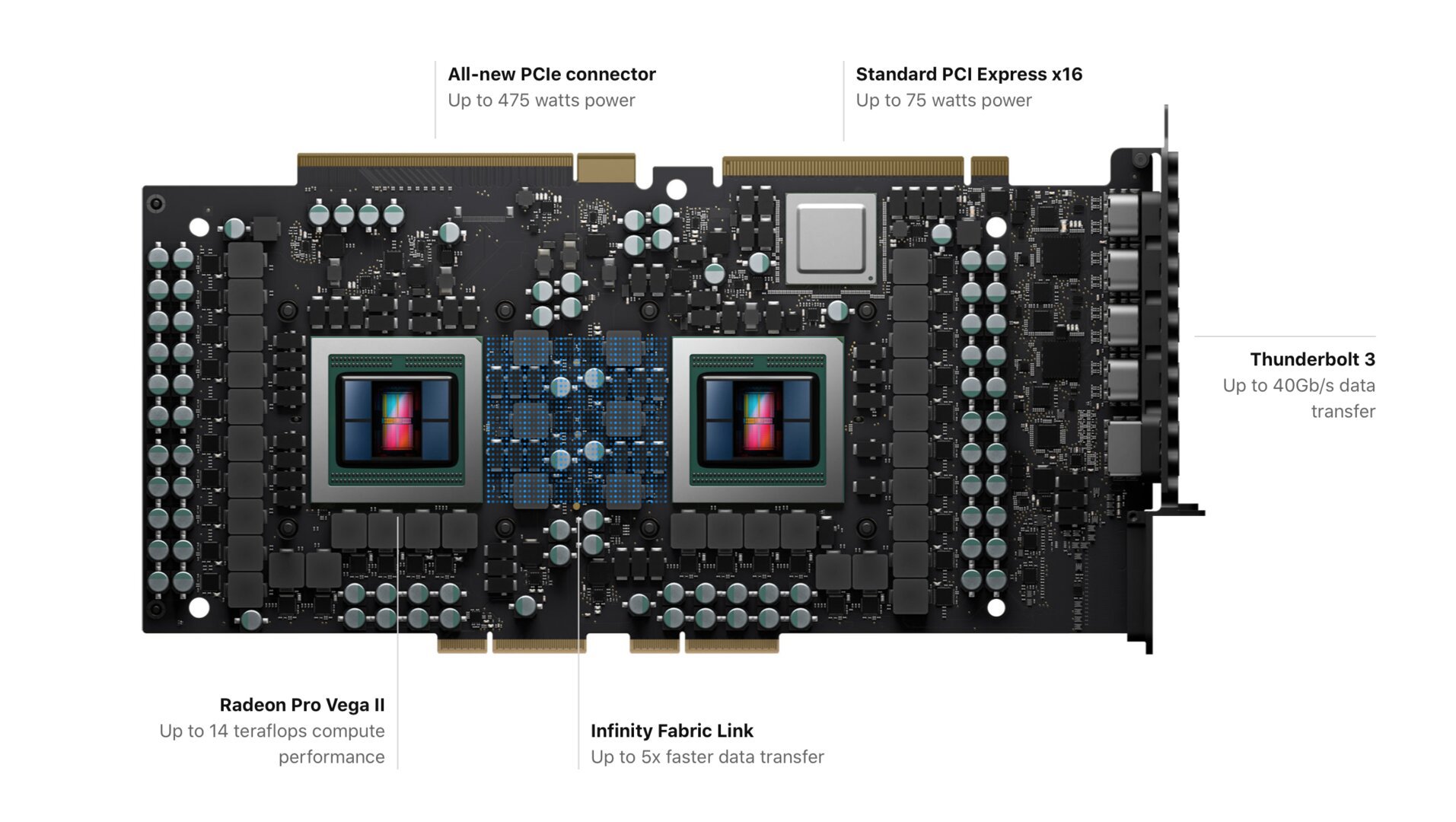

I didn't realize Infinity Fabric was just an AMD thing. So basically, the MPX card Apple is touting is completely feasible on a PC since we can just jack more power to the card right through the PSU? Apple's custom port addition is for power only, not more data throughput?

We already do, just not in the consumer space. I'm not sure we'll see any big ones there; I think for consumers they'll do that at the chip level. They already do in mobile processors, and they're moving to do something similar elsewhere, except instead of integrating everything into the same die, they're using multiple different chips and looking to fit them all on the same package. AMD already has multiple CPU dice on a single CPU package, and even that's not really new, as both AMD and Intel did something kinda similar in the early days of going multi-core. But now they're looking at putting a GPU die alongside the CPU die, and AMD has split the input/output into its own separate die.

But in data centers they already have dedicated AI processors (see Google's Tensor Processing Unit, or TPU). And there's a bunch of other stuff that's been made for those markets (I wouldn't be surprised if big broadcast companies and movie studios have some specialized video hardware).

In a lot of ways this stuff isn't new at all and is a callback to earlier computing, where there would be lots of specialized chips: you'd have a main processor, but then you'd have math co-processors, dedicated input/output chips, video chips, audio chips, and a bunch of others. The computing industry kinda fluctuates between specialized and general purpose depending on production capabilities. Transistor production was advancing so quickly that it was possible to just keep improving general purpose chips like CPUs (and later GPUs), to the point where dedicated chips had a hard time keeping pace. Software was where most of the real development was happening, and constantly faster CPUs ran that software faster. Dedicated chips need software as well, so companies kinda cut out developing specialized processing chips, focused on the software side, and let the improvements in CPUs keep pushing performance. So instead of a bunch of companies doing their own processing chips, they were all buying from, say, Intel, who then had the money to pour back into advancing their CPUs further.

It's pretty fun to look at old 70s and 80s computers. So many chips. A quick aside: one of the reasons I loved the show Halt and Catch Fire is that the first season delves into that nitty-gritty without becoming too technical and getting boring. They kinda boil things down to "oh, what if we put chips on both sides of the mainboard instead of just one?", which was a real development that happened in order to make more compact computers.

Er, sorry for the long-winded responses!

Yeah, it's a niche market (although I do think even the general consumer market could use some advancement there). AMD actually talked about stuff like this about a decade ago. They talked about making a "holodeck", where one of the steps in getting there is just having lots and lots of displays; they mentioned a ridiculous number, like 16 or maybe even 32. That was behind their push for Eyefinity, which was them pushing multiple displays, especially for gaming, although that kinda fell off with consumers and most only ever did 3x1. There are day traders who run lots of displays, but they just use multiple video cards to do that. Stuff like that did already exist (ever see in movies where they have like a wall of TVs showing a single video feed?), but a single card/chip solution is something fairly new.

Apple's chip isn't really outputting to displays, though, but rather handling video feeds. There are similarities but it's not fully the same thing: they were showing someone with a video editing timeline with like 8 video feeds that they could then sync, splice, merge and do general video editing things with, but it was all on one display. It assuredly could do multiple displays, but I think that'd be handled by the video card (whose video output they seem to have beefed up, with it having several Thunderbolt ports). I'm not sure if the Afterburner chip can do any actual processing beyond that, like decode/encode. I imagine it'd at least need to have some decoding capability, but they were talking about putting raw un-encoded video streams through it, which is actually more difficult as they're much larger in size (hence why normal systems bog down so much handling multiple feeds of that). So it might not, or the video would need to be decoded by the CPU or GPU before the Afterburner chip took over handling the feed.
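To put rough numbers on why raw feeds are so heavy, here's a back-of-the-envelope sketch. The resolution, frame rate and bit depth are my own assumptions for illustration (a 4K feed at 30 fps, 10 bits per channel, no chroma subsampling), not anything Apple specified, and the function name is made up.

```python
def raw_video_rate_bytes_per_sec(width, height, fps, bits_per_channel, channels=3):
    """Data rate of one fully uncompressed video stream, in bytes per second."""
    bits_per_frame = width * height * channels * bits_per_channel
    return bits_per_frame * fps / 8  # 8 bits per byte


# Assumed example feed: 3840x2160, 30 fps, 10 bits per channel, 3 channels.
one_stream = raw_video_rate_bytes_per_sec(3840, 2160, 30, 10)
eight_streams = 8 * one_stream  # the 8-feed timeline mentioned above

print(f"one stream:    {one_stream / 1e9:.2f} GB/s")
print(f"eight streams: {eight_streams / 1e9:.2f} GB/s")
```

Under those assumptions a single feed is a bit under 1 GB/s and eight of them are around 7.5 GB/s, which gives a feel for why a normal system bogs down just moving that much data around.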

It's definitely possible that someone could make such a card for Windows. It might actually already exist in the niche markets that would need it, as it's not unheard of (companies aren't going to advertise to general consumers, so unless you worked in those fields you likely wouldn't even know about such products). While it's not the same thing, look up Video Toaster for such a product that was built for professional video editing (it was developed by Dana Carvey's brother; well, he was one of several who developed it).

I doubt you'd be able to get this Afterburner card to work on Windows. It might possibly be hacked to work (after all, people make "Hackintoshes" where they get macOS running on non-Apple systems), but even if you got it working it almost certainly wouldn't work anywhere near as well as it does in Apple's system, so it'd be pointless anyway.

Yeah, Infinity Fabric is just an AMD protocol (they said they'd license it to anyone, but Intel and Nvidia probably aren't going to, especially since there are other similar industry protocols for systems that mix different brands of hardware). Mostly it will let AMD's CPUs and GPUs communicate better.

It's feasible. We already had cards like that (for like 7 or so years there, we got high end dual-GPU graphics cards, often targeted at PC gamers, when Crossfire and SLI had more popularity than they do now). Cooling, power (supposedly the dual GPU stuff would be limited to 300W, but people would overclock them and they'd push up to like 500W pretty easily), and cost (they were often like $1000+, although single GPU cards are in that price range now) were big issues though. And then multi-GPU support (for Crossfire and SLI) in games has gotten worse, which pushed consumers further away from them. A lot of the data center tasks that GPUs are used for still scale very well though (as in almost totally in line with how many GPUs you have, so with 2 you get 2x the performance and with 4 you get nearly 4x), but they're starting to bump into issues with moving all that data, hence protocols like Infinity Fabric and others to improve that.
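For a rough feel of that "2x with 2, nearly 4x with 4" scaling, here's a minimal sketch using Amdahl's law. This is just a textbook model I'm swapping in, not how any particular data center measures it. The 1% non-parallel share, standing in for time spent moving data between GPUs, is purely an assumed number.

```python
def amdahl_speedup(n_gpus, parallel_fraction):
    """Amdahl's law: speedup when only part of the work scales with GPU count."""
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / n_gpus)


# Assumption: 99% of the work scales across GPUs; the other 1% stands in
# for overhead (such as moving data between GPUs) that does not.
for n in (1, 2, 4, 8):
    print(f"{n} GPUs -> {amdahl_speedup(n, 0.99):.2f}x")
```

With those assumed numbers, 2 GPUs get you just under 2x and 4 GPUs a bit under 4x, and the gap grows as you add more, which is the part that faster interconnects are trying to shrink.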

I don't know if Apple's special PCIe connector does more than power (I would guess that even if it doesn't with this dual GPU card, it has the flexibility to do data as well). Without knowing the spec itself (which Apple might not release to the general public), I can't say. They didn't say it was just for power, but I have no idea what data capability it might have.

I think Linus mentioned that there are some PCIe power connectors in the new Mac Pro - but that's a butt-ugly solution for a high end Apple product.

Haha, that figures. I totally understand if they wanted to "Apple" some nicer power cable setup, but this just seems weird to me. I guess it's not that weird, but it seems like there were better solutions. Frankly I don't even entirely get the dual GPUs on a card that close together. I get that they didn't want the noise of blowers and whatnot from using Vega 20 cards, but that's why I said make sockets that can support CPU and GPU. Have that be on a board along the bottom of the case, and make it so you could have like 1-4 of them.

I feel like Apple could've innovated some much more interesting stuff, and I don't know that it would've taken any more engineering to accomplish it. They really could've made a splash if they'd developed some fiber optic interconnect that enabled low latency and speeds of like 100GB/s now (with the ability to expand it in the future). That's where things are heading, and Apple could've been ahead of the curve. Plus it would enable external stuff that makes Thunderbolt look silly (and Thunderbolt was originally supposed to be fiber optic).