The problem as I see it is that modern CPUs have a huge dynamic range of clock frequencies, with very different efficiency figures along that curve. And all the marketing is about obfuscating data and confusing the user. The worst part: users seem OK with it, and even tech journalists don't mind.

Marketing folks and fanboys can read a lot into numbers from CPUs that have such a wide dynamic range, but CPUs aren't built that way for nothing.

Back in the old days (not the real old days, I'm talking 90s here) CPUs would always run at the same speed and burn pretty much the same power. When the system wasn't doing anything, it ran an idle loop that checked millions of times a second whether there was anything to do.

The first power-based optimization was adding a "halt" instruction that could basically stop the CPU until the next interrupt. Once operating systems implemented it, systems that were mostly idle used less power. It was certainly a win for those early laptops!
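To make the difference concrete, here is a rough user-space analogy in Python, not actual CPU or kernel code. The names `busy_wait`, `halt_wait` and `demo` are made up for illustration, and a `threading.Event` set by a timer stands in for the interrupt. The polling version burns cycles the whole time it waits; the blocking version does nothing until it is woken.

```python
import threading

def busy_wait(flag):
    """90s-style idle loop: keep polling until there is something to do."""
    polls = 0
    while not flag.is_set():
        polls += 1          # every pass is wasted work (and wasted power)
    return polls

def halt_wait(flag):
    """'halt'-style idle: sleep until the stand-in interrupt fires."""
    flag.wait()             # the thread is descheduled while it waits
    return 1                # it wakes up exactly once

def demo(wait_fn, delay_s=0.05):
    """Run wait_fn against a fake interrupt that arrives after delay_s seconds."""
    flag = threading.Event()
    timer = threading.Timer(delay_s, flag.set)  # hypothetical "interrupt" source
    timer.start()
    result = wait_fn(flag)
    timer.join()
    return result

if __name__ == "__main__":
    print("polls while idle (busy loop):", demo(busy_wait))
    print("wake-ups while idle (halt):  ", demo(halt_wait))
```

The exact poll count depends on the machine, but it will be far larger than the single wake-up of the blocking version, which is the whole point of halting.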

Over time we've added ways for CPUs to run slower when there's less work, and run faster for a short time when there's more work. How much effort to put into saving power depends a lot on your power budget - Apple has had a lot more reason to worry about saving power as its CPUs were for phones first, while Intel's were desktop/server first. After the P4, though, when Intel dropped that desktop/server-focused design for a core originally designed exclusively for their laptop line, they've tried to balance both sides.

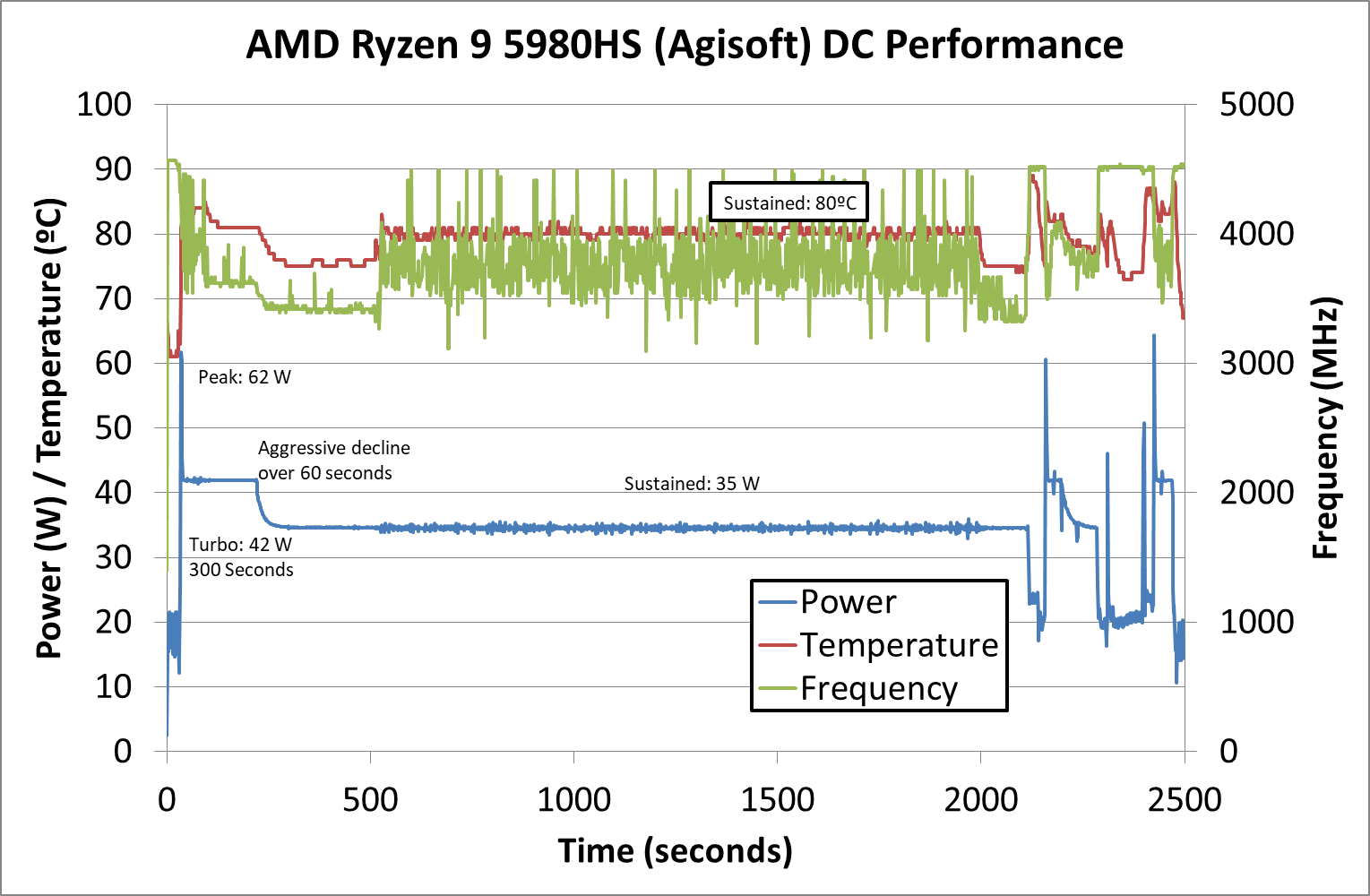

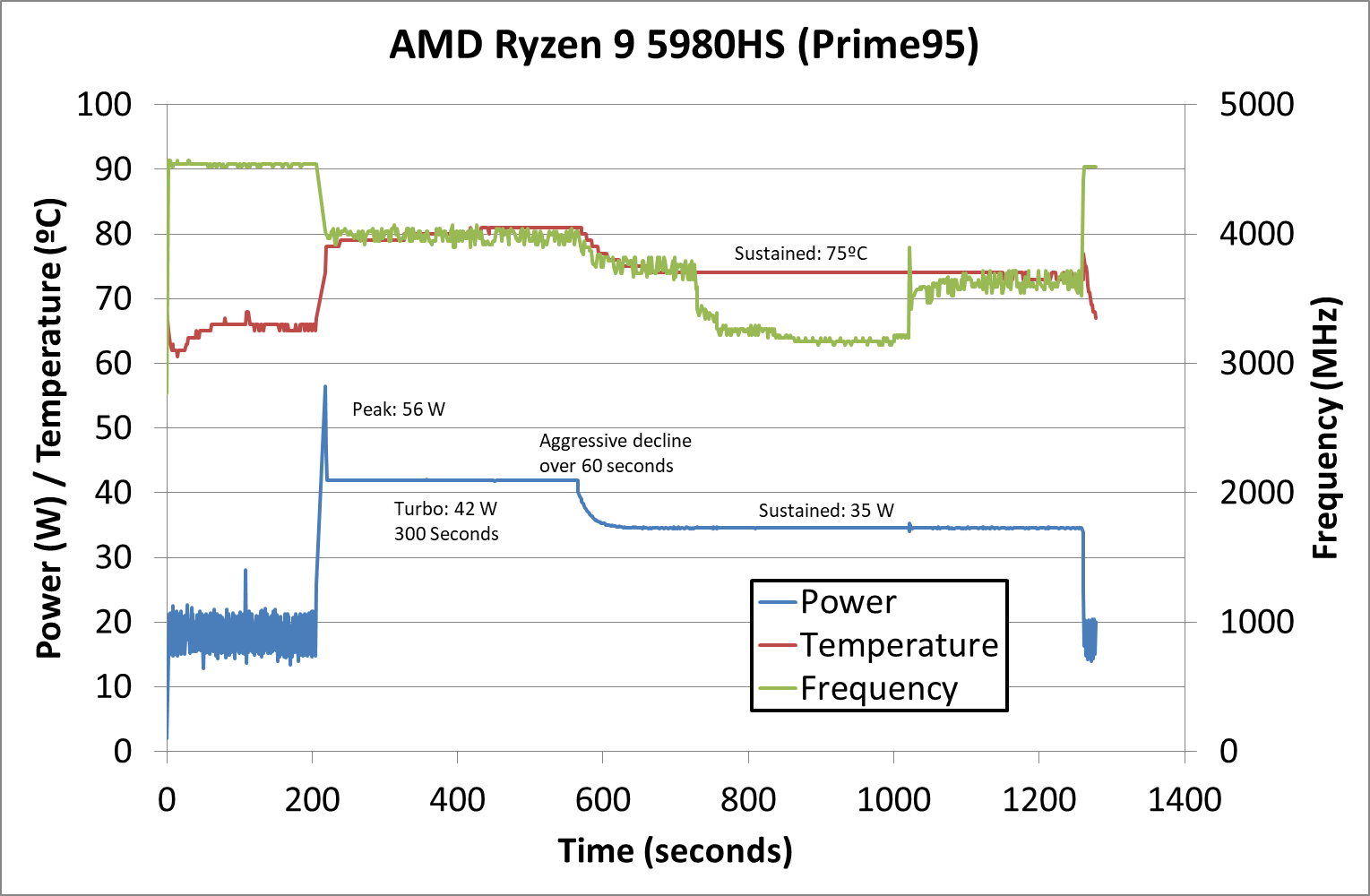

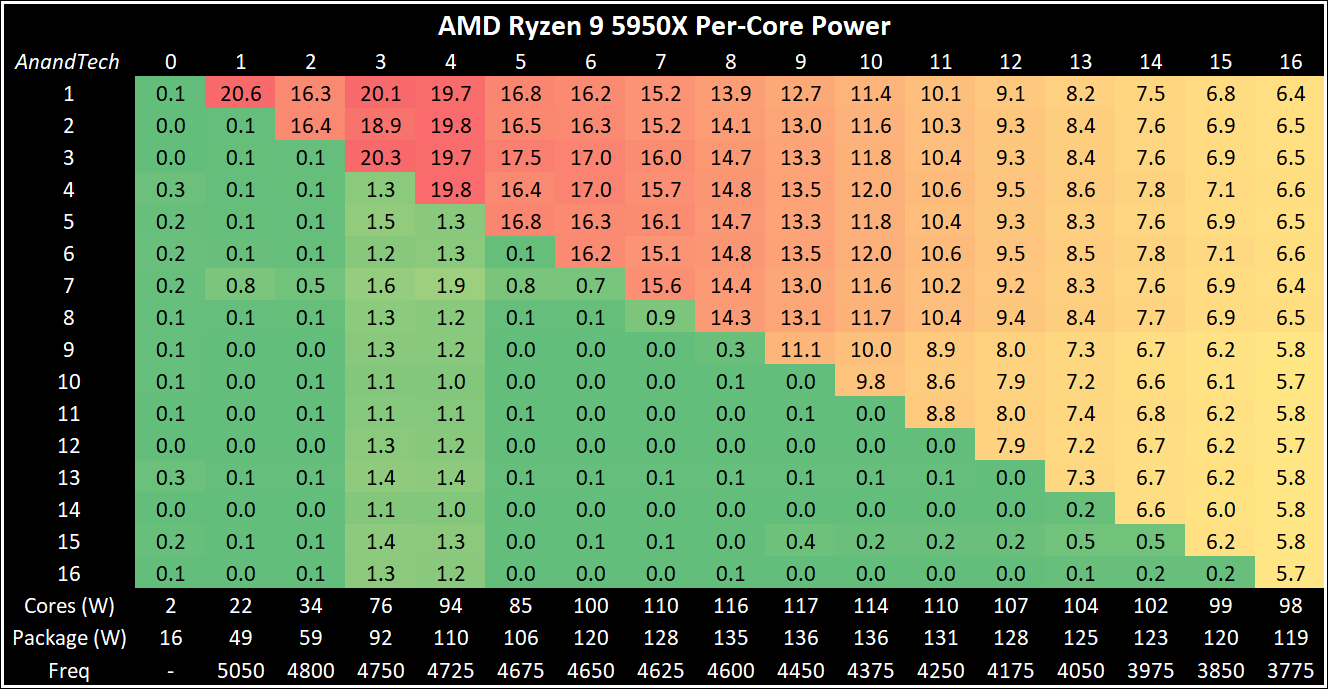

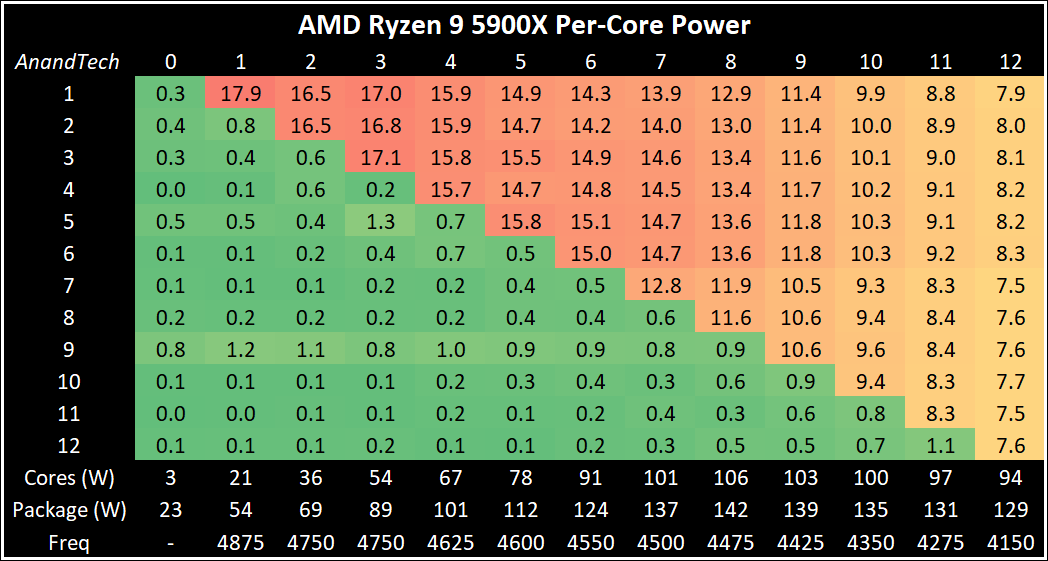

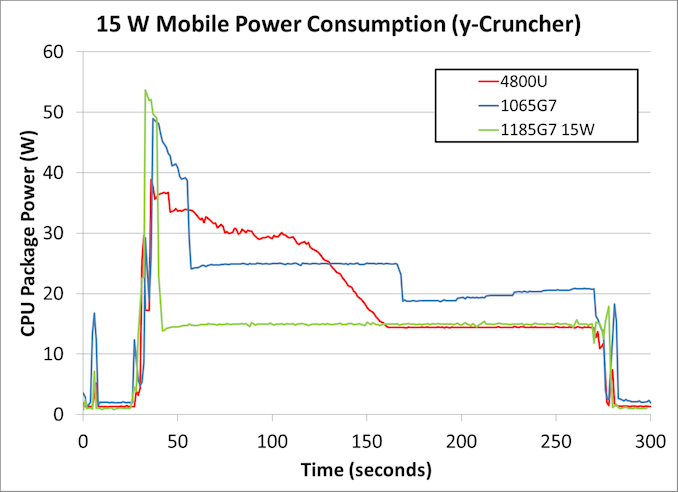

If you balance both sides like Intel attempts to do, your CPU will operate across the entire power curve, not just the lower end where Apple is forced to live but into the upper reaches where only overclockers used to dare tread. That makes it really hard to say "this core draws x watts", because when it is clocked down with little to do it can draw very little power. Maybe not as little as Apple's big cores in a similar "little to do" scenario, but not terribly far away. It can also draw dozens of watts on a single core for short periods. That may be sustainable for a while if you only need the one core, but you can't run all of them that way for long with any sort of reasonable cooling solution.

If you calculate performance by "who finishes first" you give the advantage to designs that allow cores to operate at the extreme end of the power curve. If you calculate efficiency by "who completes task X with the least amount of energy" you give the advantage to designs that force cores to operate at the nice flat part of the power curve (or better yet have small cores designed to perform less well in exchange for less power).
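A toy calculation shows how the two scoring methods pull in opposite directions. This is only a sketch under loud assumptions: the function `run_task`, the constants, and the power curve itself (a fixed static draw plus a term that grows with the cube of frequency, roughly what you get if voltage has to rise along with clock speed) are all invented for illustration and do not describe any real core.

```python
def run_task(freq_ghz, work_gcycles=10.0, static_w=0.5, k=0.2):
    """Return (seconds, joules) for a fixed task on a made-up core.

    Assumed power curve: power_w = static_w + k * freq_ghz**3
    """
    power_w = static_w + k * freq_ghz ** 3   # watts drawn at this clock
    seconds = work_gcycles / freq_ghz        # same work, so higher clock finishes sooner
    joules = power_w * seconds               # energy = power x time
    return seconds, joules

if __name__ == "__main__":
    for f in (1.0, 2.0, 3.0, 5.0):
        t, e = run_task(f)
        print(f"{f:.1f} GHz: {t:5.2f} s, {e:6.2f} J")
```

With these made-up numbers, each step up in clock finishes the task sooner and spends more energy doing it, so the "who finishes first" ranking and the "who used the least" ranking come out exactly reversed for the same core.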

Unless you have a core that both finishes first AND uses less energy to complete the task than its competition, you can't measure both at once. Even then, arguably, that core could either finish even MORE quickly by being allowed to operate higher on the power curve or be even MORE efficient by being forced to operate lower on the power curve.

Since such a "double win" is pretty rare, and even when it happens is not likely to be an advantage maintained for long, you're left trying to measure one thing while controlling multiple variables. It's like the Uncertainty Principle: you can't measure both performance and efficiency at the same time. The more you try to hold one constant, the more difficult an honest, proper measurement of the other becomes.