Nothingness

Diamond Member

- Jul 3, 2013

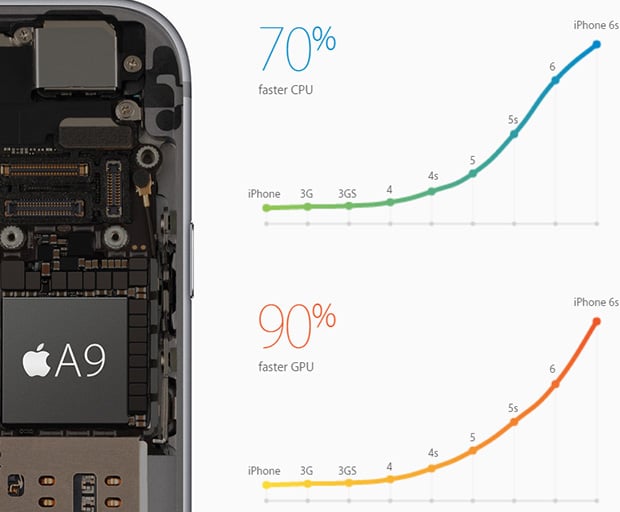

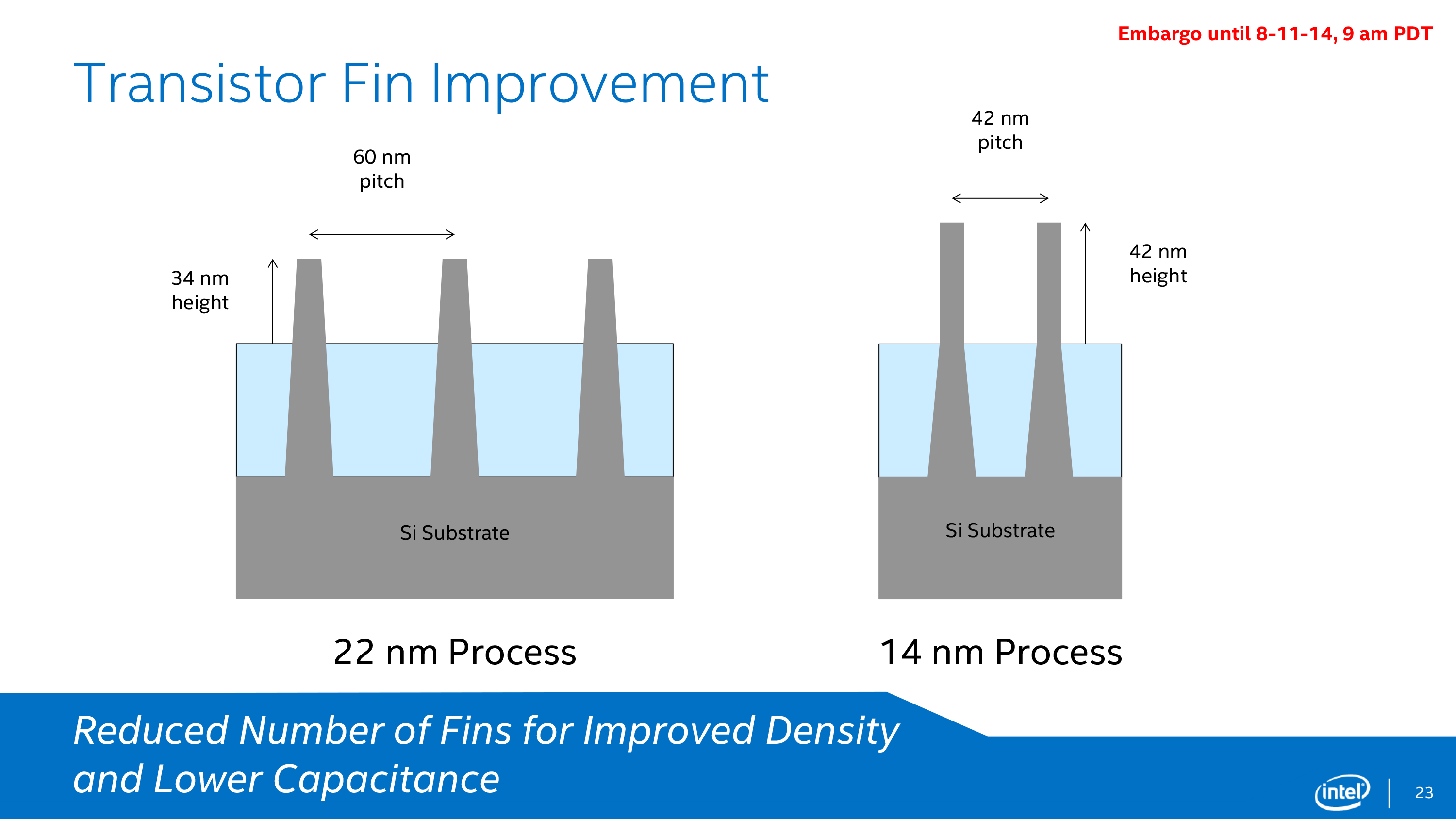

Yeah, they must be doomed. They should quickly get in touch with Intel; they'll provide them with a nice CPU for smartphones... Oh wait! LOL. Is this a sign of power-hungry cores (like the A15), even with FinFET?

Nice job, Apple!

It's interesting that Apple finally went to a big.LITTLE-like configuration. OTOH, it took them much longer than others before they had to use it.