Ampere Altra Launched with 80 Arm Cores for the Cloud

The Ampere Altra 80-core Arm server CPU is upon us with 128 PCIe Gen4 lanes per CPU, CCIX, and dual-socket server capabilities. We assess the impact.

Saying NEON doesn't even reach SSE is insane, please name one thing you cannot do with NEON?

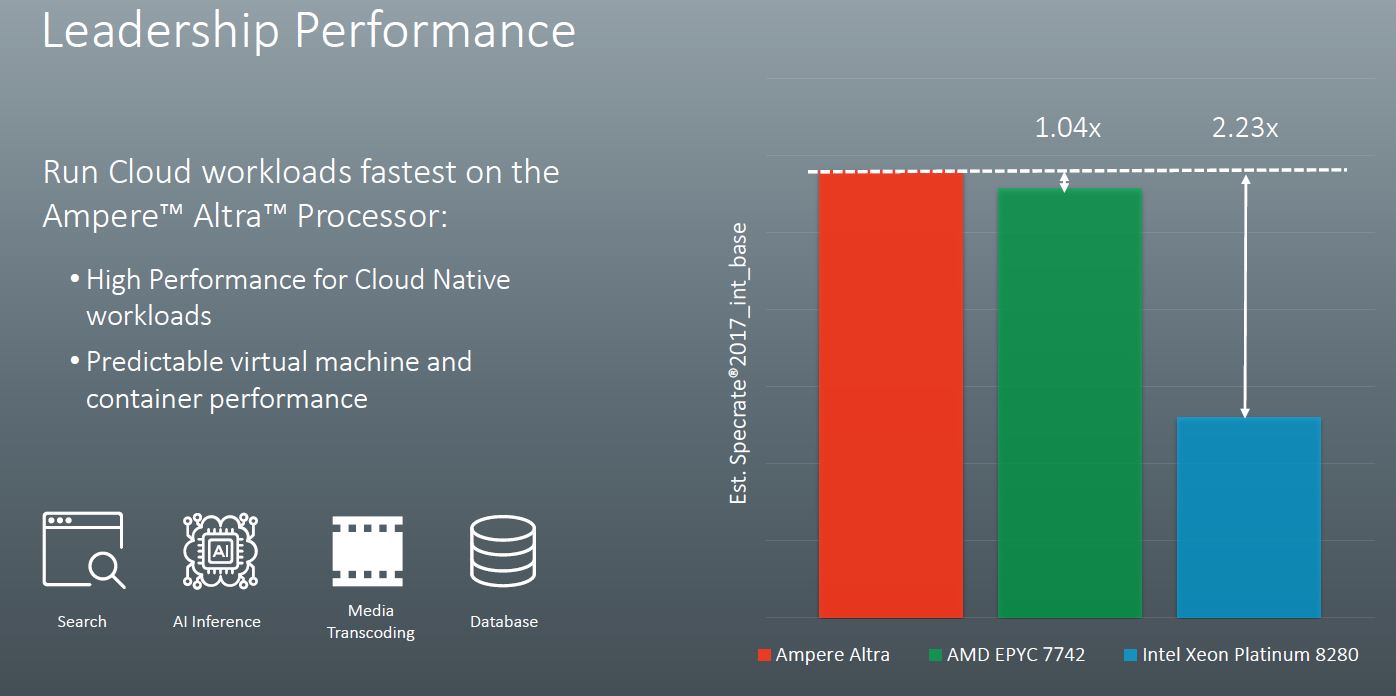

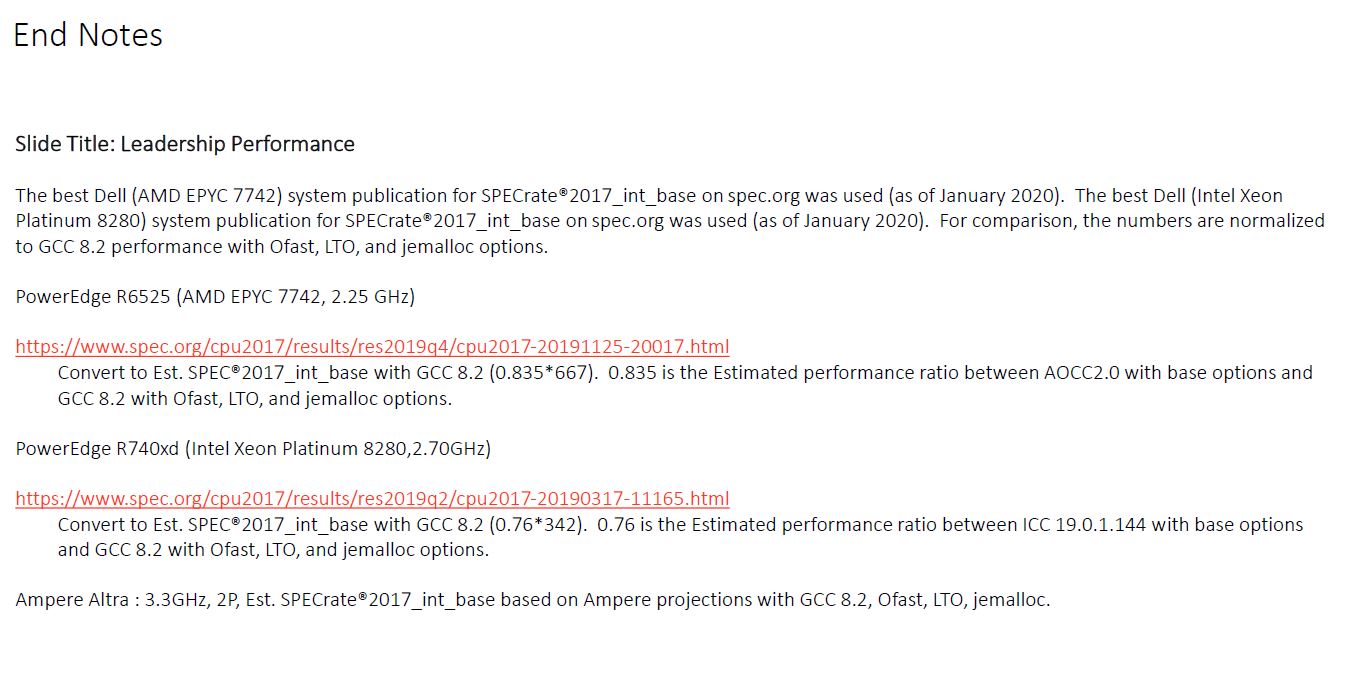

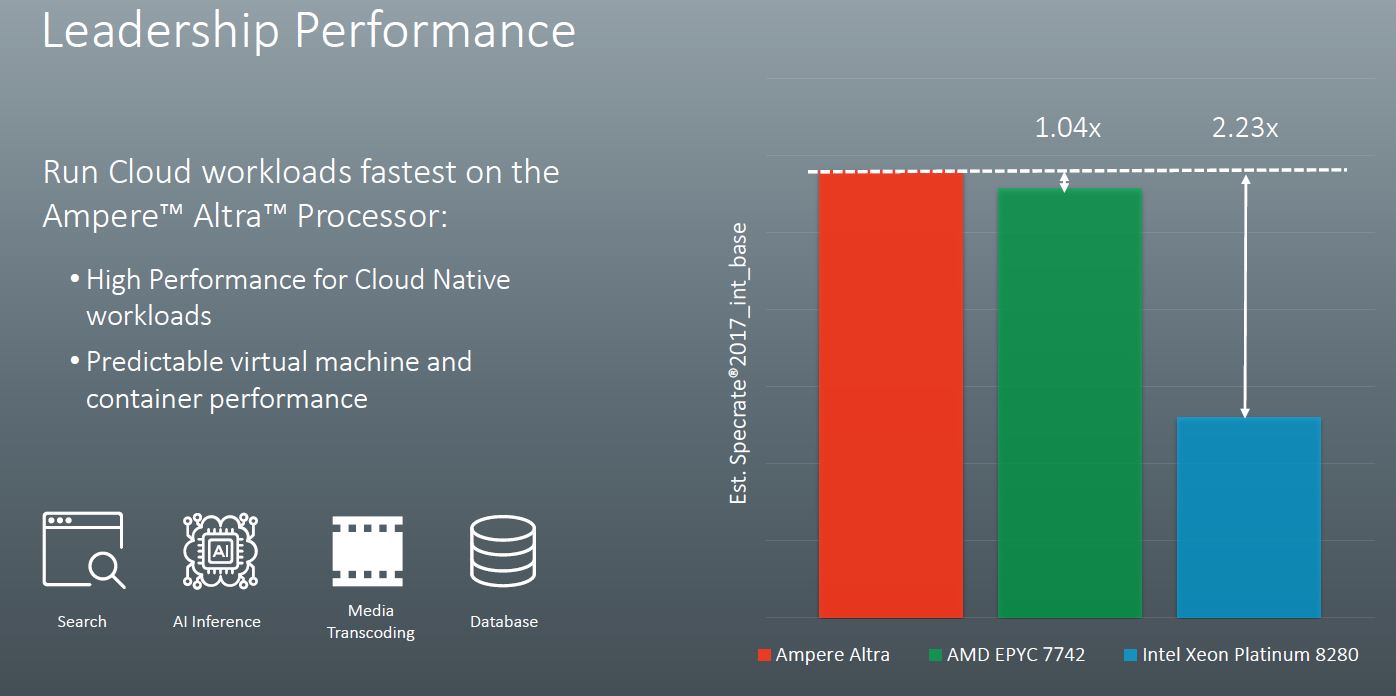

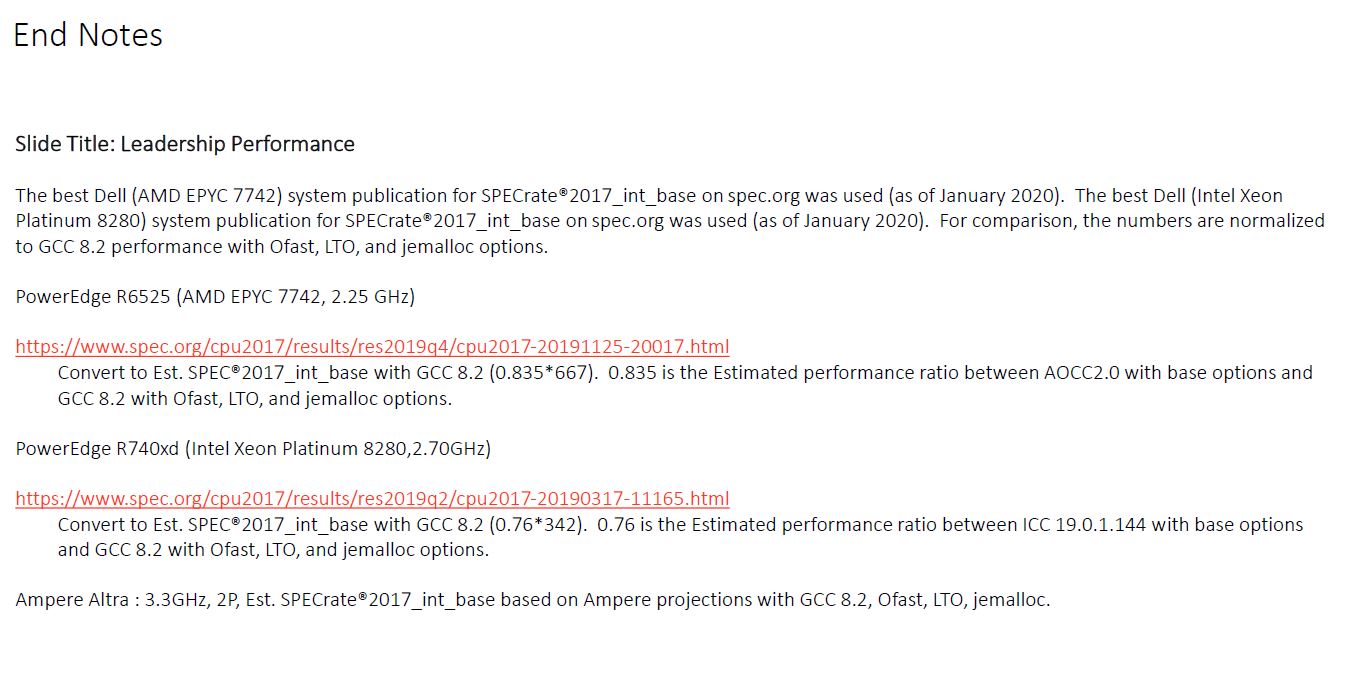

According to the STH article the 80 cores ARM chip is a 210W TDP part as well. And the End Notes above are a huge bummer with those Epyc and Xeon results being retroactively reduced to make the results of different compilers "more comparable". They should have excluded Epyc from the comparison, the latest Epyc really is way too close to those ARM efforts to make one wonder why bother.

I'd like you to list where you think NEON is lagging behind SSE. I know people who wrote assembly routines for FFmpeg and they said NEON is better than SSE. If you don't mind I'll take their words rather than yours, unless you provide evidence.Estimates, rates, synthetics, overwhelmingly stream oriented workloads, may favor a certain niche. Other workloads not so much. x86 is on it's forth major iteration of simd. neon while moving fast, has still not even reached the level of sse.

I'd like you to list where you think NEON is lagging behind SSE. I know people who wrote assembly routines for FFmpeg and they said NEON is better than SSE. If you don't mind I'll take their words rather than yours, unless you provide evidence.

Could be very good at video as anyone who's worked with neon and their cousins understands from their color channel muxing.

Well given that you admit that for video it's good which is the info I had, I have no other source to counter your previous claim, so no provable reason not to believe youI actually said the exact same thing Tuesday at [H] (can't go back in time)

That actually is an area where neon is a bit better or at least more specifically optimized for rgb. Their vector load interleaves and d-interleaves rgb seamlessly, where sse would require shuffles and blends. They have the equivalent vzip vuzp to pack unpack to alternate data quickly.

Please take other peoples word for the best available information.

ARM isn't following the silly Intel path to create a new ISA for each widening of vectors. They have SVE to go beyond NEON which is vector length agnostic. Obviously I guess to get the best you'll have to stick to some vector length but that's still the same instructions for 128-bit up to whatever chips will implement (up to 2048-bit but I don't think anyone will go that far). And no I'm not qualified enough to comment on whether it's better than AVX-512 or notI often wonder how arm's 256bit simd will differ from AVX. Laneing has it's advantages as well as it's annoyances. There were a lot of growing pains in the early days of intel simd. ARM will have to go through the same process to reach parity.

I actually said the exact same thing Tuesday at [H] (can't go back in time)

That actually is an area where neon is a bit better or at least more specifically optimized for rgb. Their vector load interleaves and d-interleaves rgb seamlessly, where sse would require shuffles and blends. They have the equivalent vzip vuzp to pack unpack to alternate data quickly.

Please take other peoples word for the best available information.

I often wonder how arm's 256bit simd will differ from AVX. Laneing has it's advantages as well as it's annoyances. There were a lot of growing pains in the early days of intel simd. ARM will have to go through the same process to reach parity.

Different architectures have different trade offs, which is what most of my arguments against the IPC is greater expand on. However, IMO there is at most a small set of operations a simple efficient core can out perform a monolithic x86.

When full reviews are made, I hope I am pleasantly surprised.

The 128 core version will probably be clocked a bit lower, but should still be a good option for workloads that can use all the threads.Wow, that's quite a chip! Wonder how it would be doing if it wasn't constrained by a fun-size LLC.

Looks like the perf/W sweet spot is probably a bit further down the SKU list - the Q64-24, in particular, looks like a heck of a lot of compute in a pretty minimal power profile.

The 128 core version will probably be clocked a bit lower, but should still be a good option for workloads that can use all the threads.

Milan should be competitive with it (at a bit worse power-draw but often a bit better performance), but Genoa vs 5nm ARM cores is going get tight for AMD.

ARM promises +50% IPC for Neoverse V1. To compete, genoa really has to do some magic on the Uncore/packaging side as well as have another around 20% IPC uplift to compete.

AMD on the same process had to resort to a ton of chiplets for 64 cores

The latter.Had to? or was it just smart in terms of available 7nm capacity and re-usability?

What's impressive is that despite what was labeled as PR fluff in March, this chip actually met expectations - SPEC 2017 rate-n int scores on GCC are 1.05-1.06x 7742, and 2.35x 8280, which is pretty close to what they claimed.The 128 core version will probably be clocked a bit lower, but should still be a good option for workloads that can use all the threads.

Milan should be competitive with it (at a bit worse power-draw but often a bit better performance), but Genoa vs 5nm ARM cores is going get tight for AMD.

ARM promises +50% IPC for Neoverse V1. To compete, genoa really has to do some magic on the Uncore/packaging side as well as have another around 20% IPC uplift to compete.

According to the STH article the 80 cores ARM chip is a 210W TDP part as well. And the End Notes above are a huge bummer with those Epyc and Xeon results being retroactively reduced to make the results of different compilers "more comparable". They should have excluded Epyc from the comparison, the latest Epyc really is way too close to those ARM efforts to make one wonder why bother.

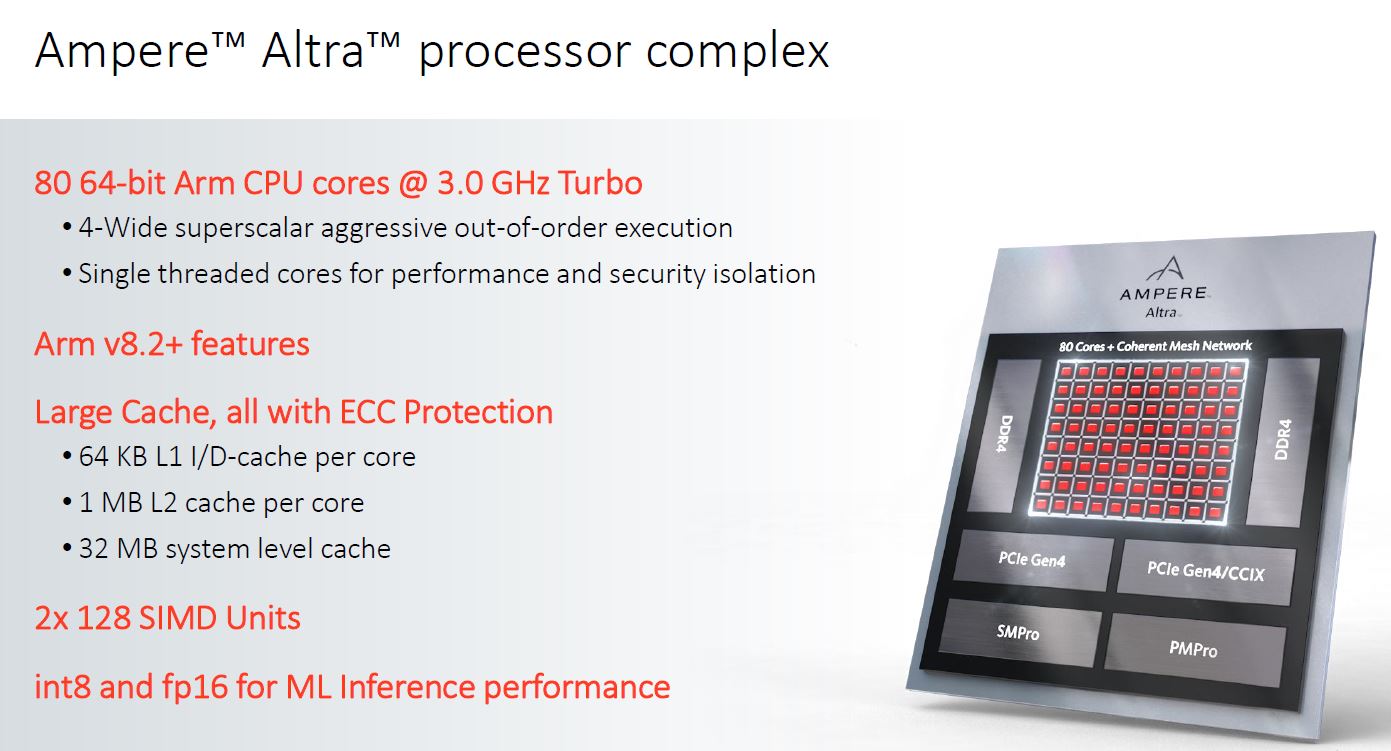

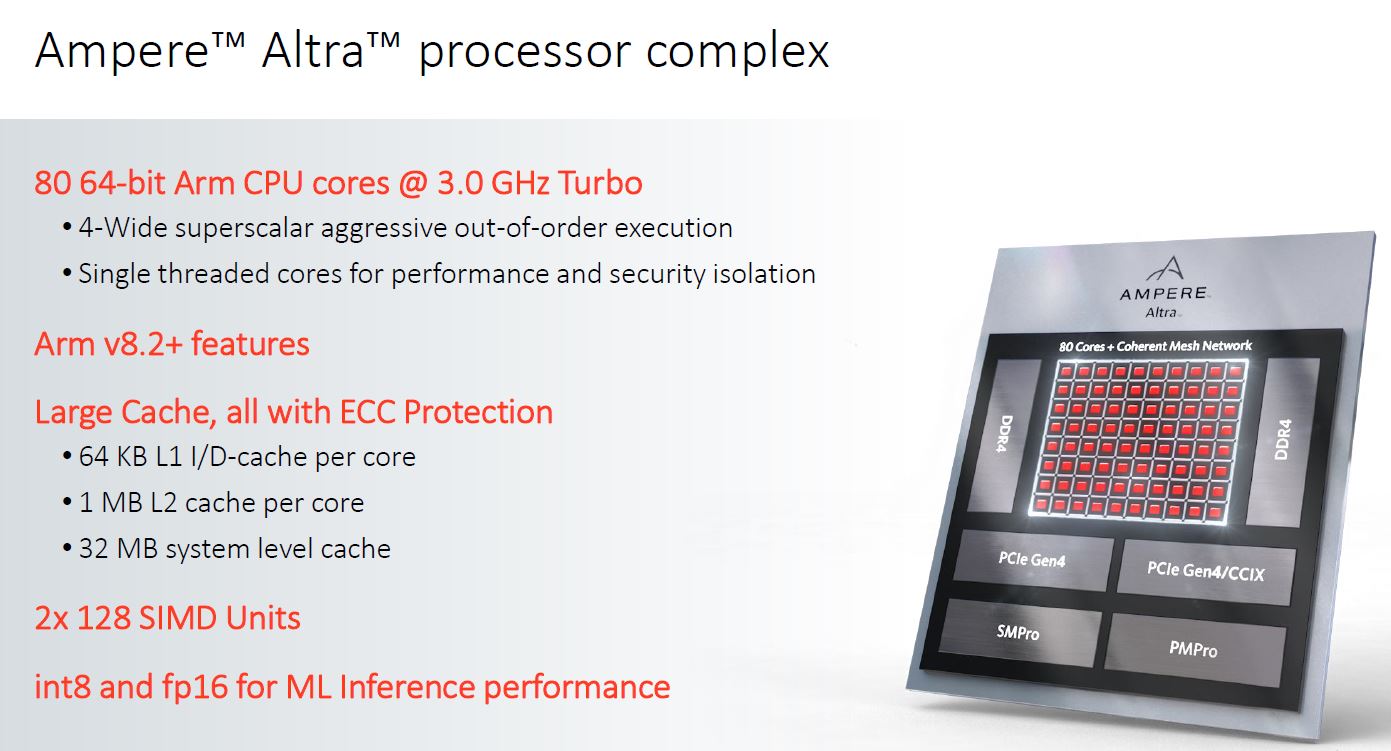

Single lane 128bit simd. Certainly not as beefy as it could of been but fp16 support is good.

Probably the best balance for where arm is in this stage of development.

lemire.me

lemire.me

I actually said the exact same thing Tuesday at [H] (can't go back in time)

That actually is an area where neon is a bit better or at least more specifically optimized for rgb. Their vector load interleaves and d-interleaves rgb seamlessly, where sse would require shuffles and blends. They have the equivalent vzip vuzp to pack unpack to alternate data quickly.

Please take other peoples word for the best available information.

I often wonder how arm's 256bit simd will differ from AVX. Laneing has it's advantages as well as it's annoyances. There were a lot of growing pains in the early days of intel simd. ARM will have to go through the same process to reach parity.

Different architectures have different trade offs, which is what most of my arguments against the IPC is greater expand on. However, IMO there is at most a small set of operations a simple efficient core can out perform a monolithic x86.

When full reviews are made, I hope I am pleasantly surprised.

The reason for "bothering" is an expectation that ARM will get faster more rapidly than AMD. Which means that the large companies that aren't Amazon (and Apple?...?), if they have any sense, will be buying a few today to start preparing for their large scale transitions over the next few years.

The actual EPYC CPU turned out to have eight 75mm^2 chiplets and a ~450mm^2 14nm IO die. A hypothetical monolithic die to fit those together might straight up exceed what can be fabbed on 7nm due to the size alone.Had to? or was it just smart in terms of available 7nm capacity and re-usability?

My 2cents is that we can't yet? compare based on list-prices from AMD/Intel. As finally getting the budget to get a nice server for data science stuff.he TCO for an entire server based on ARM is going to be higher for volume customers because storage/memory costs are what really drives Server Costs.

You ARM fan boys don't really live in the real world do you?

Also almost all non hyperscalres buy via an OEM/ODM, until people like Dell and HPE are fully on board, the TCO for an entire server based on ARM is going to be higher for volume customers because storage/memory costs are what really drives Server Costs.

Maybe in 2-3 gens time when an advantage might start to exist people might start planning but right now they don't give a crap. Also Enterprise licensing SKU's don't favour massive core counts, the big outlier here is RHEL , lets wait and see how long it takes IBM to "fix" that , just like they fixed centos.

The thing that will be interesting to me is how ARM server companies sustain themselves until that can reach viable levels of market share, the TAM for there SOC's are smaller compared to x86 and with single SOC products they will eat more cost in the lower and mid SKU's where most servers are sold at ( intel 18-24 , 32 for AMD) compared to AMD's chiplet and intels multi SOC approach. In a race to the Bottom both AMD and Intel have big advantages to "floor" pricing , Intel can eat manufacturing Margin , AMD amortises costs and yield over both consumer and sever.

if i've learnt anything over 15 years of designing/selling Datacentre infrastructure is the market in general always cares way less then you think they do, you have to have massive undeniable advantage over multiple generations to gain inertia.