AMD Ryzen (Summit Ridge) Benchmarks Thread (use new thread)

- Status

- Not open for further replies.

DrMrLordX

Lifer

- Apr 27, 2000

- 23,226

- 13,306

- 136

1. You should really be using the 6600K vs 6700K for the calculations regarding HT yield, as they're both 91w, hence why the former performs slightly better.

I would think the opposite. If you set the 6700k to a static clockspeed and then do a bench with and without HT, that's the best way to isolate the gains from HT . . . unless you're trying to bench "real world" use of HT, e.g. measure its impact within marketed TDP limitations (91w in this case).

But if you want to isolate HT all by itself and measure its full potential, it's best to eliminate TDP limitations from the bench by using a fixed clockspeed/voltage and then test with it on then off.

I would think it highly informative if a future Summit Ridge owner would do the same with their Ryzen CPU next month.
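The fixed-clock comparison described above comes down to one ratio. A minimal sketch, assuming a hypothetical helper `ht_yield` and placeholder scores (these are not measurements from any chip):

```python
def ht_yield(score_ht_off: float, score_ht_on: float) -> float:
    """Relative gain from enabling HT/SMT, both runs at the same fixed clock/voltage."""
    if score_ht_off <= 0:
        raise ValueError("score_ht_off must be positive")
    return (score_ht_on - score_ht_off) / score_ht_off


# Placeholder scores: same chip, same static clockspeed, HT off vs. on.
example = ht_yield(score_ht_off=800.0, score_ht_on=1000.0)
print(f"HT yield: {example:.1%}")
```

Running both passes at a static clock keeps TDP throttling out of the ratio; running them at stock instead would measure the "real world" case within the marketed TDP.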

coercitiv

Diamond Member

- Jan 24, 2014

- 7,491

- 17,902

- 136

So the only reasonable approach to take is to ignore the Skylake results, and focus on Zen 8 core vs BW-E 8 core. Then you can take the frequency scaling data from the i5s and apply it to this comparison, which is approximately 50% according to the tests, which is handy.

One more thing to note is that the 50% is a worst case scenario, since we're using a test that partially uses more than 4 threads: since these games have a number of choke points, starting with ST perf for the main thread and MT perf for the rest, one can easily argue some of the frequency scaling of the i5 is lost due to additional resources being diverted to feed secondary threads (past 4 threads). This would not be the case for 4c/8t or, even better, 8c CPUs, since any increase in frequency will translate into maximum ST perf conversion, with whatever efficiency is possible under a linear increase ofc.

In other words, the more games you have running on 4 threads or less, the less relevant the test is in revealing Zen 8c/16t potential, and the more games you have running with more than 4 threads, the less relevant 4c/4t Skylake frequency scaling is in creating a model for Zen 8c/16t clock scaling.

We are in dire need of additional data points, be it more frequency points for Skylake 4c/8t or at least FPS data for individual games where we can approximate number of threads.
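For anyone wanting to redo the scaling math once more data points turn up, here is a rough sketch of how a figure like "50% frequency scaling" can be computed and then applied to another clock. The function names and all numbers are hypothetical placeholders, and it assumes scaling stays linear over the range, which is exactly the assumption being questioned above.

```python
def frequency_scaling(fps_low: float, fps_high: float,
                      clock_low_ghz: float, clock_high_ghz: float) -> float:
    """Fraction of a clock increase that shows up as an FPS increase."""
    clock_gain = clock_high_ghz / clock_low_ghz - 1.0
    if clock_gain <= 0:
        raise ValueError("clock_high_ghz must exceed clock_low_ghz")
    fps_gain = fps_high / fps_low - 1.0
    return fps_gain / clock_gain


def project_fps(fps: float, clock_ghz: float, new_clock_ghz: float,
                scaling: float) -> float:
    """Project FPS at a new clock, assuming linear scaling (a big assumption)."""
    return fps * (1.0 + scaling * (new_clock_ghz / clock_ghz - 1.0))


# Placeholder data: +20% clock giving +10% FPS is 50% scaling.
scaling = frequency_scaling(100.0, 110.0, 3.0, 3.6)
print(f"scaling: {scaling:.2f}")
print(f"projected: {project_fps(100.0, 3.0, 3.6, scaling):.1f} fps")
```

With per-game FPS instead of a single aggregate score, the same function could be run per title to see which games drag the average scaling down.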

Arachnotronic

Lifer

- Mar 10, 2006

- 11,715

- 2,012

- 126

I would think the opposite. If you set the 6700k to a static clockspeed and then do a bench with and without HT, that's the best way to isolate the gains from HT . . . unless you're trying to bench "real world" use of HT, e.g. measure its impact within marketed TDP limitations (91w in this case).

But if you want to isolate HT all by itself and measure its full potential, it's best to eliminate TDP limitations from the bench by using a fixed clockspeed/voltage and then test with it on then off.

I would think it highly informative if a future Summit Ridge owner would do the same with their Ryzen CPU next month.

If Summit Ridge becomes available next month, I'd be happy to do that test. I will be buying it day 1, if availability is not an issue.

MajinCry

Platinum Member

- Jul 28, 2015

- 2,495

- 572

- 136

If Summit Ridge becomes available next month, I'd be happy to do that test. I will be buying it day 1, if availability is not an issue.

Oh my. If you will be nabbing the AMD proccy, would you fancy doing a wee draw performance test with me? The ideal game to test with is Fallout 4, due to the absurdly long shadow distances, as well as the game having that ENB mod that will show us the number of draw calls being made.

If yer game, I'll fire you the optimal .ini settings (minimum texture/shadow/display resolution, maximum draw distances) once the hardware's ready.

Main reason for the test, is to see if AMD has finally fixed their awful draw call performance. Intel had the same fps impact with draw calls, until Core 2 came around, and Intel's CPUs have only been getting better at them.

DrMrLordX

Lifer

- Apr 27, 2000

- 23,226

- 13,306

- 136

If Summit Ridge becomes available next month, I'd be happy to do that test. I will be buying it day 1, if availability is not an issue.

I'll be doing my best to get one too, barring shortages and/or insane markups.

Thanks. It has been refreshing to get a proper response, majord

To expand a bit further:

1- I've not used the Core i5-6600K because of that pesky "multicore enhancement". I have an i5-6600 myself and I know it clocks at just 3.6GHz under all-core load (clock ratios are hardcoded in non-K Skylake chips), while K-series chips could go to single-core turbo even with all cores loaded, depending on that BIOS/UEFI setting. My ASRock (Z170 Extreme4) defaults to "multicore enhancement" enabled, but it does nothing on my non-K Skylake. So, the Core i5-6600K could score better than the i5-6600 because it has more power headroom... or because it is running at 3.9GHz. That's why I skipped it.

2- Yeah, as we only know the total score in gaming, we can't discern in what way each game contributes to that global score. Then, I'm not sure you could extrapolate Skylake scaling to other architectures, but yes, as a first approximation it should work. So let's say that Ryzen gets 6+/-2% less IPC than BW-E (taking into account differences between architectures and differences in max boost clock); yep, as is, it's really close, but then, recent architecture scaling from Intel has been less than stellar: a 6% difference is almost the jump between Sandy and Ivy Bridge, or Haswell vs Skylake. Therefore my "rather behind".

That's all. I hope we get a full review soon; Intel's new cores have been mostly incremental advances, so it's really refreshing to look at a new architecture that also looks really promising (Bulldozer was truly a new architecture, but also a flop).
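As a rough illustration of what a "6% less IPC" figure means in practice, here is a sketch that normalizes two scores by clock. The helper name and the numbers are hypothetical placeholders; "IPC" here loosely means per-clock benchmark performance, and the sketch ignores the imperfect frequency scaling discussed earlier in the thread.

```python
def per_clock_deficit(score_a: float, clock_a_ghz: float,
                      score_b: float, clock_b_ghz: float) -> float:
    """How far chip A trails chip B per clock (positive means A is behind)."""
    per_clock_a = score_a / clock_a_ghz
    per_clock_b = score_b / clock_b_ghz
    return 1.0 - per_clock_a / per_clock_b


# Placeholder scores at an equal clock: 94 vs. 100 is a 6% per-clock deficit.
deficit = per_clock_deficit(94.0, 3.4, 100.0, 3.4)
print(f"per-clock deficit: {deficit:.1%}")
```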

As you say, MB BIOS influence on Turbo ratios muddies the waters even further.

But regarding IPC, I personally see Haswell as the point where Intel kind of lost their way in regard to performance per watt and per mm, falling out of the 'sweet spot' in order to push IPC marginally higher, and make the move to 256b datapaths + doubled L1 bandwidth + [arguably] an execution resource overload for the sole purpose of AVX2. Certainly when your workload is able to take full advantage of it, it pays off in spades, but in the real world, which is always a mix of workloads, not so much.

So the idea that Zen, being at a high level a somewhat beefed up Sandy/Ivy Bridge class core, is 'behind the times' is not particularly logical to me. To use a non-partisan analogy, it's like saying Intel's brand new Goldmont core is 13 years behind the times, because it ultimately represents a beefed up Pentium M in performance and high-level uArch. But of course it's not, since it uses many lower level techniques to make it radically better in perf/watt, even ignoring the process tech it's built on.

Let's be honest, do you really think Intel couldn't have released an 8c/16t Ivy-E? Of course they could. Problem is it would still be embarrassing Broadwell-E today.

http://www.anandtech.com/show/10337...6900k-6850k-and-6800k-tested-up-to-10-cores/6

Have a nice big look at Cinebench on the 4960X next to its newer hex-core contenders. I think it paints a really good summary of how AMD have been able to catch up so much.

Arachnotronic

Lifer

- Mar 10, 2006

- 11,715

- 2,012

- 126

Oh my. If you will be nabbing the AMD proccy, would you fancy doing a wee draw performance test with me? The ideal game to test with is Fallout 4, due to the absurdly long shadow distances, as well as the game having that ENB mod that will show us the number of draw calls being made.

You will have to tell me what equipment to buy to test power, but yes, I'd be happy to do that (unless it's like prohibitively expensive to do so).

If yer game, I'll fire you the optimal .ini settings (minimum texture/shadow/display resolution, maximum draw distances) once the hardware's ready.

Okay, sure!

Main reason for the test, is to see if AMD has finally fixed their awful draw call performance. Intel had the same fps impact with draw calls, until Core 2 came around, and Intel's CPUs have only been getting better at them.

Sure, happy to help.

superstition

Platinum Member

- Feb 2, 2008

- 2,219

- 221

- 101

...Blameless said:

Stilt's build is just a recent source run through an updated compiler with all optimization flags checked. The reference Windows binaries explicitly do not use newer instruction sets like AVX/FMA...probably to make it easier to bug check, or because whoever they have pushing out the Windows binaries doesn't feel like using an updated compiler. This is why reference Blender is so much faster in Linux; newer compiler/more options, rather than any intrinsic Linux advantage.

Anyway, all the points you've noticed are generally correct. Reference binaries are trash, and if you want to do any serious work with Blender, you are probably compiling your own.

MajinCry

Platinum Member

- Jul 28, 2015

- 2,495

- 572

- 136

You will have to tell me what equipment to buy to test power, but yes, I'd be happy to do that (unless it's like prohibitively expensive to do so).

Okay, sure!

Sure, happy to help.

As long as it has at least four cores, we're good. I'm stuck with a 965 BE, so if you happen to splurge on something with more cores 'n' such, just disable them in the BIOS when we do the test. For clockspeeds, might as well keep everything at 3.4GHz, what with that being the clocks for AMD's reveal.

Arachnotronic

Lifer

- Mar 10, 2006

- 11,715

- 2,012

- 126

As long as it has at least four cores, we're good. I'm stuck with a 965 BE, so if you happen to splurge on something with more cores 'n' such, just disable them in the BIOS when we do the test. For clockspeeds, might as well keep everything at 3.4GHz, what with that being the clocks for AMD's reveal.

You got it. Ryzen is going to be fun to play with

You will have to tell me what equipment to buy to test power, but yes, I'd be happy to do that (unless it's like prohibitively expensive to do so).

http://www.newegg.com/Product/Product.aspx?Item=N82E16882715001

That should be largely enough, hope that it won't send the budget through the roof...

No review about gaming performance of a CPU is valid in my eyes, if the CPU isn't 100% and the GPU lower. That is why many reviews fail, being GPU bottlenecked. If you are testing the CPU you should lower the settings as low as you have to, so as to get the CPU bottlenecking the system.

Aye. It's well known in the online community at least since the Conroe days that those high IQ/high res gaming benchmarks don't mean squat for CPU performance, and thus, nothing can be construed from them. I'm not sure why there's a need to rewrite history now.

Plenty of old benchmark testing was done showing this. i.e.

http://www.guru3d.com/articles_pages/cpu_scaling_in_games_with_dual_amp_quad_core_processors,3.html

http://www.tomshardware.co.uk/amd-crossfire-nvidia-sli-multi-gpu,review-31963-7.html

http://www.wsgf.org/book/export/html/27930

http://www.wsgf.org/book/export/html/28343

Look particularly at the core loads charts, from 1-8 on AMD and Intel processors.

http://gamegpu.com/action-/-fps-/-tps/batman-arkham-knight-test-gpu-2015.html

I still remember this old Starcraft II test showing little core load:

http://www.techspot.com/review/305-starcraft2-performance/page13.html

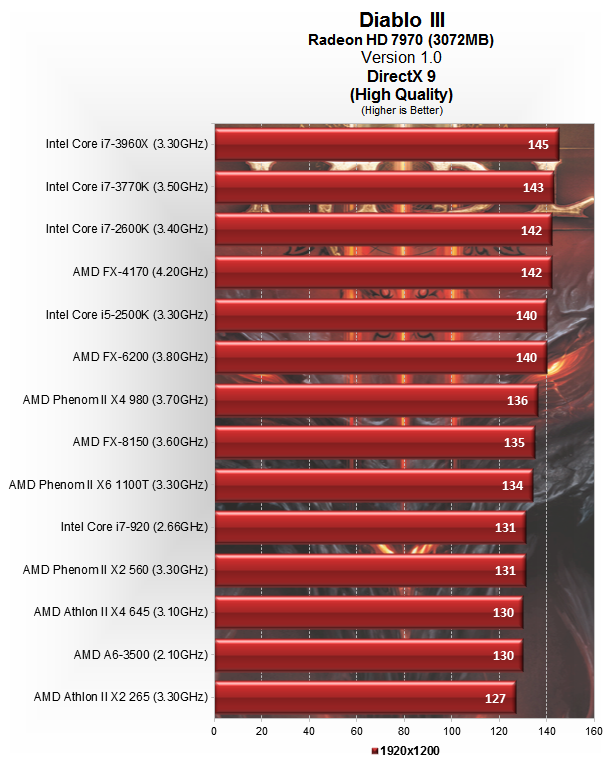

Or their Diablo 3 testing showing BD having +9% gain with +80% clock:

http://www.techspot.com/review/532-diablo-3-performance/page5.html

And then ->

Bottom line: these are GPU benches at such settings... ahh it feels good using a PC after so long!
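The Diablo 3 figure quoted above (+9% gain for +80% clock) can be turned into a quick sanity check for whether a gaming bench says anything about the CPU. This is only a sketch: the function name and the 0.25 threshold are arbitrary assumptions, and weak scaling can also come from an engine cap or a memory bottleneck rather than the GPU.

```python
def looks_gpu_bound(perf_gain: float, clock_gain: float,
                    threshold: float = 0.25) -> bool:
    """True when a CPU clock increase barely moves performance.

    perf_gain and clock_gain are fractions (0.09 means +9%).
    threshold is an arbitrary cutoff chosen for illustration.
    """
    if clock_gain <= 0:
        raise ValueError("clock_gain must be positive")
    return perf_gain / clock_gain < threshold


# Diablo 3 figures from the post: +9% FPS for +80% clock.
print(looks_gpu_bound(perf_gain=0.09, clock_gain=0.80))
```

A bench that fails this check at high settings is worth rerunning at the lowest resolution and detail before drawing any CPU conclusions from it.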

You got it. Ryzen is going to be fun to play with

I'll be testing one very soon... including power. Probably along with a 6900K but I'm waiting to see how AMD price it, so then I can pick its equally priced competitor.

Bulldozer was a major regression, a disaster. Pre-release it didn't excite me one bit and I could see it as unimpressive so I didn't even bother testing it officially. Deneb was the last I was slightly looking forward to from AMD, after their 65nm woes. But even back at the Phenom launch, the QX9650/QX9770 were more than +50% in a lot of benches even though that was a good µarch, let down by the poor process.

So getting any closer to the Intel Core i7 68x0/69x0K today would really be an advancement for AMD, and much better than trying to maintain distance parity, which is what they have been doing since 2006.

superstition

Platinum Member

- Feb 2, 2008

- 2,219

- 221

- 101

Bulldozer was a major regression, a disaster.

So many aren't concerned by all the performance being left on the table with the stock Blender build due to a simple compiler difference. That's something that can be easily changed but it seems most don't think it's worth doing or caring about.

By contrast, an architecture that can hold up against the same generation Intel architecture (Sandy) in gaming when the code leverages its strengths is a "major regression, a disaster".

superstition

Platinum Member

- Feb 2, 2008

- 2,219

- 221

- 101

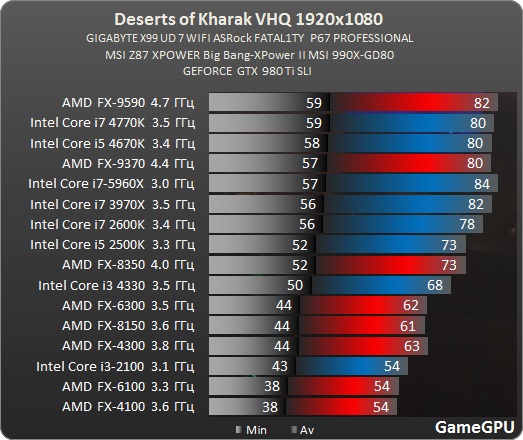

And you pull out the one bench in a hundred that shows that pattern... to what end? Proving what?

My argument was clearly stated. Deserts of Kharak is not the only gaming result that supports it. It's just the most obvious.

Furthermore, it's interesting to see the complaint about a single benchmark given the Blender Ryzen demo.

It would be quite misleading to blame Bulldozer's poor showing on 'lazy developers' whereas competitors are doing pretty fine with the same. The competitor having +90% of the market doesn't help this line of thinking.

Zen will also look far better in the same software where Bulldozer looked a right mess, which would mean there were obvious performance bottlenecks in the previous uarchs, rather than just lazy code optimizations sandbagging AMD CPUs.

Being a corporate nut though, that doesn't mean I rule out specific optimizations for one uarch/brand occurring, nor the fact that more optimization could be done for any particular uarch. That's just the way the real world works in EVERY bloody sphere.

Many a white sheets have I seen, few whiter than tar black.

Sent from HTC 10

(Opinions are own)

superstition

Platinum Member

- Feb 2, 2008

- 2,219

- 221

- 101

It would be quite misleading to blame Bulldozer's poor showing on 'lazy developers' whereas competitors are doing pretty fine with the same.

My post didn't blame the developers of Deserts of Kharak, did it? Nor did it blame the other developers that have shown that the statement I made is true, and a rebuttal to the hyperbole.

cytg111

Lifer

- Mar 17, 2008

- 26,835

- 16,106

- 136

My argument was clearly stated. Deserts of Kharak is not the only gaming result that supports it. It's just the most obvious.

Furthermore, it's interesting to see the complaint about a single benchmark given the Blender Ryzen demo.

No it wasn't, when I replied your post only had the graph, you edited it afterwards, check the timestamps. I am going to take this as an accident and not an attempt to manipulate events on your part.

I am not going to drag out the billions of benches that show Bulldozer++ being trounced in gaming benchmarks. If you believe otherwise, so be it; if you assume a role to push this as a truth, it's not my job to stop you, I don't work here.

superstition

Platinum Member

- Feb 2, 2008

- 2,219

- 221

- 101

I am not going to drag out the billions of benches that show Bulldozer++ being trounced in gaming benchmarks

Even if you were to, it wouldn't rebut my statement unless it can be proved that the games that show that my statement seems true were designed in a manner that sabotages performance on Intel. And, even if that were the case it would also have to be proven that your citations aren't examples where performance was sabotaged on AMD.

The bottom line here appears to be that, in contrast with the hyperbole, Piledriver is able to keep up with Sandy in games when they are developed in a manner that utilizes its strengths. That is not a "major disaster" in terms of the architectural design. It just means Piledriver hasn't improved since 2012 which isn't surprising since it's a 2012 architecture. Yes, Sandy is more efficient overall but that doesn't make Piledriver a "major disaster" considering that AMD hardly had the development resources Intel enjoyed.

cytg111

Lifer

- Mar 17, 2008

- 26,835

- 16,106

- 136

My post didn't blame the developers of Deserts of Kharak, did it? Nor did it blame the other developers that have shown that the statement I made is true, and a rebuttal to the hyperbole.

Even if you were to, it wouldn't rebut my statement unless it can be proved that the games that show that my statement seems true were designed in a manner that sabotages performance on Intel. And, even if that were the case it would also have to be proven that your citations aren't examples where performance was sabotaged on AMD.

The bottom line here appears to be that, in contrast with the hyperbole, Piledriver is able to keep up with Sandy in games when they are developed in a manner that utilizes its strengths. That is not a "major disaster" in terms of the architectural design. It just means Piledriver hasn't improved since 2012 which isn't surprising since it's a 2012 architecture. Yes, Sandy is more efficient overall but that doesn't make Piledriver a "major disaster" considering that AMD hardly had the development resources Intel enjoyed.

x86 binaries have a long history, one defined by Intel architectures; that means decades-old code, even in the operating systems, compilers and future executables.

So when you are an underdog with no say in the industry as to how an x86 executable flows then you *are* a massive twit if you put out a chip that performs horribly on all past and current binaries but hey, just going forward write according to this spec and it will rock almost the same performance as Intel (oh and I have 2% market share but imma gonna dominate soon mkaybye?)

So Bulldozer was a disaster, yes.

cytg111

Lifer

- Mar 17, 2008

- 26,835

- 16,106

- 136

Even if you were to, it wouldn't rebut my statement unless it can be proved that the games that show that my statement seems true were designed in a manner that sabotages performance on Intel. And, even if that were the case it would also have to be proven that your citations aren't examples where performance was sabotaged on AMD.

Applying boolean logic and deductive reasoning, exactly the same can be said for your argument negated. I guess you can take it up with yourself then, let me know how it turns out

superstition

Platinum Member

- Feb 2, 2008

- 2,219

- 221

- 101

x86 binaries have a long history, one defined by Intel architectures; that means decades-old code, even in the operating systems, compilers and future executables.

So when you are an underdog with no say in the industry as to how an x86 executable flows then you *are* a massive twit if you put out a chip that performs horribly on all past and current binaries but hey, just going forward write according to this spec and it will rock almost the same performance as Intel (oh and I have 2% market share but imma gonna dominate soon mkaybye?)

So Bulldozer was a disaster, yes.

By that reasoning, Intel should have never added AVX. Or, AMD would be forced into making knock-offs of Intel's CPUs.

Actually, both.

Applying boolean logic and deductive reasoning, exactly the same can be said for your argument negated. I guess you can take it up with yourself then, let me know how it turns out.

I don't think this conversation is going anywhere so I'm out.

cytg111

Lifer

- Mar 17, 2008

- 26,835

- 16,106

- 136

By that reasoning, Intel should have never added AVX. Or, AMD would be forced into making knock-offs of Intel's CPUs.

1. You made a strawman with Intel here. Was that another accident? Let's say it was.

2. AMD *is* the knockoff, always has been. At some point in time the knockoff became better than the original, back and forth.

How much CPU history do you know?

http://www.tomshardware.com/news/amd-intel-x86-cpu,7285.html

http://www.reuters.com/article/amd-intel-idUSN1642295820090316

I don't think this conversation is going anywhere so I'm out.

That is what happens when you lose track of the stack, recursion is not for everyone. Later
